In a recent project, I had to compare several sets of externally stored data. Having used Python for over five years, I instinctively turned to Pandas for my data wrangling needs.

Pandas

Pandas is a Python library used for data analysis and manipulation on labeled datasets. The core mission of the Pandas development team is to “… be the fundamental high-level building block for doing practical, real-world data analysis in Python. Additionally, it has the broader goal of becoming the most powerful and flexible open source data analysis/manipulation tool available in any language.” It provides tools and methods for aligning, merging, and transforming data, as well as for reading from and writing to various persistent stores, such as Excel, CSV, and SQL databases. It has been around since 2008 and is now widely regarded as the standard tool for data analysis in Python, used in fields ranging from sales to bioinformatics.

As the datasets grew larger, with some tables exceeding 5 million rows, I noticed longer wait times for certain operations. That’s when I started exploring ways to optimize my code and discovered Polars.

Polars

Polars is relatively new; it originated in 2020. It works similarly to Pandas and offers tools and methods for aligning, merging, and transforming data, as well as for reading and writing various formats, including Excel, CSV, SQL, and Apache Arrow’s IPC format.

According to the Polars development team, Polars aims to be a DataFrame library that:

- Utilizes all available cores on your machine.

- Optimizes queries to reduce unneeded work/memory allocations.

- Handles datasets much larger than your available RAM.

- Has a consistent and predictable API.

- Adheres to a strict schema (data-types should be known before running the query).

At first glance, nothing stands out. Both libraries handle tabular data and support lookups, aggregations, and other calculations on datasets. Both are built around Series (one-dimensional, array-like columns) and DataFrames (collections of Series), and both let you apply custom Python functions to derive new data from existing data. However, after porting some basic tasks from Pandas to Polars and timing them with the IPython %%timeit magic command, I measured significant performance improvements, in line with Polars’ core goals.

Testing methodology

My testing involved generating a random six-million-row dataset to test four basic operations:

- Filtering

- Arithmetic operations (e.g., adding columns together)

- String manipulation

- Transformation using a custom Python function

The test dataset consists of the following fields:

- uuid: string

- ip_address: string

- date: string

- country: string

- 200: integer

- 400: integer

- 500: integer

It was generated using the following code:

```python
import random
import uuid

from faker import Faker

N_ROWS = 6_000_000
fake = Faker()  # one Faker instance, reused for every row

large_data_set = {
    'uuid': [str(uuid.uuid4()) for _ in range(N_ROWS)],
    'ip_address': [f"{random.randint(1, 254)}.{random.randint(1, 254)}.{random.randint(1, 254)}.{random.randint(1, 254)}" for _ in range(N_ROWS)],
    # randint() includes both endpoints, so months must stop at 12
    'date': [f"{random.randint(1990, 2026)}-{random.randint(1, 12):02}-{random.randint(1, 29):02}" for _ in range(N_ROWS)],
    'country': [fake.country() for _ in range(N_ROWS)],
    '200': [random.randint(1, 300) for _ in range(N_ROWS)],
    '400': [random.randint(1, 300) for _ in range(N_ROWS)],
    '500': [random.randint(1, 300) for _ in range(N_ROWS)],
}
```

Basic Usage

Installation

Both can be installed with pip:

Pandas:

```shell
pip install pandas
```

Polars:

```shell
pip install polars
```

Loading a dataset

Both Pandas and Polars support reading CSVs, and the calls are nearly identical.

Pandas:

```python
import pandas as pd

large_df = pd.read_csv('large_dataset_20250728.csv')
```

Polars:

```python
import polars as pl

large_df = pl.read_csv('large_dataset_20250728.csv')
```

Both display their DataFrames in a tabular format; however, a Pandas DataFrame also carries an index (shown as the leftmost column), which Polars DataFrames do not have.


Filtering

The filtering syntax differs slightly: Pandas uses a more compact boolean-mask syntax, whereas Polars provides an explicit “filter” method. In this example, I am filtering on country (“Guam”) and date (“2023-07-11”). As the results show, Polars is blazingly fast!


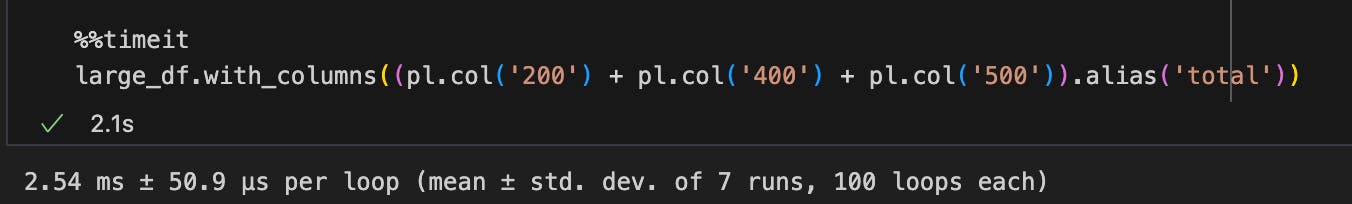

Addition

In addition to filtering, I occasionally need to perform some light arithmetic across columns. In this example, I’m summing three columns, “200”, “400”, and “500”, then storing the result in a new “total” column. With Polars, this is significantly faster.


String operations

Both Pandas and Polars offer methods for performing various operations on string data types. With Pandas, the syntax is straightforward and more Pythonic. The equivalent Polars syntax is more verbose, but it provides a significant performance boost. In this example, I’m splitting the “date” column, which contains strings that look like “yyyy-mm-dd”, into separate “year”, “month”, and “day” columns.


Operations on elements

This is where things start to get a little more interesting! While the feature set in Pandas and Polars is quite powerful, there are times when you might need to write a custom function to iterate over all the elements in a Series to generate new data for later use. In this example, I’m using Python’s ipaddress library to determine the IP version of each value in the ip_address column, returning it as an integer and storing the result in a new column.

Again, the Pandas syntax appears to be much simpler than the Polars syntax. However, there is no significant performance gain from using Polars for a per-element transformation. This is because ip_version is an ordinary Python function rather than a native Polars expression: Polars has to call it from Python once per element, so the work runs one element at a time under the Global Interpreter Lock (GIL), and Polars’ engine can neither parallelize nor optimize it. As a result, Polars offers little advantage for operations like this.


Conclusion

Summary of results

| Operation | Pandas (s) | Polars (s) | Difference (s) | % Improvement (time saved) |
|---|---|---|---|---|
| Query | 0.43 | 0.00249 | 0.42751 | 99.42 |
| Addition | 0.0157 | 0.00254 | 0.01316 | 83.82 |
| String Operations | 3.21 | 0.0324 | 3.1776 | 98.99 |
| Custom functions on elements | 10.5 | 9.9 | 0.6 | 5.71 |
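The Difference and % Improvement columns follow directly from the two timings: difference = Pandas − Polars, and improvement = difference ÷ Pandas × 100, i.e., the share of the Pandas run time saved. A short sketch that recomputes them from the table’s timings and, as an extra view not in the table, the speed-up factor Pandas ÷ Polars:

```python
# Timings (seconds) copied from the summary table: (Pandas, Polars).
timings = {
    "Query": (0.43, 0.00249),
    "Addition": (0.0157, 0.00254),
    "String Operations": (3.21, 0.0324),
    "Custom functions on elements": (10.5, 9.9),
}


def compare(pandas_s: float, polars_s: float) -> tuple[float, float, float]:
    """Return (difference in s, % of Pandas time saved, speed-up factor)."""
    difference = pandas_s - polars_s
    improvement = difference / pandas_s * 100
    speedup = pandas_s / polars_s
    return difference, improvement, speedup


results = {name: compare(*times) for name, times in timings.items()}
for name, (diff, pct, speedup) in results.items():
    print(f"{name}: {diff:.5f} s saved, {pct:.2f}% less time, {speedup:.1f}x speed-up")
```

The speed-up factor makes the gap easier to read at the extremes: a 99.42% reduction corresponds to Polars running roughly 170 times faster, while 5.71% is only about 1.06 times faster.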

Some key points

- Polars offers significant performance gains for native Series and DataFrame operations.

- Custom Python functions applied element by element, rather than native Polars methods, gain little or no speed.

- Polars syntax is slightly more complex than Pandas syntax.

- Pandas is a more mature library that integrates well with other data analysis tools.

Based on my experience with both tools, I would use the following guidelines:

Use Polars when:

- DataFrame calculations and operations can be performed using the native Polars tooling.

- The speed of operations is essential, and the dataset can fit in memory.

- Any libraries or modules that will take the DataFrame as input explicitly support Polars DataFrames.

Use Pandas when:

- The speed of calculations and operations is not critical, or current performance is already acceptable.

- You want to use a Python data analytics library that currently has the broadest reach across teams or organizations.

- The DataFrame will be passed to, or used alongside, other libraries, or you want access to the broadest ecosystem of compatible libraries and modules.

To offset this limitation, Polars provides a to_pandas() method that converts a Polars DataFrame into a Pandas DataFrame (it requires the additional pyarrow library). This lets you do the heavy lifting in Polars, where it is faster, and then hand a Pandas DataFrame to any utilities or libraries in the project that expect one.

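```python
import polars as pl
import pandas as pd  # pandas and pyarrow must both be installed for to_pandas()

large_polars_df = pl.read_csv('large_dataset_20250728.csv')

# Convert to a Pandas DataFrame for libraries that expect one.
large_pandas_df = large_polars_df.to_pandas()
```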

Ultimately, whether it’s worthwhile to refactor existing projects to use Polars, or to adopt it in future projects, depends on your specific use case.