Table of Links

Abstract and 1 Introduction

2 Technical Specifications

3 Academic benchmarks

4 Safety

5 Weakness

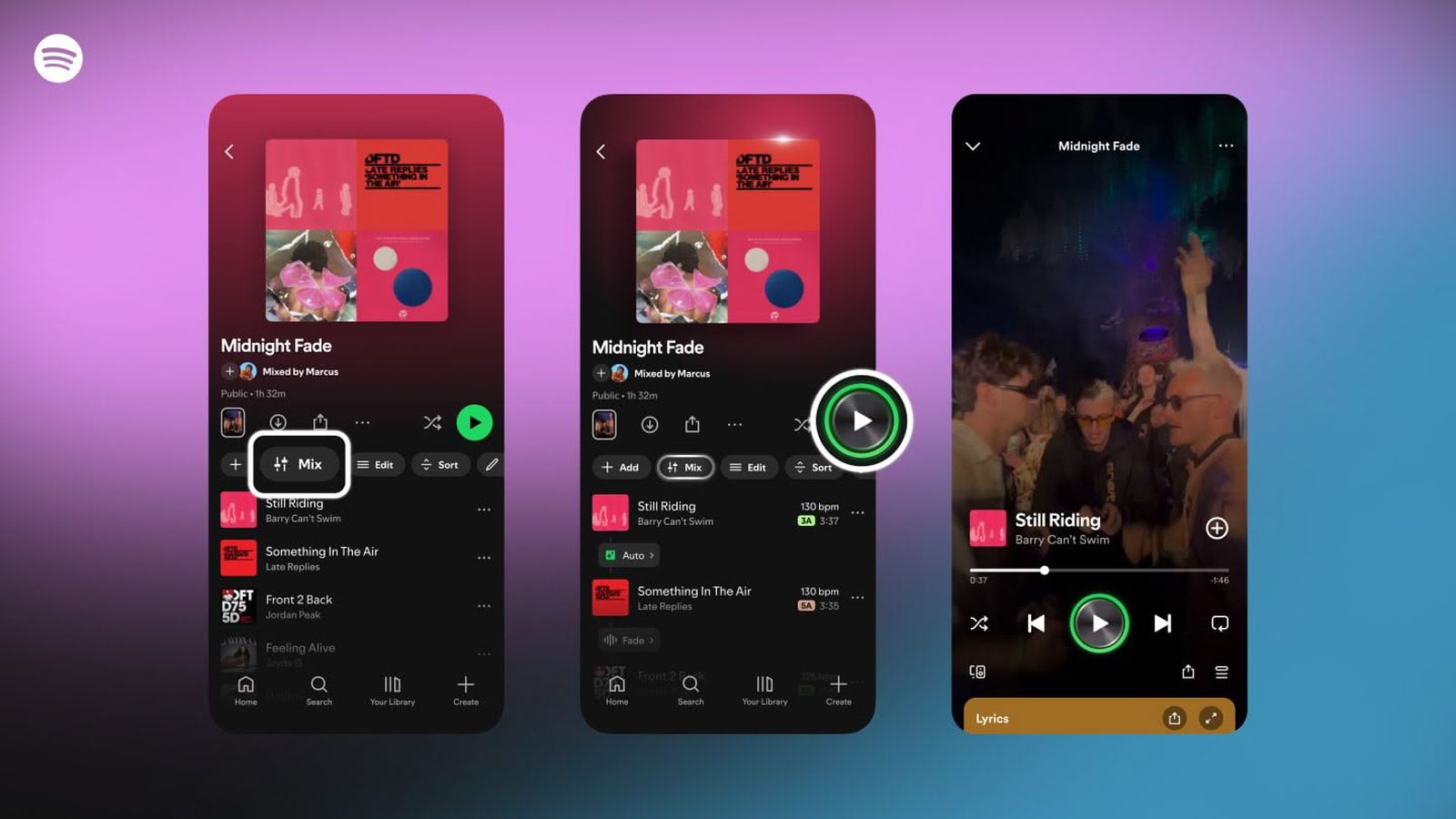

6 Phi-3-Vision

6.1 Technical Specifications

6.2 Academic benchmarks

6.3 Safety

6.4 Weakness

References

A Example prompt for benchmarks

B Authors (alphabetical)

C Acknowledgements

Abstract

We introduce phi-3-mini, a 3.8 billion parameter language model trained on 3.3 trillion tokens, whose overall performance, as measured by both academic benchmarks and internal testing, rivals that of models such as Mixtral 8x7B and GPT-3.5 (e.g., phi-3-mini achieves 69% on MMLU and 8.38 on MT-bench), despite being small enough to be deployed on a phone. The innovation lies entirely in our dataset for training, a scaled-up version of the one used for phi-2, composed of heavily filtered publicly available web data and synthetic data. The model is also further aligned for robustness, safety, and chat format. We also provide some initial parameter-scaling results with a 7B and 14B models trained for 4.8T tokens, called phi-3-small and phi-3-medium, both significantly more capable than phi-3-mini (e.g., respectively 75% and 78% on MMLU, and 8.7 and 8.9 on MT-bench). Moreover, we also introduce phi-3-vision, a 4.2 billion parameter model based on phi-3-mini with strong reasoning capabilities for image and text prompts.

1 Introduction

The striking progress of AI in the last few years can be largely attributed to major efforts throughout the world towards scaling-up to ever-larger models and datasets. Large Language Models (LLMs) have steadily increased in size from a mere billion parameters just five years ago (GPT-2 had 1.5 billion parameters [RWC+ 19]) to trillion parameters today. The impetus for this effort originates in the seemingly predictable improvement one obtains by training large models, the so-called scaling laws [KMH+ 20, HBM+ 22, MRB+ 23]. However these laws assume a “fixed” data source. This assumption is now significantly disrupted by the existence of frontier LLMs themselves, which allow us to interact with data in novel ways. In our previous works on the phi models [GZA+ 23, LBE+ 23, JBA+ 23] it was shown that a combination of LLM-based filtering of publicly available web data, and LLM-created synthetic data, enable performance in smaller language models that were typically seen only in much larger models. For example our previous model trained on this data recipe, phi-2 (2.7B parameters), matched the performance of models 25 times larger trained on regular data. In this report we present a new model, phi-3-mini (3.8B parameters), trained for 3.3T tokens on larger and more advanced versions of the datasets used in phi-2. With its small size, phi-3-mini can easily be inferenced locally on a modern phone (see Figure 2), yet it achieves a quality that seems on-par with models such as Mixtral 8x7B [JSR+ 24] and GPT-3.5.

Authors:

(1) Marah Abdin;

(2) Sam Ade Jacobs;

(3) Ammar Ahmad Awan;

(4) Jyoti Aneja;

(5) Ahmed Awadallah;

(6) Hany Awadalla;

(7) Nguyen Bach;

(8) Amit Bahree;

(9) Arash Bakhtiari;

(10) Jianmin Bao;

(11) Harkirat Behl;

(12) Alon Benhaim;

(13) Misha Bilenko;

(14) Johan Bjorck;

(15) Sébastien Bubeck;

(16) Qin Cai;

(17) Martin Cai;

(18) Caio César Teodoro Mendes;

(19) Weizhu Chen;

(20) Vishrav Chaudhary;

(21) Dong Chen;

(22) Dongdong Chen;

(23) Yen-Chun Chen;

(24) Yi-Ling Chen;

(25) Parul Chopra;

(26) Xiyang Dai;

(27) Allie Del Giorno;

(28) Gustavo de Rosa;

(29) Matthew Dixon;

(30) Ronen Eldan;

(31) Victor Fragoso;

(32) Dan Iter;

(33) Mei Gao;

(34) Min Gao;

(35) Jianfeng Gao;

(36) Amit Garg;

(37) Abhishek Goswami;

(38) Suriya Gunasekar;

(39) Emman Haider;

(40) Junheng Hao;

(41) Russell J. Hewett;

(42) Jamie Huynh;

(43) Mojan Javaheripi;

(44) Xin Jin;

(45) Piero Kauffmann;

(46) Nikos Karampatziakis;

(47) Dongwoo Kim;

(48) Mahoud Khademi;

(49) Lev Kurilenko;

(50) James R. Lee;

(51) Yin Tat Lee;

(52) Yuanzhi Li;

(53) Yunsheng Li;

(54) Chen Liang;

(55) Lars Liden;

(56) Ce Liu;

(57) Mengchen Liu;

(58) Weishung Liu;

(59) Eric Lin;

(60) Zeqi Lin;

(61) Chong Luo;

(62) Piyush Madan;

(63) Matt Mazzola;

(64) Arindam Mitra;

(65) Hardik Modi;

(66) Anh Nguyen;

(67) Brandon Norick;

(68) Barun Patra;

(69) Daniel Perez-Becker;

(70) Thomas Portet;

(71) Reid Pryzant;

(72) Heyang Qin;

(73) Marko Radmilac;

(74) Corby Rosset;

(75) Sambudha Roy;

(76) Olatunji Ruwase;

(77) Olli Saarikivi;

(78) Amin Saied;

(79) Adil Salim;

(80) Michael Santacroce;

(81) Shital Shah;

(82) Ning Shang;

(83) Hiteshi Sharma;

(84) Swadheen Shukla;

(85) Xia Song;

(86) Masahiro Tanaka;

(87) Andrea Tupini;

(88) Xin Wang;

(89) Lijuan Wang;

(90) Chunyu Wang;

(91) Yu Wang;

(92) Rachel Ward;

(93) Guanhua Wang;

(94) Philipp Witte;

(95) Haiping Wu;

(96) Michael Wyatt;

(97) Bin Xiao;

(98) Can Xu;

(99) Jiahang Xu;

(100) Weijian Xu;

(101) Sonali Yadav;

(102) Fan Yang;

(103) Jianwei Yang;

(104) Ziyi Yang;

(105) Yifan Yang;

(106) Donghan Yu;

(107) Lu Yuan;

(108) Chengruidong Zhang;

(109) Cyril Zhang;

(110) Jianwen Zhang;

(111) Li Lyna Zhang;

(112) Yi Zhang;

(113) Yue Zhang;

(114) Yunan Zhang;

(115) Xiren Zhou.