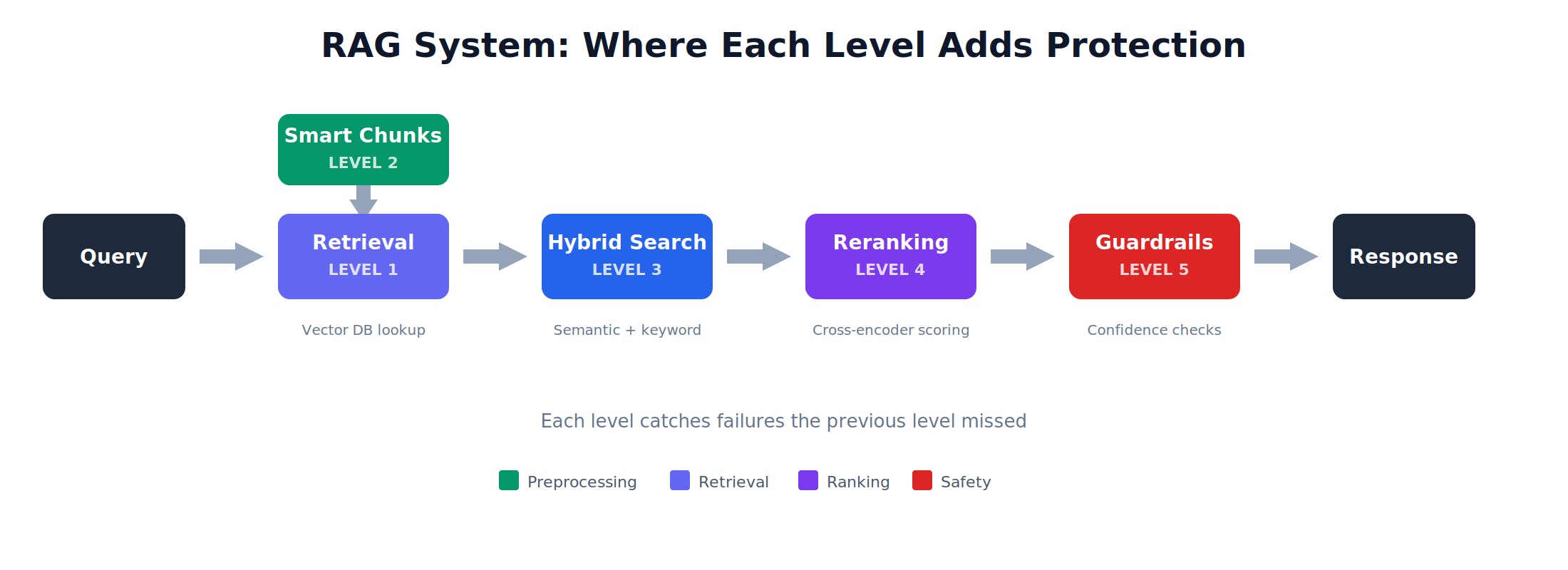

Most RAG systems fail in production because semantic similarity is not the same as relevance, and retrieval breaks down under real user queries. This article walks through five escalating levels: naive RAG; smarter chunking with overlap and metadata; hybrid retrieval that combines semantic search with BM25; cross-encoder reranking; and production guardrails that refuse or ask for clarification when confidence is low. It also covers a testing approach for measuring retrieval precision and answer accuracy. The core lesson: build, break, diagnose the failure mode, and level up until the system reliably grounds its answers and knows when not to answer.
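To make two of these levels concrete, here is a minimal sketch of overlapping chunks that carry metadata (level two) and a guardrail that refuses when retrieval confidence is low (level five). It is an illustration under stated assumptions, not the article's implementation: the names `Chunk`, `chunk_with_overlap`, and `answer_or_refuse`, the word-based windowing, and the default `size`, `overlap`, and `min_score` values are all hypothetical. The scores are assumed to come from whatever retriever or reranker you use, normalized so that higher means more relevant.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    source: str  # metadata: which document the chunk came from
    start: int   # metadata: word offset of the chunk within that document


def chunk_with_overlap(words, source, size=200, overlap=50):
    """Split a list of words into windows of `size` words sharing `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        window = words[start:start + size]
        chunks.append(Chunk(" ".join(window), source, start))
        if start + size >= len(words):
            break  # this window already reaches the end of the document
    return chunks


def answer_or_refuse(scored_chunks, min_score=0.5):
    """Return chunks to ground an answer on, best first, or None to signal a refusal.

    `scored_chunks` is a list of (Chunk, score) pairs; `min_score` is an
    assumed threshold that would need tuning against a labeled test set.
    """
    confident = [(c, s) for c, s in scored_chunks if s >= min_score]
    if not confident:
        return None  # caller should refuse or ask a clarifying question
    ranked = sorted(confident, key=lambda pair: pair[1], reverse=True)
    return [c for c, _ in ranked]
```

A caller would treat a `None` result as the cue to say "I don't know" or ask the user to clarify, rather than passing weak context to the generator.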