In addition to rolling out Red Hat Enterprise Linux 10, Red Hat used its annual developer summit today to introduce llm-d, its newest open-source project.

The llm-d project aims to enable distributed generative AI inference at scale. Alongside Red Hat, it is supported by NVIDIA, AMD, Intel, IBM Research, Google Cloud, CoreWeave, Hugging Face, and other vendors and AI organizations.

The llm-d software is built atop Kubernetes and relies on vLLM for distributed inference. It also employs LMCache for key-value (KV) cache offloading, along with AI-aware network routing, high-performance communication APIs, and other features intended to make it a compelling solution for distributed generative AI inference at scale.

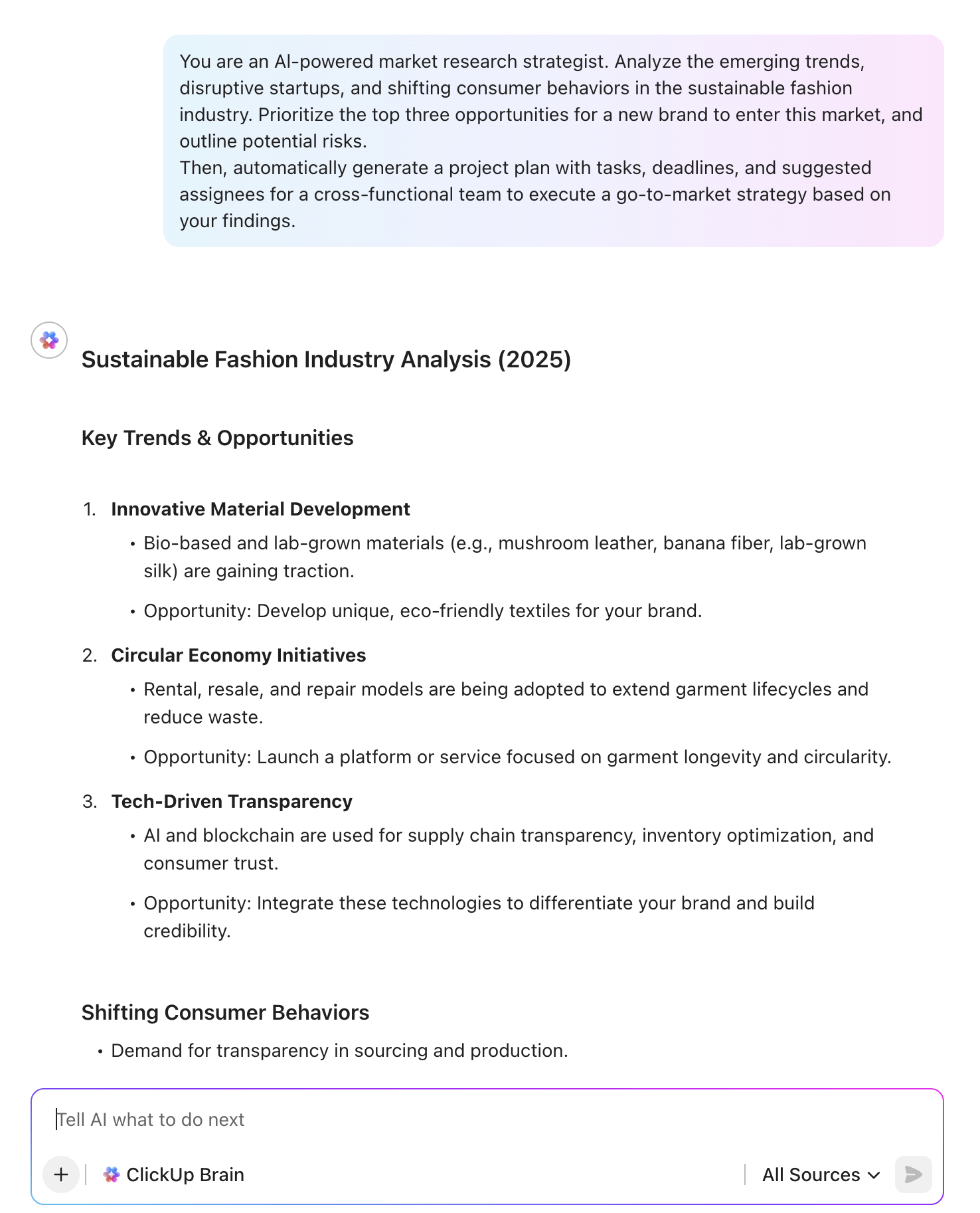

The new llm-d.ai project site describes llm-d as:

“llm-d is a Kubernetes-native high-performance distributed LLM inference framework – a well-lit path for anyone to serve at scale, with the fastest time-to-value and competitive performance per dollar for most models across most hardware accelerators. With llm-d, users can operationalize gen AI deployments with a modular, high-performance, end-to-end serving solution that leverages the latest distributed inference optimizations like KV-cache aware routing and disaggregated serving, co-designed and integrated with the Kubernetes operational tooling in Inference Gateway (IGW).”
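For readers unfamiliar with the "KV-cache aware routing" mentioned in that description, the general idea can be sketched in a few lines of Python. This is a toy illustration only and not llm-d's actual implementation or API: the `Replica` class, the `pick_replica` function, the block size, and the tie-breaking rule are all hypothetical names and choices made for this example. The sketch assumes each serving replica remembers which prompt-prefix blocks it has already processed, and that a router prefers the replica able to reuse the longest cached prefix.

```python
from dataclasses import dataclass, field


def block_hashes(tokens, block_size=4):
    """Hash each full block of tokens, chaining in the previous block's hash
    so that a block hash identifies the entire prefix up to that point."""
    hashes = []
    prev = 0
    full_len = len(tokens) - len(tokens) % block_size
    for i in range(0, full_len, block_size):
        prev = hash((prev, tuple(tokens[i:i + block_size])))
        hashes.append(prev)
    return hashes


@dataclass
class Replica:
    """Hypothetical stand-in for one model server and its KV-cache state."""
    name: str
    cached_blocks: set = field(default_factory=set)
    active_requests: int = 0


def cached_prefix_len(replica, hashes):
    """Count how many leading blocks of the prompt this replica has cached."""
    n = 0
    for h in hashes:
        if h not in replica.cached_blocks:
            break
        n += 1
    return n


def pick_replica(replicas, tokens, block_size=4):
    """Route to the replica with the longest cached prefix,
    breaking ties in favor of the least-loaded replica."""
    hashes = block_hashes(tokens, block_size)
    best = max(
        replicas,
        key=lambda r: (cached_prefix_len(r, hashes), -r.active_requests),
    )
    # Assume the chosen replica now holds this prompt's blocks in its cache.
    best.cached_blocks.update(hashes)
    best.active_requests += 1
    return best
```

In this sketch, two requests that share a long system prompt tend to land on the same replica, so the shared prefix does not have to be recomputed. A production router would also need to account for cache eviction, request completion, and real load metrics, none of which are modeled here.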

Those wishing to learn more about the llm-d project can do so via the Red Hat press release.