Red Hat has announced the launch of the llm-d community, an open source project that addresses one of the most important needs of generative AI's future: inference at scale. Built for large-scale generative AI inference, llm-d combines a Kubernetes-native architecture, vLLM-based distributed inference and AI-aware intelligent network routing. This allows large language model (LLM) inference clouds to meet demanding production service-level objectives.

The llm-d community allows Red Hat and its partners to extend the power of vLLM beyond the limits of a single server and to unlock AI inference in production at scale. Using Kubernetes orchestration, llm-d integrates advanced inference capabilities into existing enterprise IT infrastructure. This unified platform allows IT teams to meet the diverse service demands of business-critical workloads.

vLLM, which has become the de facto standard open source inference server, offers day-0 support for emerging frontier models, as well as support for a wide range of accelerators, including Google Cloud Tensor Processing Units (TPUs).

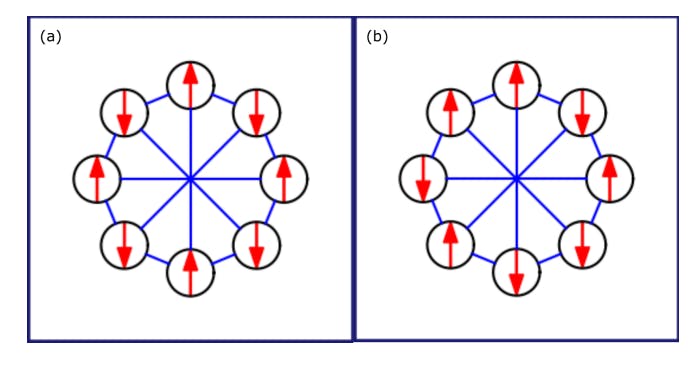

llm-d also offers prefill and decode disaggregation, which separates the input-context (prefill) phase and the token-generation (decode) phase of AI inference into discrete operations that can then be distributed across multiple servers. In addition, it provides KV (key-value) cache offloading, based on LMCache, which shifts the memory burden of the KV cache from GPU memory to standard storage, such as CPU memory or network storage.
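To make the offloading idea concrete, here is a minimal Python sketch, assuming a simple two-tier cache keyed by prompt prefix. The class `TieredKVCache` and its parameters are hypothetical and are not the LMCache or llm-d API: least recently used blocks spill from a small "GPU" tier into a larger "CPU" tier instead of being discarded, and a later hit in the CPU tier is promoted back, sparing a full prefill recomputation.

```python
from collections import OrderedDict
from typing import Optional


class TieredKVCache:
    """Toy two-tier KV cache: a small 'GPU' tier backed by a larger 'CPU' tier.

    Hypothetical illustration only; not the LMCache or llm-d API.
    """

    def __init__(self, gpu_capacity: int, cpu_capacity: int) -> None:
        self.gpu_capacity = gpu_capacity
        self.cpu_capacity = cpu_capacity
        # Both tiers keep LRU order: oldest entry first, newest last.
        self.gpu: OrderedDict = OrderedDict()
        self.cpu: OrderedDict = OrderedDict()

    def put(self, key: str, kv_block: bytes) -> None:
        # New or promoted blocks land in the GPU tier as most recently used.
        self.cpu.pop(key, None)
        self.gpu[key] = kv_block
        self.gpu.move_to_end(key)
        self._evict()

    def get(self, key: str) -> Optional[bytes]:
        if key in self.gpu:
            self.gpu.move_to_end(key)  # refresh recency
            return self.gpu[key]
        if key in self.cpu:
            # Hit in the offload tier: promote the block back to the GPU tier.
            block = self.cpu.pop(key)
            self.put(key, block)
            return block
        return None  # miss: the prefill for this prefix must be recomputed

    def _evict(self) -> None:
        # Offload least recently used GPU blocks instead of discarding them.
        while len(self.gpu) > self.gpu_capacity:
            old_key, old_block = self.gpu.popitem(last=False)
            self.cpu[old_key] = old_block
        # Only the CPU tier actually drops data when it overflows.
        while len(self.cpu) > self.cpu_capacity:
            self.cpu.popitem(last=False)
```

With `gpu_capacity=2`, a third `put` moves the oldest block to the CPU tier, and a later `get` for that key promotes it back to the GPU tier. A real system moves tensors between device and host memory, usually asynchronously; this sketch only models the bookkeeping.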

It uses Kubernetes-powered clusters and controllers to schedule compute and storage resources more efficiently as workload demands fluctuate, maintaining performance while lowering latency. It also features AI-aware network routing, which schedules incoming requests to the servers and accelerators most likely to hold "hot" caches of past inference calculations.
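The routing idea can be illustrated with a minimal Python sketch, assuming prompts are split into fixed-size token blocks whose chained hashes identify cached prefixes. `BLOCK_SIZE`, `Server`, `block_hashes`, and `pick_server` are hypothetical names, not llm-d's scheduler API: each request goes to the server holding the longest matching cached prefix, with ties broken by the lower number of active requests.

```python
import hashlib
from dataclasses import dataclass, field
from typing import List, Set

BLOCK_SIZE = 4  # hypothetical: tokens per cached KV block


def block_hashes(tokens: List[int], block_size: int = BLOCK_SIZE) -> List[str]:
    """Chained hashes of full token blocks, so each hash identifies a whole prefix."""
    hashes: List[str] = []
    prev = ""
    for start in range(0, len(tokens) - block_size + 1, block_size):
        block = tokens[start:start + block_size]
        payload = prev + "|" + ",".join(map(str, block))
        prev = hashlib.sha256(payload.encode()).hexdigest()
        hashes.append(prev)
    return hashes


@dataclass
class Server:
    name: str
    active_requests: int = 0
    cached_blocks: Set[str] = field(default_factory=set)


def cached_prefix_len(server: Server, hashes: List[str]) -> int:
    """Number of leading prompt blocks already present in this server's KV cache."""
    n = 0
    for h in hashes:
        if h not in server.cached_blocks:
            break
        n += 1
    return n


def pick_server(servers: List[Server], tokens: List[int]) -> Server:
    hashes = block_hashes(tokens)
    # Prefer the longest cached prefix (a "hot" cache); break ties by lower load.
    best = max(servers, key=lambda s: (cached_prefix_len(s, hashes), -s.active_requests))
    best.active_requests += 1
    best.cached_blocks.update(hashes)  # after prefill, the server holds these blocks
    return best
```

For example, with two idle servers `gpu-0` and `gpu-1`, two requests that share the same 8-token system prompt both land on `gpu-0`, because the second one finds its first two blocks already cached there, while an unrelated prompt then goes to the less loaded `gpu-1`. A production scheduler would also weigh queue depth, memory pressure, and cache eviction, which this sketch ignores.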

In addition, the project includes high-performance communication APIs for faster and more efficient data transfer, with support for the NVIDIA Inference Xfer Library (NIXL).

The project already has the support of generative AI model providers, AI accelerator makers and AI cloud platforms. Its founding contributors are CoreWeave, Google Cloud, IBM Research and NVIDIA, and its partners include AMD, Cisco, Hugging Face, Intel, Lambda and Mistral AI.

The llm-d community is also backed by the founders of the Sky Computing Lab at the University of California, Berkeley (creators of vLLM) and by the LMCache Lab at the University of Chicago (creators of LMCache).