Researchers from Stanford University, SambaNova Systems, and UC Berkeley have proposed Agentic Context Engineering (ACE), a new framework designed to improve large language models (LLMs) through evolving, structured contexts rather than weight updates. The method, described in a paper posted to arXiv, aims to make language models self-improving without retraining.

LLM-based systems often rely on prompt or context optimization to enhance reasoning and performance. While techniques like GEPA and Dynamic Cheatsheet show improvements, they often prioritize brevity, leading to “context collapse,” where detail is lost through repeated rewriting. ACE addresses this by treating contexts as evolving playbooks that develop over time through modular generation, reflection, and curation.

The framework divides responsibilities among three components:

- Generator, which produces reasoning traces and outputs,

- Reflector, which analyzes successes and failures to extract lessons,

- Curator, which integrates those lessons as incremental updates.
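The division of labor above can be sketched as a single adaptation step. This is a minimal illustration, not the authors' implementation: the `Playbook` class, the `ace_step` function, and the three `toy_*` callables are hypothetical names, and the toy functions stand in for what would be LLM calls in a real system.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Playbook:
    """Hypothetical container for the evolving context: a list of short lessons."""
    items: List[str] = field(default_factory=list)

    def render(self) -> str:
        # Turn the stored lessons into the text that is handed to the Generator.
        return "\n".join(f"- {item}" for item in self.items)


def ace_step(
    task: str,
    playbook: Playbook,
    generate: Callable[[str, str], str],
    reflect: Callable[[str, str], List[str]],
    curate: Callable[[Playbook, List[str]], None],
) -> str:
    """Run one Generator -> Reflector -> Curator pass and return the trace."""
    trace = generate(task, playbook.render())  # Generator: produce a trace/output
    lessons = reflect(task, trace)             # Reflector: extract lessons from it
    curate(playbook, lessons)                  # Curator: fold lessons into the context
    return trace


# Toy stand-ins (assumptions for illustration only, no model is called).
def toy_generate(task: str, context: str) -> str:
    # "Succeeds" only when the context already mentions the task.
    return "ok" if task in context else "failed"


def toy_reflect(task: str, trace: str) -> List[str]:
    # Emit a lesson only after a failure.
    return [f"hint for {task}"] if trace == "failed" else []


def toy_curate(playbook: Playbook, lessons: List[str]) -> None:
    # Append each lesson unless an identical one is already stored.
    for lesson in lessons:
        if lesson not in playbook.items:
            playbook.items.append(lesson)
```

Calling `ace_step` twice on the same task with a fresh `Playbook` shows the intended effect: the first pass fails and leaves a lesson behind, and the second pass succeeds because that lesson is now part of the context.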

Source: https://www.arxiv.org/pdf/2510.04618

Instead of rewriting full prompts, ACE performs delta updates—localized edits that accumulate new insights while preserving prior knowledge. A “grow-and-refine” mechanism manages expansion and redundancy by merging or pruning context items based on semantic similarity.
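A delta update with merge-on-insert deduplication might look roughly like the sketch below. It rests on stated assumptions: the `Bullet` class, `apply_delta`, and the `0.6` threshold are hypothetical, and word-level Jaccard overlap is swapped in as a cheap stand-in for the semantic similarity the paper describes, which would more plausibly be computed from embeddings.

```python
from dataclasses import dataclass
from typing import List


def jaccard(a: str, b: str) -> float:
    """Word-overlap similarity in [0, 1]; a stand-in for semantic similarity."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    if not ta or not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


@dataclass
class Bullet:
    """Hypothetical context item: one lesson plus a count of how often it was seen."""
    text: str
    hits: int = 1


def apply_delta(playbook: List[Bullet], delta: List[str], threshold: float = 0.6) -> None:
    """Apply a localized update in place instead of rewriting the whole context.

    Each new lesson is either merged into the first sufficiently similar
    existing item (refine) or appended as a new item (grow). Existing items
    are never rewritten or dropped here.
    """
    for text in delta:
        match = next((b for b in playbook if jaccard(b.text, text) >= threshold), None)
        if match is not None:
            match.hits += 1                 # near-duplicate: merge into existing item
        else:
            playbook.append(Bullet(text))   # genuinely new insight: grow the context
```

Because the update only touches matching items or appends new ones, earlier lessons survive unchanged, which is the property the delta approach relies on to avoid context collapse.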

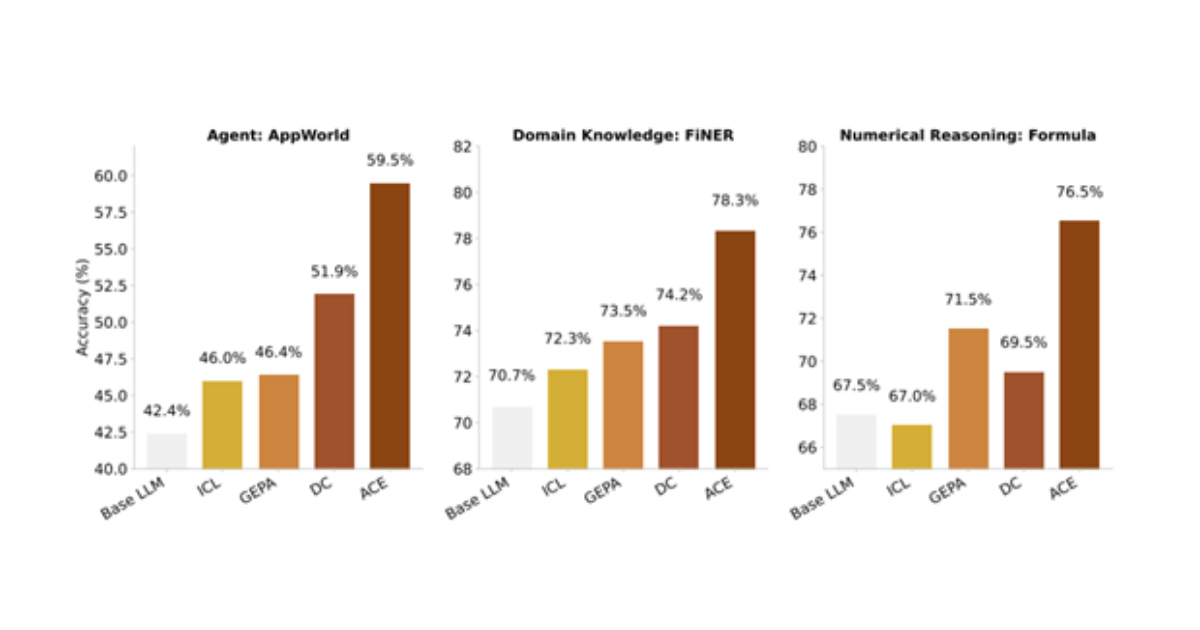

In evaluations, ACE improved performance across both agentic and domain-specific tasks. On the AppWorld benchmark for LLM agents, ACE achieved an average accuracy of 59.5%, outperforming prior methods by 10.6 percentage points and matching the top entry on the public leaderboard, a GPT-4.1–based agent from IBM. On financial reasoning datasets such as FiNER and Formula, ACE delivered an average gain of 8.6%, with strong results when ground-truth feedback was available.


The authors highlight that ACE’s improvements were achieved without model fine-tuning or labeled supervision in many cases, relying instead on natural signals such as task outcomes or code execution results. They report that ACE reduced adaptation latency by up to 86.9% and computational rollouts by more than 75% compared to established baselines like GEPA.

According to the researchers, the approach enables models to “learn” continuously through context updates while maintaining interpretability—an advantage for domains where transparency and selective unlearning are crucial, such as finance or healthcare.

Community reactions have been optimistic. For example, one user shared on Reddit:

> That is certainly encouraging. This looks like a smarter way to context engineer. If you combine it with post-processing and the other ‘low-hanging fruit’ of model development, I am sure we will see far more affordable gains.

ACE demonstrates that scalable self-improvement in LLMs can be achieved through structured, evolving contexts, offering an alternative path to continual learning without the cost of retraining.