Hi all! My name is Alena, and I am a QA Lead 🙂

In this article, I would like to share my experience in automating REST API testing with Postman, Newman, and Jenkins.

Postman is a popular API client that lets a team build, test, document, and share APIs collaboratively. An important feature of Postman is the ability to write and run JavaScript-based tests for APIs. Postman also offers integrations with some continuous integration (CI) tools, such as Jenkins.

Creating Collections and Writing Autotests in Postman

First, you need to create a collection and populate it with requests. Once the collection is ready, you can start writing autotests. There are two ways to add JavaScript code:

- You can add a script that runs before sending a request to the server, which is done on the “Pre-Request Script” tab.

- Alternatively, you can add a script that executes after receiving a response from the server, which is done on the “Tests” tab.

I typically use the “Tests” tab. Code added to this tab runs each time the request is executed, and the outcome appears on the “Test Results” tab of the server response. Test scripts can use dynamic variables, check data from the response, and pass received values between requests.
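As a rough sketch of passing a value between requests: the script below reads an `id` field from the response and stores it in a collection variable. The field name `id` and the variable name `createdId` are assumptions for illustration, not part of any particular API. The `typeof pm` guard only exists so the same file can also be run outside Postman.

```javascript
// Pure helper: pick the id out of a parsed response body.
// The "id" field is a hypothetical example; adjust it to your API.
function extractId(body) {
  if (body === null || typeof body !== "object" || body.id === undefined) {
    throw new Error("Response body has no 'id' field");
  }
  return String(body.id);
}

// Inside Postman the global `pm` object exists; elsewhere this part is skipped.
if (typeof pm !== "undefined") {
  pm.test("Response contains an id", function () {
    const id = extractId(pm.response.json());
    // "createdId" is a hypothetical variable name for later requests.
    pm.collectionVariables.set("createdId", id);
  });
}
```

A later request in the same collection could then reference the stored value as `{{createdId}}` in its URL or body.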

Conveniently, Postman offers ready-made code snippets for standard tasks, which you can edit to suit your needs and save time. As a result, this type of automation can be mastered by someone who knows only the basics of JavaScript.

The first simple autotest verifies that the request was successful and that we received status code 200 (or whichever code we expect). It is most convenient to add this test at the collection level so that all requests in the collection inherit the check:

pm.test("Status code is 200", function () {
    pm.response.to.have.status(200);
});

The next example is a JSON schema check. Why is JSON schema validation needed? Because:

- We monitor API responses and ensure that the format we receive is the same as expected.

- We receive an alert whenever there is any critical change in the JSON response.

- For integration tests, it is useful to check the schema. It can be generated once from the service response in order to test all future versions of the service with it.
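Generating a schema once from a real response could look roughly like the sketch below. The `inferSchema` helper is hypothetical (it is not a Postman function) and makes simplifying assumptions: arrays are described by their first element only, and every key present in the sample is marked as required.

```javascript
// Hypothetical helper: derive a simple JSON schema from one sample value.
function inferSchema(value) {
  if (value === null) return { type: "null" };
  if (Array.isArray(value)) {
    // Assumption: all items look like the first one.
    return value.length > 0
      ? { type: "array", items: inferSchema(value[0]) }
      : { type: "array" };
  }
  if (typeof value === "object") {
    const properties = {};
    for (const key of Object.keys(value)) {
      properties[key] = inferSchema(value[key]);
    }
    // Assumption: every key seen in the sample is required.
    return { type: "object", properties, required: Object.keys(value) };
  }
  if (typeof value === "number") {
    return { type: Number.isInteger(value) ? "integer" : "number" };
  }
  return { type: typeof value }; // "string" or "boolean" for parsed JSON
}

// Inside Postman: run once against a known-good response and copy the
// logged schema from the Postman console into the test script.
if (typeof pm !== "undefined") {
  console.log(JSON.stringify(inferSchema(pm.response.json()), null, 2));
}
```

A schema produced this way is only a starting point; it usually needs a manual review, for example to relax fields that are optional in practice.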

let schema = { "items": { "type": "boolean" } };
pm.test("Schema is valid", () => {
    pm.response.to.have.jsonSchema(schema);
});

Thus, we already have two automated REST API checks. I won’t bore you with further autotest examples, as they can be found in the documentation on Postman’s learning site, so let’s move on to the exciting part: integrating our collection with Jenkins.

Integrating Postman with Continuous Integration (CI) Build Systems

The first question that arises is how to link Postman and Jenkins. This is done through the command-line interface (CLI): Newman is an application that runs Postman collections and their tests directly from the command line, so a Jenkins job can call it like any other shell command.

Here is a simplified list of steps on how to proceed:

- Install Jenkins locally and run it. For more details, refer to the Jenkins documentation at Jenkins.io.

- Install Node.js and Newman in Jenkins:

  - Go to your Jenkins server and log in.

  - Navigate to “Manage Jenkins” > “Manage Plugins” and install the NodeJS plugin.

  - Go to “Manage Jenkins” > “Global Tool Configuration” and select “Add NodeJS” in the NodeJS section.

  - Enter a name for the Node.js installation.

  - In the “Global npm packages” field, enter newman.

  - Select “Save”. Detailed instructions can be found here.

- Verify that Node.js and npm (the package manager for JavaScript) are installed by entering the following commands in the command line:

node -v

npm -v

- Open the console and install Newman itself using the command:

npm install -g newman

- Save your collection of tests; you can either generate a URL for the tests (share) or save it as a file (export).

- Run the tests in the console with the following command:

  - If using a link:

newman run https://www.getpostman.com/collections/... # (replace with the actual URL)

  - If using a file, pass the path to the collection file:

newman run /Users/Postman/postman_collection.json

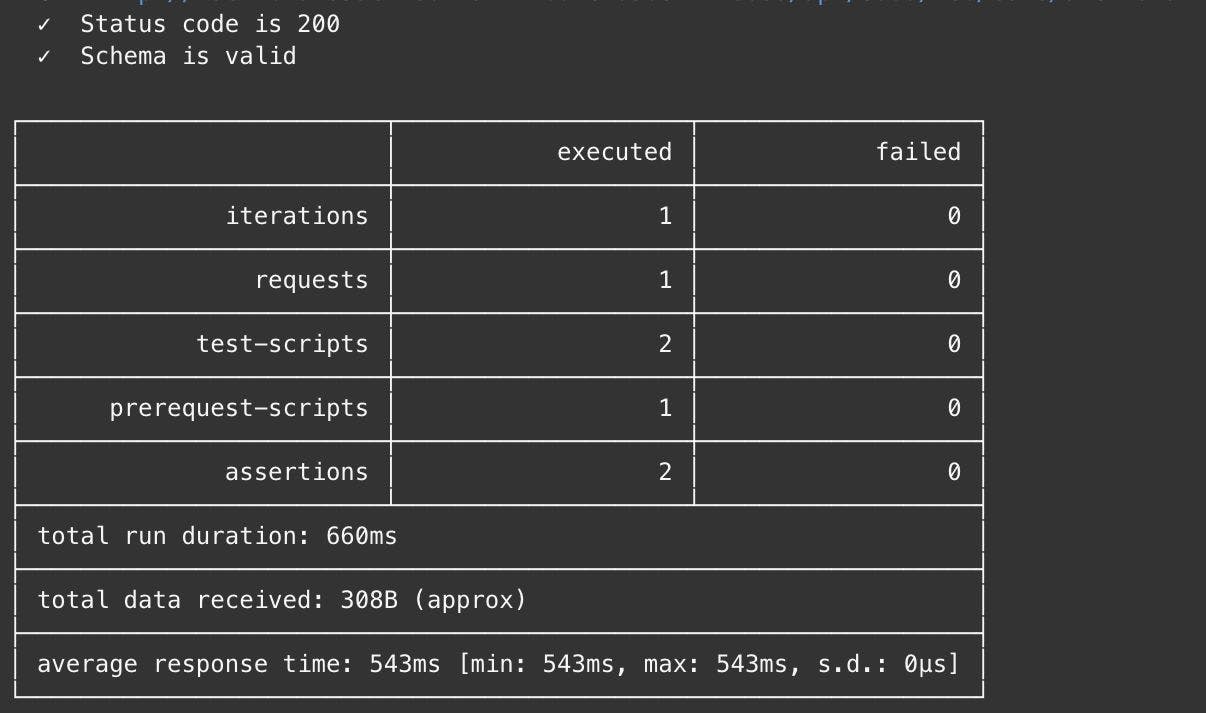

Once the command starts, the tests run in the console and the results are displayed, as in the screenshot above.

Next, you can execute Newman from Jenkins after each commit to check that the responses are still correct. It is advisable to parameterize the collection in Postman by placing the host address and other changing request parameters into variables; Jenkins can then supply their values, for example from the Credentials plugin.
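One possible way to hand such values to Newman is its `--env-var` option, which takes `key=value` pairs. The sketch below only assembles the argument list; the collection variable `baseUrl`, the environment variable `BASE_URL`, and the fallback address are assumptions made for illustration.

```javascript
// Build the argument list for `newman run` from a collection path
// and a map of variable names to values.
function buildNewmanArgs(collection, vars) {
  const args = ["run", collection];
  for (const [name, value] of Object.entries(vars)) {
    args.push("--env-var", `${name}=${value}`);
  }
  return args;
}

// BASE_URL is a hypothetical environment variable that a Jenkins job
// could inject; the localhost fallback is only for local runs.
const args = buildNewmanArgs("postman_collection.json", {
  baseUrl: process.env.BASE_URL || "http://localhost:8080",
});

// Print the resulting command instead of executing it.
console.log(["newman", ...args].join(" "));
```

Inside the collection, requests would then refer to the value as `{{baseUrl}}`. In a Jenkins shell step the same flag can be written directly on the `newman run` command line.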

To configure the job, add a build step with a shell command that calls Newman; Jenkins will then run the tests on each build. To run them on a schedule, open the job and go to Configure → Build Triggers → Build periodically. Jenkins will report each build as successful or failed depending on the test results.
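Jenkins decides between success and failure from the exit code of the shell step. The `newman` command returns a non-zero code by itself when tests fail. If Newman is instead called as a Node.js library, that signal has to be produced by hand, roughly as sketched below. The `COLLECTION_PATH` environment variable is a hypothetical name used only for this example.

```javascript
// Map the (err, summary) pair that newman.run() passes to its callback
// onto a process exit code: 0 for success, 1 for any error or failure.
function exitCodeFor(err, summary) {
  if (err) return 1;
  const failures = (summary && summary.run && summary.run.failures) || [];
  return failures.length > 0 ? 1 : 0;
}

// Try to load Newman; if it is not installed, the run is skipped.
let newman = null;
try {
  newman = require("newman");
} catch (e) {
  console.log("newman is not installed; skipping the run");
}

// COLLECTION_PATH is a hypothetical variable pointing at the exported collection.
if (newman && process.env.COLLECTION_PATH) {
  newman.run(
    { collection: process.env.COLLECTION_PATH, reporters: "cli" },
    (err, summary) => {
      process.exitCode = exitCodeFor(err, summary);
    }
  );
}
```

For most jobs the plain `newman run` shell command is enough; the library form is mainly useful when the results need extra processing before the build status is decided.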

You can also set up email notifications to inform team members about the latest builds and their statuses, which lets the team actively monitor API builds alongside other API components. Many other configurations are available to make the collection more dynamic.

Conclusion

In conclusion, I would like to outline the pros and cons of using this type of automation.

Advantages:

- Postman is intuitive and easy to use.

- It requires no complicated configuration.

- It supports various APIs (REST, SOAP, GraphQL).

- It integrates easily into CI/CD processes using Newman.

- Newman is user-friendly and ideal for those who frequently use Postman and seek extended functionality with minimal effort.

These tools are very effective for adding automation to testing, especially in the initial stages.

Disadvantages:

- Constructing automated tests for large projects with numerous REST services can be challenging.

- The inability to reuse code may lead to excessive duplication, making maintenance difficult, particularly when the number of scripts exceeds 1,000.

- This approach may not be viable if many tasks are being executed within the CI system—such as ongoing builds, running autotests, managing internal dependencies, or deploying builds to an environment. In such cases, you would need to increase the number of machines running the autotests and configure each one from scratch, ensuring that nothing is overlooked.
