A recent independent study conducted by SiliconData reveals key architecture points for the next generation of artificial intelligence infrastructure.

The most important takeaway from a recent independent performance study analyzed by theCUBE Research and the NYSE Wired team is that it’s not about any single graphics processing unit or accelerator generation. It’s about the system wrapped around the silicon.

The GPU is no longer the differentiator — the system is.

How this performance was measured

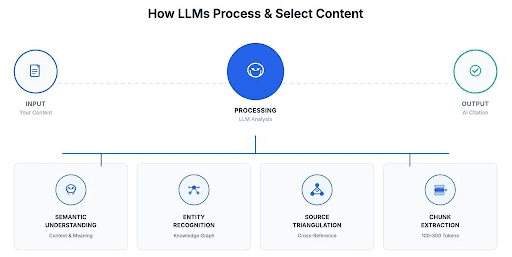

To see why identical GPUs can deliver dramatically different outcomes in production, it helps to start with how performance was measured.

SiliconData’s SiliconMark™ is an independent, third-party GPU benchmarking platform designed specifically for system-level AI workloads. Rather than testing GPUs in isolation, SiliconMark™ measures real-world performance across compute throughput, memory bandwidth, interconnects, power behavior, and performance variance, revealing how the same hardware behaves once deployed inside real production systems.

For multi-GPU and multi-node clusters, SiliconMark™ also measures inter-node bandwidth and latency. That makes it possible to evaluate what increasingly matters most in modern AI environments, where the true unit of compute is the system, not the chip.
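Why both latency and bandwidth matter can be seen with the common latency-bandwidth ("alpha-beta") cost model, in which moving a message costs roughly a fixed per-message latency plus its size divided by bandwidth. The sketch below is illustrative only: it is not SiliconMark's methodology, and the 5-microsecond latency and 50 GB/s bandwidth are hypothetical placeholders, not study results.

```python
def transfer_time_s(message_bytes: int, latency_s: float, bandwidth_bytes_per_s: float) -> float:
    """Alpha-beta estimate: fixed per-message latency plus size / bandwidth."""
    return latency_s + message_bytes / bandwidth_bytes_per_s


def latency_share(message_bytes: int, latency_s: float, bandwidth_bytes_per_s: float) -> float:
    """Fraction of the total transfer time spent on the fixed latency term."""
    total = transfer_time_s(message_bytes, latency_s, bandwidth_bytes_per_s)
    return latency_s / total


# Hypothetical link parameters (illustrative values only).
LATENCY_S = 5e-6   # 5 microseconds per message
BANDWIDTH = 50e9   # 50 GB/s

for size in (4 * 1024, 1024 * 1024, 1024 * 1024 * 1024):
    t = transfer_time_s(size, LATENCY_S, BANDWIDTH)
    share = latency_share(size, LATENCY_S, BANDWIDTH)
    print(f"{size:>12} bytes: {t * 1e6:10.1f} us, latency share {share:.1%}")
```

Small messages, such as the frequent synchronization traffic in distributed training, are dominated by latency, while large transfers are dominated by bandwidth, so a cluster benchmark needs both numbers to predict real behavior.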

This system-level approach is what makes the results of the study particularly revealing — and why they point to architectural differences that traditional GPU benchmarks often miss.

Across more than a thousand real-world benchmark runs, identical accelerators delivered dramatically different outcomes depending on how the surrounding infrastructure was designed, configured, and operated. Compute throughput, memory bandwidth, interconnect performance, power behavior, and variance all shifted based on system architecture. The conclusion is unavoidable: AI has entered a systems era, where infrastructure design — not chip specifications alone — determines real performance.

As one infrastructure leader on theCUBE put it succinctly:

“The unit of compute is no longer the GPU — it’s the data center.”

From GPUs to supercomputers disguised as data centers

Modern AI workloads are distributed by default. Large-scale training and inference now span thousands — sometimes tens of thousands — of accelerators. In this model, the data center itself becomes the computer, and performance emerges from how well compute, networking, memory and software are co-designed as a single system.

That reality has been underscored repeatedly by infrastructure leaders across the industry. Nvidia Corp. Senior Vice President Gilad Shainer captured the core challenge clearly:

“Distributed AI only works when thousands of accelerators behave like one supercomputer. That requires ultra-low-jitter, deterministic interconnects — otherwise the GPUs are just waiting on data.”

In other words, the limiting factor is no longer how fast an individual GPU can run in isolation. It’s how efficiently the entire system moves data, synchronizes work, and sustains predictable behavior under load.
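One way to see why jitter matters at scale: in a synchronous training step, every rank waits for the slowest one before the collective completes, so step time is roughly the maximum of the per-rank times. The toy simulation below assumes normally distributed per-rank compute time with a hypothetical 100 ms mean and 5 ms of jitter. It is a sketch of the effect, not a measurement from the study.

```python
import random
import statistics


def mean_step_time(num_ranks: int, mean_ms: float, jitter_ms: float,
                   trials: int = 1000, seed: int = 0) -> float:
    """Average synchronous step time when each step waits for the slowest rank."""
    rng = random.Random(seed)
    steps = []
    for _ in range(trials):
        # Per-rank time for this step; the collective finishes only when all ranks do.
        per_rank = [rng.gauss(mean_ms, jitter_ms) for _ in range(num_ranks)]
        steps.append(max(per_rank))
    return statistics.fmean(steps)


for ranks in (1, 8, 64, 512):
    print(f"{ranks:>4} ranks: mean step {mean_step_time(ranks, 100.0, 5.0):.1f} ms")
```

The average rank never gets slower, yet the mean step time climbs as rank count grows, because the chance that at least one rank lands in the slow tail rises. Reducing jitter, rather than raising average speed, is what keeps large clusters behaving like one machine.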

The redline approach: Industrialized AI at scale

One class of infrastructure providers in the independent study reflects a highly industrialized approach to AI. These environments consistently delivered the highest and most predictable compute performance. What stands out is not just peak throughput, but uniformity — tight performance distributions, minimal variance and near-identical mean and median results.

This profile signals deliberate design choices:

● Systems pushed close to thermal and power limits

● Highly standardized hardware configurations

● Tightly controlled software stacks

● Environments optimized for sustained throughput rather than flexibility

These platforms behave like classic AI factories — designed to run at scale, under load, with repeatable outcomes. For large, compute-bound workloads where predictability matters more than adaptability, this approach sets the performance ceiling. But peak throughput is not the only definition of value.
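The uniformity described above (tight distributions, minimal variance, near-identical mean and median) can be quantified with a few summary statistics. A minimal sketch, assuming a list of per-run throughput scores; the two sample lists are invented for illustration and are not data from the study.

```python
import statistics


def uniformity_summary(scores: list[float]) -> dict[str, float]:
    """Summarize how tightly a set of benchmark scores is clustered."""
    mean = statistics.fmean(scores)
    median = statistics.median(scores)
    stdev = statistics.stdev(scores)  # sample standard deviation
    return {
        "mean": mean,
        "median": median,
        # Coefficient of variation: spread relative to the average score.
        "cv": stdev / mean,
        # Relative mean-median gap: near zero when runs are symmetric and outlier-free.
        "mean_median_gap": abs(mean - median) / median,
    }


# Invented example runs (arbitrary units), not study results.
standardized = [100.0, 101.0, 99.0, 100.5, 99.5]
flexible = [92.0, 105.0, 88.0, 110.0, 96.0]

for name, runs in (("standardized", standardized), ("flexible", flexible)):
    s = uniformity_summary(runs)
    print(f"{name}: mean={s['mean']:.1f} median={s['median']:.1f} "
          f"cv={s['cv']:.3f} gap={s['mean_median_gap']:.3f}")
```

A low coefficient of variation together with a near-zero mean-median gap is the statistical signature of the tightly standardized environments described here; a wider spread points to the more flexible profile discussed later.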

Where performance is really being won: data movement

A second performance pattern in the study reveals something increasingly important: data movement, not raw math, is becoming the bottleneck for modern AI workloads.

One key takeaway this year from theCUBE Research can be summarized this way:

“When you treat the data center as one supercomputer, the efficiency of the fabric determines how much compute you actually get.”

Today’s AI applications are no longer dominated by long, uninterrupted training runs. Increasingly, they involve:

● Frequent data ingestion

● Retrieval-augmented generation pipelines

● Simulation and synthetic data workflows

● Agentic systems coordinating CPUs, accelerators and memory

In these scenarios, GPUs often stall not because they lack compute capacity, but because data does not arrive on time.

“In production AI, GPUs don’t stall because they lack performance — they stall because data doesn’t arrive on time.”
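A simple way to see the effect is to compare a pipeline that loads each batch before computing on it with one that prefetches the next batch while the current one computes. The sketch assumes fixed, hypothetical per-batch times and perfect overlap in the prefetch case; real pipelines fall somewhere in between.

```python
def gpu_utilization(compute_ms: float, data_ms: float, overlapped: bool) -> float:
    """Fraction of wall-clock time the accelerator spends computing per batch."""
    if overlapped:
        # Prefetching hides data movement until it becomes the slower stage.
        step_ms = max(compute_ms, data_ms)
    else:
        # Serial pipeline: the accelerator idles while each batch arrives.
        step_ms = compute_ms + data_ms
    return compute_ms / step_ms


# Hypothetical per-batch timings in milliseconds.
for compute_ms, data_ms in ((40.0, 10.0), (40.0, 40.0), (40.0, 80.0)):
    serial = gpu_utilization(compute_ms, data_ms, overlapped=False)
    prefetch = gpu_utilization(compute_ms, data_ms, overlapped=True)
    print(f"compute={compute_ms} ms data={data_ms} ms: "
          f"serial {serial:.0%}, prefetch {prefetch:.0%}")
```

In the last case, data movement is slower than compute, so even perfect overlap caps utilization at 50%. At that point no amount of scheduling recovers the lost time; only faster data movement does.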

Operators consistently report low model FLOPs utilization (MFU) in real workloads due to fragmented memory, limited KV-cache capacity, and slow or contended data paths. Improving interconnect bandwidth, memory hierarchy, and system balance has become one of the most effective ways to raise usable performance per watt and per rack.
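MFU is usually computed as the FLOPs a model actually needs per second divided by the hardware's peak FLOPs per second. A minimal sketch, assuming the widely used approximation of about 6 FLOPs per parameter per token for dense transformer training (forward plus backward pass); the model size, throughput, and peak figure are hypothetical inputs, not numbers from the study.

```python
def training_mfu(num_params: float, tokens_per_second: float,
                 peak_flops_per_second: float) -> float:
    """MFU for dense transformer training, using the ~6 * params FLOPs-per-token approximation."""
    achieved_flops_per_second = 6.0 * num_params * tokens_per_second
    return achieved_flops_per_second / peak_flops_per_second


# Hypothetical: a 7B-parameter model processing 3,000 tokens/s on an accelerator
# with an assumed peak of 1e15 FLOP/s.
print(f"MFU = {training_mfu(7e9, 3_000, 1e15):.1%}")
```

Because the numerator counts only the math the model requires, any time the accelerator spends waiting on memory or data paths shows up directly as lower MFU.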

Bottom line: The constraint in AI performance is increasingly operational, not technological. Better system design delivers higher returns on existing infrastructure investments.

Lambda as a case study in systems-first design

Within this context, Lambda stands out in the independent data — not because it chases peak theoretical compute at all costs, but because its architecture reflects a different philosophy about how AI systems should be built and used.

In the study, Lambda consistently demonstrated exceptional host-to-device and device-to-host bandwidth, approaching practical limits of modern interconnects. That design choice aligns closely with the realities of application-driven AI workloads, where moving data efficiently through the system often matters more than squeezing out the last percentage point of raw compute.

This emphasis on interconnect performance comes with a tradeoff: greater variability compared to tightly standardized environments. But that variability is best understood as a reflection of flexibility, not fragility.

Variability as a feature, not a bug

More flexible systems naturally expose a wider range of configurations and performance profiles. For AI-native developers, this variability often translates into faster experimentation, clearer bottleneck diagnosis, and the ability to tune infrastructure to application needs rather than conforming to a single, rigid performance envelope.

As one infrastructure expert noted, flexibility allows systems to be optimized for real workloads—not just benchmarks.

This is particularly relevant during model development and iteration, where developer control and visibility can be more valuable than absolute uniformity.

Why this resonates with AI-native developers

For application-focused teams, the key challenge is rarely maximizing headline FLOPS. It’s minimizing friction between idea and execution.

As Robert Brooks IV, a founding team member at Lambda, said on theCUBE + NYSE Wired program at the Raise Summit in Paris last July:

“Most ML engineers aren’t DevOps experts. The system should disappear so they can focus on models.”

Lambda’s design philosophy reflects this reality. By abstracting away much of the infrastructure plumbing — drivers, cluster setup and software drift — while remaining opinionated where simplicity saves time, Lambda aims to give developers a platform that supports both rapid experimentation and a path to scale on the same underlying architecture.

This approach aligns closely with DevRel-driven ecosystems, where approachability, transparency, and repeatability matter as much as raw performance.

The bigger shift: AI factories as an operating model

The deeper insight from the study isn’t comparative — it’s evolutionary.

AI factories are no longer just collections of accelerators. They are operating models, defined by how effectively an entire system is designed, integrated, and run. Performance at scale now emerges from the coordination of:

● Power delivery and thermal engineering

● Memory hierarchy and bandwidth

● Interconnect design

● Scheduling and orchestration

● Software standardization

● Reliability, serviceability, and telemetry

This shift has been articulated most plainly at the architectural level. As Nvidia CEO Jensen Huang put it on stage at GTC last year:

“Networking has become the operating system of the AI factory.”

But the implications extend beyond racks and clusters to the physical data center itself. During a recent theCUBE + NYSE Wired segment on AI Factories – Data Centers of the Future, Lambda’s Kenneth Patchett offered a grounded view from the infrastructure front lines:

“The real scarcity isn’t GPUs — it’s data centers designed for AI-scale systems.”

Rather than framing today’s bottlenecks as simple GPU scarcity, Patchett pointed to a more fundamental constraint: the limited availability of data centers purpose-built for AI-scale systems. As hardware generations turn faster and densities rise, traditional facilities struggle to keep pace — not because they lack floor space, but because they weren’t designed for the power, cooling and systems integration AI now demands.

Patchett emphasized a modular, element-based approach, where power, water, air and physical layout are treated as first-class design variables and engineered in tight coordination across mechanical, electrical and plumbing disciplines. This kind of systems thinking, he argued, is what allows infrastructure to evolve alongside rapidly iterating compute and networking technologies.

In that sense, the AI factory is no longer just what runs inside the data center. It is the data center.

Why this matters

We are past the era where chip specifications alone define AI performance. AI is now a systems engineering problem, and the most meaningful innovation is happening in how complete systems are architected for real workloads. The independent data makes that clear.

Lambda’s results illustrate how prioritizing interconnects, system balance and developer experience can unlock value that raw silicon alone cannot.

The winners in this next phase won’t just assemble accelerators. They’ll design balanced, application-aware AI factories that let developers move faster, reason more clearly about performance, and build systems that behave the way modern AI actually does.

That’s the real Holy Grail.

Image: Wikipedia


About News Media

Founded by tech visionaries John Furrier and Dave Vellante, News Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.