Uber Engineering redesigned its mobile analytics architecture to standardize event instrumentation across iOS and Android, addressing fragmented ownership, inconsistent semantics, and unreliable cross-platform data. The goal was to simplify engineering effort, improve data quality, and provide trustworthy insights for product and data teams across rider and driver applications.

According to Uber engineers, mobile analytics are critical for making decisions, tracking feature adoption, and measuring user experience. As apps and teams grew, instrumentation became decentralized. Feature teams defined and emitted events independently, shared UI components often lacked analytics hooks, and similar interactions were logged differently from team to team. As a result, over 40% of mobile events were custom or ad-hoc, complicating analysis and reducing confidence in aggregated metrics.

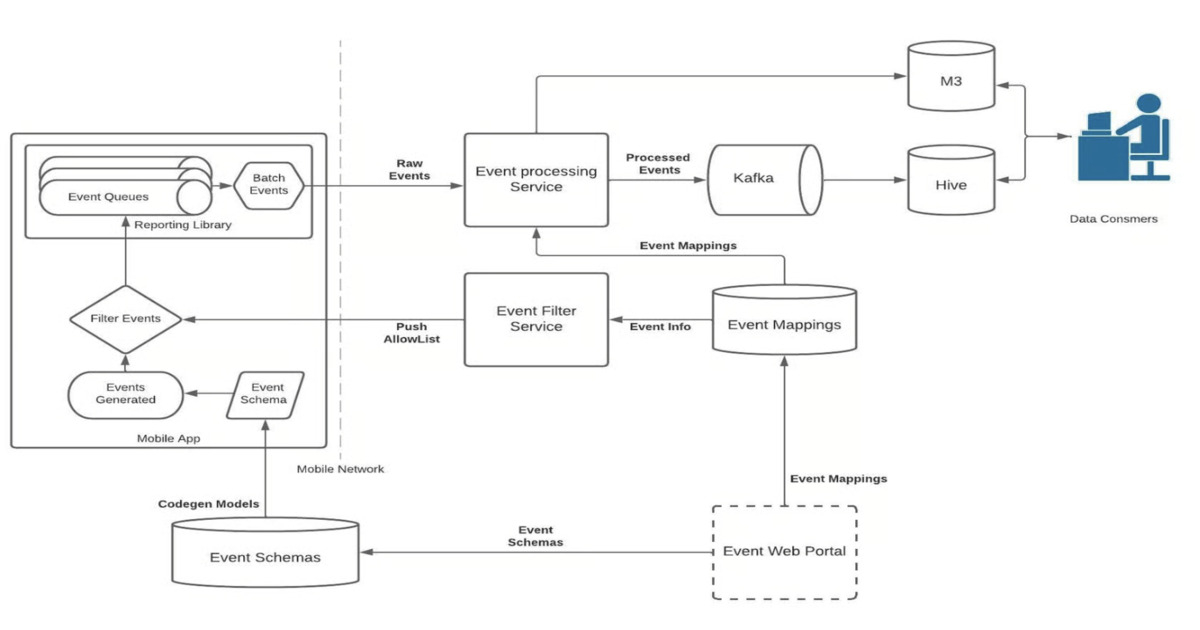

To address these challenges, engineers moved core analytics responsibilities from feature-level code to shared infrastructure. Working with product, design, and data science teams, they defined standardized event types such as taps, impressions, and scrolls. These events are code-generated from shared schemas, instrumented at the UI component level, emitted through a centralized reporting layer, enriched by backend services, and consumed via Uber’s analytics pipelines.

Uber’s mobile analytics system architecture (Source: Uber Blog Post)
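
As a rough illustration of that flow, the Python sketch below models standardized event types defined in one shared schema and a single reporting layer that every event passes through. The names `EVENT_SCHEMAS`, `make_event`, and `Reporter`, along with the field lists, are hypothetical and not taken from Uber's implementation. Uber generates platform code from schemas; this sketch only approximates that with runtime validation.

```python
from dataclasses import dataclass, field
from typing import Any

# Hypothetical shared schema: each standardized event type and its required fields.
EVENT_SCHEMAS: dict[str, set[str]] = {
    "tap": {"surface"},
    "impression": {"surface"},
    "scroll": {"surface", "direction"},
}


@dataclass(frozen=True)
class Event:
    event_type: str
    payload: dict[str, Any]


def make_event(event_type: str, **payload: Any) -> Event:
    """Build an event, rejecting types or payloads that do not match the schema."""
    if event_type not in EVENT_SCHEMAS:
        raise ValueError(f"unknown event type: {event_type}")
    missing = EVENT_SCHEMAS[event_type] - set(payload)
    if missing:
        raise ValueError(f"missing fields for {event_type}: {sorted(missing)}")
    return Event(event_type, dict(payload))


@dataclass
class Reporter:
    """Single emission point; a real one would batch and upload events."""
    sent: list[Event] = field(default_factory=list)

    def emit(self, event: Event) -> None:
        self.sent.append(event)


reporter = Reporter()
reporter.emit(make_event("tap", surface="confirm_button"))
reporter.emit(make_event("scroll", surface="restaurant_list", direction="down"))
```

Because every event is built by one function and sent through one reporter, an ad-hoc event type or a missing field fails at the call site rather than surfacing later as inconsistent data.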

A key decision was to embed analytics logic into platform-level UI components. Engineers introduced analytics builders to manage event lifecycle, metadata attachment, and emission logic, allowing feature teams to adopt standardized analytics without writing custom instrumentation. Performance testing with a sample app of 100 impression-logging components showed no regressions in CPU usage or frame rate, a prerequisite for rollout across performance-sensitive devices.

Data flow diagram for the ImpressionAnalyticsBuilder class event generation (Source: Uber Blog Post)
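
To make the builder idea concrete, here is a minimal Python sketch of how an impression builder attached to a UI component might own lifecycle state, metadata, and emission. Only the class name `ImpressionAnalyticsBuilder` comes from the article. The methods, the `min_visible_fraction` threshold, and the emit-once-per-appearance rule are assumptions made for illustration, not Uber's actual logic.

```python
from typing import Any, Callable

Emit = Callable[[dict[str, Any]], None]


class ImpressionAnalyticsBuilder:
    """Hypothetical builder: logs one impression each time a view becomes visible."""

    def __init__(self, surface: str, emit: Emit, min_visible_fraction: float = 0.5):
        self._surface = surface
        self._emit = emit
        self._min_visible_fraction = min_visible_fraction  # assumed threshold
        self._metadata: dict[str, Any] = {}
        self._visible = False

    def with_metadata(self, **metadata: Any) -> "ImpressionAnalyticsBuilder":
        """Attach metadata that is sent with every impression from this component."""
        self._metadata.update(metadata)
        return self

    def on_visibility_changed(self, visible_fraction: float) -> None:
        """Called by the UI component whenever its on-screen fraction changes."""
        now_visible = visible_fraction >= self._min_visible_fraction
        if now_visible and not self._visible:
            self._emit({"type": "impression", "surface": self._surface, **self._metadata})
        self._visible = now_visible


events: list[dict[str, Any]] = []
builder = ImpressionAnalyticsBuilder("promo_card", events.append).with_metadata(list_index=3)
for fraction in (0.2, 0.6, 0.9, 0.0, 0.7):
    builder.on_visibility_changed(fraction)
print(len(events))  # 2: the view appeared, left the screen, and appeared again
```

The feature team only supplies a surface name and optional metadata; the decision about when an impression counts lives in the shared component.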

The platform also standardized the collection of common metadata. App-level metadata, such as pickup locations or restaurant UUIDs, is logged automatically, while event-type metadata, including list index, row identifiers, scroll direction, and view position, is captured by AnalyticsBuilder. Surfaces are standardized via Thrift models, ensuring consistent logging of container views, buttons, and sliders.

Analytics metadata pyramid overview (Source: Uber Blog Post)
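
The three metadata layers can be pictured as separate sections of one payload. The following Python sketch is an assumption-laden illustration: the `Surface` dataclass stands in for a Thrift-defined model, and `build_payload`, the key names, and the sample values are all hypothetical.

```python
from dataclasses import dataclass, asdict
from typing import Any


@dataclass(frozen=True)
class Surface:
    """Stand-in for a schema-defined surface model (Thrift in the article)."""
    kind: str        # e.g. "button", "slider", "container_view"
    identifier: str


def build_payload(surface: Surface, event_metadata: dict[str, Any],
                  app_metadata: dict[str, Any]) -> dict[str, Any]:
    """Keep each layer under its own key so fields from different layers cannot collide."""
    return {
        "surface": asdict(surface),
        "event": dict(event_metadata),
        "app": dict(app_metadata),
    }


# App-level context is supplied once by the platform, not by each feature.
app_context = {"restaurant_uuid": "hypothetical-uuid"}

payload = build_payload(
    Surface(kind="button", identifier="add_to_cart"),
    event_metadata={"list_index": 2, "row_id": "row-17"},
    app_metadata=app_context,
)
```

Copying each layer into the payload means a later change to the shared app context does not alter events that were already built.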

To validate the platform, engineers dual-emitted analytics for two features through both the legacy and new APIs. Queries verified that event volumes, metadata, and surfaces matched across platforms, and that semantics such as scroll-start/stop counts and view positions aligned. The pilot revealed platform discrepancies and divergent logging approaches, and it highlighted the benefits of list enhancements that combined multiple row events into single standardized events, simplifying querying and improving testability. Feature teams also adopted visibility checks, reducing bespoke implementations.
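
A dual-emission check of this kind boils down to comparing counts from the two paths. The Python sketch below assumes events have already been reduced to `(event type, surface)` pairs. Uber ran queries against its analytics pipelines, so `count_mismatches` and the sample data here are hypothetical stand-ins.

```python
from collections import Counter
from typing import Iterable

EventKey = tuple[str, str]  # (event type, surface)


def count_mismatches(
    legacy: Iterable[EventKey], standardized: Iterable[EventKey]
) -> dict[EventKey, tuple[int, int]]:
    """Return (legacy count, standardized count) for every key whose counts differ."""
    legacy_counts = Counter(legacy)
    new_counts = Counter(standardized)
    return {
        key: (legacy_counts[key], new_counts[key])
        for key in sorted(legacy_counts.keys() | new_counts.keys())
        if legacy_counts[key] != new_counts[key]
    }


# Invented sample data: the legacy path logged one extra impression.
legacy_events = [("impression", "feed_row")] * 3 + [("tap", "cta")]
standardized_events = [("impression", "feed_row")] * 2 + [("tap", "cta")]

mismatches = count_mismatches(legacy_events, standardized_events)
print(mismatches)  # {('impression', 'feed_row'): (3, 2)}
```

A mismatch is not automatically a bug in the new path. Where several row events were deliberately combined into one standardized event, the counts are expected to differ and need to be reconciled by hand.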

Following the pilot, Uber’s analytics team handled the migration of legacy events to standardized APIs, allowing product teams to stay focused on their roadmaps. Where support was needed, they created automated scripts that scan iOS and Android code, assess high-priority events, and produce a list suitable for migration. The platform team also added a linter to block new tap or impression events created with non-standard APIs, preventing further drift. Engineers reported improved cross-platform parity, consistent metadata and semantics, reduced instrumentation code, reliable impression counts, and extensible, out-of-the-box coverage for UI interactions.
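
A lint rule like the one described can be approximated with a pattern scan over source files. In the Python sketch below, `legacyAnalytics.trackTap` and `legacyAnalytics.trackImpression` are invented placeholders for whatever non-standard APIs a real rule would target; the article does not name them, and Uber's linter is likely built on platform lint tooling rather than regular expressions.

```python
import re

# Hypothetical legacy call shapes; a real linter would target the actual non-standard APIs.
NON_STANDARD_CALL = re.compile(r"\blegacyAnalytics\.(trackTap|trackImpression)\s*\(")


def find_violations(source: str) -> list[tuple[int, str]]:
    """Return (line number, matched method) for each non-standard tap or impression call."""
    violations = []
    for line_number, line in enumerate(source.splitlines(), start=1):
        match = NON_STANDARD_CALL.search(line)
        if match:
            violations.append((line_number, match.group(1)))
    return violations


# Invented Kotlin-like snippet used only as scan input.
sample = """\
button.setOnClickListener {
    legacyAnalytics.trackTap("checkout_button")
    analytics.tap(surface)
}
"""
violations = find_violations(sample)
print(violations)  # [(2, 'trackTap')]
```

Run in continuous integration, a non-empty result would fail the build, which is what prevents new non-standard events from being added.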

Looking ahead, Uber engineers are advancing analytics through componentization, assigning unique IDs to UI elements such as buttons and lists to standardize event naming and metadata, further reducing developer effort.