Am I alone in thinking that nearly every week another paper drops claiming state-of-the-art performance with the latest model? You read it, understand the innovation, and then face the real question: will this actually work for my use case?

In applied AI for computer vision (CV), we live in this cycle constantly. Research benchmarks rarely mirror the messy reality of industrial environments: varied lighting, occlusions, and edge cases that academic datasets never capture. The gap between a promising paper and production-ready performance in vertical industries is wider than most teams expect.

Why Most Evaluation Approaches Fail

The problem isn’t a lack of metrics or benchmarks. It’s that evaluation systems are often:

- Too complex to implement quickly.

- Disconnected from real-world use cases.

- Massive time sinks upfront.

- Opaque about failure modes.

I ran into this firsthand while working on a challenging computer vision use case. Three engineers on my team were exploring different approaches – varying model architectures, third-party models, and training strategies. Progress looked promising until we tried to compare results.

Everyone was using slightly different datasets. Different metrics. Different preprocessing steps. Comparing apples to apples became impossible, and we realized we were burning time debating which approach was better without any reliable way to know.

We needed a new evaluation framework. Not eventually—immediately. How do you know which models are actually good?

And as a quick note: this framework isn’t just for evaluating third-party models. It’s equally valuable for internal model development, helping teams move faster and make better decisions about which approaches deserve resources and which should be shelved early.

The 3-Pillar Evaluation Framework

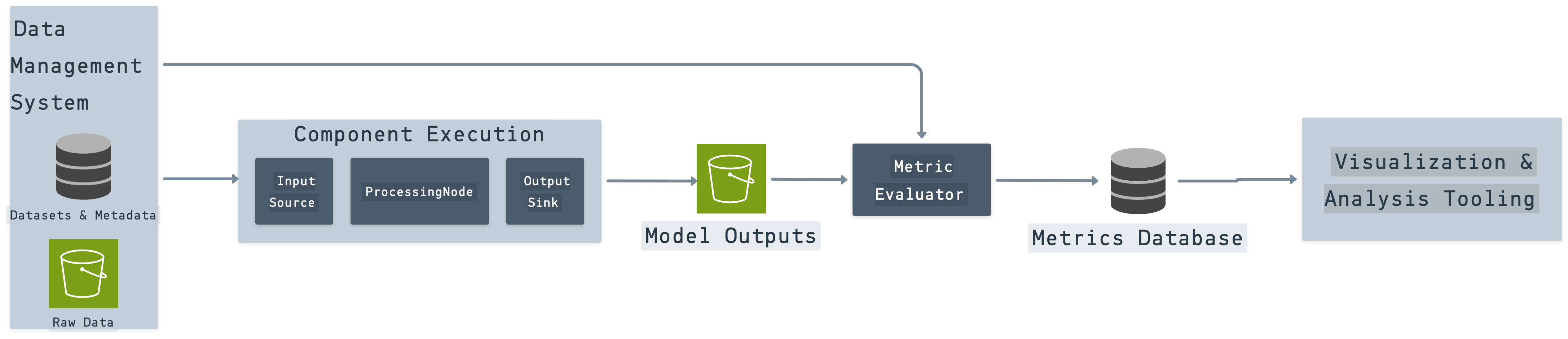

Good evaluation systems have three core components: execution framework, data, and result analysis tooling. Get all three right, and you can test models quickly with enough confidence to deploy.

Pillar 1: Fast Integration, Frictionless Execution

If testing a new model takes hours of setup, you won’t test enough models. Speed matters because iteration matters.

The execution system breaks into two stages:

- Component execution pulls data from your source, processes it with the model, and dumps raw results to a sink. We built this using abstract classes that anyone on the team can inherit from:

  - InputSource handles varying inputs: videos, images, different storage locations.

  - ProcessingNode runs the model. Inherit from this class and update the code to try any new model, whether internally trained or third-party.

  - OutputSink takes model outputs and writes them to a datastore, handling different output types: classification, detection, segmentation, text.

- Metric calculation takes raw model outputs, calculates metrics, and writes results to a database. The schema is strict. Each evaluation job gets its own table, and that table must contain one row per data unit in the evaluation. This database connects directly to your visualization tool. Having a row per data unit allows you to aggregate and filter by metadata. Each data unit should be tagged with relevant metadata: which customer it came from, special conditions like low lighting or outdoor cameras, time of day, or any other attributes that might influence model performance. This structure makes it straightforward to identify patterns, such as discovering that your model struggles specifically with outdoor cameras during dawn hours, or that performance varies significantly across different customer environments.
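
To make the component-execution stage concrete, here is a minimal sketch of how the three abstract classes could fit together. The class names InputSource, ProcessingNode, and OutputSink come from the description above; the method names (`read`, `process`, `write`), the `run_components` helper, and the toy subclasses are assumptions made for illustration, not our production code.

```python
from abc import ABC, abstractmethod
from typing import Any, Iterator, Tuple


class InputSource(ABC):
    """Yields (unit_id, payload) pairs from wherever the data lives."""

    @abstractmethod
    def read(self) -> Iterator[Tuple[str, Any]]:
        ...


class ProcessingNode(ABC):
    """Wraps a model; subclass it to try a new one."""

    @abstractmethod
    def process(self, payload: Any) -> Any:
        ...


class OutputSink(ABC):
    """Persists raw model outputs."""

    @abstractmethod
    def write(self, unit_id: str, output: Any) -> None:
        ...


def run_components(source: InputSource, node: ProcessingNode, sink: OutputSink) -> int:
    """Pull each unit from the source, run the model, dump the raw result."""
    count = 0
    for unit_id, payload in source.read():
        sink.write(unit_id, node.process(payload))
        count += 1
    return count


# Toy implementations (hypothetical), just to show the inheritance pattern.
class ListSource(InputSource):
    def __init__(self, items):
        self.items = items

    def read(self):
        yield from self.items


class BrightnessClassifier(ProcessingNode):
    """Stand-in 'model': labels a list of pixel values by its mean."""

    def process(self, payload):
        mean = sum(payload) / len(payload)
        return {"label": "bright" if mean >= 128 else "dark", "score": mean / 255}


class MemorySink(OutputSink):
    def __init__(self):
        self.rows = {}

    def write(self, unit_id, output):
        self.rows[unit_id] = output


sink = MemorySink()
processed = run_components(
    ListSource([("img-1", [200, 220, 240]), ("img-2", [10, 30, 20])]),
    BrightnessClassifier(),
    sink,
)
```

Trying a different model then means writing one new ProcessingNode subclass; the source and sink stay untouched.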

The entire system runs in parallel and writes results incrementally. Parallelization ensures you get results quickly. As each input is processed, results are immediately written to the output database. You can track job progress live in a dashboard, identify issues early, and cancel or rerun jobs as needed. If a job crashes, you at least have partial data from the run.
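
One way to get both properties, sketched here with Python's standard library only: a thread pool runs the model on many inputs at once, and each result is committed as soon as it finishes. The `fake_model` function, the `results` table layout, and the unit ids are placeholders I made up for the example; a real setup would likely use a job scheduler and a shared database instead of in-memory SQLite.

```python
import sqlite3
from concurrent.futures import ThreadPoolExecutor, as_completed


def fake_model(unit_id: str) -> float:
    """Hypothetical stand-in for model inference; returns a score."""
    if unit_id.startswith("corrupt"):
        raise ValueError("unreadable input")
    return len(unit_id) / 10.0


def run_job(unit_ids, conn, max_workers=4):
    conn.execute(
        "CREATE TABLE IF NOT EXISTS results "
        "(unit_id TEXT PRIMARY KEY, score REAL, error TEXT)"
    )
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(fake_model, uid): uid for uid in unit_ids}
        # as_completed yields futures in finish order, not submit order.
        for future in as_completed(futures):
            uid = futures[future]
            try:
                row = (uid, future.result(), None)
            except Exception as exc:
                # One bad input is recorded, not allowed to kill the job.
                row = (uid, None, repr(exc))
            # Commit per unit: a dashboard sees progress right away, and a
            # crash later in the job still leaves the finished rows behind.
            conn.execute("INSERT OR REPLACE INTO results VALUES (?, ?, ?)", row)
            conn.commit()


conn = sqlite3.connect(":memory:")
run_job(["cam-a-001", "cam-b-002", "corrupt-003", "cam-c-004"], conn)
done = conn.execute("SELECT COUNT(*) FROM results").fetchone()[0]
failed = conn.execute(
    "SELECT COUNT(*) FROM results WHERE error IS NOT NULL"
).fetchone()[0]
```

All database writes happen in the main thread, which keeps the sketch compatible with SQLite's default single-thread connection check.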

This setup means integration is measured in minutes. Minimal setup. Easy enough that anyone on the team can run it.

Pillar 2: Quality Data That Reflects Reality

Your evaluation is only as good as your data. You need datasets that cover two things:

- A representative sample of customer data. Random sampling often works here as a starting point, giving you a baseline understanding of how a model performs on typical inputs.

- Edge cases. The scenarios where models break—unusual lighting, rare object configurations, atypical user behavior, etc. These are the inputs that differentiate good models from great ones.

Building this dataset is a continuous process. As your application evolves, so do the edge cases worth tracking. You may need to mine data using embedding similarity search to find similar failure modes, or use diversity sampling on embeddings to systematically capture rare conditions. These techniques help you move beyond random sampling to deliberately surface the scenarios where models are most likely to break – ensuring your evaluation dataset stays relevant as your application matures.
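
As a rough illustration of both mining techniques, the sketch below ranks a pool of embeddings by cosine similarity to a known failure, then picks a diverse subset with greedy farthest-point sampling. Farthest-point sampling is one common way to do diversity sampling, not necessarily the one you will end up with. The two-dimensional vectors and the ids are invented; real embeddings would come from your model and usually live in a vector index.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def most_similar(query, embeddings, k):
    """Return the ids of the k pool items closest to a known failure."""
    ranked = sorted(
        embeddings, key=lambda uid: cosine(query, embeddings[uid]), reverse=True
    )
    return ranked[:k]


def farthest_point_sample(embeddings, k, seed_id):
    """Greedy diversity sampling: keep adding the item farthest from the picks."""
    picked = [seed_id]
    # Distance from every item to its nearest already-picked item.
    min_dist = {
        uid: math.dist(vec, embeddings[seed_id]) for uid, vec in embeddings.items()
    }
    while len(picked) < min(k, len(embeddings)):
        candidates = [uid for uid in embeddings if uid not in picked]
        nxt = max(candidates, key=min_dist.get)
        picked.append(nxt)
        for uid, vec in embeddings.items():
            min_dist[uid] = min(min_dist[uid], math.dist(vec, embeddings[nxt]))
    return picked


# Toy embedding pool (made-up values).
pool = {
    "day-1": [1.0, 0.0],
    "day-2": [0.9, 0.1],
    "dusk-1": [0.5, 0.5],
    "night-1": [0.0, 1.0],
    "night-2": [0.1, 0.9],
}
known_failure = [0.05, 0.95]

similar = most_similar(known_failure, pool, k=2)   # both night images
diverse = farthest_point_sample(pool, k=3, seed_id="day-1")
```

Similarity search widens one known failure into a cluster of related cases; diversity sampling does the opposite and spreads the picks across the embedding space so rare conditions are not crowded out by common ones.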

Ground truth matters too. If your labels are wrong, your metrics are meaningless. Invest time here – whether through careful manual annotation, multiple annotators with consensus mechanisms, or validated automated labeling for clear-cut cases.
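
A consensus mechanism can be as simple as a majority vote with an agreement threshold. The sketch below is one such rule, with an arbitrary two-thirds threshold and made-up labels; items that miss the threshold are routed to review instead of being silently accepted.

```python
from collections import Counter


def consensus_label(votes, min_agreement=2 / 3):
    """Return the majority label if enough annotators agree, else None."""
    if not votes:
        return None
    label, count = Counter(votes).most_common(1)[0]
    return label if count / len(votes) >= min_agreement else None


# Hypothetical annotations: three annotators per image.
annotations = {
    "img-1": ["person", "person", "person"],
    "img-2": ["person", "forklift", "person"],
    "img-3": ["person", "forklift", "pallet"],
}

resolved = {uid: consensus_label(votes) for uid, votes in annotations.items()}
needs_review = [uid for uid, label in resolved.items() if label is None]
```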

Pillar 3: Easy-to-Use Result Analysis After Integration

Finding insights should be simple. A good evaluation interface lets you trigger a job on a specific data subset with minimal friction. Engineers shouldn’t need to write custom scripts or navigate complex config files just to run a comparison.

Incremental result updates are critical. Even with parallelization, evaluation jobs can take hours depending on data volume. Having metrics populate a dashboard as results come in helps engineers catch issues early and monitor progress. If a job fails partway through, you still have incremental data to work with.

Results need to be interpretable and actionable. You should be able to slice data by attribute – time of day, location, object type, whatever dimensions matter for your application. This helps you root-cause failure modes.
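
With one row per data unit and metadata stored alongside each result, slicing becomes a GROUP BY. The sketch below uses in-memory SQLite; the table name `eval_job_001`, the column names, and the rows are all invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    """CREATE TABLE eval_job_001 (
           unit_id TEXT PRIMARY KEY,
           customer TEXT,
           camera TEXT,
           time_of_day TEXT,
           correct INTEGER
       )"""
)
rows = [
    ("u1", "acme", "indoor", "day", 1),
    ("u2", "acme", "outdoor", "dawn", 0),
    ("u3", "acme", "outdoor", "dawn", 0),
    ("u4", "globex", "outdoor", "day", 1),
    ("u5", "globex", "indoor", "day", 1),
    ("u6", "globex", "outdoor", "dawn", 1),
]
conn.executemany("INSERT INTO eval_job_001 VALUES (?, ?, ?, ?, ?)", rows)


def accuracy_by(conn, *columns):
    """Accuracy and sample count per metadata slice, worst slice first."""
    allowed = {"customer", "camera", "time_of_day"}
    if not columns or not set(columns) <= allowed:
        raise ValueError(f"columns must be chosen from {sorted(allowed)}")
    cols = ", ".join(columns)  # safe to interpolate: checked against allow-list
    query = (
        f"SELECT {cols}, AVG(correct), COUNT(*) FROM eval_job_001 "
        f"GROUP BY {cols} ORDER BY AVG(correct)"
    )
    return conn.execute(query).fetchall()


slices = accuracy_by(conn, "camera", "time_of_day")
worst = slices[0]  # the outdoor/dawn slice in this toy data
```

Returning the sample count next to the accuracy matters: a slice with two rows and 0% accuracy is a lead to investigate, not yet a conclusion.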

For CV, visualization is essential. Quantitative results show general performance. Qualitative visualization shows why – which images the model misclassified, what patterns emerge in failures, where the model confidently predicts wrong answers.
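
A cheap way to decide which images to look at first is to pull the confident mistakes to the top of the review queue. A minimal sketch, assuming each prediction record carries a label, a predicted class, and a confidence score (the field names, the 0.8 threshold, and the records are hypothetical):

```python
def confident_errors(predictions, min_confidence=0.8):
    """Wrong predictions the model was sure about, most confident first."""
    errors = [
        p
        for p in predictions
        if p["predicted"] != p["label"] and p["confidence"] >= min_confidence
    ]
    return sorted(errors, key=lambda p: p["confidence"], reverse=True)


predictions = [
    {"unit_id": "u1", "label": "helmet", "predicted": "helmet", "confidence": 0.97},
    {"unit_id": "u2", "label": "no_helmet", "predicted": "helmet", "confidence": 0.93},
    {"unit_id": "u3", "label": "helmet", "predicted": "no_helmet", "confidence": 0.55},
    {"unit_id": "u4", "label": "helmet", "predicted": "no_helmet", "confidence": 0.88},
]

# Ids to open in the image viewer first.
review_queue = [p["unit_id"] for p in confident_errors(predictions)]
```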

This combination of quantitative metrics and qualitative inspection gives engineers the full picture. Numbers tell you whether performance improved. Visualizations tell you whether it improved for the right reasons.

Putting It Into Practice

This framework scales across different model types – detection, classification, segmentation, vision-language models, etc. The principles remain consistent even as the specific implementations vary.

The key is getting user stories and requirements right upfront. An evaluation system only delivers value if people actually use it. If the interface is clunky, if results take too long, if insights are buried in tables that require manual analysis, adoption drops and teams revert to ad-hoc testing.

When adapting this framework, focus on the pain points your team faces most:

- If parallelization is critical, invest in robust job scheduling

- If edge case discovery is the bottleneck, build better data mining tools

- If qualitative analysis matters most, prioritize visualization features

The goal isn’t perfection. It’s building something good enough to use today that can evolve as your needs change.