Taalas Inc., a startup that develops chips optimized to run specific artificial intelligence models, has raised $169 million in funding.

Reuters reported today that the company’s backers include Quiet Capital, Fidelity and prominent semiconductor investor Pierre Lamond. The round brings Taalas’ total outside funding to more than $200 million.

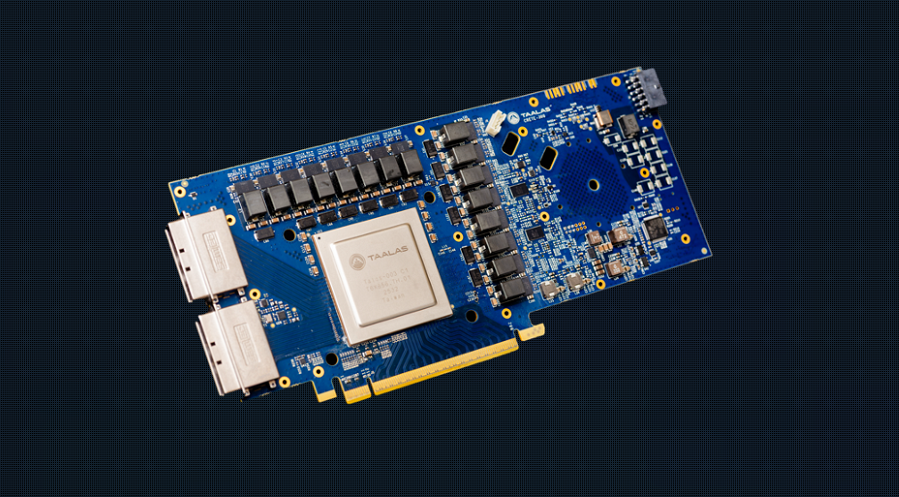

The company’s first product is a chip optimized to run the open-source Llama 3.1 8B language model. Taalas says that the chip can generate 17,000 output tokens per second, or 73 times more than Nvidia Corp.’s H200 graphics card. Moreover, the processor provides that performance while using one-tenth the power.
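Those two figures can be combined with a little arithmetic. The sketch below is a back-of-the-envelope calculation, not a benchmark: it assumes the 73x speedup and the one-tenth power figure both compare against the same H200 baseline, which the company's claim does not spell out. The constant and function names are illustrative.

```python
# Figures quoted by Taalas in the article.
TAALAS_TOKENS_PER_SEC = 17_000
SPEEDUP_VS_H200 = 73
# "1/10th the power", expressed as a divisor. Assumption: same H200 baseline.
POWER_DIVISOR = 10


def implied_baseline_tokens_per_sec(tokens_per_sec: int, speedup: int) -> float:
    """Throughput the comparison card would need for the speedup claim to hold."""
    return tokens_per_sec / speedup


def tokens_per_joule_gain(speedup: int, power_divisor: int) -> int:
    """More tokens per second at a fraction of the power multiplies the two gains."""
    return speedup * power_divisor


baseline = implied_baseline_tokens_per_sec(TAALAS_TOKENS_PER_SEC, SPEEDUP_VS_H200)
gain = tokens_per_joule_gain(SPEEDUP_VS_H200, POWER_DIVISOR)

print(f"Implied H200 throughput: {baseline:.0f} tokens/s")
print(f"Implied tokens-per-joule gain: {gain}x")
```

Under those assumptions, the claim implies an H200 baseline of roughly 233 tokens per second and about 730 times more tokens per unit of energy.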

Tailoring a chip to a specific AI model improves efficiency partly because it allows engineers to do away with redundant components. An off-the-shelf graphics card, for example, might include more DRAM than an AI model can use. A custom chip can swap the unused RAM for extra transistors that enable the model to run faster.

Developing a fully custom processor is prohibitively expensive for most AI projects. According to Taalas, its engineers lower costs by customizing only two of the more than 100 layers that make up its chips.

In most processors, only a handful of layers contain transistors. The others host supporting components such as the wires that move data between different chip sections. Usually, the layers that contain the wiring are located above the transistors.

The custom layers in Taalas’ chips feature what it describes as a mask ROM recall fabric. Mask ROM is a type of read-only memory whose contents are fixed when the chip is manufactured. From there, the data can be read but not edited.

According to Next Platform, each mask ROM recall fabric module can store four bits. Taalas’ architecture uses only a single transistor to process that data. The transistor carries out matrix multiplications, the mathematical calculations that AI models use to make decisions.
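As a rough illustration of the idea, the Python sketch below multiplies a fixed table of 4-bit weights by an input vector. It is a software analogy only, not Taalas' circuit design: the weight values, the signed 4-bit range and the names `ROM_WEIGHTS` and `matvec` are assumptions made for the example.

```python
from typing import List, Sequence, Tuple

# Hypothetical weights, fixed ahead of time like data baked into a mask ROM.
# Tuples are immutable, so the table can be read but not edited.
ROM_WEIGHTS: Tuple[Tuple[int, ...], ...] = (
    (3, -2, 7, 0),
    (-8, 1, 4, 5),
)

# Assumption: each weight is a signed 4-bit integer (range -8 to 7).
FOUR_BIT_MIN, FOUR_BIT_MAX = -8, 7


def matvec(weights: Sequence[Sequence[int]], activations: Sequence[int]) -> List[int]:
    """Multiply a matrix of 4-bit weights by a vector of input activations."""
    outputs = []
    for row in weights:
        if len(row) != len(activations):
            raise ValueError("each weight row must match the input length")
        if any(not FOUR_BIT_MIN <= w <= FOUR_BIT_MAX for w in row):
            raise ValueError("weight does not fit in four bits")
        # One output value is the sum of weight * input products for the row.
        outputs.append(sum(w * a for w, a in zip(row, activations)))
    return outputs


outputs = matvec(ROM_WEIGHTS, [1, 2, 3, 4])
print(outputs)
```

In a language model, each layer repeats this multiply-and-sum pattern across much larger matrices, which is why keeping the weights physically next to the arithmetic can pay off.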

One benefit of Taalas’ design is that it removes the need for high-bandwidth memory modules. Those are integrated memory chips that a graphics card uses to store AI models’ data. Moving information to and from HBM takes a relatively long time, which slows down processing. According to Taalas, its approach avoids that delay while also doing away with the various auxiliary components needed to support a chip’s HBM modules.
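A simple estimate shows why memory traffic matters. When a model generates text for a single request, the chip has to read roughly every weight once per output token, so memory bandwidth caps throughput. The sketch below assumes about 8 billion parameters stored as 16-bit values and an HBM bandwidth of 4.8 terabytes per second, Nvidia's published figure for the H200. These inputs are assumptions rather than numbers from Taalas, and batching multiple requests can raise a card's aggregate throughput above this per-stream ceiling.

```python
# Assumed inputs for the estimate; none of these come from Taalas.
PARAMS = 8e9                          # approximate parameter count of an 8B model
BYTES_PER_PARAM = 2                   # 16-bit weights
HBM_BANDWIDTH_BYTES_PER_SEC = 4.8e12  # 4.8 TB/s


def max_tokens_per_sec(params: float, bytes_per_param: float, bandwidth: float) -> float:
    """Upper bound on single-stream tokens/s if every weight is read once per token."""
    model_bytes = params * bytes_per_param
    return bandwidth / model_bytes


ceiling = max_tokens_per_sec(PARAMS, BYTES_PER_PARAM, HBM_BANDWIDTH_BYTES_PER_SEC)
print(f"Bandwidth-bound ceiling: {ceiling:.0f} tokens/s per stream")
```

With those inputs, the ceiling works out to about 300 tokens per second for one request stream. A design that keeps weights on the chip, next to the logic that uses them, is not subject to that particular limit.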

The company is reportedly developing a new chip that can run a Llama model with 20 billion parameters. It’s expected to be ready this summer. Taalas plans to follow up the chip with an even more advanced processor, HC2, that will be capable of running a frontier model.

Image: Taalas
