Ever tried extracting a polygonal mesh from a 3D discrete scalar field?

No?

Well, back in 1987, two researchers at General Electric did, and they ended up inventing the Marching Cubes algorithm. It’s one of the reasons doctors can turn medical scans into 3D models, a capability that has helped save countless lives.

That’s the power of an algorithm.

Whenever you instruct a machine to solve a problem with code, you’re designing a procedure to transform bits into meaning. Some algorithms are fast. Some are elegant. And some are so strange, they feel like sorcery.

In this story, we’re diving into ten of the weirdest, most brilliant algorithms ever devised — ones that help search billions of lines of code in milliseconds, generate infinite maps from nothing, and turn quantum weirdness into practical logic.

# 1 – Wave Function Collapse

One of the weirdest things in science is the double-slit experiment: particles behave like waves when you’re not looking, but the moment you observe them, they snap into place like particles. It’s bizarre — and surprisingly useful.

This idea of a “wave function collapsing” sounds abstract, but it inspired a very practical algorithm. Imagine you’re designing a side-scrolling video game map. You want the world to feel handcrafted but also go on forever. You can’t draw an infinite map by hand, so instead you generate it procedurally.

In the beginning, each tile of the map is like a “wave”: it isn’t anything specific yet, just a set of possibilities. As the player moves forward, observing the world, the algorithm “collapses” that uncertainty. It picks the cell with the fewest remaining options (the lowest “entropy”), settles it on one specific tile, and then propagates the consequences: every neighboring cell drops any tile that would break the rules you’ve set. Roads connect, rivers flow where they should, and everything feels like it was intentionally designed.

It’s randomness with a purpose, all without a single piece of generative AI. It’s just weird, beautiful logic.
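To make the collapse-and-propagate loop concrete, here is a minimal sketch on a one-dimensional strip, assuming a hypothetical tile set (`water`, `sand`, `grass`, `forest`) and hypothetical adjacency rules. Real Wave Function Collapse implementations usually work on 2D or 3D grids, often learn their rules from a sample image, and may need to backtrack when propagation hits a contradiction; this toy version only shows the core idea of observing the lowest-entropy cell and then propagating constraints.

```python
import random

# Hypothetical tiles for a 1D side-scrolling strip. Each pair (a, b) means the
# two tiles may sit next to each other, in either order.
TILES = ["water", "sand", "grass", "forest"]
RULES = {("water", "water"), ("water", "sand"), ("sand", "sand"),
         ("sand", "grass"), ("grass", "grass"), ("grass", "forest"),
         ("forest", "forest")}

def compatible(a, b):
    return (a, b) in RULES or (b, a) in RULES

def collapse_strip(width, seed=None):
    rng = random.Random(seed)
    # Every cell starts in "superposition": all tiles are still possible.
    cells = [set(TILES) for _ in range(width)]

    def propagate(start):
        # Strip options from neighbours that no longer have a compatible partner,
        # and keep spreading until nothing changes.
        stack = [start]
        while stack:
            i = stack.pop()
            for j in (i - 1, i + 1):
                if 0 <= j < width:
                    allowed = {t for t in cells[j]
                               if any(compatible(t, s) for s in cells[i])}
                    if not allowed:
                        raise RuntimeError("contradiction: no tile fits here")
                    if allowed != cells[j]:
                        cells[j] = allowed
                        stack.append(j)

    while True:
        undecided = [i for i in range(width) if len(cells[i]) > 1]
        if not undecided:
            break
        # "Observe" the cell with the lowest entropy (fewest options left).
        i = min(undecided, key=lambda k: len(cells[k]))
        cells[i] = {rng.choice(sorted(cells[i]))}
        propagate(i)

    return [next(iter(c)) for c in cells]

print(" ".join(collapse_strip(12, seed=1)))
```

Because every tile is allowed next to itself and the rules form a simple chain, this particular strip never dead-ends; richer 2D rule sets can, which is why the contradiction check is kept.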

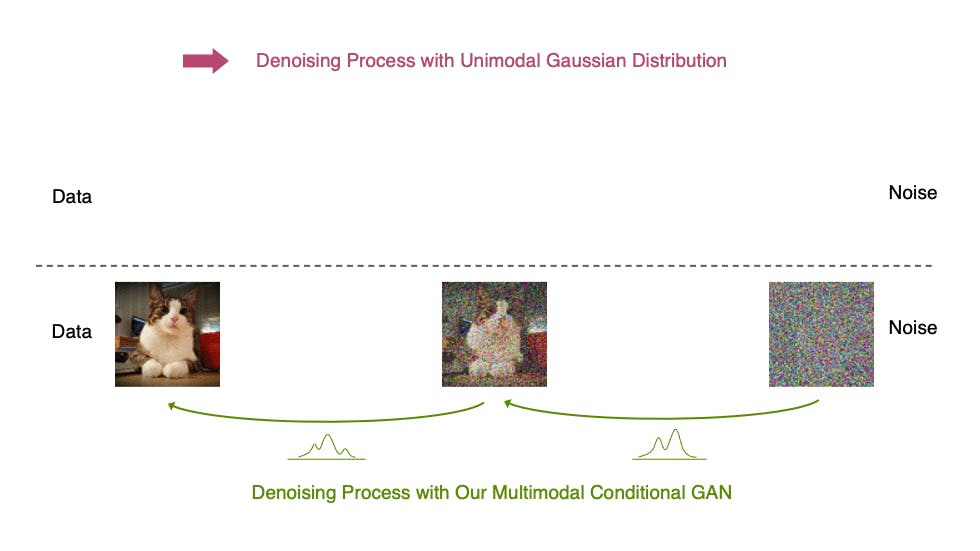

# 2 – The Diffusion Model

AI is weird. Really weird.

Take diffusion models — the tech behind image generators like DALL·E and Stable Diffusion. They’re based on an idea from thermodynamics: diffusion, where particles naturally spread from areas of higher concentration to areas of lower concentration.

In machine learning, that process gets flipped.

Instead of going from order to chaos, the algorithm starts with pure noise, like TV static, and learns how to gradually refine it into a meaningful image, like a picture of a cat. You begin with randomness (high entropy) and end with a crisp, coherent output (low entropy).

But you need a trained model to do this well, and a diffusion model is built around two processes.

First, during training, real images are gradually corrupted with noise in a forward process until they become unrecognizable. Then the model learns to reverse that process, predicting and removing a little of the noise at each step until a coherent image re-emerges.
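The forward (noising) process has a convenient closed form: you can jump straight to any step t by mixing the clean image with Gaussian noise. The sketch below assumes the linear noise schedule from the original DDPM paper (1e-4 to 0.02 over 1,000 steps) and a hypothetical 4-pixel “image”. For the reverse direction it cheats: instead of a trained network predicting the noise, it is handed the true noise, which shows exactly what the network is trained to estimate. Real samplers also walk back many small steps rather than in one shot.

```python
import math
import random

# Linear noise schedule over T steps; these values (1e-4 to 0.02, T = 1000)
# follow the original DDPM paper but are an assumption for this sketch.
T = 1000
betas = [1e-4 + (0.02 - 1e-4) * t / (T - 1) for t in range(T)]

# alpha_bar[t] is the product of (1 - beta) up to step t:
# roughly, how much of the original signal survives at that step.
alpha_bar = []
running = 1.0
for beta in betas:
    running *= 1.0 - beta
    alpha_bar.append(running)

def add_noise(x0, t, rng):
    """Forward process: jump straight to step t by sampling q(x_t | x_0)."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    a = alpha_bar[t]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return xt, eps

def estimate_x0(xt, eps_pred, t):
    """Invert the forward step given a prediction of the noise."""
    a = alpha_bar[t]
    return [(x - math.sqrt(1.0 - a) * e) / math.sqrt(a) for x, e in zip(xt, eps_pred)]

rng = random.Random(0)
image = [0.9, -0.5, 0.1, 0.7]  # a hypothetical 4-pixel grayscale "image"
for t in (0, 250, 999):
    xt, eps = add_noise(image, t, rng)
    # A trained network would output eps_pred; here we cheat with the true noise.
    recovered = estimate_x0(xt, eps, t)
    print(t, [round(v, 2) for v in xt], [round(v, 2) for v in recovered])
```

By the last step almost none of the original signal survives, which is why generation can start from pure noise.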

Do this across millions of captioned images, and you get a model that can dream up new images from scratch. Cats in space. Ancient Rome in Pixar style. Hyperrealistic avocado chairs. You name it.

It’s compute-heavy. But it doesn’t stop at images. Diffusion is already reshaping how we generate music, audio, and video.

# 3 – Simulated Annealing

One of the most frustrating parts of programming is that there’s rarely one right solution — just a sea of okay ones, and a few great ones. Kind of like organizing an Amazon warehouse: dozens of ways to do it, but only a few that actually make sense at scale.

Simulated annealing borrows its name from metallurgy, where metals are heated and then cooled slowly so their atoms settle into a more orderly, low-defect structure. The same concept applies to optimization: you’re trying to find the best solution in a chaotic landscape full of local peaks and valleys.

Imagine you’re searching for the highest peak in a mountain range. A basic hill-climbing algorithm will get you stuck on the first bump that looks promising. But simulated annealing is smarter. It starts with a high “temperature,” which means it explores freely, sometimes even accepting worse moves to escape local traps. As the temperature gradually drops, it gets pickier, until it accepts almost nothing but improvements.
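Here is a minimal sketch of that idea, assuming a hypothetical one-dimensional “mountain range” `height(x) = sin(3x) + 0.3x` on [0, 10], which has several local peaks. Worse moves are accepted with probability exp(Δ/T) (the Metropolis rule commonly used in simulated annealing), and the temperature cools geometrically. The step size, schedule, and step count are illustrative assumptions, not tuned values.

```python
import math
import random

def height(x):
    """Hypothetical 'mountain range' on [0, 10] with several local peaks."""
    return math.sin(3 * x) + 0.3 * x

def accept_probability(delta, temperature):
    # Better moves are always taken; worse ones with probability exp(delta / T).
    if delta >= 0:
        return 1.0
    return math.exp(delta / temperature)

def anneal(start, steps=20_000, t_start=2.0, t_end=1e-3, seed=None):
    rng = random.Random(seed)
    x, best_x = start, start
    for k in range(steps):
        # Geometric cooling from t_start down to t_end.
        temperature = t_start * (t_end / t_start) ** (k / (steps - 1))
        # Propose a random nearby point, clamped to the range [0, 10].
        candidate = min(10.0, max(0.0, x + rng.gauss(0.0, 0.5)))
        delta = height(candidate) - height(x)
        if rng.random() < accept_probability(delta, temperature):
            x = candidate
            if height(x) > height(best_x):
                best_x = x  # remember the best point seen so far
    return best_x

best = anneal(start=0.5, seed=42)
print(round(best, 2), round(height(best), 2))
```

Starting at the lowest local peak, plain hill-climbing would stay put; the early high-temperature phase is what lets the search wander to higher ground.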

The trade-off is exploration vs. exploitation. And it’s not just useful in code — it’s a good metaphor for learning to code, too. Early on, you bounce between languages, tools, and frameworks. But eventually, you cool down, lock in, and specialize.

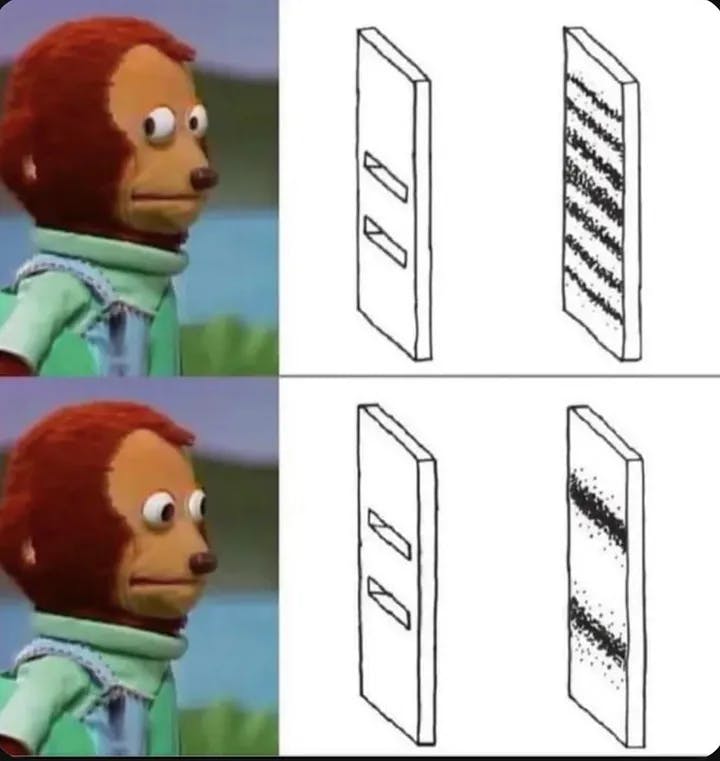

# 4 – Sleep Sort

You can’t talk about algorithms without bringing up sorting, and perhaps the most absurdly clever (and completely useless) sorting algorithm ever devised is Sleep Sort.

Traditional sorting algorithms, like quicksort or mergesort, use divide-and-conquer strategies to recursively break arrays into subarrays and sort them efficiently. But somewhere on 4chan, a mad genius proposed a completely different approach.

Here’s how it works: for each number in the array, start a new thread. Each thread sleeps for a number of milliseconds equal to its value, then prints the number when it wakes up. Because smaller numbers sleep for less time, they get printed earlier — resulting in a sorted output.

The clever part? It skips all the usual logic and turns the operating system’s thread scheduler into the sorting engine. No explicit comparisons. No recursion. Just sleep and print.
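A minimal Python sketch of the idea, assuming one thread per value and a sleep of `value * unit` seconds; the 0.1-second `unit` is an arbitrary choice to keep gaps large enough for the scheduler. Instead of printing, each thread appends to a shared list under a lock so the result can be returned.

```python
import threading
import time

def sleep_sort(values, unit=0.1):
    """Toy Sleep Sort: each value waits value * unit seconds, then records itself.

    Negative values are not supported: time.sleep() raises ValueError for them.
    """
    result = []
    lock = threading.Lock()

    def worker(v):
        time.sleep(v * unit)  # smaller values wake up first...
        with lock:
            result.append(v)  # ...so they are appended first

    threads = [threading.Thread(target=worker, args=(v,)) for v in values]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait until the slowest sleeper is done
    return result

print(sleep_sort([3, 1, 4, 2]))
```

The total running time is set by the largest value, not by how many values there are, and correctness depends entirely on the scheduler waking threads in the right order.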

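But it’s wildly impractical. It can’t handle negative numbers (you can’t sleep for negative time), it can print values that are very close together in the wrong order, and large values make it painfully slow: a single 1,000,000 means waiting more than 16 minutes. It also creates one thread per number, and it depends on sleep timing, which isn’t very precise. Sleep Sort is both hilarious and useless, a perfect example of how clever doesn’t always mean useful.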

# 5 – BogoSort

BogoSort is a joke algorithm: it randomly shuffles an array over and over until, by pure luck, the result is sorted. It’s wildly inefficient — like trying to guess your way to the right answer by chance.
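The whole algorithm fits in a few lines. This sketch uses a seeded `random.Random` so runs are reproducible (an assumption for illustration) and counts the shuffles. For n distinct elements, a shuffle lands on the sorted order with probability 1/n!, so the expected number of shuffles grows like n!.

```python
import random

def is_sorted(xs):
    return all(a <= b for a, b in zip(xs, xs[1:]))

def bogosort(xs, seed=None):
    """Shuffle until sorted. Returns (sorted_list, number_of_shuffles)."""
    rng = random.Random(seed)
    xs = list(xs)  # don't mutate the caller's list
    shuffles = 0
    while not is_sorted(xs):
        rng.shuffle(xs)
        shuffles += 1
    return xs, shuffles

result, shuffles = bogosort([3, 1, 2, 5, 4], seed=0)
print(result, "after", shuffles, "shuffles")
```

Five elements are still manageable; at 12 elements you would expect roughly 479 million shuffles.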

Now imagine combining this with the idea of quantum mechanics and the multiverse. In theory, if all possible outcomes exist across infinite parallel universes, then there’s some universe where your array is already sorted.

The technology isn’t quite there yet, but a “Quantum BogoSort” would work by creating all those possibilities at once and then instantly collapsing into the universe where the array is sorted, essentially letting quantum randomness do the work for you.

Of course, this is sci-fi, not computer science. We can’t observe or collapse the multiverse at will. But it’s a playful thought experiment about brute-force randomness taken to its logical (and absurd) extreme.

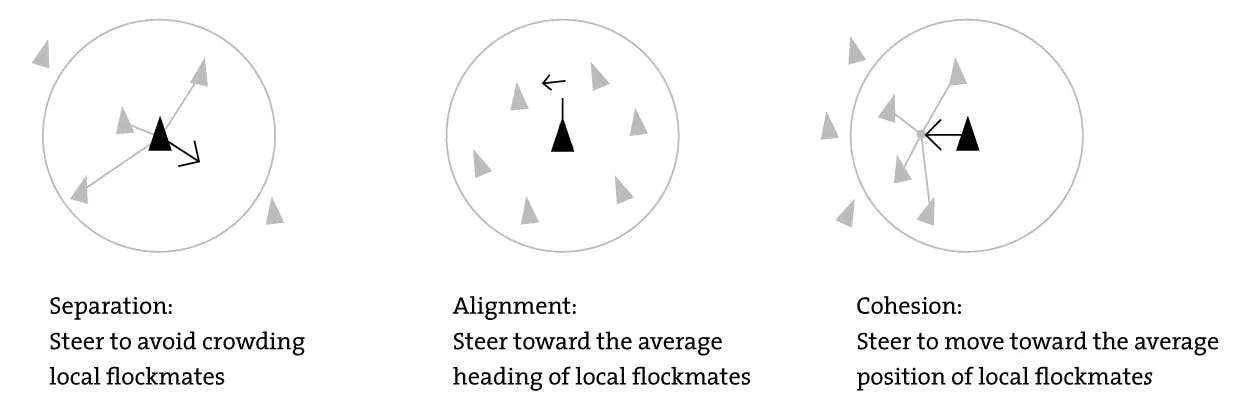

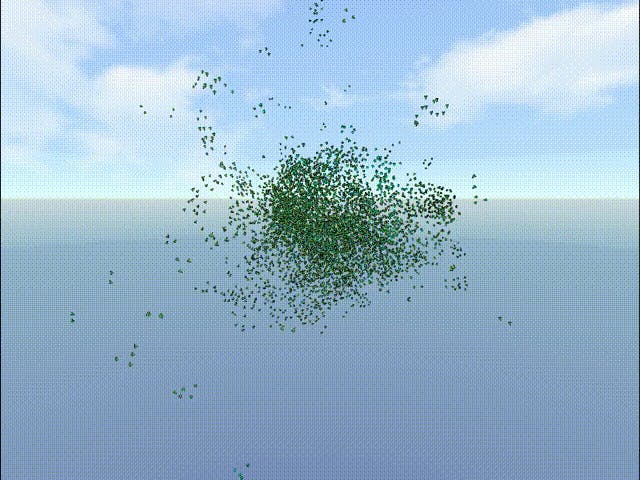

# 6 – Boids

And here’s my favorite one. What I find really cool about algorithms is how they can sometimes mirror nature. Take Craig Reynolds’ Boids program from 1986 — it was one of the first artificial life simulations and it mimics how birds flock. But what’s mind-blowing is how little code it takes to get something that feels so alive.

Each bird (or “boid”) follows just three rules: avoid crashing into neighbors (Separation), align with their direction (Alignment), and move toward the local group’s center (Cohesion).

That’s it. No one’s in charge. But when you simulate a bunch of these simple behaviors together, you get mesmerizing, organic patterns that shift and flow like a real flock.

The beauty here isn’t in the code — it’s in what emerges from it. These complex group dynamics don’t have to be explicitly programmed. They just happen. It’s like stumbling on nature’s cheat code for decentralized coordination.
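Here is how little code the three rules need. This is a minimal 2D sketch; the neighbor radius, separation radius, rule weights, and speed cap are hypothetical tuning constants, and refinements from Reynolds’ original model, such as a limited field of view, are left out. Every boid reads the old state of the flock and the whole flock updates at once.

```python
import math
import random

# Hypothetical tuning constants; Reynolds' rules don't prescribe these values.
NEIGHBOR_RADIUS = 5.0    # how far a boid can "see"
SEPARATION_RADIUS = 1.0  # closer than this feels crowded
W_SEP, W_ALI, W_COH = 0.05, 0.05, 0.01
MAX_SPEED = 1.0

def step(boids):
    """Advance the flock one tick. Each boid is a tuple (x, y, vx, vy)."""
    updated = []
    for i, (x, y, vx, vy) in enumerate(boids):
        sep_x = sep_y = ali_x = ali_y = coh_x = coh_y = 0.0
        neighbors = 0
        for j, (ox, oy, ovx, ovy) in enumerate(boids):
            if i == j:
                continue
            dx, dy = ox - x, oy - y
            dist = math.hypot(dx, dy)
            if dist < NEIGHBOR_RADIUS:
                neighbors += 1
                ali_x += ovx  # Alignment: average heading
                ali_y += ovy
                coh_x += ox   # Cohesion: average position
                coh_y += oy
                if dist < SEPARATION_RADIUS:
                    sep_x -= dx  # Separation: push away from close boids
                    sep_y -= dy
        if neighbors:
            vx += (W_ALI * (ali_x / neighbors - vx)
                   + W_COH * (coh_x / neighbors - x) + W_SEP * sep_x)
            vy += (W_ALI * (ali_y / neighbors - vy)
                   + W_COH * (coh_y / neighbors - y) + W_SEP * sep_y)
        speed = math.hypot(vx, vy)
        if speed > MAX_SPEED:  # cap speed so nobody shoots off
            vx, vy = vx / speed * MAX_SPEED, vy / speed * MAX_SPEED
        updated.append((x + vx, y + vy, vx, vy))
    return updated

def alignment(flock):
    """1.0 when every boid heads the same way; near 0 for random headings."""
    mean_vx = sum(b[2] for b in flock) / len(flock)
    mean_vy = sum(b[3] for b in flock) / len(flock)
    mean_speed = sum(math.hypot(b[2], b[3]) for b in flock) / len(flock)
    return math.hypot(mean_vx, mean_vy) / mean_speed

rng = random.Random(0)
flock = [(rng.uniform(0, 10), rng.uniform(0, 10), rng.uniform(-1, 1), rng.uniform(-1, 1))
         for _ in range(30)]
print("alignment before:", round(alignment(flock), 2))
for _ in range(200):
    flock = step(flock)
print("alignment after:", round(alignment(flock), 2))
```

Nothing in `step` mentions a flock-wide direction. Any shared heading that shows up in `alignment` comes only from boids reacting to their local neighbors.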

# 7 – Shor’s Algorithm

The ability to lock your digital mailbox and sign your letters with a unique signature, which is the job of public-key cryptography, rests in large part on one important idea: the difficulty of factoring large numbers.

The security of RSA, one of the most widely used public-key schemes, relies on a simple mathematical reality: multiplying two large prime numbers together is easy, but recovering the original primes from their product, a problem known as prime factorization, is extremely difficult and time-consuming.

In fact, it’s so difficult that, by some estimates, it could take your laptop hundreds of trillions of years to brute-force a real-world key, unless, of course, we start using quantum computers. A large enough quantum computer running Shor’s Algorithm could solve the integer factorization problem superpolynomially faster than the best known classical algorithms.

Shor’s Algorithm breaks large numbers down into their prime factors in polynomial time, far faster than conventional methods. The goal, factoring, is simple to state, but how the algorithm reaches it is where things get truly weird.

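At the heart of Shor’s Algorithm are quantum-mechanical concepts like qubits, superposition, entanglement, and interference. It’s often described as trying every answer in parallel, but the real trick is subtler: the algorithm turns factoring into the problem of finding the period of a repeating sequence, then uses a quantum Fourier transform so that wrong answers cancel out and the correct period stands out. That’s something classical computers can’t come close to doing efficiently.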
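The classical half of Shor’s Algorithm is short enough to show. The sketch below follows the standard reduction: pick a random `a`, find the period `r` of `a**x mod n`, and if `r` is even and `a**(r/2)` isn’t −1 mod n, a gcd reveals a factor. The one step a quantum computer would do, period finding, is replaced here by a brute-force loop, so this version is exponentially slow and only illustrates the structure. It assumes `n` is an odd composite that isn’t a prime power.

```python
import math
import random

def find_period(a, n):
    """Smallest r > 0 with a**r % n == 1, by brute force.

    This is the step a quantum computer would replace with the quantum
    Fourier transform; classically it takes exponential time.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def shor_classical(n, seed=None):
    """Factor an odd composite n (not a prime power) via period finding."""
    rng = random.Random(seed)
    while True:
        a = rng.randrange(2, n)
        g = math.gcd(a, n)
        if g > 1:
            return g, n // g  # lucky guess: a already shares a factor with n
        r = find_period(a, n)
        if r % 2 == 1:
            continue          # need an even period; try another a
        y = pow(a, r // 2, n)
        if y == n - 1:
            continue          # a**(r/2) = -1 mod n gives no information
        p = math.gcd(y - 1, n)  # y*y = 1 mod n with y != +-1, so this splits n
        return p, n // p

for n in (15, 21, 35):
    print(n, shor_classical(n, seed=0))
```

The numbers 21 and 35 are the same ones mentioned below: trivial for this classical loop, but still a challenge for today’s quantum hardware.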

While the algorithm itself is legitimate, its real-world application is still in its early stages. In fact, the largest number ever factored with Shor’s Algorithm on real quantum hardware is just 21. In one experiment, IBM’s Q System One failed to factor a number as simple as 35.

That said, quantum breakthroughs are happening. Recently, a Chinese team managed to factor a much larger number using a quantum computer, but they used an algorithm that isn’t expected to scale to the key sizes used in practice, so it’s not yet practical for general use.

However, if quantum computers get powerful enough and someone figures out how to run these algorithms on truly large numbers, expect serious disruption in the world of cybersecurity. Once quantum computers can run Shor’s Algorithm at scale, today’s widely used public-key protocols, such as RSA and elliptic-curve cryptography, could be at risk.

# 8 – Marching Cubes

At the beginning of this story, we mentioned the Marching Cubes algorithm — but it’s worth diving into, because it was a major turning point for visualizing 3D data, especially in medicine.

Imagine you have a 3D scan of the human body, like from an MRI. The MRI doesn’t give you a 3D model, but a giant cube of numbers: a 3D scalar field. Each point in that field holds a value that represents something like tissue density or signal intensity. These values are sampled on a discrete grid, but we need to turn them into something visual: a surface, a shape, something that a computer can draw.

This is where Marching Cubes comes in. The algorithm takes this 3D field and marches through it one small cube at a time. Each cube is formed by eight neighboring data points in the field.

Now here’s the clever bit: each of those eight points is either above or below a threshold (say, the value where soft tissue gives way to bone). So each corner gets assigned a 0 or a 1, depending on whether it’s inside or outside the surface you’re trying to extract.

That gives you an 8-bit number, one of 256 possible combinations for each little cube. For each combination, a specific pattern of triangles has been precomputed and stored in a lookup table, and these triangles approximate the surface passing through that cube. The exact position of each triangle vertex along a cube edge is found by linearly interpolating between the values at that edge’s two corners. So instead of calculating complicated geometry every time, the algorithm mostly just looks it up.

By repeating this process, marching through cube after cube, plugging in values, and looking up shapes, the algorithm gradually builds up a full 3D mesh. What you get is a surface made of many small triangles that traces the boundary between different materials in the scan, like bone and soft tissue.
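The two steps that make the lookup possible, packing the eight corners into a cube index and interpolating a vertex along an edge, fit in a few lines. This is a partial sketch: the corner ordering and the “below the threshold counts as inside” convention are common choices but assumptions here, the toy scalar field is a hypothetical sphere (distance from the grid center), and the 256-entry triangle table is omitted. `TRI_TABLE` in the comment is a placeholder name, not a real import.

```python
import math

# Corner offsets of one grid cube; this ordering is a common convention (assumed).
CORNERS = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0),
           (0, 0, 1), (1, 0, 1), (1, 1, 1), (0, 1, 1)]

def cube_index(field, x, y, z, iso):
    """Pack the inside/outside state of the cube's 8 corners into one byte (0-255)."""
    index = 0
    for bit, (dx, dy, dz) in enumerate(CORNERS):
        if field[x + dx][y + dy][z + dz] < iso:  # corner counts as "inside"
            index |= 1 << bit
    return index

def edge_vertex(p1, p2, v1, v2, iso):
    """Place a triangle vertex where the field crosses iso along one cube edge."""
    t = (iso - v1) / (v2 - v1)
    return tuple(a + t * (b - a) for a, b in zip(p1, p2))

# Toy scalar field: distance from the centre of an 8x8x8 grid (a sphere at iso=3).
N = 8
field = [[[math.dist((x, y, z), (3.5, 3.5, 3.5)) for z in range(N)]
          for y in range(N)] for x in range(N)]

surface_cubes = 0
for x in range(N - 1):
    for y in range(N - 1):
        for z in range(N - 1):
            idx = cube_index(field, x, y, z, iso=3.0)
            # 0 = fully outside, 255 = fully inside: no surface passes through.
            if idx not in (0, 255):
                surface_cubes += 1  # a full version would emit TRI_TABLE[idx] here
print("cubes crossed by the surface:", surface_cubes)
```

Only the cubes with a mixed index ever produce triangles, which is why the algorithm stays fast even on large scans.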

This was groundbreaking in the 1980s because it turned flat MRI or CT scan slices into actual 3D models that doctors could rotate, zoom in on, and analyze. And even today, Marching Cubes is still used in many fields — from medical imaging and geology to procedural generation in games.

# 9 – Practical Byzantine Fault Tolerance

In modern computing, we’re often working with distributed systems — networks of computers spread across the cloud. That brings us to one of the most famous problems in distributed computing: the Byzantine Generals Problem.

Picture this: several generals from the Byzantine army are surrounding a city. They need to coordinate and attack at the same time to win. But they can only communicate by sending messages, and some of those generals might be traitors trying to sabotage the plan. Maybe one decides to sleep in, or worse — lies about when to attack. If even one general fails or acts maliciously, the whole strategy could fall apart.

Computers face the same challenge. In a distributed system, some machines might crash, be slow, or even get hacked. How can the rest of the network agree on what to do when they can’t trust everyone?

That’s where consensus algorithms like PBFT (Practical Byzantine Fault Tolerance) come in. PBFT is designed to handle these kinds of failures: in a network of n = 3f + 1 nodes, it keeps working correctly as long as no more than f of them, fewer than one-third, are faulty or malicious. It works in three phases. First, a designated leader, the primary, proposes an action by broadcasting a “pre-prepare” message. Next, every node broadcasts a “prepare” message to all the others, confirming the proposal it received. Once a node has collected matching messages from a quorum of 2f + 1 nodes (just over two-thirds of the network), it broadcasts a “commit” message. When it has seen a quorum of commits, it executes the action.
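The quorum arithmetic is the heart of PBFT, so here is a deliberately simplified toy model of one round’s prepare and commit phases. It assumes an honest primary (node 0), perfect message delivery, and Byzantine nodes that simply vote for a conflicting value. It leaves out signatures, sequence numbers, views, and view changes, so treat it as an illustration of the counting, not an implementation of the protocol.

```python
from collections import Counter

def max_faulty(n):
    """Largest f with n >= 3f + 1: how many Byzantine nodes PBFT can tolerate."""
    return (n - 1) // 3

def quorum(n):
    """Matching messages a node waits for in each phase: 2f + 1."""
    return 2 * max_faulty(n) + 1

def run_round(n, proposal, byzantine):
    """Toy model of one PBFT round's prepare and commit phases.

    Simplifying assumptions: node 0 is an honest primary that pre-prepares
    `proposal`, every message reaches every node, and each Byzantine node
    simply votes for a conflicting value. No signatures, views or view changes.
    Returns the honest nodes that execute `proposal`.
    """
    q = quorum(n)
    honest = [i for i in range(n) if i not in byzantine]

    # Prepare phase: each node broadcasts the value it claims it was sent.
    prepares = Counter("conflicting" if i in byzantine else proposal for i in range(n))
    prepared = [i for i in honest if prepares[proposal] >= q]

    # Commit phase: prepared honest nodes broadcast commit; Byzantine nodes lie again.
    commits = Counter([proposal] * len(prepared) + ["conflicting"] * len(byzantine))
    return {i for i in prepared if commits[proposal] >= q}

print(run_round(4, "transfer $10", byzantine={3}))     # prints {0, 1, 2}
print(run_round(4, "transfer $10", byzantine={2, 3}))  # prints set()
```

With one traitor out of four nodes, the three honest nodes still reach a quorum of three. With two traitors, no quorum forms and the round stalls instead of executing something unsafe.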

PBFT and its many descendants underpin permissioned blockchains and other distributed systems that must stay consistent even when some participants misbehave.

# 10 – Boyer-Moore

And finally, that brings us to an old-school algorithm that quietly powers some of the fastest tools we use today, like grep. It’s called the Boyer-Moore string search, and it recently blew my mind because of how counterintuitive it is: the longer the pattern you’re searching for, the faster it tends to run.

Here’s how it works. Most basic search algorithms go left to right, checking every character one by one. But Boyer-Moore flips that around — it scans from right to left within the search pattern, and it uses two clever tricks to skip big chunks of text. Imagine you’re looking for the word “needle” in a huge block of text.

1. Bad character rule:

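If you’re comparing the last letter of “needle” against the text and find a “z”, a letter that appears nowhere in “needle”, then no alignment of the pattern that overlaps that “z” can possibly match. So instead of checking character by character, the algorithm slides the pattern completely past the “z”: a jump of 6 positions, the full length of “needle”. If the mismatched character does appear somewhere in the pattern, it shifts just far enough to line up that character’s rightmost occurrence instead.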

2. Good suffix rule:

If you’re searching for “needle” and you’ve just matched “dle” at the end, but the next character to the left breaks the match, the algorithm doesn’t start over from the next letter. Instead, it asks: does “dle” show up earlier in “needle”? If yes, it shifts the pattern so that the earlier “dle” lines up. If not, and no prefix of “needle” lines up with the tail of “dle” either, it slides the pattern past the matched “dle” entirely. Either way, it jumps forward smartly instead of wasting time rechecking.

At each mismatch, Boyer-Moore takes whichever of the two rules allows the bigger jump. These skip heuristics aren’t perfect, but on typical text they’re far more efficient than brute-force methods.
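To see the skipping in action, here is a simplified sketch that uses only the bad character rule; the good suffix rule is omitted for brevity, so this is not full Boyer-Moore and its worst case is slower. It compares right to left, jumps using a table of each character’s rightmost position in the pattern, and also counts how many alignments it actually tried.

```python
def last_occurrence(pattern):
    """Bad character table: rightmost index of each character in the pattern."""
    return {ch: i for i, ch in enumerate(pattern)}

def boyer_moore_bad_char(text, pattern):
    """Find all start indices of pattern in text using only the bad character rule.

    Returns (matches, alignments_tried) so you can see how much gets skipped.
    """
    if not pattern:
        raise ValueError("pattern must be non-empty")
    m, n = len(pattern), len(text)
    last = last_occurrence(pattern)
    matches, tried = [], 0
    s = 0  # current alignment: pattern[0] sits under text[s]
    while s <= n - m:
        tried += 1
        j = m - 1
        while j >= 0 and pattern[j] == text[s + j]:  # compare right to left
            j -= 1
        if j < 0:
            matches.append(s)
            s += 1  # simple, safe shift after a full match
        else:
            # Line up the rightmost occurrence of the mismatched text character;
            # if it never occurs in the pattern (-1), jump completely past it.
            s += max(1, j - last.get(text[s + j], -1))
    return matches, tried

print(boyer_moore_bad_char("haystack with a needle and another needle", "needle"))
# prints ([16, 35], 9)
```

A naive scan of that 41-character text would try 36 alignments; this version tries 9, because most mismatches land on letters that aren’t in “needle” at all.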

The result? The longer the pattern, the bigger the jumps the algorithm can make, so it often examines only a fraction of the characters in the text. That’s why tools like grep can chew through gigabytes of logs with surprising speed, and why this decades-old algorithm still feels like black magic today.

# When Logic Meets Imagination: The True Power of Algorithms

Whether it’s turning quantum uncertainty into procedurally generated worlds, mimicking biological flocking behaviors with three simple rules, or searching text backwards to find patterns faster, these approaches show that the most unconventional ideas often lead to the most powerful solutions.

What makes these algorithms brilliant isn’t their efficiency or novelty — it’s their audacity. They challenge assumptions. They flip problems on their heads. They turn randomness into structure, chaos into order, and abstract theory into real-world impact.

More than elegant mathematical tools — these 10 weird algorithms are a testament to human ingenuity.

Want to hear from me more often?

👉 Connect with me on LinkedIn!

I share daily actionable insights, tips, and updates to help you avoid costly mistakes and stay ahead in the AI world.

Are you a tech professional looking to grow your audience through writing?

👉 Don’t miss my newsletter!

My Tech Audience Accelerator is packed with actionable copywriting and audience-building strategies that have helped hundreds of professionals stand out and accelerate their growth. Subscribe now to stay in the loop.