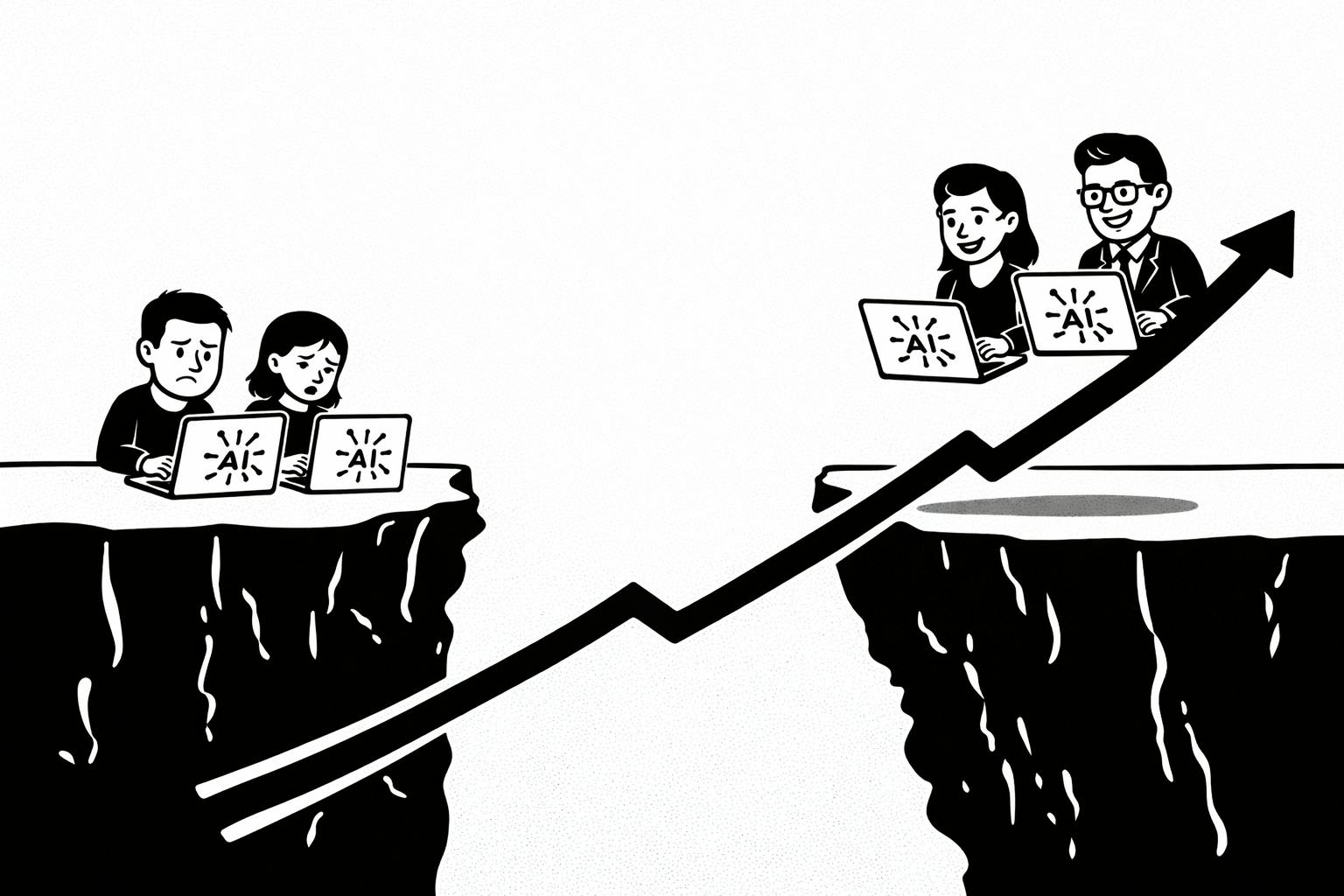

A new study introduces the “self-evolution trilemma,” proving that AI systems cannot simultaneously remain autonomous, isolated from external input, and perfectly aligned with human values. Experiments in a closed AI community called Moltbook demonstrate how consensus bias, safety drift, collusion, and hidden behaviors emerge naturally under isolation. The paper argues that safety is not a patchable feature but a structural design choice — forcing builders of autonomous AI to decide which constraint they are willing to relax.
