Every Thursday, Delve Into AI will provide nuanced insights on how the continent’s AI trajectory is shaping up. In this column, we examine how AI influences culture, policy, businesses, and vice versa. Read to get smarter about the people, projects, and questions shaping Africa’s AI future.

On June 27, 2025, Nigerian content creator Asherkine posted a seemingly innocent video asking a young lady out on a date after she revealed she was single. Shortly after the post went viral, an anonymous Snapchat user known as ‘Kenny’ began spreading a fabricated narrative: that the lady in the video, later identified as Ifeme Rebecca Yahoma, a University of Nigeria Nsukka student, was his girlfriend. He created deepfakes, manipulating pictures she’d shared online to support the lie. In her photo, Yahoma’s head is slightly tilted, her lips pouted. In the black-and-white image ‘Kenny’ generated, he has doctored himself into the frame so that he appears to be pecking Yahoma. An emoji covers his face. ‘Kenny’ gained tens of thousands of followers off the lie, even advertising Snapchat ads as his virality surged.

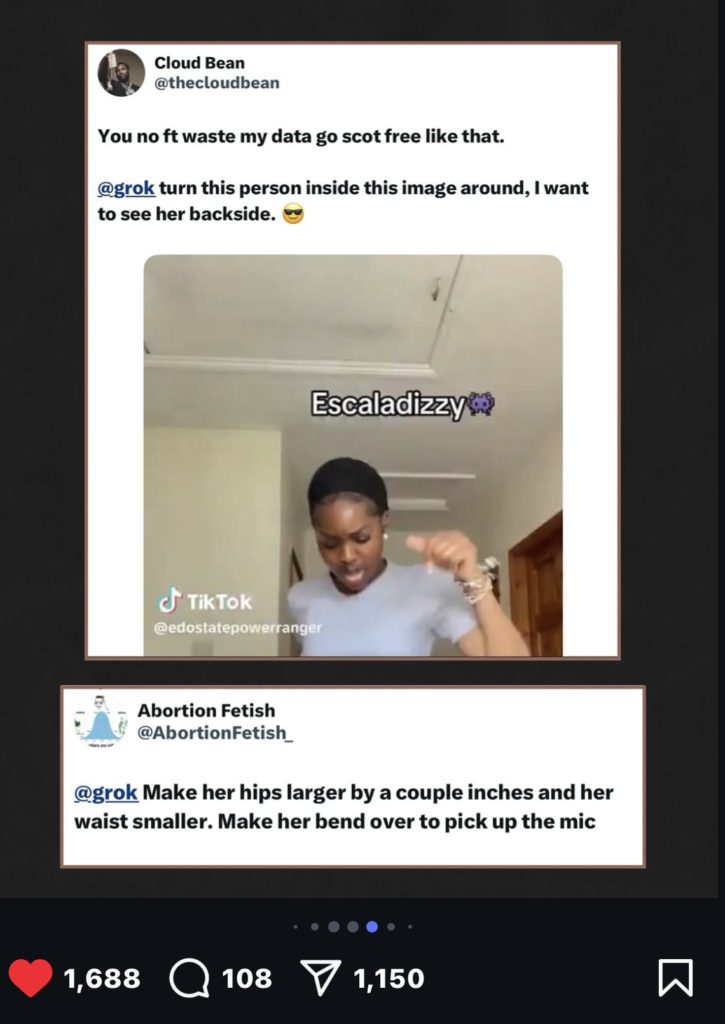

This incident isn’t an outlier; it’s the tip of a growing digital iceberg. Across Nigeria and other African countries, online users are employing AI tools like X’s Grok to manipulate, sexualise, or humiliate women. What used to be crude Photoshop jobs are now photorealistic deepfakes created from sexually explicit prompts fed into AI systems that produce disturbingly life-like results.

If they won’t send nudes, create them

Earlier this year, an X user had prompted Grok to undress Nigerian actress Kehinde Bankole. The post, which circulated widely before being deleted, sent shockwaves through her fanbase and other concerned X users. If a public figure like Bankole isn’t exempt, everyday women have even less protection.

Gbenga, a self-identified mental health consultant who made such prompts, later posted an apology thread on X saying: “I feel a profound sense of remorse for not handling the situation with the care it warranted.”

Remorseful or not, a growing number of users, often male, have found a willing participant for their online harassment of women in X’s AI tool, which has recently been accused of generating antisemitic commentary, among other gaffes. These incidents leave victims not only with emotional damage and reputational smears but also with very little legal recourse.

Are there legal protections that apply?

When asked if new laws are necessary to address how a rapidly evolving technology like AI is enabling abuse in ways previously unseen, legal policy analyst Sam Eleanya says the question must be approached with more nuance. “The premise that we need laws to regulate every new advancement in technology is not always sound,” he said. “The better question is: how does this new tool fit into the existing legal order?”

According to Eleanya, using tools like Grok to portray a woman in sexual or compromising ways without her consent could amount to criminal defamation of character under sections 373–375 of the Criminal Code Act. If money is demanded or intimidation is involved, charges of blackmail, extortion, or conspiracy to commit either could follow under Section 408 of the Criminal Code Act. The Cybercrimes Act of 2015 also covers acts like cyberstalking, identity theft, and image-based abuse, all of which could apply to these new scenarios.

But holding perpetrators to account remains challenging in various ways, according to Queen-Esther Ifunanya Emma-Egbumokei, a corporate lawyer specialising in international commercial law and the creative economy.

Prosecution is often complicated by jurisdictional ambiguities—where exactly the perpetrator is meant to be tried remains unclear. Users often operate anonymously or hide behind pseudonymous accounts, making it difficult for identities to be verified and legal processes to be initiated.

Additionally, Emma-Egbumokei notes that Nigeria, like many African countries, lacks AI-specific regulations that could clearly define and criminalise the misuse of generative tools for harassment or defamation. In the absence of such targeted legal frameworks, enforcement is further weakened, and victims are left vulnerable within a grey area of the law.

Hidden cost

More and more Nigerian women are now rethinking their presence on social platforms, worried that users can modify their photos inappropriately. “Not posting my pictures on here before they use AI [to] remove my hijab,” wrote X user @wluvnana.

While some users on the platform found humour in the post, she was expressing serious concern over a trend that she had begun to notice.

“The only thing that seems viable is to take myself out of such situations, which is absurd. I should be able to post pictures on X if I want to, but now, I don’t have the power to do that anymore,” she later said.

Beyond doctored photos and videos, harassment using generative AI can also appear in text-based formats, explains Chioma Agwuegbo, executive director at TechHerNG, an organisation focused on supporting women through digital literacy and inclusion.

“There are platforms online where you can go and simulate WhatsApp conversations, simulate Snapchat, TikTok,” Agwuegbo says. “The word ‘generative’ implies that it is the creation of content, of media.”

In a culture of shame around sex and sexuality, and with audiences increasingly unable to assess the accuracy of media shared online, it does not take much to cause lasting damage. “That’s why the easiest thing to say about a girl to bring her down is ‘I had sex with her’, or ‘I dated her and dumped her’,” Agwuegbo says, and AI tools are making it increasingly possible to doctor those narratives believably.

What are the platforms doing?

Part of the issue is that generative AI can be misused. Another fundamental problem is that there are no suitable mechanisms in place to detect that misuse, particularly in the Nigerian context.

X used to have a stronger Trust & Safety team dedicated to content moderation. However, since its October 2022 acquisition, the team’s headcount has fallen by roughly 30%, according to Australia’s eSafety Commissioner. Presently, there is no regional office in Africa dedicated to locally aware content moderation. An office opened in 2021 to serve an African audience, but it closed shortly after Elon Musk took over.

These platforms are also turning to AI to do the heavy lifting of moderation. It is cheaper and sometimes faster to let automated tools handle moderation than to hire a large team of human reviewers. However, these tools often struggle with nuance and accuracy, especially when they are trained on limited data for specific regions and local or creole languages.

When X users began tagging Grok to generate explicit images of Nigerian women by prompting it to ‘turn her around’, ‘show her backside’ or ‘remove her clothes’, some tried to fight back by mass reporting the accounts. But again and again, they were met with a similar cold response: “This account does not violate community guidelines”.

“I kept asking: if this doesn’t violate your community guidelines, then what does? Their platforms cannot recognise gendered harms that are peculiar to our Nigerian context, our memes, our slangs that are used by ‘banger boys’ on X. There is a very big gap in content moderation,” says Jessica Eni, a policy associate at TechSocietal, who participated in mass reporting efforts.

What other users can do

While it might be difficult to regulate AI-assisted sexual harassment on social platforms like X, there are steps everyday social media users can take instead of being unconcerned bystanders. This is what users like Vivian Nnabue, an Ibadan-based social media associate, believe.

When Nnabue saw posts prompting Grok to undress women on X, she made a LinkedIn post accompanied by screenshots and tags of the perpetrators’ accounts, demanding that they be held accountable.

But she did not stop at a LinkedIn post. She says she reported the perpetrators to their bosses and, for one who was in school, she sent reports to the institution. No one responded.

What followed instead was the perpetrators reaching out to her in her DMs. One of them, Gbenga, apologised for what he had done, adding that he almost lost a contract when an employer came across Nnabue’s LinkedIn post during a background check. “My inclination was not nudity. [I] just want to flex my tech intelligence,” Gbenga wrote to Nnabue.

Beyond flagging

Even if platforms address their blind spots, advocates like Eni worry that Nigeria’s laws still lag. The country has no single, robust framework that fully protects people from these evolving forms of gendered harassment. Existing laws, such as the Data Protection Act and the Cybercrimes Act, are limited in scope. There is also a growing concern amongst advocates like Agwuegbo that these laws could be potentially misused to justify the suppression of free expression or dissent.

“There is a Cybercrime Act, yes, but it is vague and heavy-handed. Because it’s so ambiguous, it can be twisted however someone in power wants, as long as they have money,” Agwuegbo says. Presently, the Cybercrimes Act is being used to justify suits against Senator Natasha Akpoti, a senator who spoke out against sexual harassment by the Senate President. In September 2023, the same Act was also used against a woman who left negative reviews of a can of tomato paste by the Nigerian brand Erisco Foods.

“What we actually need is something like an Online Safety Act. One that first defines its terms. What do we mean by the Internet? What’s the scope of a digital platform? One that places accountability not only on citizens but also on Big Tech. We need clear responsibilities for things like takedowns and better protections for young people,” Agwuegbo adds.

The Nigerian government is currently drafting a new Online Harms Protection Bill, spearheaded by the National Information Technology Development Agency (NITDA). Civil society groups, such as TechSocietal, are advocating for the bill to address emerging forms of tech-enabled abuse, particularly those amplified by AI tools.

For now, many Nigerian women are quietly adapting, sharing less on social media as this era of AI-enabled harassment continues to unfold. Until stronger rules and better platform safeguards catch up with the technology, the burden of staying safe online will continue to fall on those most at risk.
