A practical look at AI gateways, the problems they solve, and how different approaches trade simplicity for control in real-world LLM systems.

If you’ve built anything serious with LLMs, you probably started by calling OpenAI, Anthropic, or Gemini directly.

That approach works for demos, but it usually breaks in production.

The moment costs spike, latency fluctuates, or a provider has a bad day, LLMs stop behaving like APIs and start behaving like infrastructure. AI gateways exist because of that moment when “just call the SDK” is no longer good enough.

This isn’t a hype piece. It’s a practical breakdown of what AI gateways actually do, why they’re becoming unavoidable, and how different designs trade simplicity for control.

What Is an AI Gateway (And Why It’s Not Just an API Gateway)

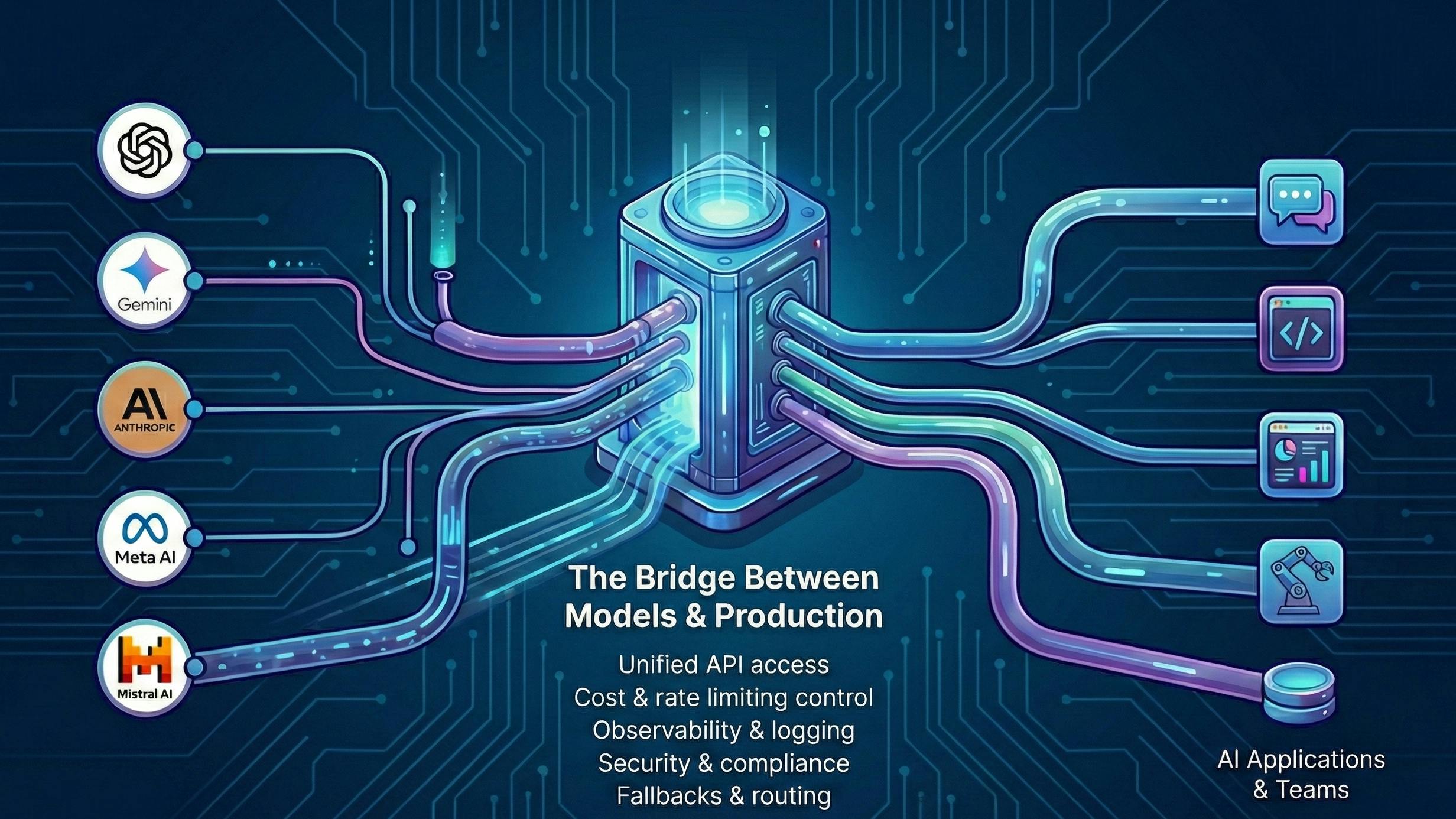

An AI gateway is a middleware layer that sits between your application and one or more LLM providers. Its job is not just to route requests; it's to manage the operational reality of running AI systems in production.

At a minimum, an AI gateway handles:

- Provider abstraction

- Retries and failover

- Rate limiting and quotas

- Token and cost tracking

- Observability and logging

- Security and guardrails

Traditional API gateways were designed for deterministic services. LLMs are probabilistic, expensive, slow, and constantly changing. Those properties break many assumptions that classic gateways rely on.

AI gateways exist because AI traffic behaves differently.

Why Teams End Up Needing One (Even If They Don’t Plan To)

1. Multi-provider becomes inevitable

Teams rarely stay on one model forever. Costs change, quality shifts, and new models appear.

Without a gateway, switching providers means touching application code everywhere. With a gateway, it’s usually a configuration change. That difference matters once systems grow.
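
To make the "configuration change" point concrete, here is a minimal sketch of provider abstraction. Everything in it is hypothetical: the `Gateway` class, the `routes` mapping, and the two stub adapters stand in for real vendor SDK calls so the example runs offline.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# An adapter takes a prompt and returns text. Real adapters would wrap each
# vendor's SDK; these stubs are illustrative stand-ins.
Adapter = Callable[[str], str]


def fake_openai(prompt: str) -> str:
    return f"[openai] {prompt}"


def fake_anthropic(prompt: str) -> str:
    return f"[anthropic] {prompt}"


@dataclass
class Gateway:
    adapters: Dict[str, Adapter]
    routes: Dict[str, str]  # feature name -> provider name (the "config")

    def complete(self, feature: str, prompt: str) -> str:
        # Application code names a feature, never a provider.
        provider = self.routes[feature]
        return self.adapters[provider](prompt)


gateway = Gateway(
    adapters={"openai": fake_openai, "anthropic": fake_anthropic},
    routes={"summarize": "openai"},
)
before = gateway.complete("summarize", "hello")

# Switching providers is a one-line routing change; callers are untouched.
gateway.routes["summarize"] = "anthropic"
after = gateway.complete("summarize", "hello")
```

The application only ever calls `gateway.complete("summarize", ...)`, so the provider choice lives in one place instead of being scattered across call sites.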

2. Cost turns into an engineering problem

LLM costs scale with tokens rather than requests, so they don't grow linearly with traffic. A slightly worse prompt can double token usage.

Gateways introduce tools like:

- Semantic caching

- Routing simpler tasks to cheaper models

- Per-user or per-feature quotas

This turns cost from a surprise into something measurable and enforceable.
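
As a rough illustration of two of these tools, the sketch below combines a per-user token quota with size-based model routing. The names (`TokenBudget`, `pick_model`, `estimate_tokens`) and the model labels are assumptions made for the example, and the word-count token estimate is a deliberate simplification; a real gateway would use the provider's tokenizer.

```python
from collections import defaultdict


class QuotaExceeded(Exception):
    pass


class TokenBudget:
    """Tracks tokens used per user and rejects requests over the limit."""

    def __init__(self, limit_per_user: int):
        self.limit = limit_per_user
        self.used = defaultdict(int)

    def charge(self, user: str, tokens: int) -> None:
        if self.used[user] + tokens > self.limit:
            raise QuotaExceeded(f"{user} would exceed {self.limit} tokens")
        self.used[user] += tokens


def estimate_tokens(prompt: str) -> int:
    # Crude heuristic: count whitespace-separated words.
    return len(prompt.split())


def pick_model(prompt: str, threshold: int = 50) -> str:
    # Short prompts go to the cheaper model, long ones to the larger model.
    if estimate_tokens(prompt) <= threshold:
        return "small-model"
    return "large-model"


budget = TokenBudget(limit_per_user=100)
budget.charge("alice", estimate_tokens("short question"))
model = pick_model("short question")
```

Because the quota check runs before the provider call, an over-budget request fails fast instead of showing up later on the invoice.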

3. Reliability can’t rely on hope

Providers fail. Rate limits hit. Latency spikes.

Gateways implement:

- Automatic retries

- Fallback chains

- Circuit breakers

The application keeps working while the model layer misbehaves.
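
The three mechanisms above can be sketched together in a few dozen lines. This is an illustrative outline, not any particular gateway's implementation: `Provider`, `CircuitBreaker`, and `complete_with_fallback` are hypothetical names, the breaker resets fully after its cooldown (a simplification of the usual half-open state), and the broad `except Exception` would be narrowed to retryable errors in real code.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List, Optional


class CircuitBreaker:
    def __init__(self, max_failures: int = 3, cooldown_s: float = 30.0):
        self.max_failures = max_failures
        self.cooldown_s = cooldown_s
        self.failures = 0
        self.opened_at: Optional[float] = None

    def allow(self) -> bool:
        if self.opened_at is None:
            return True
        if time.monotonic() - self.opened_at >= self.cooldown_s:
            # Cooldown elapsed: close the circuit and start counting again.
            self.opened_at = None
            self.failures = 0
            return True
        return False

    def record(self, ok: bool) -> None:
        if ok:
            self.failures = 0
            return
        self.failures += 1
        if self.failures >= self.max_failures:
            self.opened_at = time.monotonic()


@dataclass
class Provider:
    name: str
    call: Callable[[str], str]
    breaker: CircuitBreaker = field(default_factory=CircuitBreaker)


def complete_with_fallback(providers: List[Provider], prompt: str,
                           retries: int = 2, backoff_s: float = 0.5) -> str:
    errors = []
    for p in providers:  # fallback chain: try providers in order
        if not p.breaker.allow():
            errors.append(f"{p.name}: circuit open")
            continue
        for attempt in range(retries + 1):
            try:
                result = p.call(prompt)
                p.breaker.record(True)
                return result
            except Exception as exc:  # real code: catch retryable errors only
                p.breaker.record(False)
                errors.append(f"{p.name}: {exc}")
                if not p.breaker.allow():
                    break  # breaker just opened; move to the next provider
                if attempt < retries:
                    time.sleep(backoff_s * (2 ** attempt))  # exponential backoff
    raise RuntimeError("all providers failed: " + "; ".join(errors))


def flaky(prompt: str) -> str:
    raise TimeoutError("upstream timeout")


def healthy(prompt: str) -> str:
    return f"ok: {prompt}"


primary = Provider("primary", flaky)
secondary = Provider("secondary", healthy)
answer = complete_with_fallback([primary, secondary], "ping", backoff_s=0)
```

In this run the primary fails three times, its breaker opens, and the request is served by the secondary. Later calls skip the primary entirely until the cooldown passes.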

4. Observability stops being optional

Without a gateway, most teams can’t answer basic questions:

- Which feature is the most expensive?

- Which model is slowest?

- Which users are driving usage?

Gateways centralize this data and make optimization possible.
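
Once every call passes through one layer, those three questions reduce to simple aggregations over a call log. The sketch below assumes a hypothetical `CallRecord` shape and made-up sample data; a real gateway would emit these records to a metrics or logging backend.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import List


@dataclass
class CallRecord:
    feature: str
    model: str
    user: str
    latency_ms: float
    cost_usd: float


def most_expensive_feature(records: List[CallRecord]) -> str:
    totals = defaultdict(float)
    for r in records:
        totals[r.feature] += r.cost_usd
    return max(totals, key=totals.get)


def slowest_model(records: List[CallRecord]) -> str:
    # Compare models by mean latency across their calls.
    latencies = defaultdict(list)
    for r in records:
        latencies[r.model].append(r.latency_ms)
    return max(latencies, key=lambda m: sum(latencies[m]) / len(latencies[m]))


def top_user_by_cost(records: List[CallRecord]) -> str:
    totals = defaultdict(float)
    for r in records:
        totals[r.user] += r.cost_usd
    return max(totals, key=totals.get)


# Illustrative sample data, not real measurements.
log = [
    CallRecord("search", "model-a", "alice", 120.0, 0.25),
    CallRecord("chat", "model-b", "bob", 900.0, 0.5),
    CallRecord("chat", "model-b", "bob", 700.0, 0.5),
    CallRecord("search", "model-a", "bob", 80.0, 0.25),
]
```

The key design choice is tagging each record with feature, model, and user at the gateway, since those dimensions are hard to reconstruct after the fact from provider invoices alone.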

The Trade-Offs: Five Common AI Gateway Approaches

Not all AI gateways solve the same problems. Most fall into one of these patterns.

Enterprise Control Planes

These focus on governance, compliance, and observability. They work well when AI usage spans teams, products, or business units. The trade-off is complexity and a learning curve.

Customizable Gateways

Built on traditional API gateway foundations, these offer deep routing logic and extensibility. They shine in organizations with strong DevOps maturity, but come with operational overhead.

Managed Edge Gateways

These prioritize ease of use and global distribution. Setup is fast, and infrastructure is abstracted away. You trade advanced control and flexibility for speed.

High-Performance Open Source Gateways

These offer maximum control, minimal latency, and no vendor lock-in. The cost is ownership: you run, scale, and maintain everything yourself.

Observability-First Gateways

These start with visibility into costs, latency, and usage, and layer routing on top. They’re excellent early on, especially for teams optimizing spend, but lighter on governance features.

There’s no universally “best” option. Each is a different answer to the same underlying problem.

How to Choose One Without Overthinking It

Instead of asking “Which gateway should we use?”, ask:

- How many models/providers do we expect to use over time?

- Is governance a requirement or just a nice-to-have?

- Do we want managed simplicity or operational control?

- Is latency a business metric or just a UX concern?

- Are we optimizing for cost transparency or flexibility?

Your answers usually point to the right category quickly.

Why AI Gateways Are Becoming Infrastructure, Not Tools

As systems become more agentic and multi-step, AI traffic stops being a simple request/response. It becomes sessions, retries, tool calls, and orchestration.

AI gateways are evolving into the control plane for AI systems, in the same way API gateways became essential for microservices.

Teams that adopt them early:

- Ship faster

- Spend less

- Debug better

- Avoid provider lock-in

Teams that don’t adopt them usually end up rebuilding parts of this layer later, under pressure.

Final Thought

AI didn’t eliminate infrastructure problems. It created new ones, just faster and more expensive.

AI gateways exist to give teams control over that chaos. Ignore them, and you’ll eventually reinvent one badly. Adopt them thoughtfully, and they become a multiplier instead of a tax.