Observers commonly say that artificial intelligence is still in the “early innings.” The reality is that AI is much further along than many acknowledge.

In 2012, AlexNet was a watershed deep learning moment, when massive, freely available internet datasets met Nvidia Corp.’s graphics processing units. That moment truly kicked off the modern AI era, leading to further breakthroughs such as generative adversarial networks. In 2017, Google researchers introduced the transformer architecture to the world, followed by key research on scaling laws. Then, of course, ChatGPT triggered mass adoption and set off the current AI arms race.

Fourteen years into the modern AI era, our research indicates that AI is maturing rapidly. The data suggests we are entering the enterprise productivity phase, moving beyond the novelty of chatbots built on retrieval-augmented generation, or RAG, and agentic experimentation. In our view, 2026 will be remembered as the year that kicked off decades of enterprise AI value creation.

We can’t promise that it won’t be messy. White-collar job pressures, AI safety concerns, and new security and governance threats all loom. But the AI train isn’t stopping, and enterprises that don’t get on board risk obsolescence.

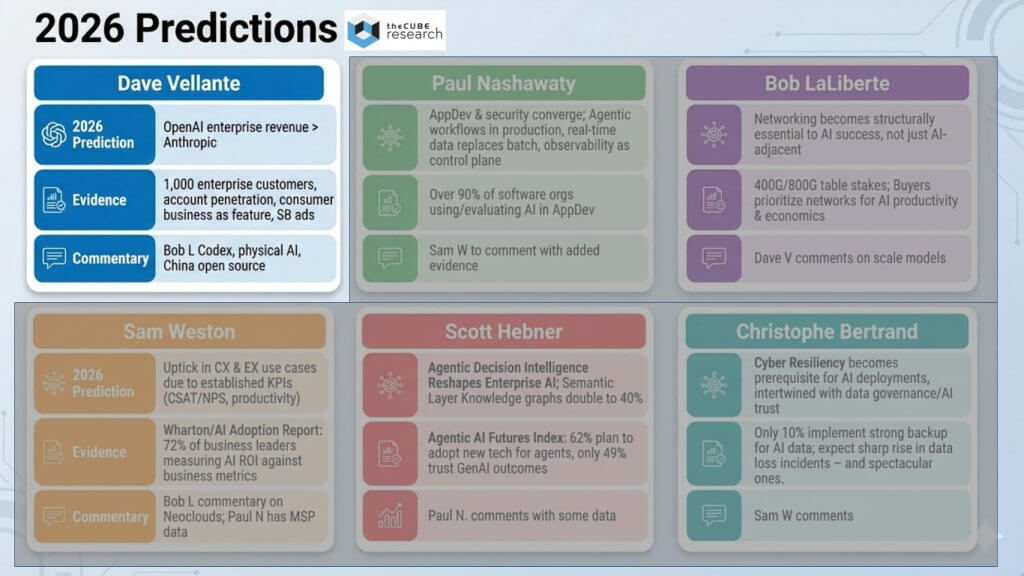

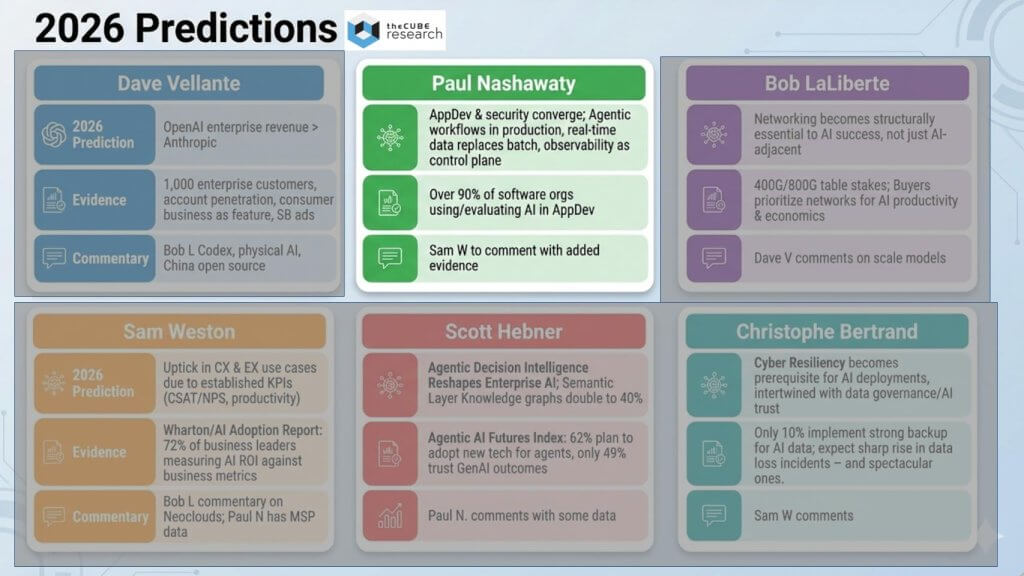

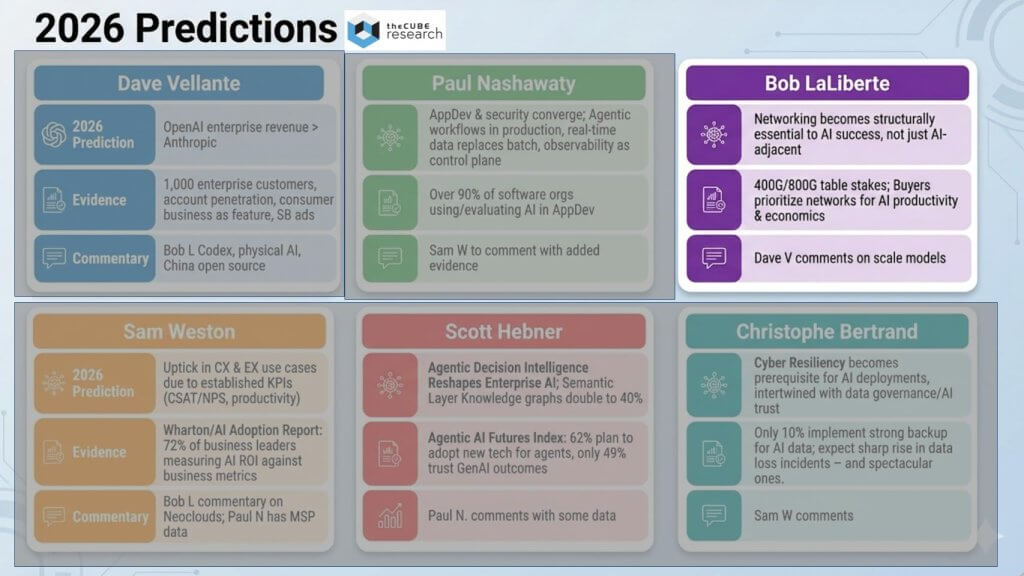

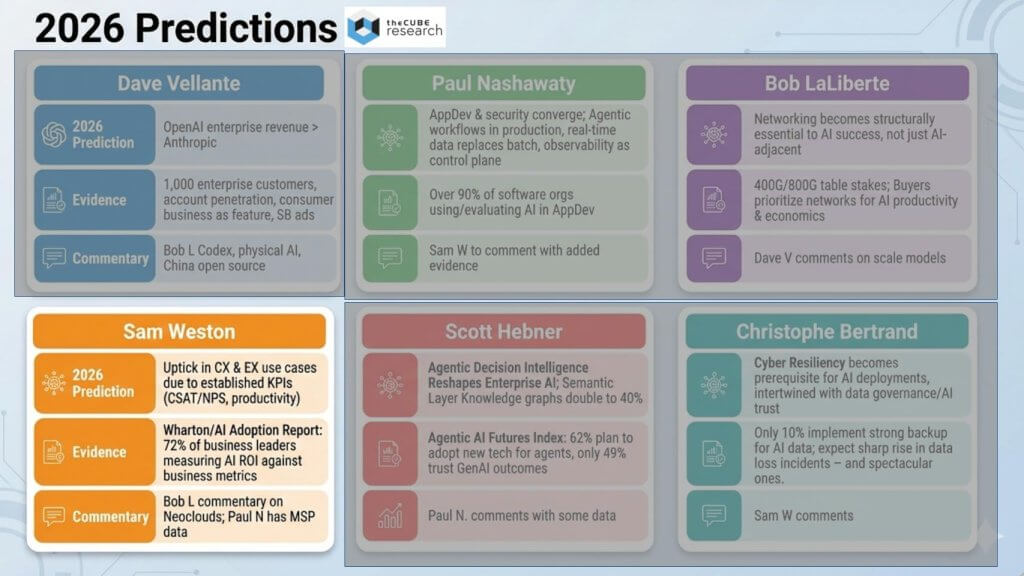

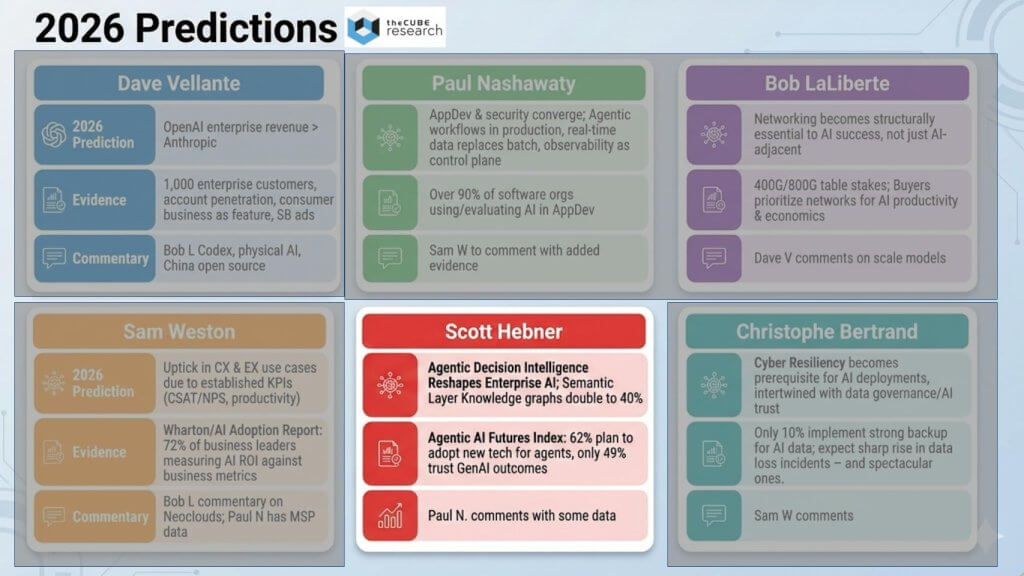

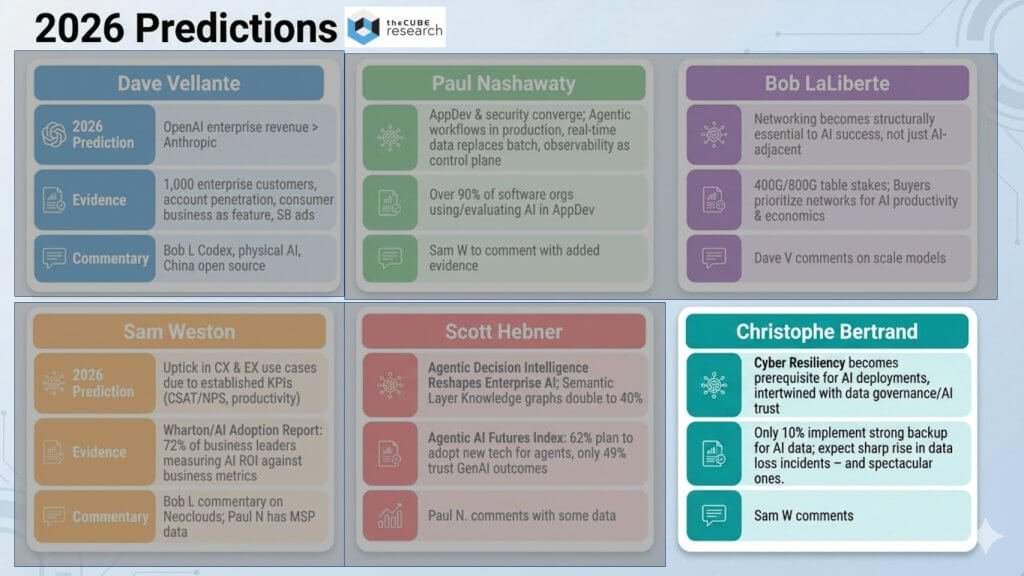

In this Breaking Analysis, top analysts from theCUBE Research team, including Dave Vellante, Paul Nashawaty, Bob Laliberte, Sam Weston, Scott Hebner and Christophe Bertrand, share our predictions for 2026 and beyond.

TheCUBE Research 2026 predictions at a glance

Our predictions are delivered in a straightforward format. One analyst makes the call, then one or two others weigh in with agreement, disagreement or debate. The goal is to test the ideas in real time, and we push each analyst to commit to a measurement that will show, a year from now, whether the prediction came true.

We start with a prediction on the competitive battle between Anthropic and OpenAI, with Bob and others chiming in. From there, Paul takes the baton with a view on application development and the infusion of agentic workflows, followed by discussion with the panel. Next, Bob brings the networking perspective, predicting that the importance of networking in AI becomes more obvious in 2026. Sam then discusses her prediction around productivity, with customer and employee experiences increasingly under the microscope in 2026. Scott follows with a call on decision intelligence, including data points on knowledge graphs that Paul also touches on. We close with Christophe’s prediction on cyber resilience, with Sam commenting on that assertion.

Analyst prediction from Dave Vellante: OpenAI’s enterprise revenue surpasses Anthropic’s in 2026

We kick things off with a long-shot call: OpenAI Group PBC’s enterprise revenue surpasses Anthropic PBC’s in 2026. We noted that both companies talk in terms of run-rate revenue, with OpenAI exiting 2025 at a $20 billion run rate and Anthropic around $9 billion. Adjusting to calendar-year 2025, we put OpenAI at roughly $13 billion to $15 billion and Anthropic at roughly $4 billion to $5 billion.

We estimated that 20% to 25% of OpenAI’s 2025 revenue came from enterprise – roughly $3 billion – while the vast majority of Anthropic’s revenue came from enterprise, around $4 billion to $5 billion. Looking ahead, we projected OpenAI approaching $40 billion in total revenue in 2026, with Anthropic at roughly half that, about $15 billion to $20 billion. The key swing factor in this call is revenue mix. We predict that more than half of OpenAI’s 2026 revenue comes from enterprise – roughly $20 billion – creating a close race, “a photo finish at the wire,” in which we pick the longer shot, OpenAI.
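The mix argument is easy to check with a little arithmetic. The sketch below uses the 2026 totals stated above; OpenAI’s 50% enterprise share is the floor of the call, and Anthropic’s 80% to 90% enterprise share is an illustrative assumption standing in for “vast majority,” not a reported figure.

```python
# Back-of-envelope enterprise revenue comparison for 2026 (US$ billions).
# Totals come from the prediction; Anthropic's enterprise share is an
# ILLUSTRATIVE ASSUMPTION standing in for "the vast majority."

def enterprise_revenue(total: float, enterprise_share: float) -> float:
    """Enterprise revenue implied by total revenue and the enterprise share of the mix."""
    return total * enterprise_share


def breakeven_share(rival_enterprise: float, total: float) -> float:
    """Enterprise share a company needs for its enterprise revenue to match a rival's."""
    return rival_enterprise / total


OPENAI_TOTAL = 40.0
OPENAI_SHARE = 0.50  # floor of "more than half"

ANTHROPIC_TOTAL_RANGE = (15.0, 20.0)
ANTHROPIC_SHARE_RANGE = (0.80, 0.90)  # assumption, not reported data

openai_ent = enterprise_revenue(OPENAI_TOTAL, OPENAI_SHARE)
anthropic_low = enterprise_revenue(ANTHROPIC_TOTAL_RANGE[0], ANTHROPIC_SHARE_RANGE[0])
anthropic_high = enterprise_revenue(ANTHROPIC_TOTAL_RANGE[1], ANTHROPIC_SHARE_RANGE[1])

print(f"OpenAI enterprise:    ${openai_ent:.1f}B")
print(f"Anthropic enterprise: ${anthropic_low:.1f}B to ${anthropic_high:.1f}B")
# Enterprise share OpenAI would need just to tie the high end of the Anthropic range.
print(f"OpenAI break-even share: {breakeven_share(anthropic_high, OPENAI_TOTAL):.0%}")
```

Under these assumptions the high end of Anthropic’s range lands within about $2 billion of OpenAI, which is why the call is a photo finish: a few points of mix in either direction flips the outcome.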

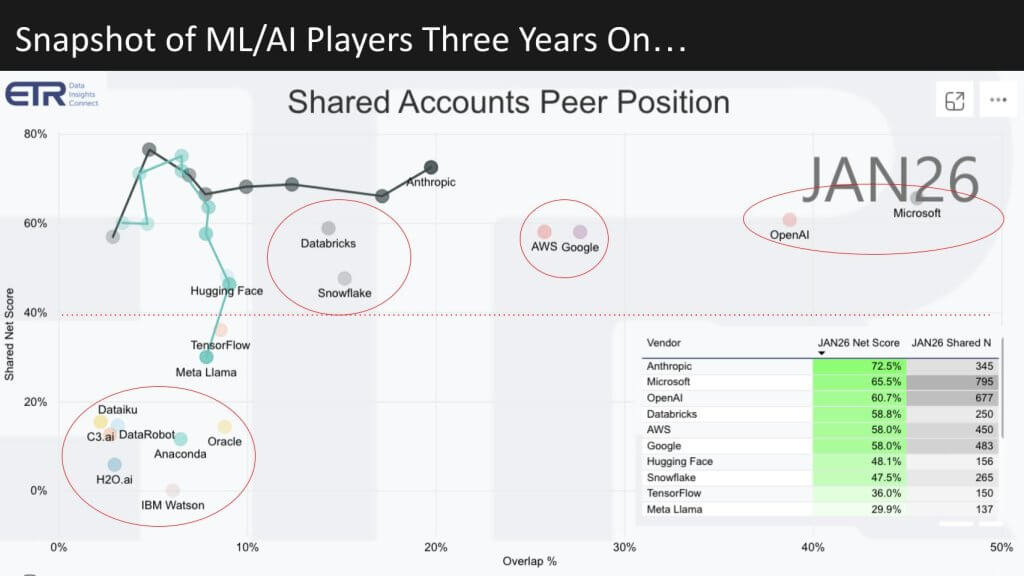

ETR data shows Anthropic making huge strides, with OpenAI showing well in enterprise accounts

We now turn to Enterprise Technology Research’s machine learning and AI sector view for context. The vertical axis in the chart above is spending momentum based on ETR’s proprietary Net Score methodology, and the horizontal axis is overlap, representing penetration in an account-based survey of roughly 1,700 respondents. Three points stand out:

- Anthropic has made significant progress, gaining enterprise share with momentum above the red 40% line (highly elevated).

- Meta Platforms Inc.’s Llama was near the top a couple of years ago and has fallen well below the 40% line.

- OpenAI, through its Microsoft Corp. relationship, is deeply penetrated in enterprise accounts.
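For readers unfamiliar with Net Score, the sketch below shows the calculation as ETR has publicly described it: the share of respondents adopting a platform or increasing spend, minus the share decreasing spend or replacing it, with flat spenders counted in neither bucket. The class, bucket names and sample percentages are hypothetical, not ETR data, and ETR’s production methodology may include details this omits.

```python
from dataclasses import dataclass


@dataclass
class SpendResponses:
    """Percent of respondents (summing to 100) in each spending bucket for one platform."""
    adopting: float    # new adoption
    increasing: float  # spending more
    flat: float        # spending about the same
    decreasing: float  # spending less
    replacing: float   # moving off the platform

    def __post_init__(self) -> None:
        total = self.adopting + self.increasing + self.flat + self.decreasing + self.replacing
        if abs(total - 100.0) > 1e-6:
            raise ValueError(f"buckets must sum to 100, got {total}")

    def net_score(self) -> float:
        # Positive buckets minus negative buckets; flat spenders count in neither.
        return (self.adopting + self.increasing) - (self.decreasing + self.replacing)


ELEVATED_LINE = 40.0  # the red "highly elevated" line in the chart

# Hypothetical responses for an illustrative platform, NOT ETR data.
platform = SpendResponses(adopting=20.0, increasing=35.0, flat=35.0, decreasing=6.0, replacing=4.0)
score = platform.net_score()
print(score, score > ELEVATED_LINE)  # 45.0 True
```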

The common wisdom is that Anthropic is focused and gaining share with Claude Code and other innovations, while OpenAI appears unfocused, with Code Reds, flip-flops on advertising and visible tension in the Microsoft relationship. We invoke the Andy Grove line John Furrier often cites – “Let chaos reign, and then rein the chaos in” – and we argue OpenAI may be doing exactly that.

We anchor this call on two advantages for OpenAI. The first is its deep account penetration and an enterprise base exceeding 1,000 customers, growing rapidly. Second, we argue OpenAI’s consumer scale is a benefit, not a drawback, because scale matters, data matters, and OpenAI has priority allocation from Nvidia for advanced technologies such as Nvidia’s latest-generation Vera Rubin GPU platform, which lowers cost per token. We go to Bob Laliberte for reaction.

Analyst feedback

Bob Laliberte agreed the prediction is plausible and argued OpenAI is clearly pushing into the enterprise developer segment. He said the consumerization pattern is repeating – consumer adoption often drives faster enterprise adoption – and he viewed OpenAI’s Super Bowl presence as a flag in the ground, with Codex ads and meaningful spend behind them.

He said he is hearing from enterprises using Codex in meaningful ways, including cases where as much as three-quarters of programming is done with Codex, and discussions of a first product developed 100% with Codex. He emphasized that driving broader adoption requires leaning on early adopters, surfacing use cases, and showing productivity gains so they can be replicated across environments.

He added two cautions. First, these tools work better on greenfield applications than legacy environments, so targeting enterprise means leaning into greenfield opportunities rather than trying to retrofit everything at once. Second, enterprises need to start adopting, and adoption requires leadership. He cited Fran Katsoudas at Cisco Systems Inc., saying AI adoption doesn’t come from an email, it comes from leading by example – if leaders want teams coding with AI tools, it starts with them showing the way.

We closed the exchange by noting the Super Bowl ad dynamic – Anthropic taking a shot at OpenAI and OpenAI countering with Codex – and we referenced developer behavior, including OpenClaw creator Peter Steinberger switching from Claude to Codex for workflow reasons, suggesting the tool race in coding remains wide open.

Analyst prediction: Paul Nashawaty on AppDev, agentic workflows and security moving into the platform

Paul Nashawaty said application development is bifurcating. Lines of business and citizen developers are taking on more responsibility for work that historically sat with professional developers. He said professional developers don’t go away – their work shifts toward “true professional development,” while line of business developers focus on immediate outcomes.

He summarized the shift as follows: 2025 was the year of experimentation and 2026 is the year of implementation. He said agent-first development and intent-driven agentic workflows are moving from experimentation into production, changing how applications are designed and shifting interfaces toward user intentions and automated agents.

He highlighted five AppDev trends:

- Agent-first development – intent-driven agentic workflows moving into production and changing how applications are designed.

- Real-time data everywhere – enterprises prioritizing query engines and data-as-a-product architectures so AI apps can access fresh, distributed data without costly extract/transform/load processes.

- Partners run the stack – in 2026, most organizations rely on service delivery partners and integrators to operate cloud-native and AI-ready platforms as in-house skills remain constrained. He cited research that 67% of organizations are hiring generalists over specialists.

- Observability becomes a control plane for AI operations – unified telemetry, metrics, and observability platforms treated as first-class infrastructure for securing, debugging and governing AI apps.

- Cost-aware AppDev – a resurgence in FinOps, with usage-based billing and cloud optimization becoming a bigger factor as AI scales.

Nashawaty said DevSecOps and AppSec become major factors in 2026. He described AI-accelerated secure software delivery becoming standard practice, with policy engines and secure-by-default workflows shifting security earlier in development and integrating governance into the continuous integration and continuous delivery, or CI/CD, pipeline. He said platform engineering centralizes “security as a service” and AppSec modernizes around AI, application programming interfaces and behavioral controls. Supply chain security, he said, moves to continuous verification, with software bills of materials, or SBOMs, evolving into living operational artifacts, and SecOps becomes an AI-augmented workflow with agents acting as first responders in the security operations center.
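To make “policy engines” and “continuous verification” concrete, here is a minimal sketch of a secure-by-default CI/CD gate that reads a CycloneDX-style JSON SBOM and fails the build if any component appears on a deny list. The deny list and the helper names `blocked_components` and `policy_gate` are hypothetical; a production pipeline would draw on a maintained vulnerability feed and a dedicated policy engine rather than a hard-coded set.

```python
import json

# Hypothetical deny list of (component name, version) pairs. log4j-core 2.14.1
# is included because it is affected by Log4Shell (CVE-2021-44228).
DENY_LIST = {("log4j-core", "2.14.1")}


def blocked_components(sbom_json: str, deny_list=DENY_LIST) -> list:
    """Return 'name@version' for every SBOM component that appears on the deny list."""
    sbom = json.loads(sbom_json)
    hits = []
    for component in sbom.get("components", []):
        key = (component.get("name"), component.get("version"))
        if key in deny_list:
            hits.append(f"{key[0]}@{key[1]}")
    return hits


def policy_gate(sbom_json: str) -> bool:
    """Secure by default: the build passes only when no blocked component is found."""
    return not blocked_components(sbom_json)


sample_sbom = json.dumps({
    "bomFormat": "CycloneDX",
    "components": [
        {"name": "log4j-core", "version": "2.14.1"},
        {"name": "requests", "version": "2.32.3"},
    ],
})
print(blocked_components(sample_sbom))  # ['log4j-core@2.14.1']
print(policy_gate(sample_sbom))         # False
```

Running a check like this on every build, rather than once at release, is what turns the SBOM into the living operational artifact described above.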

He then put a number on the prediction. By the end of 2026, in regulated environments such as financial services, healthcare and government, 30% of application workloads and operations will have AI embedded directly into production workflows. In less regulated industries, he predicted faster adoption, with 40% to 60% of nonregulated application workloads leveraging AI-driven automation, agentic workflows and embedded intelligence in production systems. He said the gap reflects governance, auditability and compliance requirements that slow production deployment.

He cited supporting data: More than 90% of software organizations are actively using or evaluating AI in their development pipelines; AI-assisted code automation is producing productivity gains of 20% to 40%; observability is becoming mission-critical, with over 60% of organizations driving real-time telemetry across cloud-native and AI workloads; and SBOM adoption exceeds 50% in regulated enterprises, with AI-assisted security scanning and automation rising. He said these signals show AI-first development, platform engineering and automated DevSecOps are moving from experimental to structural.

Analyst feedback

Sam Weston said AppDev is a treadmill and not going away, and that Paul could do the episode every quarter and have something new to say. She said she was curious to hear the regulated industry angle later in the show, and focused her questions on observability.

Weston said many companies cite using six to 15 different observability tools and say they want consolidation, while observability features are also being added into products and platforms, with AI layered on top. She asked four questions: 1) whether product-level observability takes precedence over pure-play observability vendors; 2) whether that consolidates the tool stack or just pushes features lower; 3) whether a hybrid approach emerges where product-level observability feeds a unified view using observability vendors and AI; and 4) what that does to observability vendors.

Nashawaty said observability is becoming bidirectional inside organizations. He said major observability vendors are meeting developers where they are, not just IT ops, by feeding insights into the IDE. He cited Kiro (by Amazon Web Services Inc.), Dynatrace Inc., Chronosphere Inc. and Splunk Inc. as examples. He said more mature platforms are incorporating observability insights from vendors directly into their platforms.

He said there will never be a single pane of glass, but customer choice matters. He said an open ecosystem allows calls to be leveraged directly so organizations can use a pane of glass to make things more unified. He cited data that 54% of organizations are looking for a unified solution in observability.

Vellante then asked how to score the prediction a year from now. Nashawaty said the criterion is faster, more streamlined automation throughout the CI/CD pipeline. He cited AppDev research showing 24% of organizations want to release code hourly but only 8% can do so today. He said that 8% should rise to at least 15% and agreed to make it 16% as a “double.”
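The scoring rule is simple enough to write down. The sketch below encodes the stated figures (8% releasing hourly today, 24% wanting to, a 16% target) and adds one framing that is ours, not Nashawaty’s: how much of the gap between ambition and capability the target would close. The function names are illustrative.

```python
BASELINE_PCT = 8.0     # organizations that can release code hourly today
ASPIRATION_PCT = 24.0  # organizations that want to release hourly
TARGET_PCT = 16.0      # the "double" committed to for year-end 2026


def prediction_hit(observed_pct: float) -> bool:
    """True if the observed share meets or beats the committed target."""
    return observed_pct >= TARGET_PCT


def gap_closed(observed_pct: float) -> float:
    """Fraction of the want-vs-can gap closed relative to today's baseline."""
    return (observed_pct - BASELINE_PCT) / (ASPIRATION_PCT - BASELINE_PCT)


print(TARGET_PCT / BASELINE_PCT)  # 2.0, the "double"
print(gap_closed(TARGET_PCT))     # 0.5, half the gap closed
print(prediction_hit(15.0))       # False: 15% would miss the 16% bar
```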

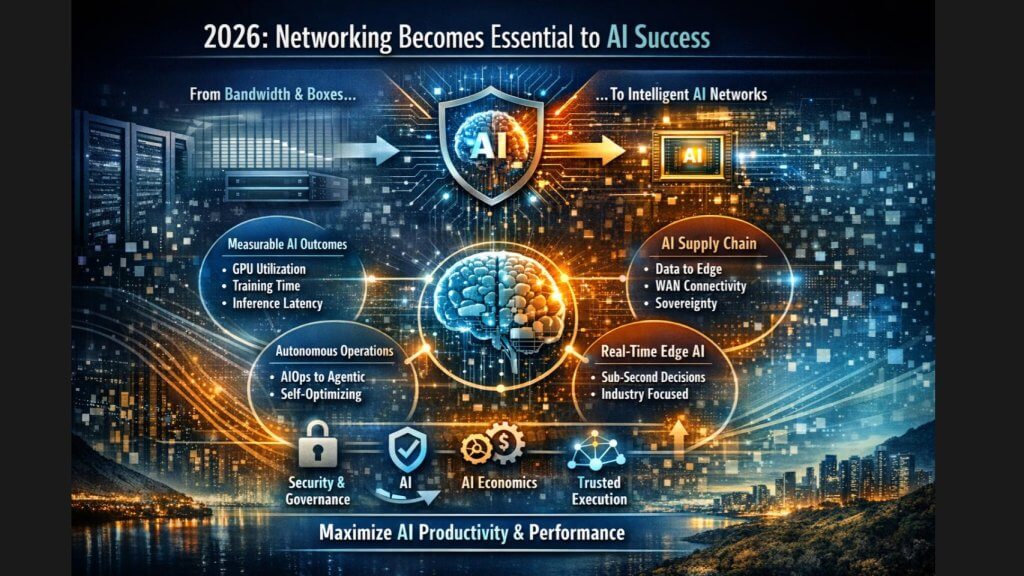

Analyst prediction: Bob Laliberte on networking becoming structurally essential to AI success

Bob Laliberte said 2026 will be remembered as the year networking stopped being AI-adjacent and became structurally essential to AI success. He said networking has long been positioned as enabling AI through more bandwidth, lower latency, and faster fabrics, but 2026 feels different because the network becomes a determining factor for AI outcomes. He said vendors have to translate AI complexity into control, trust and measurable business results, and organizations that keep selling bandwidth and boxes without business context will fall behind.

He said enterprises will evaluate networks based on measurable AI outcomes, including GPU utilization, training completion time, inference latency and cost efficiency per workload. He said network speeds of 400G to 800G and beyond become table stakes, and differentiation comes from predictable performance, multitenant isolation and economic efficiency, especially as GPU-as-a-service providers, neoclouds and sovereign clouds demand hard segmentation and deterministic fabrics that traditional cloud networking abstractions were never designed to support.
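One way to see why GPU utilization becomes a network metric: when a fabric stalls GPUs, the organization still pays for the idle hours, so the effective price of each useful GPU-hour rises as utilization falls. The sketch below assumes a flat hourly GPU price and treats utilization as the fraction of paid hours doing useful work; the price, job size and utilization figures are hypothetical.

```python
def effective_cost_per_useful_hour(price_per_gpu_hour: float, utilization: float) -> float:
    """Price paid per GPU-hour of useful work when only `utilization` of paid hours is productive."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    return price_per_gpu_hour / utilization


def job_cost(useful_gpu_hours: float, price_per_gpu_hour: float, utilization: float) -> float:
    """Total spend to complete a job that needs a fixed amount of useful GPU work."""
    return useful_gpu_hours * effective_cost_per_useful_hour(price_per_gpu_hour, utilization)


PRICE = 3.00           # hypothetical $/GPU-hour
USEFUL_HOURS = 10_000  # hypothetical training job size

congested = job_cost(USEFUL_HOURS, PRICE, utilization=0.50)
tuned = job_cost(USEFUL_HOURS, PRICE, utilization=0.80)
print(f"50% utilization: ${congested:,.0f}")
print(f"80% utilization: ${tuned:,.0f}")
print(f"savings from a better fabric: ${congested - tuned:,.0f}")
```

The same ratio shows up in wall-clock time: on a fixed-size cluster, a job running at 50% utilization takes 1.6 times as long as one at 80%.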

He then predicted that the network’s role will expand beyond the data center into an end-to-end story, with the wide-area network becoming part of the AI supply chain, linking distributed data sources, centralized training clusters and edge inference endpoints under sovereignty and policy constraints. He said AI workloads are inherently more distributed, making latency, jurisdiction and bandwidth elasticity strategic variables rather than operational details.

He said operational models will evolve from AIOps as insight to agentic operations that act autonomously within well-defined guardrails, because AI-era networks are too dynamic for human-in-the-loop operations at scale. He said there will be a time-to-comfort period in which human-in-the-loop remains important, but he cited Nvidia CEO Jensen Huang’s phrasing from the recent Cisco AI Summit: It’s not about human in the loop, it’s about AI in the loop.
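“Autonomous within well-defined guardrails” can be read as a policy check that runs before an agent acts. The sketch below is a hypothetical illustration, not any vendor’s implementation: changes that are on an allowed list, touch few devices and are reversible execute automatically, and anything else goes to a human. The action names and limits are assumptions; tightening or loosening them is one way to model the time-to-comfort period.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    EXECUTE = "execute autonomously"
    ESCALATE = "escalate to a human"


@dataclass
class ProposedChange:
    action: str            # hypothetical action names, e.g. "reroute_flow"
    devices_affected: int  # blast radius of the change
    reversible: bool       # can the change be rolled back automatically?


# Hypothetical guardrails an operations team might set.
ALLOWED_ACTIONS = {"reroute_flow", "adjust_qos"}
MAX_DEVICES = 5


def evaluate(change: ProposedChange) -> Decision:
    """Let the agent act only when every guardrail is satisfied."""
    within_guardrails = (
        change.action in ALLOWED_ACTIONS
        and change.devices_affected <= MAX_DEVICES
        and change.reversible
    )
    return Decision.EXECUTE if within_guardrails else Decision.ESCALATE


print(evaluate(ProposedChange("reroute_flow", 2, True)).value)   # execute autonomously
print(evaluate(ProposedChange("shutdown_port", 1, True)).value)  # escalate to a human
```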

He added that at the edge, networking shifts from device connectivity to real-time inference enablement. He gave examples such as 5G-enabled routers in cars, where cars become mini data centers, and retail, healthcare and industrial environments with autonomous robotics requiring sub-second decision-making. He said security, governance and enforcement move from overlays to intrinsic capabilities, and the network becomes the control plane for trusted AI execution.

He closed by saying 2026 marks the point where networking’s value is not measured in gigabits but in the intelligence it can deliver. He said the conversation shifts from speeds and feeds to AI economics, determinism and trust, and enterprises will expect networks to maximize GPU investments, enforce sovereignty policies and deliver real-time outcomes at the edge. He said winners won’t simply build faster networks, they’ll build networks that make AI work.

Analyst feedback

Dave Vellante picked up on Laliberte’s reference to Nvidia Chief Executive Jensen Huang’s “AI in the loop” comment and noted the broader implication that networking is no longer just about scale-up and scale-out inside a single environment, but increasingly about scale-across, linking multiple AI factories.

Vellante then asked how the group should measure the prediction a year from now, using the example that a prediction about Nvidia becoming the No. 1 networking company by revenue would have been a great call in 2024.

Laliberte said there are several ways to look back, and joked that after being blamed for information technology problems for 25 years, it’s good that the network is “cool again.” He said the measurement will come from the outcome metrics he cited: GPU utilization, how fast training completes, how effectively inference is delivered at the edge in real time, and whether those drive business outcomes. He said success won’t be measured by who reaches the next throughput milestone first, but by end-to-end solutions that drive AI outcomes.

He also emphasized that although Nvidia is a major player focused heavily on the back end, enterprise AI is distributed across clouds, private data centers, colocation facilities and edge locations, so the network itself has to enforce policy, sovereignty, governance and security. He said many factors make networking the control plane, and though he didn’t offer a single concrete percentage target, he expects more intelligence and more end-to-end use of networking to show up in AI adoption patterns.

Analyst prediction: Samantha Weston on AI ROI becoming non-negotiable, led by CX and EX

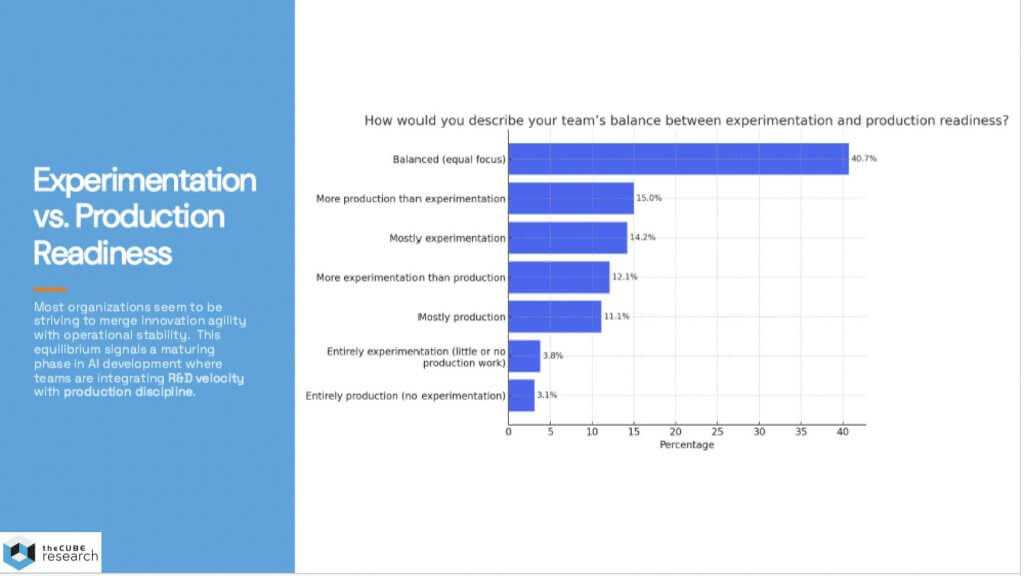

Sam Weston said 2026 is the year AI return on investment becomes non-negotiable. AI can no longer be an experiment or an innovation project without outcomes. She linked her view to the panel’s earlier theme that 2025 was the year of experimentation and 2026 is the year of deployment, and said her focus for 2026 is ROI.

She cited research from early 2025 showing about 25% of IT budgets were being allocated to AI initiatives, but said that when talking to organizations, many initiatives were ambiguous, with no clearly defined business goals or direction. A lot of money was being spent, with many LLM-powered chatbots deployed, but without clearly defined value.

She referenced the oft-cited MIT report that said 95% of AI initiatives were not reaching production in 2025. She said that headline sounds alarming, but a 5% success rate during experimentation is not failure, it’s an investment where organizations separate novelty from value. She described 2025 as the year of trying many ideas, seeing what fits, then dialing it back.

As 2025 progressed, she said the conversation began drifting toward AI ROI. In her research, when asked whether do-it-yourself AI infrastructure or managed AI platforms would produce higher ROI, the majority said DIY would deliver higher ROI, and very few said DIY would deliver lower ROI than a managed service. She said organizations favored the idea that AI value would be built internally rather than sourced externally, and she expects that to change in 2026.

She cited another spending intentions study where machine learning and AI ranked No. 1 for the second half of 2025 into 2026, around 70%, ahead of cloud infrastructure. She said AI is not fading, and that what makes technology foundational versus a trend is ROI. She said organizations now accept AI can’t go away and they want more of it, but they have to prove value, and she sees 2026 as the turning point.

Weston cited data showing that 72% of business leaders are formally measuring AI ROI, with the goal of increasing productivity returns to about 30%. She said this is the outcome metric to watch, and contrasted it with the roughly 6% productivity increases some respondents report today. She said this shift comes from starting with outcomes instead of tools, building and buying for ROI outcomes, and applying AI where action moves the needle.

She said the fastest-growing AI investment for ROI will show up in customer experience and employee experience because the key performance indicators are well defined. She listed CSAT, NPS, resolution time, employee productivity and cycle time as examples, and said the impact becomes immediately visible because the metrics are established and widely accepted. She closed by saying 2026 is the year AI stops being judged by potential and starts being judged by results, and customer experience and employee experience are where accountability shows up first.

Analyst feedback

Dave Vellante agreed that CX and EX are obvious places where ROI shows up and tied ROI to tokenomics, describing ROI as benefit over cost. He said Vera Rubin shipping in volume lowers the denominator, and lowering the denominator of a fraction makes the result bigger, which is what will show up in 2026. He then turned to Bob Laliberte, noting that neoclouds matter because they provide infrastructure, access to intelligence and GPUs, and their costs keep getting better for users.
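The denominator argument can be made concrete. The sketch below follows the benefit-over-cost framing used in the discussion (the more common (benefit - cost) / cost form moves in the same direction) and splits cost into a per-token component and a fixed component. The function names and all dollar and token figures are hypothetical.

```python
def inference_cost(tokens: float, price_per_million_tokens: float, fixed_cost: float) -> float:
    """Annual AI cost: metered token spend plus fixed costs (integration, staff, etc.)."""
    return tokens / 1_000_000 * price_per_million_tokens + fixed_cost


def roi(benefit: float, cost: float) -> float:
    """ROI as a benefit-over-cost ratio."""
    return benefit / cost


BENEFIT = 1_500_000.0  # hypothetical annual benefit, US$
TOKENS = 50e9          # hypothetical annual token volume
FIXED = 250_000.0      # hypothetical fixed cost, US$

before = roi(BENEFIT, inference_cost(TOKENS, 10.0, FIXED))  # $10 per million tokens
after = roi(BENEFIT, inference_cost(TOKENS, 5.0, FIXED))    # cost per token halved
print(before, after)  # 2.0 3.0
```

Halving the per-token price lifts the ratio by half rather than doubling it, because fixed costs stay in the denominator; cheaper tokens help most where token spend dominates total cost.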

Bob agreed with Sam on ROI and said that at the National Retail Federation show, people in retail were not talking about technology; they were talking about outcomes, value and return. He gave an example beyond the contact center: applying the same AI capabilities to frontline retail associates, with access to a knowledge base and inventory to answer customer questions in real time on the floor. He said these are not abstract future-state ideas; they are real and deployed today. He also connected ROI speed to neoclouds building multitenant GPU environments that help organizations scale faster and get to ROI faster, and said he’s looking forward to seeing how the stats improve as AI drives tangible benefits to frontline workers across CX and EX.

Paul Nashawaty agreed with the ROI focus but drew a distinction between delivering ROI and sustaining it at steady state. He cited 2025 research showing 40% of respondents saw higher ROI from building it themselves. He said building it yourself means you own it, and without enterprise-level support you can’t offload that debt. He said managed services and managed delivery can drive faster time to value and free up teams, but may limit feature functionality. He said he does not believe bespoke solutions will be the preferred delivery model coming out of 2026, and expects platforms and enterprise environments built by vendors to reduce complexity and address the skills gap.

Analyst prediction: Scott Hebner on decision intelligence and decision-grade agent architectures

Scott Hebner began by commenting briefly on two earlier predictions. On the OpenAI versus Anthropic call, he said there are two value factors: 1) ecosystem, which he believes favors OpenAI; and 2) quality control or guardrails, which he believes favors Anthropic. He said ecosystem is often what ultimately makes tech businesses successful, and OpenAI has an advantage there. On networking, he referenced the e-business era, saying early adjacent e-commerce sites underperformed until they were integrated into the core infrastructure, and that winners doubled down on networking to resolve latency and performance issues. He said AI follows the same pattern and needs networking power as it scales.

Hebner’s main prediction was that in 2026, winners shift from large language model-only agents to decision-grade agents built on layered architectures. He described three layers:

- An LLM plus RAG plus chain-of-thought layer for fluency and coherence

- A semantic layer for meaning and context specific to the business, its industry and region

- A causal decision intelligence layer for interactive decision analysis

He said enterprises will increasingly demand decision-grade systems because the next wave of ROI depends on decisions that hold up under enterprise scrutiny, and he argued that current agent stacks are not decision-grade today.

He tied the shift to AI trust, saying trust is becoming the limiting factor on agentic AI ROI and is slowing adoption of higher-value use cases. He cited the Agentic AI Futures Index, saying only 49% of 625 AI professionals report a high degree of trust in LLM outcomes.

He then pointed to research published in January from Carnegie Mellon, Oxford, Johns Hopkins, MIT and Northeastern, saying LLMs plus RAG plus chain-of-thought tend to misrepresent their underlying reasoning to users, fabricating explanations after the fact that do not necessarily reflect how the decision was derived. He said Johns Hopkins researchers went as far as stating that LLMs are basically lying to you.

He said users want agents that help make decisions, but they will want to intervene, ask what-if questions, test counterfactuals and see what happens if conditions change. He said trust is the currency of ROI for enterprise AI because decisions are consequential, must be explained and justified, trigger actions and investments, and invite scrutiny from regulators, risk and compliance teams. He said this drives a layered architecture in 2026, anchored in explainability, so decisions can be verified, traced and trusted enough for enterprise requirements.

He said the agentic AI ROI ramp erodes unless trust is engineered into the architecture, and winners won’t be those with the most agents, but those with the most decision-grade outcomes per agent.

He summarized the arc as:

- 2024 was the year of fluency and coherence

- 2025 was the year of automation and autonomy

- 2026 will be the year of decision intelligence, explainability and defensibility

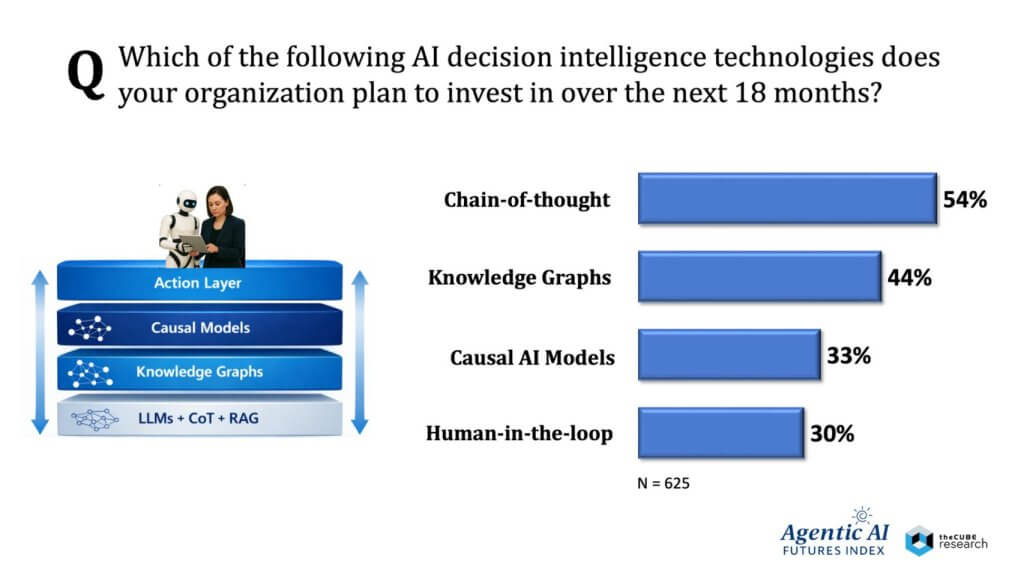

He cited the Agentic AI Futures Index investment intent signals, saying roughly 30% to 44% plan to invest in decision intelligence technologies, knowledge graphs, causal AI and human intervention capabilities over the next 18 months.

On measurement, he said semantic layer and knowledge graph deployments across global enterprises are somewhere between 5% and 10% today, maybe up to 20% in billion-dollar-plus enterprises, and he predicted that doubles in 2026 to reach 40% in larger enterprises. For the causal layer, he said it is about 3% to 5% today across all enterprises, maybe 15% in larger enterprises, and he predicted that triples in 2026.
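Writing the measurement down makes it easier to score next year. The sketch below encodes the stated baselines as ranges, treating “maybe up to 20%” and “maybe 15%” as single-point values, and applies the predicted multipliers (2x for semantic layers and knowledge graphs, 3x for the causal layer). The segment labels and the `targets` helper are illustrative.

```python
# (low %, high %) adoption today and the predicted multiplier for 2026.
BASELINES = {
    "semantic/knowledge graph, all enterprises": ((5.0, 10.0), 2),
    "semantic/knowledge graph, $1B+ enterprises": ((20.0, 20.0), 2),
    "causal layer, all enterprises": ((3.0, 5.0), 3),
    "causal layer, larger enterprises": ((15.0, 15.0), 3),
}


def targets(baselines: dict) -> dict:
    """Predicted 2026 adoption range for each segment."""
    return {
        segment: (low * multiplier, high * multiplier)
        for segment, ((low, high), multiplier) in baselines.items()
    }


for segment, (low, high) in targets(BASELINES).items():
    print(f"{segment}: {low:.0f}% to {high:.0f}%")
```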

Analyst feedback

Paul Nashawaty said Hebner’s predictions are aggressive and that’s where the market wants to go, but he raised an adoption concern. He said vendors are often about three years ahead of broad adoption, and he questioned whether most organizations are ready for a 2026 decision intelligence and defensibility push given skill gaps, complexity and maturity. He asked how Hebner rationalizes competitive advantage for organizations that don’t have a starting point.

Hebner responded that he would not have workers and executives make decisions off LLM-based systems, because doing so creates a liability trap. He said “AI told me to do it” is not a defensible position, referenced a Delaware fiduciary law example, and said corporate officers still need to explain and prove their decisions. He said enterprises face a choice: Deploy untrustworthy systems and accept auditability and compliance risk, or slow down and do it right from the beginning. He said an LLM-only architecture is not sufficient, chain-of-thought is not reasoning, and enterprises need meaning and context. He gave an example distinguishing correlation from causation using humidity and pipe condensation, saying the causal direction changes which actions and investments make sense. He said the trust issue is real and is why deployments are slowing, and these layers help create confidence for knowledge workers.
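Hebner’s humidity example maps onto a basic idea from causal inference: observing that two variables move together does not tell you what happens when you intervene on one of them. The toy structural causal model below assumes humidity drives pipe condensation, not the reverse; the functional form, threshold and noise level are made up for illustration. Forcing condensation (wiping the pipe) leaves humidity unchanged, while forcing humidity (running a dehumidifier) changes condensation.

```python
import random
from statistics import mean


def simulate(n=10_000, do_humidity=None, do_condensation=None, seed=0):
    """Sample (humidity, condensation) pairs from a toy model where humidity causes condensation.

    Passing do_humidity or do_condensation replaces that variable's mechanism with a fixed
    value, which is what an intervention means in a structural causal model.
    """
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        humidity = do_humidity if do_humidity is not None else rng.uniform(0.2, 0.9)
        if do_condensation is not None:
            condensation = do_condensation
        else:
            # Condensation appears once humidity passes a (made-up) threshold, plus noise.
            condensation = max(0.0, humidity - 0.5 + rng.gauss(0.0, 0.05))
        rows.append((humidity, condensation))
    return rows


observed = simulate()
wiped = simulate(do_condensation=0.0)  # intervene on the effect
dry = simulate(do_humidity=0.3)        # intervene on the cause
humid = simulate(do_humidity=0.9)

print("mean humidity, observed vs. pipe wiped:",
      round(mean(h for h, _ in observed), 2), round(mean(h for h, _ in wiped), 2))
print("mean condensation, dry vs. humid room:",
      round(mean(c for _, c in dry), 2), round(mean(c for _, c in humid), 2))
```

A model that only learned the correlation could recommend wiping the pipes to lower humidity; encoding the causal direction is what rules that out, which is the kind of what-if reasoning a causal decision layer is meant to support.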

Nashawaty added that accountability pressures are rising through regulation and compliance, citing the EU Cyber Resilience Act, or CRA, with reporting requirements for applications starting in September 2026 and broader compliance requirements by December 2027, with penalties for noncompliance. He said accountability and experimentation must come together.

Hebner said people should look at Microsoft and IBM Corp., which he said have integrated semantic layers and knowledge graphs into what they are doing, and he suggested AWS appears to be moving in that direction as well. He said enterprise players will incorporate knowledge graphs as a step, and over time understanding causality becomes important to know how the system is being used. He said the goal is trustworthy AI that is traceable, auditable and explained, and that is why he expects greater investment.

Vellante cited Palantir Inc. as winning today with the equivalent of a knowledge graph, a 4D map of the enterprise, and mentioned Salesforce Inc., ServiceNow Inc. and Celonis SE as moving in that direction. He said AI trust is currently a blocker and adoption will be choppy, with bad things happening along the way, but enterprises have to build trust into the system or risk harm to their market cap and reputation.

Hebner closed by adding that Anthropic is starting to use knowledge graphs in workflow elements, and said the enterprise trick is democratizing the tooling so you don’t need a Ph.D., with knowledge graphs becoming part of agentic architectures while larger enterprises engineer deeper implementations. Vellante noted that knowledge graphs historically were expressive but hard to query, and said the step change is that now you can talk to them rather than going back to older query approaches.

Analyst prediction: Christophe Bertrand on cyber resilience as the prerequisite for scaling AI

Christophe Bertrand said cyber resilience becomes the prerequisite for scaling AI, and that it is not only about backup, it is about recovery across the application portfolio. He said it feels like Groundhog Day because the industry has been here before, citing earlier adoption cycles where trust blocked progress, including cloud and other technologies. He said AI will evolve the same way, but it is early and the basics still matter.

He said the infrastructure has to be cyber resilient and the data has to be resilient. He said if you don’t have data resilience injected into infrastructure, governed and compliant, you can’t get to the outcomes the panel was describing. He said people aren’t getting ROI and many don’t know what risks they are running.

He cited research indicating AI deployments are maturing, with about 45% of respondents self-reporting AI fully integrated into some business processes and about 30% saying they are in development. He said a lack of trust and ROI issues are showing up due to multiple challenges. He said the No. 1 challenge respondents cite is high costs, with costs out of control from an implementation standpoint. He said the second challenge is cyber resiliency and security issues, trailing the No. 1 challenge by only a small margin, making it effectively tied for No. 1. He said other challenges include skill sets and integrations, and that the lack of maturity is consistent with prior technology adoption patterns.

He said AI is creating significant additional data and organizations don’t yet know what that looks like, creating fertile ground for attackers. He said AI infrastructure is often nascent and not well governed, with people not waking up thinking about recoverability, full security, and related basics. He said there have already been instances of attacks on AI infrastructure, and attackers are also leveraging AI.

He described survey results on AI-related attacks, noting that it was a pick-your-poison question and results did not add up to 100%. He said 46% cited data inference attacks, 36% cited data poisoning and 36% cited data corruption, with exfiltration and unauthorized access also ranking high. He noted unauthorized access was No. 5, which he suggested should be easier to control in a restricted infrastructure, but it still showed up.
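The attack percentages exceed 100% in total because each respondent could select several answers, so each share is computed against the number of respondents, not the number of selections. The toy responses and the `response_shares` helper below are hypothetical and only illustrate the arithmetic.

```python
from collections import Counter


def response_shares(responses: list) -> dict:
    """Percent of respondents selecting each option in a multiple-response question."""
    counts = Counter(option for answer in responses for option in set(answer))
    return {option: 100 * count / len(responses) for option, count in counts.items()}


# Four hypothetical respondents, each allowed to pick any number of attack types.
responses = [
    {"data inference", "data poisoning"},
    {"data inference", "data corruption"},
    {"exfiltration"},
    {"data inference", "data poisoning", "data corruption"},
]

shares = response_shares(responses)
print(shares["data inference"])  # 75.0
print(sum(shares.values()))      # 200.0
```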

He said these attacks wouldn’t be as big a problem if organizations had strong recovery and backup practices. He cited research that only 11% said they were backing up more than 75% of AI-generated data. He said that was only about the produced data and not even about protecting the infrastructure itself, and that the value and ROI is in what is produced, yet many are not backing it up.

He said challenges around data governance and compliance remain significant. He said scaling AI requires trust, and trust starts with trusted infrastructure, trusted and protected data, cyber resilient data that can be recovered, and compliant and governed data so organizations don’t use data that creates business risk. He said compliance has multiple layers: compliance of the data used and compliance of the decision-making process, including auditability and traceability. He tied that back to the need for decision auditability in agentic systems.

He described 2026 as a “back to reality” year focused on getting governance, compliance, data resiliency, infrastructure resiliency and network resiliency in place so organizations know what outcomes they will get when attacked and can recover and protect themselves. He predicted cyber resiliency becomes even more relevant for years to come, intertwined with data governance and compliance. He also predicted more M&A activity as cyber resilience increasingly combines backup, storage, data management and cybersecurity, with blurred lines creating new challenges for end users in choosing ecosystems and placing bets.

When asked who gets acquired, he said he would not name names, but suggested surprises are possible given private equity dynamics, potential initial public offering paths and the tendency for products to become features.

Analyst feedback

Samantha Weston said the prediction is a needed buzzkill and that the reality check is important. She said hesitation around AI adoption is tied to a lack of maturity in governance and compliance, and that there is limited guidance on what maturity looks like. She said at the federal level existing law is being applied to AI, and at the state level a patchwork is emerging, citing Colorado action on algorithmic discrimination and California action requiring transparency and disclosure of training data. She said this is not enough to cover the risks of a fast-advancing technology, leaving organizations to set policies independently, which she said will go wrong. She asked whether the market will see an uptick in federal and state regulation, or whether open standards or an independent regulatory body will emerge.

Bertrand said it depends on geography. He said Europe has already started with regulations and expects more. He said the U.S. is more polarized and may prefer less regulation, but global companies will adopt the highest compliance bar needed to transact globally, similar to GDPR. He said smaller businesses may face less regulatory pressure, depending on where they operate.

He then re-emphasized compliance risk in two parts. First, you cannot use noncompliant data, and you cannot feed any data into a model without being careful about exposing private or proprietary data. Second, outcomes, auditability and fairness matter when AI is used for decision-making, and he referenced a discussion with an end user in the credit-decisioning space as an example.

He said he expects guidelines from industry groups and more regulation from Europe, likely some from parts of Asia, and fragmented state-driven approaches in North America that may eventually become federal. He said these dynamics will be exacerbated by cyber resiliency failures, and he predicted some spectacular AI-related cyber resilience issues that will be very public.

On balance, we concur with Christophe’s prediction, and we fully expect some notable negative headlines in 2026 as a result of poor security and governance practices. But we believe that won’t stop the AI train, and that people will keep rolling on.

Damn the torpedoes – full speed ahead….

Disclaimer: All statements made regarding companies or securities are strictly beliefs, points of view and opinions held by News Media, Enterprise Technology Research, other guests on theCUBE and guest writers. Such statements are not recommendations by these individuals to buy, sell or hold any security. The content presented does not constitute investment advice and should not be used as the basis for any investment decision. You and only you are responsible for your investment decisions.

Disclosure: Many of the companies cited in Breaking Analysis are sponsors of theCUBE and/or clients of theCUBE Research. None of these firms or other companies have any editorial control over or advance viewing of what’s published in Breaking Analysis.

Image: theCUBE Research/Reve


About News Media

Founded by tech visionaries John Furrier and Dave Vellante, News Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.