For years, artificial intelligence was a distant promise, an abstract idea fueled by papers, simulations, expectations and a lot of imagination. But that distance has been shrinking with disconcerting speed. Today, conversational models are no longer an oddity or a laboratory tool: they are everyday interfaces, personal assistants, search extensions, and work platforms. In this context of accelerated progress, Gemini 3 emerges as Google’s most ambitious bet, not only for its technical power, but for its declared intention: to build an AI that truly understands, converses and acts with judgment.

The path to Gemini 3 began with a fundamental strategic decision: merging Google’s artificial intelligence divisions, Brain and DeepMind, under a single technical direction. That move marked the beginning of a unified approach, with explicit ambitions to build more useful, versatile and secure models. The first version of Gemini debuted in late 2023, quickly followed by Gemini 1.5 in early 2024, which introduced notable improvements to long-context handling and smoother integration with Google products. But those versions were still transitional stages: powerful, yes, but also stepping stones to something more.

Gemini 3 represents that “something more.” It is the first model built entirely under that joint vision of DeepMind and Google Research, designed from its architecture to its training to address tasks with greater depth and precision. It is no longer just about generating coherent text, but about understanding intent, holding complex conversations and adapting to the context of each user. In the words of Sundar Pichai, CEO of Google, it is about making AI “truly useful.” And that usefulness begins by understanding not only what is said, but what is meant.

Gemini 3 technical capabilities and performance

One of the areas where Gemini 3 shows a clear jump is reasoning. The model has been trained to tackle tasks that require not just superficial memory or logic, but structured reflection and problem solving. This translates into responses that are more tailored to specific contexts, a better ability to handle ambiguity, and a finer understanding of the intent behind a question. Google claims that the model not only responds, but that it “thinks before responding,” and although that statement has more value as a metaphor than as a literal description, the change in the quality of the output is tangible.

In terms of multimodal processing, Gemini 3 has significantly improved its ability to work with different types of combined information: text, images, audio, and code. This allows it to handle tasks that were previously split across different models. It can analyze an image, contextualize it within a conversation, interpret source code, and even reason about complex data with a fluidity that was not possible in previous generations. In addition, it is capable of maintaining long conversations without losing coherence, which addresses one of the persistent problems in previous models: the fragility of extended context.

All this is reflected in the results obtained in multiple benchmarks. Although Google has not published all the comparative details, it has claimed that Gemini 3 far outperforms its predecessors in standard tests of understanding, coding and reasoning, and competes directly with leading models such as GPT-4 and Claude 2. In addition, the model’s alignment and security mechanisms have been reinforced, incorporating new quality filters and controls that allow better management of the tone, veracity and usefulness of the responses generated.

Available models and functional innovations

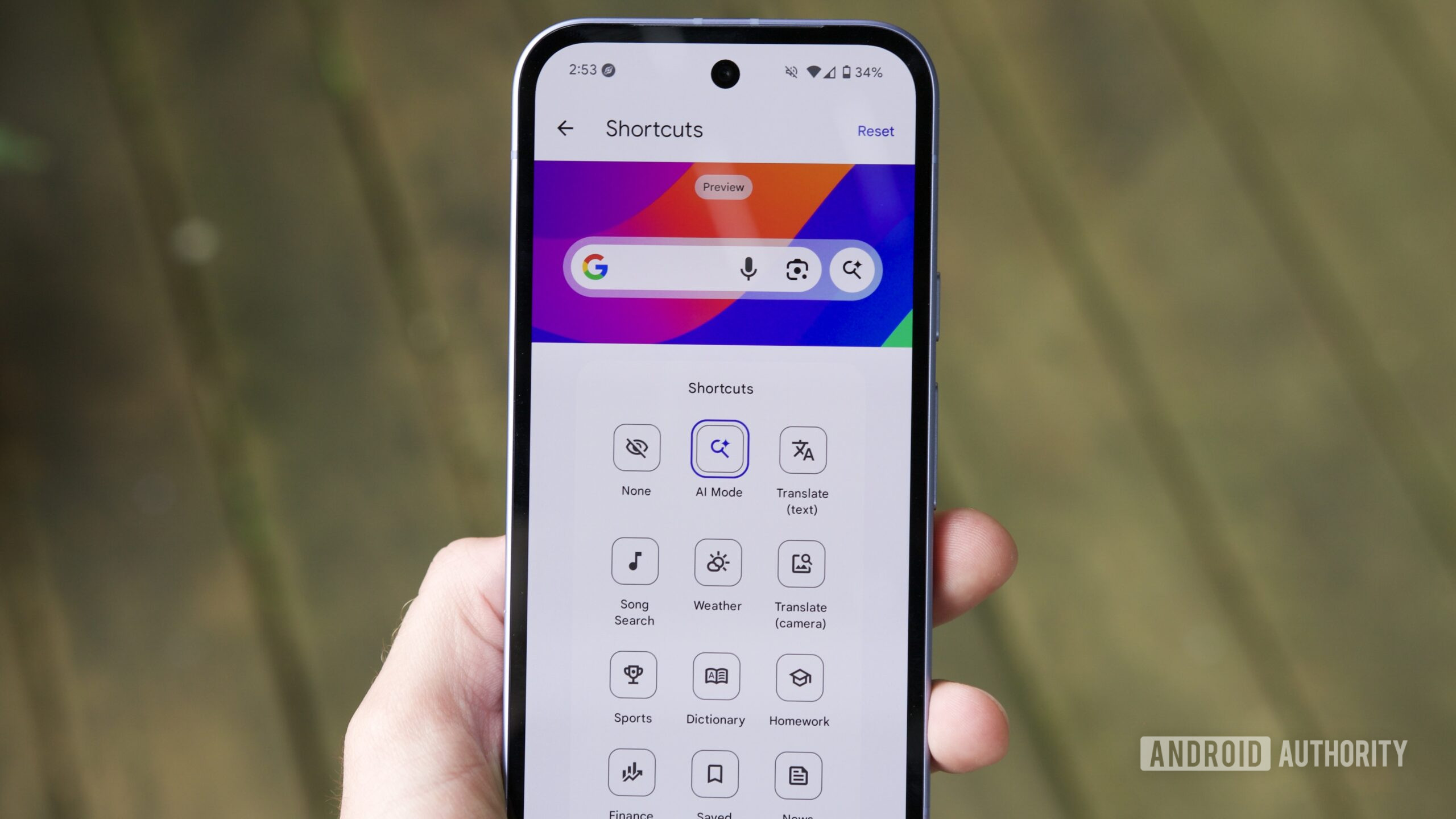

Gemini 3 has been deployed in several versions, starting with Gemini 3 Pro, the model that is already available in the Gemini app and in products like Gmail, Docs, and other Workspace tools. This version represents Google’s new operating standard in its services, replacing previous models without requiring any changes from the user. It is a silent but strategic integration, which introduces profound improvements to the experience without altering the interface: more precise responses, more contextual suggestions and a more sustained dialogue even in complex tasks.

In addition, Google is preparing the launch of Gemini 3 Ultra, the most advanced model of this new generation. Although it is not yet available to the general public, it is in the testing phase and will be launched in the coming weeks, as confirmed by the company. The difference between the versions lies mainly in the size of the model and its capacity for more complex tasks. Ultra will be aimed at more demanding applications, such as personal assistants with expanded functions, large-scale code generation, or automated tutoring with detailed tracking and feedback.

One of the most interesting developments, as we mentioned before, is the introduction of Deep Think, a feature designed to enable a slower and more exhaustive type of reasoning. Instead of being limited to immediate responses, this option allows the model to “reflect” before responding, evaluating possible solutions in more depth. For now, it is available only to select testers and users, but it anticipates a clear direction: AI that not only reacts, but analyzes. This capability, applied to the analysis of long documents, data interpretation or advanced programming, could transform many of the tasks that we continue to solve manually today.

Deployment in the Google ecosystem and development tools

The Gemini 3 launch is not limited to the model itself, but is part of a total integration strategy within the Google ecosystem. From day one, the model has been deployed in the Gemini app (available on Android and via the web on iOS) as a general conversational assistant. It has also reached Workspace products at the same time, including Gmail, Docs, Sheets and Slides. In all these cases, the user benefits from the new model without having to modify their workflow: the AI adapts to the context, improves the quality of the suggestions and responds more precisely to the specific needs of each tool.

In parallel, Gemini 3 is now available to developers and enterprises through the Vertex AI and AI Studio platforms. This means that any organization already working with Google cloud solutions can integrate the new model into their own applications, automated flows or data analysis environments. This opening reinforces Google’s role as an AI provider not only for the end user, but also for the productive, educational and scientific fabric. The model can be customized, adjusted by domain and scaled according to the needs of each client.

Another highlight is the integration of Gemini 3 into Antigravity, Google’s experimental environment for testing advanced AI features before their public release. In this environment, developers can experiment with new capabilities, specialized agents, and complex conversational flows, making Gemini not just a tool, but an evolving platform. The commitment to a global and simultaneous deployment, without intermediate phases or limited regional versions, reinforces Google’s message: Gemini 3 is ready to operate, and it does so from the core of its ecosystem.

Strategic vision and what comes next

Beyond immediate capabilities, Gemini 3 represents a key piece in Google’s strategy towards more autonomous, personalized and useful artificial intelligence. The company has insisted that its goal is not only to build conversational models, but to lay the foundation for a new generation of intelligent agents. These agents will not be limited to answering questions, but will be able to execute tasks, anticipate needs, adapt to personal contexts and collaborate with humans in increasingly complex environments. It is a vision that brings AI closer to the role of a real co-pilot in multiple spheres: from daily work to continuous learning.

Among the most relevant intended uses are: programming, educational support, professional assistance and content creation. Gemini is being trained not only to understand questions, but also to offer explanations, debug code, tutor students or write documents with editorial criteria. This functional breadth is intended to be rolled out gradually, starting with secure and controlled environments, but with a view to freer adoption as governance, customization and ethical alignment tools are refined.

In this framework, Google seems determined to compete not only on the model itself, but also on its ability to offer real value in specific products. Faced with rivals such as OpenAI with GPT-4 or Anthropic with Claude, whose power is undeniable but whose applications are still more fragmented, Google’s advantage lies in the depth of its ecosystem: search engine, email, productivity, cloud and mobile. Gemini 3 doesn’t just want to be a better model; it wants to be everywhere we already work and live digitally.

Personally, I am less impressed by the isolated power of the model than by its silent integration into everyday life. Gemini 3 is not an experiment, nor an isolated product, nor a promising beta: it is infrastructure that is already working, embedded in tools that millions of people use every day. That marks a key difference from previous stages of artificial intelligence. We are not just testing what an AI can do; we are living with it. And from now on, the most important thing will not be what we ask of it, but what we allow it to learn from us.
