For decades, CPUs (central processing units) were the backbone of modern computing. From personal computers to powerful servers, CPUs handled the vast majority of tasks thanks to their ability to execute instructions sequentially and efficiently. In recent years, however, a quiet revolution has transformed this landscape. The GPU (graphics processing unit), originally designed to handle the complex calculations behind video game graphics and visual rendering, has emerged as the new queen of computing.

And it’s not just a matter of popularity or trend. The rise of the GPU rests on fundamental architectural differences that suit today’s most demanding workloads. From artificial intelligence and scientific simulation to blockchain technologies and real-time graphics rendering, GPUs have become indispensable.

A shift in architectural power

The central reason for this change lies in GPU architecture. CPUs typically have fewer cores, each more powerful and optimized for sequential processing, whereas GPUs feature thousands of smaller, more efficient cores that excel at parallel processing. This design lets a GPU perform a huge number of calculations simultaneously, making it ideal for tasks that must process large volumes of data quickly.
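To make the sequential-versus-parallel distinction concrete, here is a minimal Python sketch of a data-parallel computation. It uses SAXPY (computing a·x + y element by element), a classic example chosen here for illustration; the function names and the thread-pool chunking are assumptions of this sketch, not a GPU programming API. Because every element is independent, the index range can be split among many workers, which is the pattern a GPU exploits with thousands of cores. Python threads do not actually run this arithmetic in parallel (the interpreter's global lock serializes it), so the sketch shows the decomposition, not the speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def saxpy_sequential(a, x, y):
    """CPU-style: a single worker walks through every element in order."""
    return [a * xi + yi for xi, yi in zip(x, y)]


def saxpy_data_parallel(a, x, y, workers=8):
    """GPU-style decomposition (conceptual sketch, hypothetical helper).

    Each output element depends only on x[i] and y[i], so the index range
    is split into independent chunks that separate workers can process at
    the same time. A real GPU would use thousands of hardware threads.
    """
    n = len(x)
    chunk = max(1, (n + workers - 1) // workers)  # ceiling division, at least 1
    out = [0.0] * n

    def kernel(start):
        # Each worker handles its own slice; no slice reads another's results.
        for i in range(start, min(start + chunk, n)):
            out[i] = a * x[i] + y[i]

    with ThreadPoolExecutor(max_workers=workers) as pool:
        list(pool.map(kernel, range(0, n, chunk)))  # wait for all chunks
    return out


if __name__ == "__main__":
    x = [float(i) for i in range(10)]
    y = [1.0] * 10
    # Both strategies compute the same result; only the work split differs.
    print(saxpy_data_parallel(2.0, x, y) == saxpy_sequential(2.0, x, y))
```

On a real GPU, a platform such as CUDA would typically launch one lightweight thread per element rather than a handful of chunked workers; the property that makes this possible, that no element depends on another, is the same.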

In areas such as artificial intelligence and deep learning, this is essential. Training a complex neural network on a CPU could take weeks, while a GPU can handle the same workload in a fraction of the time. This speed has driven innovation across numerous sectors, allowing researchers and companies to iterate faster and achieve results that were previously impossible.

AI, Big Data and beyond

Artificial intelligence has probably been the biggest beneficiary of the GPU revolution. Training and deploying deep neural networks requires enormous computational power. GPUs not only provide the necessary speed, but also scalability for these tasks. Companies like OpenAI, Meta, and Google rely heavily on GPU-based infrastructures for their large-scale AI projects.

Big data analytics has also been transformed. Processing terabytes of information in distributed systems becomes much more manageable with GPU acceleration. This has had implications in sectors such as finance, healthcare, and retail, where the speed of turning data into insight can translate into a competitive advantage.

High Performance Computing (HPC)

GPUs have also found a crucial place in the scientific and engineering communities. High-performance computing tasks such as climate modeling, genome sequencing, and physics simulations require enormous amounts of processing power, and this is where GPUs shine. Their ability to handle parallel workloads means simulations that once took months can now run in days or even hours.

Institutions such as CERN, NASA, and leading universities around the world now rely on GPU clusters to push the boundaries of knowledge. The scalability of GPUs has opened up new possibilities in scientific discovery.

The evolution of the ecosystem

Software support has played a critical role in this change. Platforms like NVIDIA’s CUDA and AMD’s ROCm have matured significantly, offering robust ecosystems for developers. Machine learning frameworks such as TensorFlow and PyTorch are designed to take advantage of GPU acceleration, making it easier for engineers and data scientists to write code that harnesses GPUs without in-depth knowledge of parallel programming.

These frameworks also integrate seamlessly with cloud platforms such as AWS, Google Cloud, and Azure. Now, businesses of all sizes can access high-performance GPU instances on demand, democratizing access to power that was previously reserved only for large corporations.

Economic and industrial impacts

The rise of GPUs has dramatically transformed the semiconductor industry. NVIDIA, once considered a niche graphics card company, is now among the most valuable technology companies globally. AMD and Intel have responded by accelerating the development of their own GPUs, leading to fierce competition and rapid innovation.

High demand for GPUs has even led to supply chain disruptions and global shortages. The race for access to powerful chips has become a geopolitical issue, with governments recognizing the strategic importance of semiconductor manufacturing.

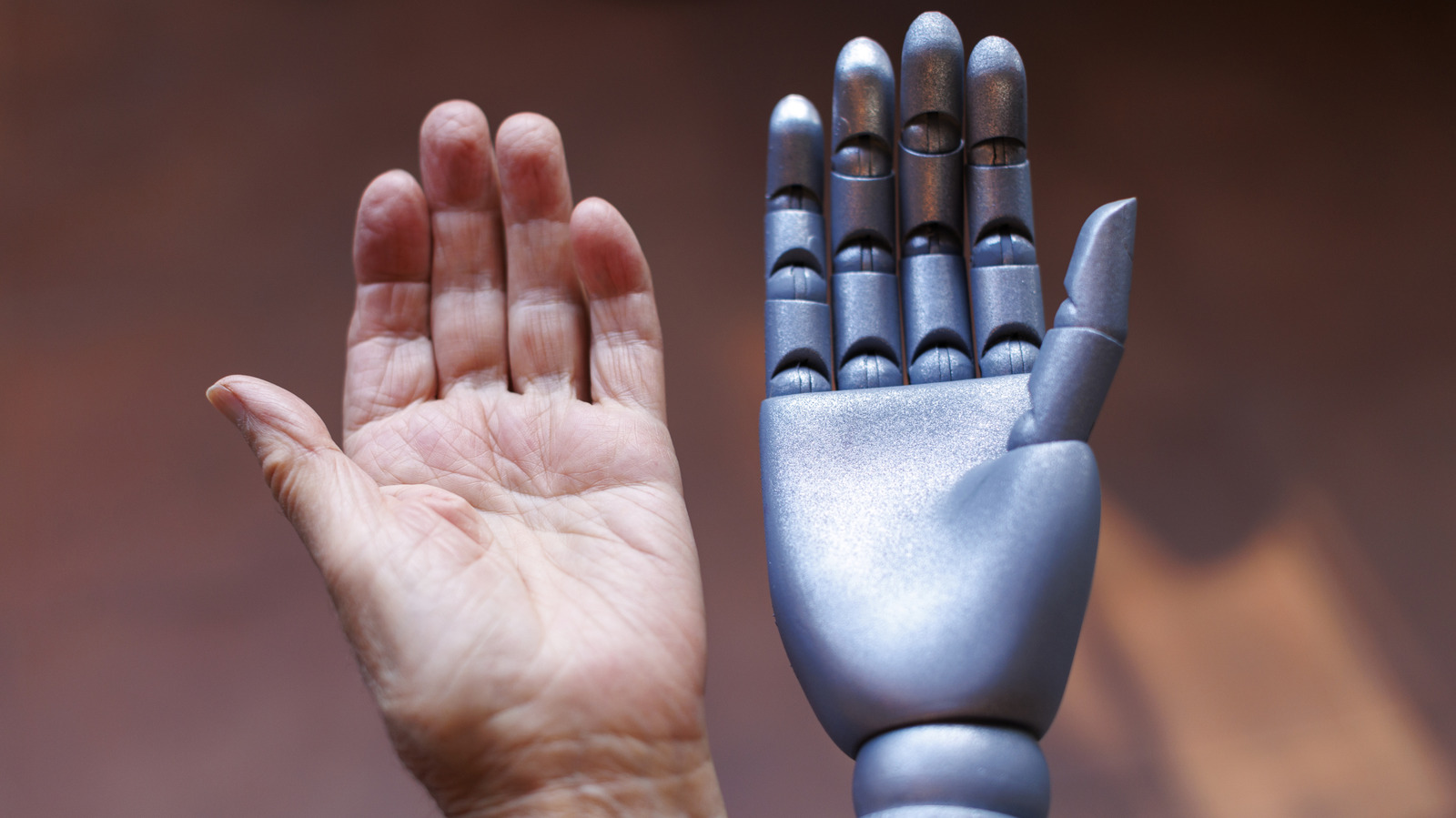

CPUs still have their place

Despite the dominance of GPUs in many sectors, CPUs remain important. They are better suited to tasks that require low latency and strong single-threaded performance, such as managing operating systems, running traditional business applications, and handling light multitasking. Most modern systems still rely on a combination of the two, with the CPU coordinating the system and the GPU handling the bulk of the computational load.

But in the most advanced and fast-growing technology segments, the CPU is no longer the protagonist. It is the assistant, the manager that delegates the heavy work to the GPU.

Energy efficiency and challenges

A common criticism of GPUs is their power consumption. High-performance GPUs can draw several hundred watts, raising concerns about sustainability. However, when performance per watt is measured on parallel workloads, GPUs are typically more efficient than CPUs.
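The apparent paradox (higher power draw, yet better efficiency) comes down to the fact that energy is power multiplied by time. A minimal sketch follows; the function names are illustrative, and the workload size, wattages, and runtimes are hypothetical figures chosen only to show the arithmetic, not measurements of any real CPU or GPU.

```python
def energy_joules(power_watts: float, runtime_seconds: float) -> float:
    """Energy consumed by a job: E = P * t (watts x seconds = joules)."""
    return power_watts * runtime_seconds


def perf_per_watt(work_units: float, runtime_seconds: float, power_watts: float) -> float:
    """Throughput (work units per second) divided by average power draw.

    Equivalent to work_units / energy, i.e. useful work per joule.
    """
    return (work_units / runtime_seconds) / power_watts


if __name__ == "__main__":
    # Hypothetical job of 1,000 work units (illustrative numbers only).
    cpu_energy = energy_joules(200, 100)  # 200 W for 100 s -> 20,000 J
    gpu_energy = energy_joules(400, 10)   # 400 W for 10 s  ->  4,000 J
    print(cpu_energy, gpu_energy)
    print(perf_per_watt(1000, 100, 200))  # CPU: 0.05 units per joule
    print(perf_per_watt(1000, 10, 400))   # GPU: 0.25 units per joule
```

In this hypothetical case the GPU draws twice the power but finishes ten times sooner, so it uses one fifth of the energy and delivers five times the work per watt.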

Ongoing advances in chip design, cooling technology, and software optimization continue to address these concerns. NVIDIA’s Hopper and AMD’s CDNA architectures, for example, focus on delivering better power efficiency and thermal performance.

Looking to the future

So what does the future hold? As our world becomes increasingly driven by data and automation, demand for parallel processing will only grow. Generative AI, autonomous vehicles, and virtual and augmented reality all rely heavily on the capabilities of GPUs.

In fact, we could see a future in which GPU-like architectures dominate even general-purpose computing. Hybrid chips that combine CPU and GPU functions are already gaining traction, especially in mobile and consumer computing. Apple’s M-series chips and Qualcomm’s Snapdragon line suggest what this future could look like.

In the past, the CPU was the undisputed center of computing. But today, the GPU has taken that crown, not by completely replacing the CPU, but by surpassing it in relevance, performance, and versatility for the demands of modern computing.

As new challenges and opportunities emerge, GPU dominance is expected to continue to grow. The era of the CPU as king is over. Long live the GPU!

By Frank Scheufens, Product Manager, Professional Visualization, PNY Technologies