The Nobel Prize ceremony took place in December this year, celebrating both work in artificial intelligence and the Nihon Hidankyo group’s efforts to end nuclear war.

As a mathematician who studies how deep learning works, I found the combination striking. In the first half of the 20th century, Nobel committees awarded prizes in physics and chemistry for discoveries that revealed the structure of atoms. This work also made possible the development and subsequent deployment of nuclear weapons. Decades later, this year’s Nobel Peace Prize was awarded for work to counter the way nuclear science was ultimately used.

There are parallels between the development of nuclear weapons based on basic physics research, and the risks posed by applications of AI that emerge from work that began as basic research in computer science. These include the new Trump administration’s push for ‘Manhattan Projects’ for AI, as well as a broader spectrum of societal risks, including disinformation, job displacement and surveillance.

I fear that my colleagues and I are not sufficiently connected to the effects that our work can have. Will the Nobel Committees in the next century award a Peace Prize to the people who clean up the mess left behind by AI scientists? I am determined that we do not repeat the nuclear weapons story.

About 80 years ago, hundreds of the world’s top scientists joined the Manhattan Project in a race to build an atomic weapon before the Nazis did. But after the German bomb effort stalled in 1944, and even after Germany surrendered the following year, work at Los Alamos continued without interruption.

Even when the Nazi threat was over, only one Manhattan Project scientist – Joseph Rotblat – left the project. Looking back, Rotblat explained: “You get involved in a certain way and forget that you are human. It becomes an addiction and you just continue to create a gadget, without thinking about the consequences. And then, after you do this, you find a justification for producing it. Not the other way around.”

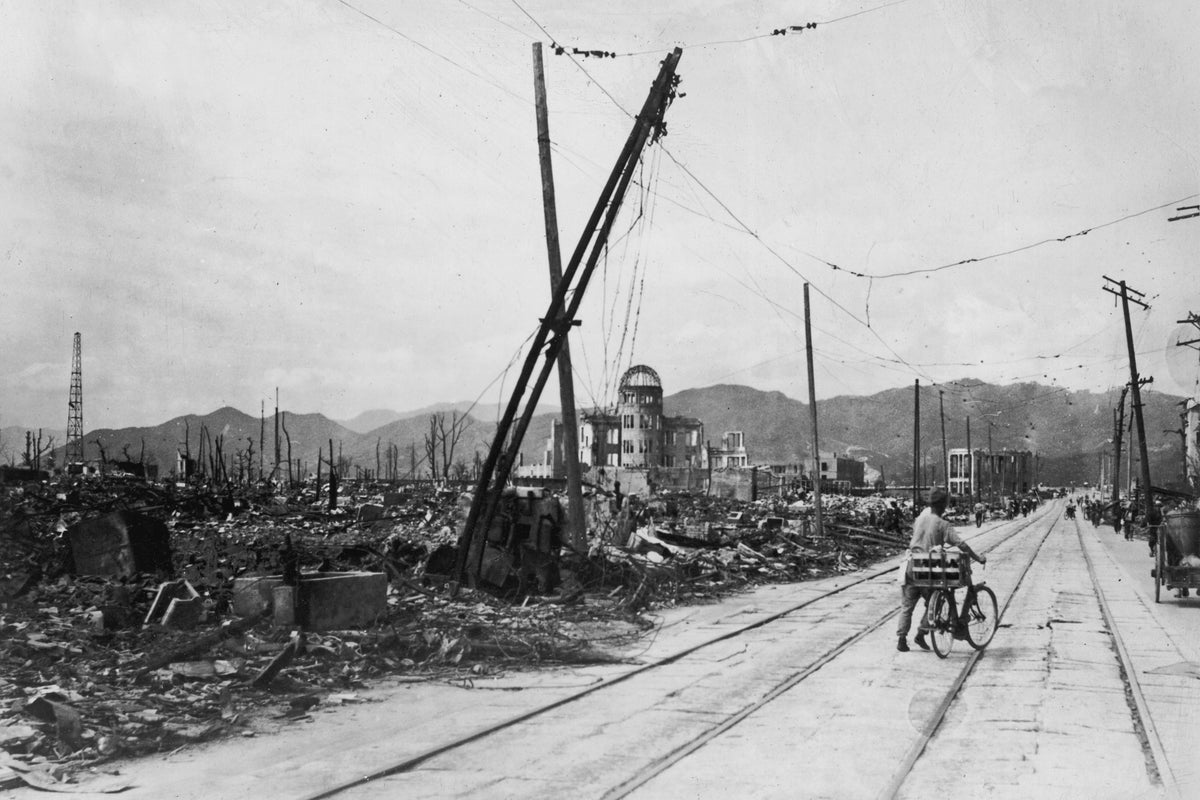

Shortly afterwards, the US military conducted the first nuclear test. Then American leaders authorized the twin bombings of Hiroshima and Nagasaki on August 6 and 9, 1945. The bombs killed hundreds of thousands of Japanese civilians, some instantly. Others died years and even decades later from the effects of radiation.

Although Rotblat’s words were written decades ago, they are an eerily accurate description of the prevailing ethos in current AI research.

I first began to see parallels between nuclear weapons and artificial intelligence while working at the Institute for Advanced Study in Princeton, where the haunting final scene of Christopher Nolan’s film Oppenheimer was set. After making some progress in understanding the mathematical innards of artificial neural networks, I began to worry about the eventual social implications of my work. On the advice of a colleague, I talked to the then director of the institute, physicist Robbert Dijkgraaf.

He suggested that I look to the life story of J. Robert Oppenheimer for guidance. I read one biography and then another. I tried to guess what Dijkgraaf had in mind, but I saw nothing appealing in Oppenheimer’s path. By the time I finished the third biography, the only thing that was clear to me was that I didn’t want my own life to be a reflection of his. I didn’t want to reach the end of my life with a burden like Oppenheimer’s.

Oppenheimer is often quoted as saying that when scientists see “something that is technically sweet,” they “go ahead and do it.” Geoff Hinton, one of the winners of the 2024 Nobel Prize in Physics, has referred to this remark. But it is not universally true. The leading female physicist of the time, Lise Meitner, was asked to join the Manhattan Project. Despite being Jewish and having narrowly escaped the Nazis, she flatly refused, saying, “I will have nothing to do with a bomb!”

Rotblat offers another model for how scientists can put their talent to use without losing sight of their values. After the war he returned to physics, focusing on medical applications of radiation. He also became a leader in the nuclear nonproliferation movement through the Pugwash Conferences on Science and World Affairs, a group he co-founded in 1957. In 1995, he and his colleagues received a Nobel Peace Prize for this work.

Now, as then, there are thoughtful, grounded individuals who stand out in the development of AI. With an attitude reminiscent of Rotblat, Ed Newton-Rex resigned last year as leader of the music generation team at Stability AI, over the company’s push to create generative AI models trained on copyrighted data without paying for that use. This year, Suchir Balaji resigned as a researcher at OpenAI over similar concerns.

Echoing Meitner’s refusal to work on military applications of her discoveries, Meredith Whittaker raised employee concerns at an internal company town hall in 2018 about Project Maven, a Department of Defense contract to develop AI for military drone targeting and surveillance. Ultimately, workers managed to pressure Google, where 2024 Nobel Prize winner for chemistry Demis Hassabis works, to drop the project.

There are many ways in which society influences the way scientists work. A direct one is financial: collectively, we choose which research we fund, and individually, we choose which products from that research we pay for.

An indirect but very effective factor is prestige. Most scientists care about their legacy. When we look back on the nuclear age – for example, when we choose to make a film about Oppenheimer, out of all the scientists of that era – we send a signal to scientists today about what we consider important. When the Nobel Prize Committees choose which of the people working on AI today to honor with Nobel Prizes, they provide a powerful incentive for the AI researchers of today and tomorrow.

It is too late to change the events of the 20th century, but we can hope for better outcomes for AI. We can start by looking past those in machine learning who focus on the rapid development of capabilities, and instead follow the example of people like Newton-Rex and Whittaker, who insist on considering the context of their work and who are able not only to evaluate changing circumstances, but also to respond to them. Paying attention to what scientists like them say offers the best hope for positive scientific development now and in the future.

As a society, we have a choice about who we want to elevate, emulate, and uphold as role models for the next generation. As the nuclear age teaches us, now is the time to carefully evaluate which applications of scientific discoveries, and which of today’s scientists, reflect the values not of the world we currently live in, but of the world in which we hope to live.

This is an opinion and analysis article, and the views of the author or authors are not necessarily those of Scientific American.