Uber engineers have described how they evolved their distributed storage platform from static rate limiting to a priority-aware load management system to protect their in-house databases. The change addressed the limitations of QPS-based rate limiting in large, stateful, multi-tenant systems, which did not reflect actual load, handle noisy neighbors, or protect tail latency.

The design protects Docstore and Schemaless, built on MySQL® and serving traffic through thousands of microservices supporting over 170 million monthly active users, including riders, Uber Eats users, drivers, and couriers. By prioritizing critical traffic and adapting dynamically to system conditions, the system prevents cascading overloads and maintains performance at scale.

Uber engineers noted that early quota-based approaches relied on static limits enforced through centralized tracking but proved ineffective. Stateless routing layers lacked timely visibility into partition-level load, and requests of similar size imposed varying CPU, memory, or I/O costs. Operators frequently retuned limits, sometimes shedding healthy traffic while overloaded partitions remained unprotected.

As Dhyanam V., an Uber engineer, noted in a LinkedIn post:

> Overload protection in stateful databases is a multi-dimensional problem at scale.

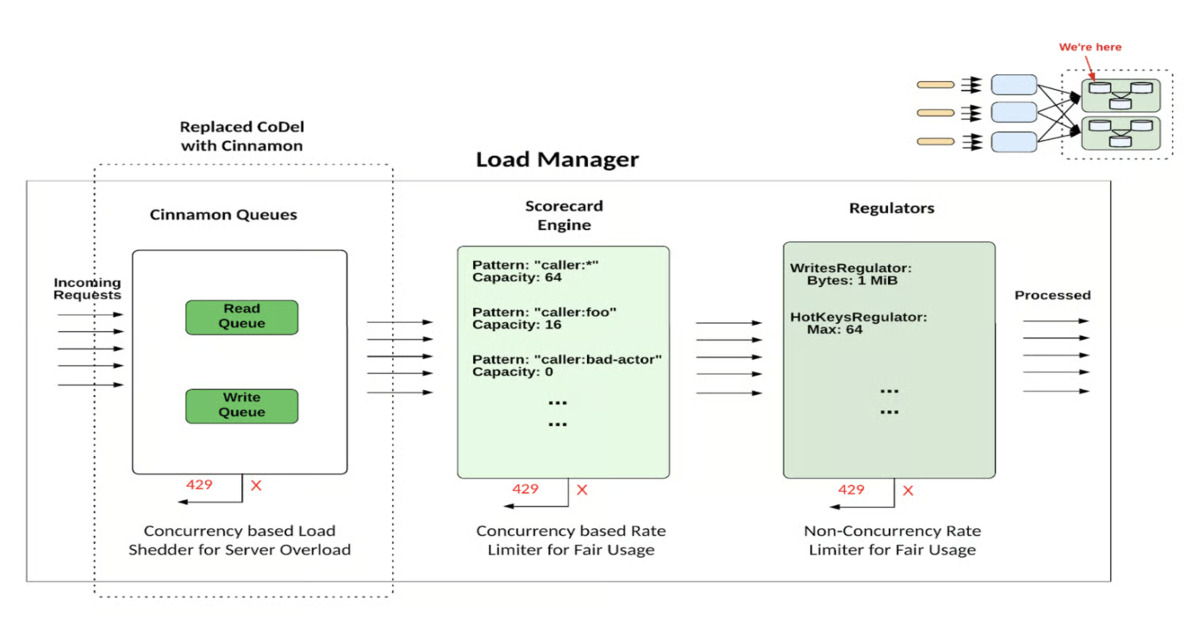

To address this, Uber colocated load management with stateful storage nodes, combining Controlled Delay (CoDel) queuing with a per-tenant Scorecard. CoDel adjusted queue behavior based on latency, while Scorecard enforced concurrency limits, and additional regulators monitored I/O, memory, goroutines, and hotspots. However, CoDel treated all requests equally, dropping low-priority and user-facing traffic alike, which increased the on-call load and degraded the user experience. It also relied on fixed queue timeouts and static in-flight limits, which could trigger thundering-herd retries and drop high-priority requests. While this setup prevented catastrophic failures, it lacked the dynamism and nuance required for consistent performance, highlighting the need for priority-aware queues.

Load manager setup with CoDel queue (Source: Uber blog post)
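To make the CoDel idea concrete, the sketch below shows a heavily simplified latency-based queue in Python. It is not Uber's implementation: the class name `SimpleCoDelQueue`, the `target` and `interval` values, and the explicit `now` timestamps are all illustrative assumptions, and the drop policy is simpler than the published CoDel algorithm, which spaces drops out over time. It does illustrate the limitation described above: the queue looks only at how long a request has waited, never at how important it is.

```python
from collections import deque


class SimpleCoDelQueue:
    """Simplified CoDel-style queue (hypothetical sketch, not Uber's code).

    A request is dropped when queueing delay has stayed above `target`
    for at least `interval` seconds. Priority is never consulted.
    """

    def __init__(self, target=0.005, interval=0.100):
        self.target = target          # acceptable queueing delay, seconds
        self.interval = interval      # how long delay may exceed target
        self._items = deque()         # (enqueue_time, request)
        self._above_since = None      # when delay first exceeded target

    def enqueue(self, request, now):
        self._items.append((now, request))

    def dequeue(self, now):
        """Return (request_to_serve_or_None, list_of_dropped_requests)."""
        dropped = []
        while self._items:
            enqueued_at, request = self._items.popleft()
            sojourn = now - enqueued_at
            if sojourn < self.target:
                # Queue is healthy again: leave the overloaded state.
                self._above_since = None
                return request, dropped
            if self._above_since is None:
                self._above_since = now
            if now - self._above_since >= self.interval:
                # Sustained overload: shed the stale request.
                dropped.append(request)
                continue
            return request, dropped
        self._above_since = None
        return None, dropped
```

Passing `now` explicitly keeps the sketch deterministic; a real queue would read a monotonic clock instead.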

The next evolution introduced Cinnamon. This priority-aware load shedder assigns requests to ranked tiers, allowing lower-priority traffic to be dropped before latency-sensitive operations are affected. Cinnamon dynamically tunes in-flight limits and queue timeouts using high-percentile latency metrics, reducing dependence on static thresholds and enabling smoother degradation during overload events.

Load manager setup with Cinnamon queue (Source: Uber blog post)
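The priority-aware shedding that Cinnamon is described as performing can be illustrated with a small bounded queue that evicts the lowest-priority request first. This is a minimal sketch under stated assumptions, not Cinnamon's actual design: the class name `PriorityQueueShedder`, the integer tiers (with 0 as the most critical), and the fixed `capacity` are hypothetical, and the dynamic tuning of limits and timeouts is left out.

```python
import heapq
import itertools


class PriorityQueueShedder:
    """Bounded queue that sheds lower-priority requests first (sketch).

    Tier 0 is the most critical; larger tier numbers are less important.
    """

    def __init__(self, capacity):
        self.capacity = capacity
        # Min-heap keyed on -tier, so the least important entry is at index 0.
        self._heap = []
        # Unique tie-breaker so request objects are never compared.
        self._counter = itertools.count()

    def offer(self, tier, request):
        """Enqueue `request`; return whichever request was shed, or None."""
        entry = (-tier, next(self._counter), request)
        if len(self._heap) < self.capacity:
            heapq.heappush(self._heap, entry)
            return None
        worst_tier = -self._heap[0][0]
        if tier >= worst_tier:
            # The newcomer is no more important than anything queued.
            return request
        # Evict the least important queued request to make room.
        evicted = heapq.heapreplace(self._heap, entry)
        return evicted[2]

    def __len__(self):
        return len(self._heap)
```

With a capacity of two holding a tier-2 and a tier-1 request, offering a tier-0 request evicts the tier-2 entry, while a further tier-2 request is rejected immediately.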

Uber later unified local and distributed overload signals into a single modular control loop using a “Bring Your Own Signal” model. This architecture allows teams to plug in both node-level indicators, such as in-flight concurrency and memory pressure, and cluster-level signals, including follower commit lag, into a centralized admission control path. Consolidating these signals eliminated fragmented control logic and avoided the conflicting load-shedding decisions seen in earlier token-bucket-based systems.
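One way to picture a pluggable-signal model is a controller that accepts arbitrary signal callbacks and reduces them to a single admission decision. The sketch below is an assumption-laden illustration rather than Uber's API: `AdmissionController`, the convention that each signal returns an overload score between 0.0 and 1.0, the rule of taking the worst score, and the per-tier cutoff formula are all invented for the example.

```python
from typing import Callable, Dict

# Hypothetical convention: a signal returns an overload score in [0.0, 1.0].
Signal = Callable[[], float]


class AdmissionController:
    """Single decision path fed by pluggable overload signals (sketch)."""

    def __init__(self, num_tiers: int = 4):
        self.num_tiers = num_tiers
        self._signals: Dict[str, Signal] = {}

    def register(self, name: str, signal: Signal) -> None:
        """Plug in a node-level or cluster-level signal."""
        self._signals[name] = signal

    def overload(self) -> float:
        """Worst clamped score across all registered signals."""
        if not self._signals:
            return 0.0
        return max(min(1.0, max(0.0, s())) for s in self._signals.values())

    def admit(self, tier: int) -> bool:
        """Tier 0 is shed last; higher-numbered tiers are shed earlier."""
        cutoff = (self.num_tiers - tier) / self.num_tiers
        return self.overload() < cutoff


controller = AdmissionController()
controller.register("in_flight", lambda: 0.3)            # node-level
controller.register("follower_commit_lag", lambda: 0.6)  # cluster-level
decisions = [controller.admit(tier) for tier in range(4)]
```

Because every signal feeds the same `admit` call, two signals cannot issue contradictory shedding decisions; the worst one simply determines how many tiers are turned away.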

According to Uber, the results have been substantial. Under overload conditions, throughput increased by approximately 80 percent, while P99 latency for upsert operations dropped by around 70 percent. The system also reduced goroutine counts by roughly 93 percent and lowered peak heap usage by about 60 percent, improving overall efficiency and reducing operational toil.

Uber highlights key lessons from its load management evolution: prioritize critical user-facing traffic, shedding lower-priority requests first; reject requests early to maintain predictable latencies and reduce memory pressure; use PID-based regulation for stability; place control near the source of truth; adapt dynamically to workloads; maintain observability; and favor simplicity to ensure consistent, resilient operation under stress.
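As an illustration of PID-based regulation, the following sketch shows a textbook PID controller that turns the gap between observed and target latency into a rejection ratio. The gains, the latency target, and the choice of "fraction of low-priority traffic to reject" as the output are assumptions made for the example; the source does not specify how Uber configures its regulator.

```python
class PIDRegulator:
    """Textbook PID controller producing a rejection ratio (sketch).

    Output is clamped to [0.0, 1.0] and read here as the fraction of
    low-priority traffic to reject. All gains are illustrative.
    """

    def __init__(self, kp, ki, kd, target):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.target = target          # e.g. target P99 latency in ms
        self._integral = 0.0
        self._prev_error = None

    def update(self, measured, dt):
        """Feed one measurement taken `dt` seconds after the previous one."""
        error = measured - self.target
        self._integral += error * dt
        if self._prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self._prev_error) / dt
        self._prev_error = error
        output = (self.kp * error
                  + self.ki * self._integral
                  + self.kd * derivative)
        # Clamp to a valid ratio. A production controller would also
        # guard against integral windup, which this sketch omits.
        return min(1.0, max(0.0, output))
```

The proportional term reacts to the current latency gap, the integral term to sustained overload, and the derivative term to how quickly conditions are changing, which is what lets the rejection ratio settle smoothly rather than oscillate.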