Table of Links

Abstract and 1 Introduction

2 Technical Specifications

3 Academic benchmarks

4 Safety

5 Weakness

6 Phi-3-Vision

6.1 Technical Specifications

6.2 Academic benchmarks

6.3 Safety

6.4 Weakness

References

A Example prompt for benchmarks

B Authors (alphabetical)

C Acknowledgements

2 Technical Specifications

The phi-3-mini model is a transformer decoder architecture [VSP+ 17], with default context length 4K. We also introduce a long context version via LongRope [DZZ+ 24a] that extends the context length to 128K, called phi-3-mini-128K.

To best benefit the open source community, phi-3-mini is built on a block structure similar to that of Llama-2 [TLI+ 23] and uses the same tokenizer, with a vocabulary size of 32064[1]. This means that all packages developed for the Llama-2 family of models can be directly adapted to phi-3-mini. The model uses a hidden dimension of 3072, 32 heads and 32 layers. We trained using bfloat16 for a total of 3.3T tokens. The model is already chat-finetuned, and the chat template is as follows:
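As a rough sanity check on the dimensions above, the following sketch estimates the parameter count of a decoder with these settings. The hidden size, head count, layer count and vocabulary size come from the text. The MLP width of 8192, the gated three-matrix MLP and the untied input and output embeddings are assumptions made for illustration, and normalization weights are ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DecoderConfig:
    vocab_size: int
    hidden_size: int
    num_heads: int
    num_layers: int
    mlp_size: int           # ASSUMPTION: not stated in the text
    tied_embeddings: bool   # ASSUMPTION: not stated in the text

    @property
    def head_dim(self) -> int:
        # Each head works on an equal slice of the hidden dimension.
        return self.hidden_size // self.num_heads

    def approx_params(self) -> int:
        d = self.hidden_size
        attention = 4 * d * d          # Q, K, V and output projections
        mlp = 3 * d * self.mlp_size    # gate, up and down projections (assumed gated MLP)
        embeddings = self.vocab_size * d
        if not self.tied_embeddings:
            embeddings *= 2            # separate input and output matrices
        return self.num_layers * (attention + mlp) + embeddings


# Values from the text, plus the two labeled assumptions.
PHI3_MINI = DecoderConfig(
    vocab_size=32064,
    hidden_size=3072,
    num_heads=32,
    num_layers=32,
    mlp_size=8192,
    tied_embeddings=False,
)

if __name__ == "__main__":
    print("head dimension:", PHI3_MINI.head_dim)
    print("approx. parameters (billions):", PHI3_MINI.approx_params() / 1e9)
```

Under these assumptions the estimate comes out near 3.8B, in line with the parameter count quoted for phi-3-mini.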

The phi-3-small model (7B parameters) leverages the tiktoken tokenizer (for better multilingual tokenization) with a vocabulary size of 100352[2] and has a default context length of 8192. It follows the standard decoder architecture of the 7B model class, with 32 heads, 32 layers and a hidden size of 4096. We switched from GELU activation to GEGLU and used Maximal Update Parametrization (muP) [?] to tune hyperparameters on a small proxy model and transfer them to the target 7B model. Both changes helped ensure better performance and training stability. The model also uses grouped-query attention, with 4 queries sharing 1 key.

To optimize training and inference speed, we designed a novel blocksparse attention module. For each attention head, the blocksparse attention enforces a different sparsity pattern over the KV cache. This ensures that all tokens are attended to on different heads for the given choice of sparsity. As illustrated in Figure 1, the context is then efficiently divided and conquered among attention heads, with significant KV cache reduction. To achieve actual deployment speed-up from the blocksparse design, we implemented highly efficient yet flexible kernels for both training and inference. For training, we built a Triton kernel based on Flash Attention [DFE+ 22]. For inference, we implemented a kernel for the prefilling phase and extended the paged attention kernel in vLLM for the decoding phase [KLZ+ 23]. Lastly, in the phi-3-small architecture, we alternate dense attention layers and blocksparse attention layers to optimize KV cache savings while maintaining long context retrieval performance. An additional 10% of multilingual data was also used for this model.
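The two KV cache ideas in this paragraph can be illustrated with a toy sketch. The first function computes the cache size with and without grouped-query attention, using the head counts from the text and assuming 2-byte (bfloat16) cache entries. The second builds a hypothetical per-head block pattern made of a few local blocks plus strided remote blocks whose offset depends on the head index. That pattern, and the names `n_local` and `stride`, are assumptions chosen to show how different heads can jointly cover the whole context; they are not the kernel described above.

```python
def kv_cache_bytes(seq_len, num_layers, num_kv_heads, head_dim, bytes_per_value=2):
    # Factor 2: one key and one value vector per token, layer and KV head.
    return 2 * seq_len * num_layers * num_kv_heads * head_dim * bytes_per_value


def visible_blocks(q_block, head, n_local=2, stride=3):
    """Key blocks that a query in block `q_block` attends to on one head.

    ASSUMPTION: a toy pattern of local blocks plus head-shifted strided blocks.
    """
    blocks = []
    for k in range(q_block + 1):                # causal: only current and past blocks
        is_local = q_block - k < n_local        # the most recent n_local blocks
        is_remote = (k + head) % stride == 0    # strided blocks, shifted per head
        if is_local or is_remote:
            blocks.append(k)
    return blocks


if __name__ == "__main__":
    head_dim = 4096 // 32     # hidden size / heads, from the text
    kv_heads = 32 // 4        # 4 queries share 1 key
    full = kv_cache_bytes(8192, 32, 32, head_dim)
    gqa = kv_cache_bytes(8192, 32, kv_heads, head_dim)
    print("dense multi-head cache (GiB):", full / 2**30)
    print("grouped-query cache (GiB):", gqa / 2**30)

    covered = set()
    for head in range(3):
        blocks = visible_blocks(q_block=8, head=head)
        covered.update(blocks)
        print("head", head, "attends to blocks", blocks)
    print("all blocks covered:", covered == set(range(9)))
```

In this toy setting each head keeps only about half of the key blocks, yet every block is still visible to at least one head, which is the property the paragraph describes.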

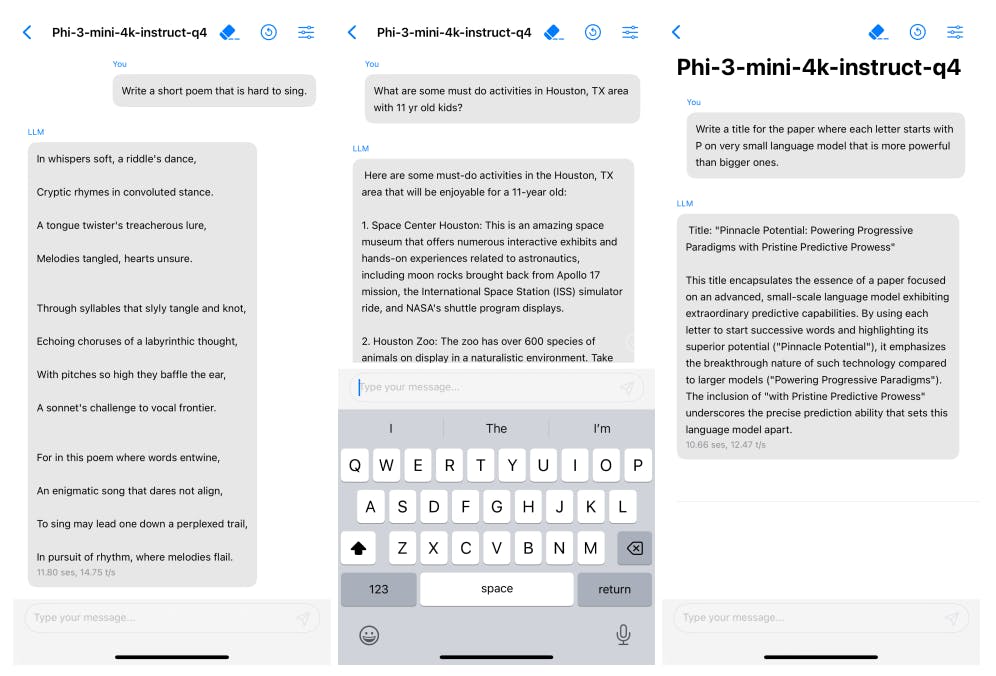

Highly capable language model running locally on a cell-phone. Thanks to its small size, phi-3-mini can be quantized to 4 bits so that it occupies only ≈ 1.8GB of memory. We tested the quantized model by deploying phi-3-mini on an iPhone 14 with the A16 Bionic chip, running natively on-device and fully offline, and achieved more than 12 tokens per second.
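The memory figure can be checked with simple arithmetic. The sketch below counts only the weights, assuming 3.8B parameters stored at a uniform bit width; real quantization formats also store scales and other metadata, which this estimate ignores.

```python
def weight_bytes(num_params, bits_per_weight):
    # Storage for the weights alone, ignoring quantization scales and metadata.
    return num_params * bits_per_weight / 8


if __name__ == "__main__":
    params = 3_800_000_000  # 3.8B parameters, as quoted for phi-3-mini
    for bits in (16, 4):
        size = weight_bytes(params, bits)
        print(bits, "bits:", size / 1e9, "GB =", round(size / 2**30, 2), "GiB")
```

At 4 bits the weights take about 1.9 × 10^9 bytes, or roughly 1.77 GiB, which is consistent with the ≈ 1.8GB quoted above.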

Training Methodology. We follow the sequence of works initiated in “Textbooks Are All You Need” [GZA+ 23], which utilize high-quality training data to improve the performance of small language models and deviate from the standard scaling laws. In this work we show that this method makes it possible to reach the level of highly capable models such as GPT-3.5 or Mixtral with only 3.8B total parameters (Mixtral, for example, has 45B total parameters). Our training data consists of heavily filtered publicly available web data (filtered according to its “educational level”) from various open internet sources, as well as synthetic LLM-generated data. Pre-training is performed in two disjoint and sequential phases. Phase-1 consists mostly of web sources aimed at teaching the model general knowledge and language understanding. Phase-2 merges even more heavily filtered web data (a subset of that used in Phase-1) with some synthetic data that teach the model logical reasoning and various niche skills.

Data Optimal Regime. Unlike prior works that train language models in either the “compute optimal regime” [HBM+ 22] or the “over-train regime”, we mainly focus on the quality of data for a given scale.[3] We try to calibrate the training data to be closer to the “data optimal” regime for small models. In particular, we filter the publicly available web data to contain the correct level of “knowledge” and keep more web pages that could potentially improve the “reasoning ability” of the model. As an example, the result of a Premier League game on a particular day might be good training data for frontier models, but we need to remove such information to leave more model capacity for “reasoning” in the mini-size models. We compare our approach with Llama-2 in Figure 3.

To test our data on larger models, we also trained phi-3-medium, a model with 14B parameters that uses the same tokenizer and architecture as phi-3-mini and is trained on the same data for slightly more epochs (4.8T tokens total, as for phi-3-small). The model has 40 heads and 40 layers, with embedding dimension 5120. We observe that some benchmarks improve much less from 7B to 14B than they do from 3.8B to 7B, perhaps indicating that our data mixture needs further work to be in the “data optimal regime” for a 14B-parameter model.
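To put the token budgets of the three models side by side, the sketch below computes training tokens per parameter from the figures quoted in this section. The reference value of about 20 tokens per parameter is a commonly cited rule of thumb associated with compute-optimal training; it is an approximation added here for comparison and is not taken from this text.

```python
# (parameters, training tokens) as quoted in this section.
MODELS = {
    "phi-3-mini": (3.8e9, 3.3e12),
    "phi-3-small": (7e9, 4.8e12),
    "phi-3-medium": (14e9, 4.8e12),
}

# ASSUMPTION: approximate rule of thumb for compute-optimal training.
COMPUTE_OPTIMAL_TOKENS_PER_PARAM = 20


def tokens_per_param(name):
    params, tokens = MODELS[name]
    return tokens / params


if __name__ == "__main__":
    for name in MODELS:
        ratio = tokens_per_param(name)
        multiple = ratio / COMPUTE_OPTIMAL_TOKENS_PER_PARAM
        print(name, "tokens per parameter:", round(ratio),
              "| multiple of rule of thumb:", round(multiple, 1))
```

All three ratios sit far above that rule of thumb. phi-3-medium sees about half as many tokens per parameter as phi-3-small, since both are trained on 4.8T tokens.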

Post-training. Post-training of phi-3-mini went through two stages: supervised finetuning (SFT) and direct preference optimization (DPO). SFT leverages highly curated high-quality data across diverse domains, e.g., math, coding, reasoning, conversation, model identity, and safety. The SFT data mix starts with English-only examples. DPO data covers chat format data, reasoning, and responsible AI (RAI) efforts. We use DPO to steer the model away from unwanted behavior, by using those outputs as “rejected” responses. Besides improving math, coding, reasoning, robustness, and safety, post-training transforms a language model into an AI assistant that users can efficiently and safely interact with.
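To make the role of “rejected” responses concrete, here is a minimal sketch of the standard DPO objective for a single preference pair. It takes the summed log-probabilities of the chosen and rejected responses under the model being trained and under a frozen reference model. The function name, argument names and the value `beta=0.1` are hypothetical; the text does not give the hyperparameters or implementation used for phi-3-mini.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    # Implicit rewards: how far the policy has moved from the reference.
    chosen_reward = beta * (policy_chosen_logp - ref_chosen_logp)
    rejected_reward = beta * (policy_rejected_logp - ref_rejected_logp)
    margin = chosen_reward - rejected_reward
    # -log(sigmoid(margin)), written in a numerically stable form.
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


if __name__ == "__main__":
    # Policy equals reference: the loss is log(2).
    print(dpo_loss(-2.0, -2.0, -2.0, -2.0))
    # Policy prefers the chosen response more than the reference does: lower loss.
    print(dpo_loss(-1.0, -5.0, -2.0, -2.0))
```

Minimizing this loss raises the relative likelihood of the chosen response and lowers that of the rejected one, which is how unwanted outputs used as “rejected” examples steer the model.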

As part of the post-training process, we developed a long context version of phi-3-mini with context length limit enlarged to 128K instead of 4K. Across the board, the 128K model quality is on par with the 4K length version, while being able to handle long context tasks. Long context extension has been done in two stages, including long context mid-training and long-short mixed post-training with both SFT and DPO.

Authors:

(1) Marah Abdin;

(2) Sam Ade Jacobs;

(3) Ammar Ahmad Awan;

(4) Jyoti Aneja;

(5) Ahmed Awadallah;

(6) Hany Awadalla;

(7) Nguyen Bach;

(8) Amit Bahree;

(9) Arash Bakhtiari;

(10) Jianmin Bao;

(11) Harkirat Behl;

(12) Alon Benhaim;

(13) Misha Bilenko;

(14) Johan Bjorck;

(15) Sébastien Bubeck;

(16) Qin Cai;

(17) Martin Cai;

(18) Caio César Teodoro Mendes;

(19) Weizhu Chen;

(20) Vishrav Chaudhary;

(21) Dong Chen;

(22) Dongdong Chen;

(23) Yen-Chun Chen;

(24) Yi-Ling Chen;

(25) Parul Chopra;

(26) Xiyang Dai;

(27) Allie Del Giorno;

(28) Gustavo de Rosa;

(29) Matthew Dixon;

(30) Ronen Eldan;

(31) Victor Fragoso;

(32) Dan Iter;

(33) Mei Gao;

(34) Min Gao;

(35) Jianfeng Gao;

(36) Amit Garg;

(37) Abhishek Goswami;

(38) Suriya Gunasekar;

(39) Emman Haider;

(40) Junheng Hao;

(41) Russell J. Hewett;

(42) Jamie Huynh;

(43) Mojan Javaheripi;

(44) Xin Jin;

(45) Piero Kauffmann;

(46) Nikos Karampatziakis;

(47) Dongwoo Kim;

(48) Mahoud Khademi;

(49) Lev Kurilenko;

(50) James R. Lee;

(51) Yin Tat Lee;

(52) Yuanzhi Li;

(53) Yunsheng Li;

(54) Chen Liang;

(55) Lars Liden;

(56) Ce Liu;

(57) Mengchen Liu;

(58) Weishung Liu;

(59) Eric Lin;

(60) Zeqi Lin;

(61) Chong Luo;

(62) Piyush Madan;

(63) Matt Mazzola;

(64) Arindam Mitra;

(65) Hardik Modi;

(66) Anh Nguyen;

(67) Brandon Norick;

(68) Barun Patra;

(69) Daniel Perez-Becker;

(70) Thomas Portet;

(71) Reid Pryzant;

(72) Heyang Qin;

(73) Marko Radmilac;

(74) Corby Rosset;

(75) Sambudha Roy;

(76) Olatunji Ruwase;

(77) Olli Saarikivi;

(78) Amin Saied;

(79) Adil Salim;

(80) Michael Santacroce;

(81) Shital Shah;

(82) Ning Shang;

(83) Hiteshi Sharma;

(84) Swadheen Shukla;

(85) Xia Song;

(86) Masahiro Tanaka;

(87) Andrea Tupini;

(88) Xin Wang;

(89) Lijuan Wang;

(90) Chunyu Wang;

(91) Yu Wang;

(92) Rachel Ward;

(93) Guanhua Wang;

(94) Philipp Witte;

(95) Haiping Wu;

(96) Michael Wyatt;

(97) Bin Xiao;

(98) Can Xu;

(99) Jiahang Xu;

(100) Weijian Xu;

(101) Sonali Yadav;

(102) Fan Yang;

(103) Jianwei Yang;

(104) Ziyi Yang;

(105) Yifan Yang;

(106) Donghan Yu;

(107) Lu Yuan;

(108) Chengruidong Zhang;

(109) Cyril Zhang;

(110) Jianwen Zhang;

(111) Li Lyna Zhang;

(112) Yi Zhang;

(113) Yue Zhang;

(114) Yunan Zhang;

(115) Xiren Zhou.

[1] We remove BoS tokens and add some additional tokens for chat template.

[2] We remove unused tokens from the vocabulary.

[3] Just like for “compute optimal regime”, we use the term “optimal” in an aspirational sense for “data optimal regime”. We are not implying that we actually found the provably “optimal” data mixture for a given scale.