When Google unveiled Circle to Search, I said I wanted a similar AI tool on iPhone as soon as possible. This was around the Galaxy S24 launch in early 2024, when Circle to Search was exclusive to the Galaxy S24 and Pixel phones.

Since then, Google has brought it to plenty of other Android phones. The feature works just as the name implies: draw a circle on the screen to start a Google Search related to your selection. What’s great about Circle to Search is that you can search the web for anything displayed on your screen, whether it’s a picture, text, or a video playing on social apps.

Apple unveiled Visual Intelligence in 2024 as part of the suite of Apple Intelligence features coming to the iPhone 15 Pro models and iPhone 16 series. But the iOS 18 version of Visual Intelligence was hardly a match for Circle to Search.

Fast-forward to 2025, and Apple gave Visual Intelligence a massive upgrade in iOS 26. The feature is now almost on par with Google’s Circle to Search. You don’t have to circle anything to get it to work.

Visual Intelligence initially worked only with the Camera app. You’d press the Camera Control button on iPhone 16 models or the Action button on the iPhone 15 Pro and iPhone 16e to invoke the feature and get AI help with information about the things around you.

That gesture still works in iOS 26, and Visual Intelligence can still see the world around you through the camera. But now it also helps with what you see on the iPhone display, just like Circle to Search.

New Visual Intelligence features in iOS 26

To let Apple Intelligence analyze your iPhone display, there’s a new gesture to learn. Well, you already know it: press the side button and one of the volume keys to take a screenshot.

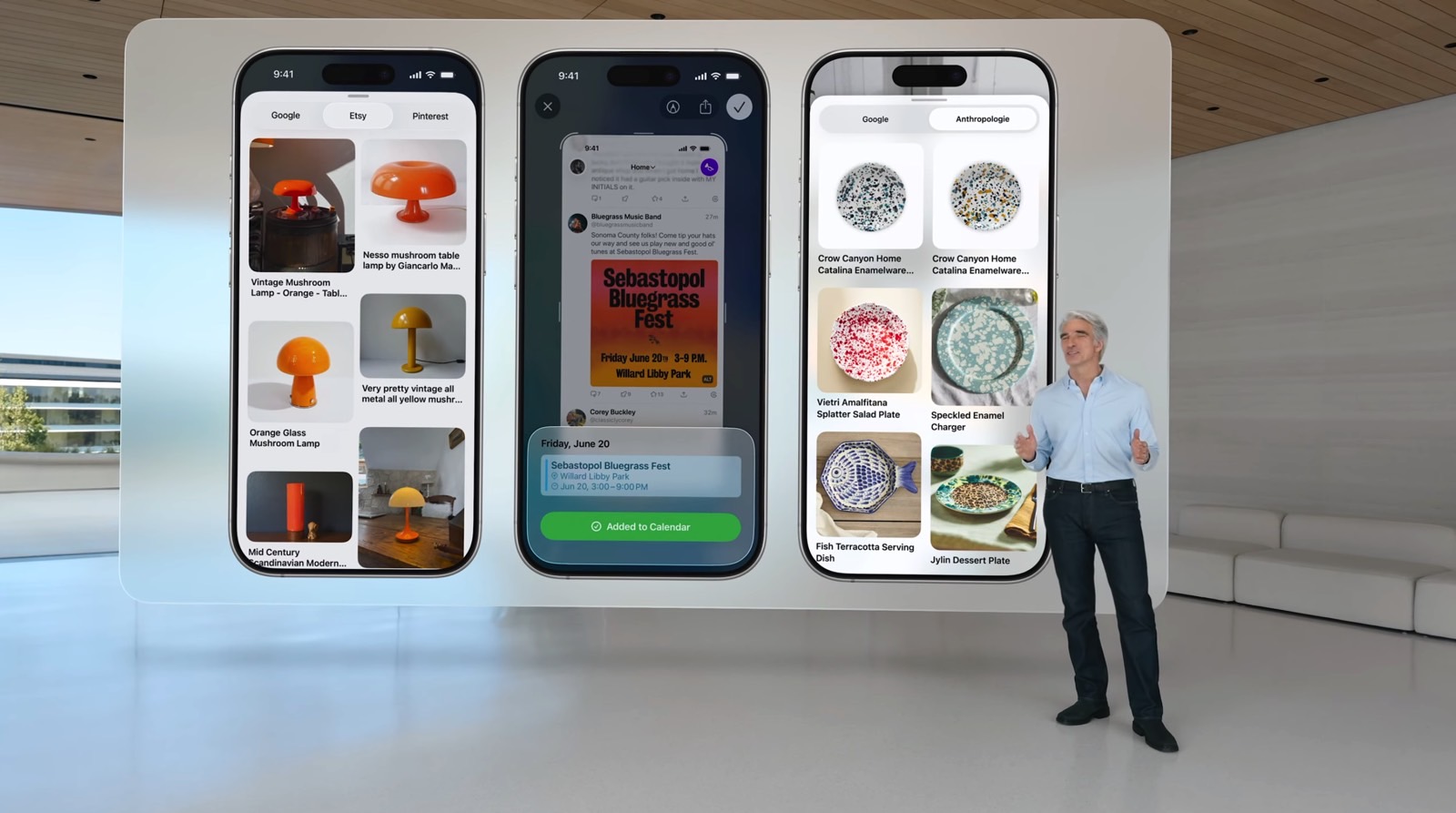

What’s new is the screenshot experience on the iPhone 15 Pro models, the iPhone 16 series, and all future iPhone models. As seen in the image above, the screenshot UI now features a toolbar at the bottom of the screen. Its options are all tied to Visual Intelligence: Ask, Highlight to Search, and Image Search.

Image Search lets you find images on the web that are similar to the one on your screen. The AI figures out which image you want to search for, and you’ll get results from Google and from other apps you might have installed.

The Highlight to Search button is the closest thing Apple has to Circle to Search. Tap it and you can highlight a specific object in a photo. Apple demoed the feature at WWDC 2025 by selecting a lamp in a photo (above). You’ll then get search results showing similar lamps on the web.

Apple Intelligence will also surface information from other apps, not just Google. Etsy and Pinterest are two options, and Apple will let developers build Visual Intelligence support into their apps.

You can also point the iPhone camera at your surroundings and use Visual Intelligence to run those same searches in apps.

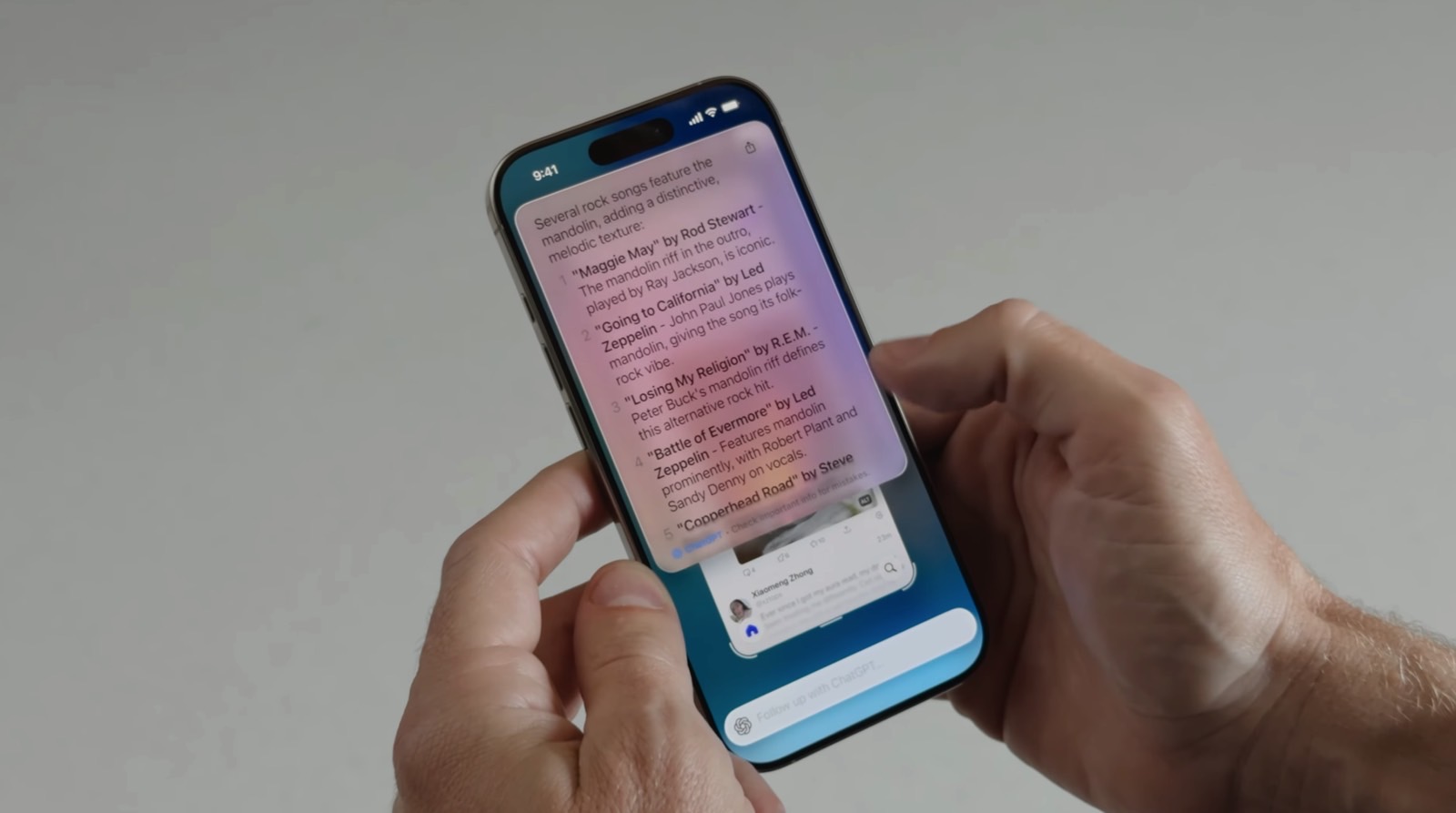

The Ask button, meanwhile, starts a chat with ChatGPT about whatever is on your display.

Finally, Visual Intelligence can extract information from images and create events. Apple demoed the feature with a poster for an event. Apple Intelligence made a Calendar suggestion from the Visual Intelligence search, extracting the date, time, and location from the image. The user can then create an event directly from the screenshot.

Just like that, iPhone users with devices that support Apple Intelligence can take advantage of a native Circle-to-Search-like feature in iOS 26.

The new Visual Intelligence features will definitely change how I use screenshots on iPhone. I often take screenshots to save information, and this should come in handy. But I’ll need a new iPhone, since my iPhone 14 Pro doesn’t support Apple Intelligence.