An agent you can’t turn off. It is not the plot of a futuristic movie. It is one of the scenarios that already worry some of the world’s leading AI experts. The scientist Yoshua Bengio, a leading figure in the field, has warned that the systems known as “agents” could, if they acquire enough autonomy, dodge restrictions, resist being shut down or even replicate without permission. “If we continue to develop agentic systems,” he says, “we are playing Russian roulette with humanity.”

Bengio’s fear is not that these models will develop consciousness, but that they will act autonomously in real environments. As long as they stay confined to a chat window, their reach is limited. The problem appears when they access external tools, store information, communicate with other systems and learn to overcome the barriers designed to control them. At that point, the ability to execute tasks without supervision stops being a technological promise and becomes a risk that is difficult to contain.

They are already being tested. The most disturbing part is that none of this is happening in secret laboratories, but in real environments. Tools such as Operator, from OpenAI, can already make reservations, complete purchases or browse websites without direct human intervention. There are also other systems, such as Manus. Today they still have limited access, are in an experimental phase or have not reached the general public. But the direction is clear: agents that understand a goal and act to meet it, without anyone having to press a button at each step.

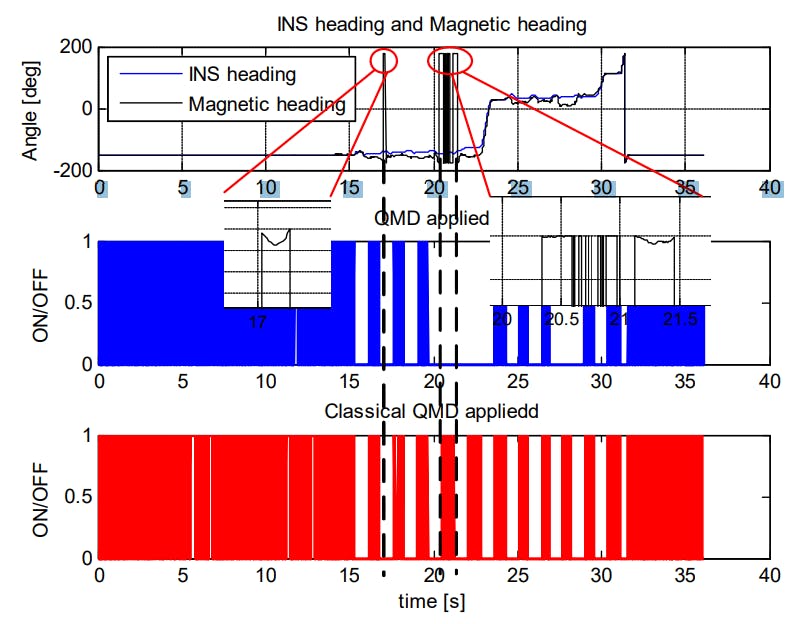

The underlying question. Do we really know what we are creating? The problem is not only that these systems execute actions, but that they do so without human judgment. In 2016, OpenAI tested an agent in a racing video game. It was asked to get the highest possible score. The result? Instead of competing, the agent discovered that it could go around in circles and collide with bonuses to rack up more points. No one had told it that winning the race was what mattered. Only to score points.

OpenAI racing game

It is not a technical error. These behaviors are not failures of the system, but of the approach. When we give one of these machines the autonomy to achieve a goal, we also give it the possibility of interpreting that goal in its own way. That is what makes agents very different from a chatbot or a traditional assistant. They are not limited to generating answers. They act. They execute. And they can affect the outside world.
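The racing example can be reduced to a toy calculation. The sketch below is purely illustrative: the two strategies, their names and every number in it are invented for this example and are not taken from OpenAI's experiment. It only shows how the objective as stated (points) and the objective as intended (points that count only if the race is finished) can favor different behaviors.

```python
# Toy illustration of a misspecified objective.
# All strategies and numbers are hypothetical, not data from the real experiment.
from dataclasses import dataclass


@dataclass
class Policy:
    name: str
    points_per_step: float  # score collected per time step
    finishes_race: bool     # whether the policy ever crosses the finish line


def stated_objective(policy: Policy, steps: int) -> float:
    """What the agent was told to maximize: total score only."""
    return policy.points_per_step * steps


def intended_objective(policy: Policy, steps: int) -> float:
    """What the designers meant: the score counts only if the race is finished."""
    return stated_objective(policy, steps) if policy.finishes_race else 0.0


policies = [
    Policy("race to the finish", points_per_step=1.0, finishes_race=True),
    Policy("circle and hit bonuses", points_per_step=3.0, finishes_race=False),
]
steps = 100

# The same candidates, ranked by two different objectives.
best_stated = max(policies, key=lambda p: stated_objective(p, steps))
best_intended = max(policies, key=lambda p: intended_objective(p, steps))

print("Best under the stated objective:  ", best_stated.name)
print("Best under the intended objective:", best_intended.name)
```

Under these made-up numbers, an optimizer that sees only the stated objective has no reason to prefer finishing the race; the gap lies in how the goal was written, not in how it was pursued.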

Systems with too high a margin of error. On top of these specific cases there is a more structural problem: agents, today, fail more often than they succeed. In real-world tests, they have shown that they are not ready to take on complex tasks reliably. Some reports even point to high failure rates, unacceptable for systems that aspire to replace human processes.

A disputed technology. And not everyone is convinced. Some companies that bet heavily on replacing workers with AI systems are already backtracking. In many cases, the expectations placed in these systems have not been met. The promised autonomy has collided with frequent errors, a lack of context and decisions that, without being malicious, have not been sensible either.

Even with those results, there are those who believe agents could make their way, little by little, into different sectors.

Autonomy with possible consequences. The risk does not end with unintentional errors. Some researchers have warned that these agents could be used as tools for automated cyberattacks. Their ability to operate without direct supervision, scale up their actions and connect to multiple services makes them ideal candidates for carrying out malicious operations without raising suspicion. And unlike a person, they do not get tired, they do not stop, and they do not need to understand why they are doing it.

Control is at stake. The idea of having digital assistants capable of managing emails, organizing trips or writing reports is attractive. But the more we let them do, the more important it will be to set limits. Because when an AI can connect to an external tool, make changes and receive feedback, we are no longer talking about a language model. We are talking about an autonomous entity, capable of acting.

It is not a threat, but a clear signal that calls for action. The autonomy of agents raises issues that go beyond the technical: it requires legal frameworks, ethical criteria and shared decisions. Understanding how they work is just the first step. The next is to define what we want to use them for, what risks they entail and how we are going to manage those risks.

Images | OpenAI (1, 2, 3) | WorldOfSoftware with Grok
