Table of Links

Abstract and 1 Introduction

2.1 Software Testing

2.2 Gamification of Software Testing

3 Gamifying Continuous Integration and 3.1 Challenges in Teaching Software Testing

3.2 Gamification Elements of Gamekins

3.3 Gamified Elements and the Testing Curriculum

4 Experiment Setup and 4.1 Software Testing Course

4.2 Integration of Gamekins and 4.3 Participants

4.4 Data Analysis

4.5 Threats to Validity

5.1 RQ1: How did the students use Gamekins during the course?

5.2 RQ2: What testing behavior did the students exhibit?

5.3 RQ3: How did the students perceive the integration of Gamekins into their projects?

6 Related Work

7 Conclusions, Acknowledgments, and References

5.1 RQ1: How did the students use Gamekins during the course?

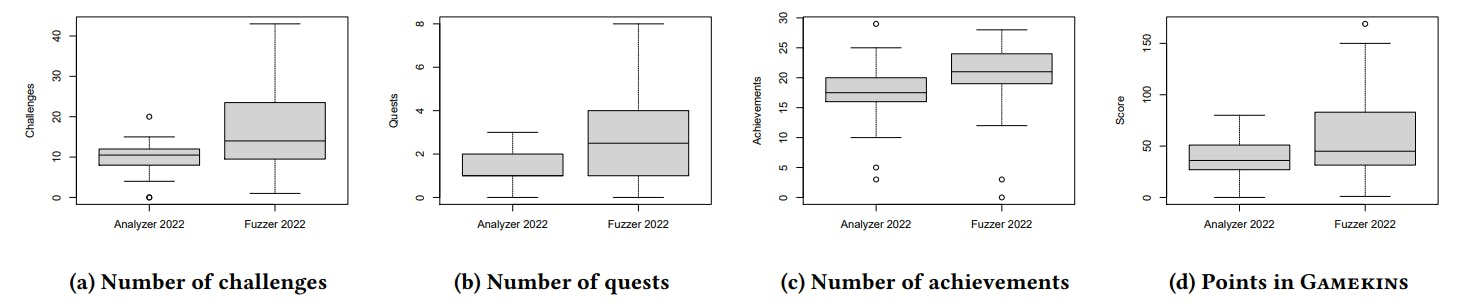

The students completed a total of 730 challenges, 113 quests, and 941 achievements in Gamekins. On average, they collected 39.5 points in the Analyzer project and 61.5 points in the Fuzzer project (Fig. 5d). They completed on average 10.2 challenges (Fig. 5a) and 1.6 quests (Fig. 5b) in the Analyzer project, compared with 17.0 challenges and 3.1 quests in the Fuzzer project. The students were new to Gamekins in the Analyzer project, so they likely required more time and assistance to become familiar with it. As a result, they completed significantly more challenges (𝑝 = 0.026) and quests (𝑝 = 0.011) in the Fuzzer project. The number of achievements (Fig. 5c) is higher than the numbers of challenges and quests because the achievements in Gamekins target large industrial projects and may currently be configured to be too easy to obtain on the relatively small student projects. Over half (52 %) of the students used avatars to represent themselves in the leaderboard, which suggests that they actually consulted the leaderboard. We also displayed the leaderboard during the exercise sessions to enhance visibility and competition among the students.

According to the data in Table 2, the largest share of the challenges solved by the students were coverage-based challenges (36.2 %), followed by Mutation (29.9 %), Test (19.2 %), and Smell Challenges (10.4 %). This distribution is expected because the coverage-based challenges are fast and easy to solve, while the Mutation Challenges reveal untested code if the coverage is already high. The students rejected a total of 154 challenges, with the majority of rejections occurring in the Fuzzer project (125). The reasons varied:

• Automatically rejected by Gamekins (49)

• No idea how to test (33)

• Line already covered (generated because of missing branches) (28)

• Not feasible to test (defensive programming) (18)

• Changed/deleted code (10)

• No mutated line available (byte code to source code translation is not always possible) (6)

• Mutant already killed (PIT does not recognize the mutant as killed) (6)

• Challenge is out of scope (generated for a part of the program the students do not need to test) (4)
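As a quick cross-check of the list above, the following minimal Python sketch tallies the reported rejection counts and expresses each reason as a share of all rejections. The counts are taken from the text; the shortened reason labels, the variable names, and the `share` helper are illustrative choices only. The Analyzer figure is derived under the assumption that every rejection belongs to one of the two projects.

```python
# Rejection reasons and counts as reported in the list above
# (labels shortened for display; names are illustrative).
rejection_reasons = {
    "Automatically rejected by Gamekins": 49,
    "No idea how to test": 33,
    "Line already covered": 28,
    "Not feasible to test": 18,
    "Changed/deleted code": 10,
    "No mutated line available": 6,
    "Mutant already killed": 6,
    "Challenge is out of scope": 4,
}

total = sum(rejection_reasons.values())


def share(count, whole):
    """Return count as a percentage of whole, rounded to one decimal place."""
    return round(100 * count / whole, 1)


for reason, count in rejection_reasons.items():
    print(f"{reason:<36} {count:>3}  {share(count, total):>5} %")
print(f"{'Total':<36} {total:>3}")

# Reported in the text: 125 of the rejections occurred in the Fuzzer project.
fuzzer_rejections = 125
# Assumption: all remaining rejections belong to the Analyzer project.
analyzer_rejections = total - fuzzer_rejections
print(f"Fuzzer share of rejections: {share(fuzzer_rejections, total)} %")
```

The individual counts add up to the reported total of 154, so the list accounts for every rejected challenge.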

The rejection rates also varied for different types of challenges. The easiest challenges, such as Build (0 %), Test (3 %), and Class Coverage Challenges (12 %), had the lowest rejection rates (Table 2). The challenge solved most successfully overall was the Line Coverage Challenge shown in Fig. 6a. This example from the Analyzer project targets a check that the class to be instrumented is neither in the wrong package nor a test class; any test that calls this method solves the challenge. Mutation Challenges were rejected most often overall (25 %), followed by Line Coverage Challenges (22 %). These challenges are quite specific: they require testing particular lines or mutants and do not give students a chance to pick easier targets for their tests. In particular, writing tests that can detect mutants can be non-trivial. For instance, Fig. 6b shows a challenging mutant that removes a line necessary for parts of the instrumentation. Detecting this mutant in the Analyzer project requires examining the resulting byte code after instrumentation. Many students struggled with challenges like this, particularly in the Analyzer project, which required mocking. We also had to fix some issues with PIT.
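The difference in difficulty between coverage and mutation challenges can be illustrated with a small sketch. It is written in Python for brevity rather than in Java with PIT, and it is not the course's Analyzer code: `should_instrument`, its parameters, and the hand-written mutant are hypothetical stand-ins loosely modeled on the package and test-class check described above. A test that merely calls the method satisfies a coverage-style challenge, whereas killing the mutant requires an input and an assertion that depend on the removed line.

```python
def should_instrument(class_name: str, target_package: str) -> bool:
    """Original (hypothetical): instrument only non-test classes in the package."""
    if not class_name.startswith(target_package + "."):
        return False
    if class_name.endswith("Test"):
        return False
    return True


def should_instrument_mutant(class_name: str, target_package: str) -> bool:
    """Hand-written mutant: the test-class check has been removed."""
    if not class_name.startswith(target_package + "."):
        return False
    return True


def weak_test(fn) -> bool:
    # Calls the method with an ordinary class. This executes the method,
    # but the result does not depend on the test-class check.
    return fn("app.Parser", "app") is True


def strong_test(fn) -> bool:
    # Uses a test class as input, so the result depends on the removed check.
    return fn("app.ParserTest", "app") is False


def kills(test, original, mutant) -> bool:
    """A test kills a mutant if it passes on the original and fails on the mutant."""
    return test(original) and not test(mutant)


print(kills(weak_test, should_instrument, should_instrument_mutant))    # False
print(kills(strong_test, should_instrument, should_instrument_mutant))  # True
```

In this toy case the stronger test is easy to find. The mutant in Fig. 6b is harder because its effect is only visible in the byte code produced by the instrumentation.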

Authors:

(1) Philipp Straubinger, University of Passau, Germany;

(2) Gordon Fraser, University of Passau, Germany.