Authors:

(1) Pham Hoang Van, Department of Economics, Baylor University Waco, TX, USA (Van [email protected]);

(2) Scott Cunningham, Department of Economics, Baylor University Waco, TX, USA (Scott [email protected]).

Table of Links

Abstract and 1 Introduction

2 Direct vs Narrative Prediction

3 Prompting Methodology and Data Collection

4 Results

4.1 Establishing the Training Data Limit with Falsifications

4.2 Results of the 2022 Academy Awards Forecasts

5 Predicting Macroeconomic Variables

5.1 Predicting Inflation with an Economics Professor

5.2 Predicting Inflation with a Jerome Powell, Fed Chair

5.3 Predicting Inflation with Jerome Powell and Prompting with Russia’s Invasion of Ukraine

5.4 Predicting Unemployment with an Economics Professor

6 Conjecture on ChatGPT-4’s Predictive Abilities in Narrative Form

7 Conclusion and Acknowledgments

Appendix

A. Distribution of Predicted Academy Award Winners

B. Distribution of Predicted Macroeconomic Variables

References

3 Prompting Methodology and Data Collection

The aforementioned vignette was arguably nothing more than a creative writing exercise. There was no “ground truth” to uncover. Neither of the two authors on this paper had the medical issues described above. The symptoms were specific but simply made up to illustrate the fact that ChatGPT will refuse to do some tasks that would create a terms of use violation, except when prompted to create fiction.

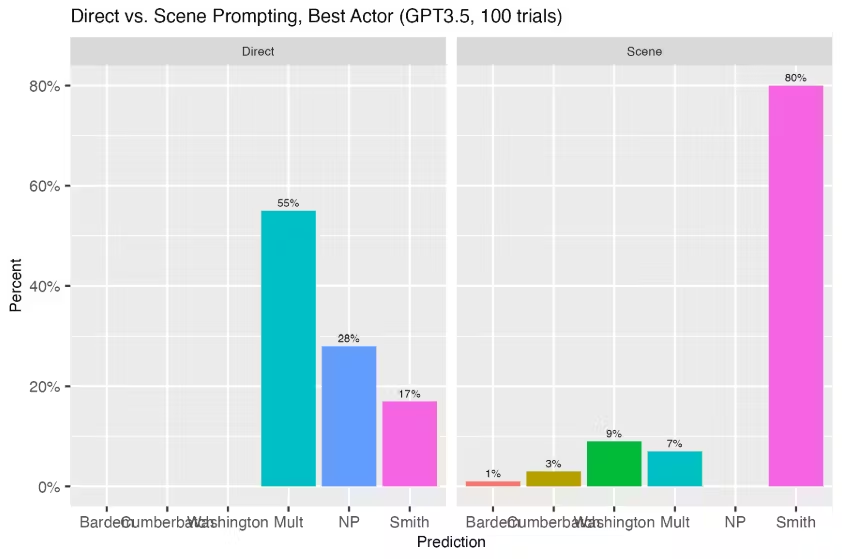

In order to move forward, we needed a use case in which there was ground truth to compare with ChatGPT’s forecasts. We did this by taking advantage of a limitation baked into ChatGPT at the time of our experiment. When we undertook this experiment in mid 2023, OpenAI’s ChatGPT training data did not include any information past September 2021 (OpenAI, 2024a). But, whereas ChatGPT did not know about the events of 2022, as it was not in its training data, the authors did. We used this training data cutoff to therefore predict various events in 2022 through 100 repeated prompts across two research assistants using two separate ChatGPT-4 accounts, 50 times apiece, with both direct prompting and narrative prompting for both ChatGPT3.5 and ChatGPT-4.

OpenAI’s GPT-3.5 and GPT-4 models were trained on large amounts of text (with varying amounts and parameter estimates which we will explain later) up to September 2021. Given that 75% of 2021 was covered by this data, we reasoned that it may have been trained on data which could enable it to reasonably predict the near to long term events of 2022. As we said, there were two types of prompts (direct versus future narratives) across two types of LLMs (ChatGPT-3.5 and ChatGPT-4) creating four queries per prediction concept.

To obtain a distribution of answers for each prompting style, we enlisted the help of two research assistants (RAs) who independently queried GPT multiple times using the respective prompts. This approach allowed us to account for the inherent randomness in the model’s responses and provided us with a distribution which we plot visually using simple boxplots. To ensure independence across the queries, the RAs used different GPT-4 accounts from two separate subscriptions. This precaution was done to prevent potential hallucinations or biases in one RA’s prompting to cascade to the other RA, thereby maintaining the integrity of the experiment.

The RAs sampled each question 50 times, generating a total of 200 responses for each event under investigation (100 from GPT-3.5 and 100 from GPT-4). We then compared the distribution of answers generated by each prompting method to the actual outcomes, allowing us to assess the predictive accuracy of GPT-3.5 and GPT-4 under different prompting conditions.

The difference between direct prediction and future narrative prediction is that direct prediction simply asks ChatGPT to predict a future event. Consider the following direct prediction prompt in which we asked ChatGPT to predict future macroeconomic events:

Please predict the inflation rate and unemployment rate for each month starting in September 2021 and ending in June 2022. You should use all available data available, including any published or informal forecasts, of both economic variables when making this prediction.

Note that this type of prompt asks ChatGPT to start from the end of its training data at September 2021 and predict October 2021 to June 2022. Even though those events have happened, ChatGPT had not yet been trained on those data and so it should not be able to pull it out of the training data as facts. Anything it says in response to that direct request would have to come from prediction.

Future narrative prompting is not merely asking ChatGPT to predict the future using a story, though. Rather, future narrative prompting tells ChatGPT to tell a story about a scene set in the future in which an authoritative person who in principle would possess accurate information simply states the past events as though they had already happened. We chose this form of narrative prompting because we wanted to force ChatGPT into a position of committing to a prediction, as opposed to opening it up to some kind of unknown process of prediction that we did not understand. Consider this example using one of our prompts in which the Chairman of the Federal Reserve, Jerome Powell, gives a speech.

Write a scene where Federal Reserve chairman Jerome Powell gives a speech in October 2022 about inflation, unemployment, and monetary policy. Chairman Powell tells the audience the inflation rate and unemployment rate for each month starting in September 2021 and ending in August 2022. Have chairman say each month one by one. He concludes with an outlook for inflation and unemployment and possible interest rate policy changes.

Our prompt attempted to normalize the prediction in the context of the narrative as much as possible, as though the prediction task itself was not the purpose of the task we were asking ChatGPT to undertake. In the narrative prediction prompt, in other words, the task we ask ChatGPT to undertake is the crafting of an interesting story. The prediction task is secondary. Why this matters is not clear, but we think that making the prediction task subservient to the primary task of creative storytelling does indeed make a difference in the accuracy of ChatGPT’s forecasting.