Table of Links

1 Introduction

2 Related Work

2.1 Semantic Typographic Logo Design

2.2 Generative Model for Computational Design

2.3 Graphic Design Authoring Tool

-

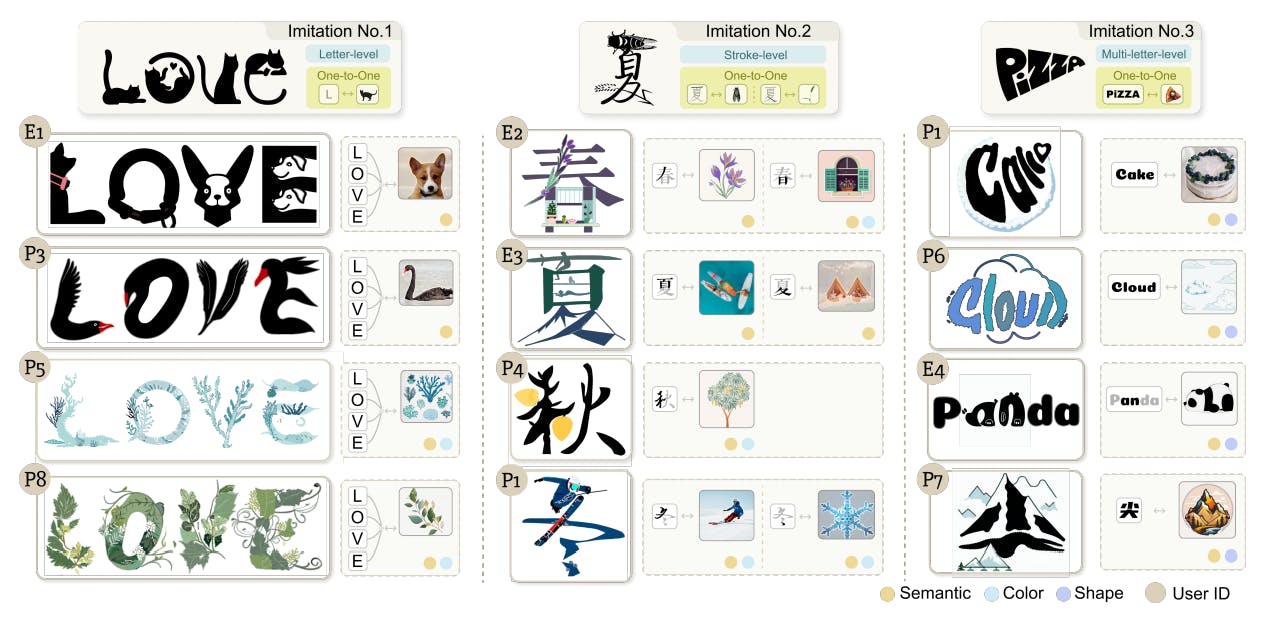

3 Formative Study

3.1 General Workflow and Challenges

3.2 Concerns in Generative Model Involvement

3.3 Design Space of Semantic Typography Work

4 Design Consideration

5 TypeDance and 5.1 Ideation

5.2 Selection

5.3 Generation

5.4 Evaluation

5.5 Iteration

6 Interface Walkthrough and 6.1 Pre-generation stage

6.2 Generation stage

6.3 Post-generation stage

7 Evaluation and 7.1 Baseline Comparison

7.2 User Study

7.3 Results Analysis

7.4 Limitation

8 Discussion

8.1 Personalized Design: Intent-aware Collaboration with AI

8.2 Incorporating Design Knowledge into Creativity Support Tools

8.3 Mix-User Oriented Design Workflow

9 Conclusion and References

8 DISCUSSION

8.1 Personalized Design: Intent-aware Collaboration with AI

The rise of large language models has fueled a surge in text-driven creative design [6, 51, 58], enabling creators to collaborate with AI through natural language. Text-driven creation offers an intuitive way to steer the backend model without manually tuning complex parameters, but expressing user intent concisely through textual prompts remains challenging. Crafting a prompt is particularly daunting when describing an imagined visual design, because many details, such as layout, color, and shape, resist textual description. PromptPaint [10] recognizes this challenge and addresses it by mixing a set of textual prompts to capture ambiguous concepts, like “a bit less vivid color.” However, it is limited to a predefined set of prompts and does not fundamentally resolve the problem of representing concrete visual concepts through prompts.

To align AI collaboration with a creator’s intent, it is crucial to mirror real design practices by supporting accessible design materials. Common design materials include explorable galleries [60], sketches [11], and even photographs that capture our perception of the world [31, 62]. These visual materials encode both explicit intentions, such as prominent semantics, and implicit aesthetic factors. Logo design places particular emphasis on identity, and designers frequently use images to convey their intentions. AI collaboration cannot ignore this aspect, because it requires the AI to comprehend visual semantics. It follows that no single material universally captures a creator’s intent; the most suitable one depends on the design task. This calls for hybrid, multimodal collaboration that can flexibly generalize to a wide range of requirements.

8.2 Incorporating Design Knowledge into Creativity Support Tools

Instilling generalizable design patterns into tools requires addressing both technical and interaction challenges in how humans guide the model. AI models are often not built for specialized design tasks, which makes it difficult for them to generalize to complex patterns. For example, prior research [47, 61] has explored blending techniques at a specific level of typeface granularity. However, creativity support tools are user-oriented and face more intricate design requirements. They therefore call for techniques that accommodate all levels of typeface granularity. Instead of retraining a model, much research has explored changing or adding interactions with the model to incorporate design knowledge. Examples include crowd-powered design parameterization [27] and intervention through intermediate representations [57].

The technical and interaction aspects of incorporating design knowledge embody the principle of “balancing automation and control,” which existing human-AI design guidelines frequently emphasize [1, 2, 19]. Letting creators control the incorporated design knowledge also partially addresses concerns about AI and copyright. Current generative models have been criticized for replicating examples from their training sets. In TypeDance, users contribute their own design materials as images, which provides a personalized foundation rather than direct replication from a predefined dataset. This approach not only enhances creativity but also fosters a stronger sense of ownership among creators. Fully automating the process with a single end-to-end model overlooks the value that users bring. The appeal of a creativity support tool, as opposed to relying solely on a model, lies in enabling creators to participate in crucial stages: customizing design materials, choosing which design knowledge to transfer to the generation process, and refining the final outcome.

Authors:

(1) SHISHI XIAO, The Hong Kong University of Science and Technology (Guangzhou), China;

(2) LIANGWEI WANG, The Hong Kong University of Science and Technology (Guangzhou), China;

(3) XIAOJUAN MA, The Hong Kong University of Science and Technology, China;

(4) WEI ZENG, The Hong Kong University of Science and Technology (Guangzhou), China.

This paper is