Those AI tools are being trained on our trade secrets. We’ll lose all of our customers if they find out our teams use AI. Our employees will no longer be able to think critically because of the brain rot caused by overreliance on AI. These are not irrational fears. As AI continues to dominate the headlines, questions about data privacy and security, intellectual property, and work quality are legitimate and important.

So, what do we do now? The temptation to just say “No” is strong. It feels straightforward and safe. However, this “safe” route is actually the riskiest of all. An outright ban on AI is a losing strategy that creates more problems than it solves. It fosters secrecy, increases security risks, and puts you at a massive competitive disadvantage.

I’m the founder of two tech agencies and a big proponent of AI. Our agencies also handle customer data, often from sectors such as government, healthcare, and education, so I take these concerns seriously. Still, I believe there’s a much better way to address the threats posed by AI. In this article, I’ll share the dangers of flat-out AI bans and what companies can do instead.

Curiosity crisis

You can ban tools, but you can’t ban curiosity, especially among developers and product managers who are paid to be innovative. They’re not living in a vacuum. They’ve heard that AI can handle tasks in a fraction of the usual time, and they simply want to try it out.

Additionally, employees may feel that not using AI daily puts them at a disadvantage compared to their peers working at other companies where AI is allowed.

When you forbid AI tools, you don’t stop people from using them; multiple studies suggest you simply drive that use underground. A Cisco survey revealed that 60% of respondents (including security and privacy professionals from various countries) had entered information about internal company processes into genAI tools; 46% had entered employee names or information, and 31% had entered customer names or information.

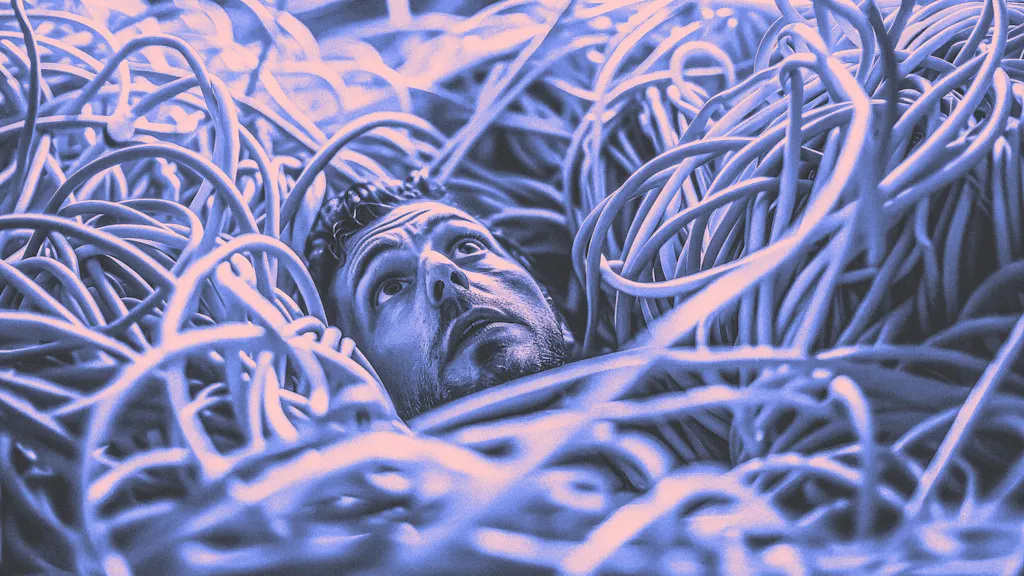

This is a form of “shadow IT”: the unsanctioned use of technology within an organization. In recent years, a more specific term has also emerged: Bring Your Own AI, or BYOAI.

Another study, by Anagram, paints an even more shocking picture: 58% of the surveyed US employees admit to pasting sensitive data into large language models. Furthermore, 40% were willing to knowingly violate company policy to complete a task faster. I guess the forbidden fruit is indeed the sweetest.

As a result, you have zero visibility. You don’t know what tools are being used, what data is being input, or what risks are being taken. The irony is real: the problem you wanted to control is now completely out of your control.

Security paradox

The primary reason for a ban is to protect sensitive data. However, the ban makes a leak more likely, not less.

Employees may create personal accounts and use free or cheaper plans, which often default to using the data they enter for model training. These plans also tend to lack robust security features, audit logs, and data processing agreements (DPAs).

By contrast, enterprise plans, such as ChatGPT Business or Enterprise, often come with assurances that your data will not be used for training. They may also offer SSO, data encryption, access controls, and administrative oversight.

Your sensitive data, which you feared would be leaked through an official channel, gets leaked through dozens of untraceable personal accounts. You could have secured it with an enterprise plan, but instead, you pushed it into the wild.

Driving in the slow lane

While you’re debating, your competitors are executing. Admittedly, it may be too early to talk about ROI: only 19% of C-level executives report that AI has increased revenue by more than 5%. But those modest returns may stem less from AI itself than from the way we use it. More and more businesses are now considering applying AI to core business operations rather than peripheral processes.

AI use cases in a business setting are manifold, and I know firsthand that the productivity gains are not marginal. At Redwerk, we do not shy away from AI-assisted development, and we’re teaching our clients how to set up such workflows. We don’t view AI as a threat that will eat developers’ lunch; we view it as a tool that lets us do more in-depth work faster.

One practical use case for developers is generating boilerplate code or documenting APIs in seconds rather than hours. With AI, our product managers can analyze user feedback at scale, brainstorm feature ideas, and conduct market research far more quickly.

At QAwerk, we use a range of AI testing tools to generate test cases, identify obscure edge cases, and even perform initial security vulnerability scans.

AI is here to stay

It’s not a fad, and it’s not going anywhere. More and more companies are adding AI features to their apps to keep pace with the competition, and AI will continue to change the anatomy of work. Workplace productivity tools like Slack, Zoom, and Asana (all now enhanced with AI) have become ingrained in the daily operations of tech-forward businesses. Major cloud and database providers are now offering agentic AI for enterprises.

Investors are pouring billions into OpenAI, despite the company operating at a loss, because they are betting that AI is the future. All these facts point to one thing: AI is here to stay.

How to adopt AI responsibly

Early in my entrepreneurial journey, I learned that being proactive is a more effective strategy than being reactive, and that applies to everything, including AI. If you ask questions like “Are you using AI?” to support employees rather than to reprimand them, you’re on the right track. You don’t need to overcomplicate things; just start.

Step 1: Guide, don’t forbid

Create a simple Acceptable Use Policy (AUP) with dos and don’ts. Please, no 40-page PDFs that no one besides their author has ever opened. Clearly define what is and is not acceptable. For example: AI tools are approved for brainstorming, learning, and working with nonsensitive code. Do not input any client data, PII, or company IP into public AI models.

Step 2: Equip your team

Invest in a secure, enterprise-level AI tool. The cost is minimal compared to the productivity gains and the risk of unmanaged use. Before you buy, survey your team about their preferences. They probably have plenty of prompts that work better in Gemini than in Claude or ChatGPT, or vice versa. Collect all the major use cases and research which tool addresses them best. This gives your team a secure, approved sandbox to work in.

Step 3: Educate and empower

Did you know that millennials are even bigger advocates for AI than Gen Zers? Some 62% of millennials self-report high expertise with AI. In many organizations, millennials occupy managerial positions, so they can become true champions of change. Their enthusiasm should be nurtured rather than stifled.

Run workshops. Share best practices for prompt engineering. Create a dedicated Slack/Teams channel for people to share cool use cases and discoveries. Turn it into a positive, collaborative exploration.

Step 4: Listen and iterate

Don’t carve the policy in stone. Let your team explore, gather their feedback, and then formulate more detailed policies grounded in their practical, real-world experience. You’ll learn what actually works and where the real risks lie.

Final thoughts

Things are moving extremely fast in the AI space, so fast that it’s challenging to keep up even without any bans. If you can’t avoid the inevitable, embrace it. Yes, data privacy and security are no joke, but banning AI is not how you protect your data. Let your team experiment and innovate within guardrails that you both find reasonable and agree on. Approve industry-compliant tools, provide training, and use AI to your advantage.
