Table of Links

Abstract and I. Introduction

II. Background

III. Paranoid Stateful Lambda

IV. SCL Design

V. Optimizations

VI. PSL with SCL

VII. Implementation

VIII. Evaluation

IX. Related Work

X. Conclusion, Acknowledgment, and References

IV. SCL DESIGN

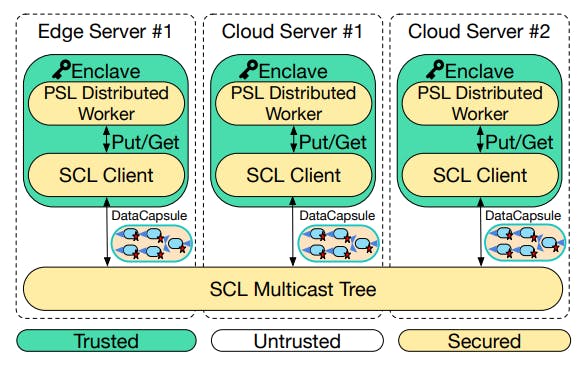

A. Overall Architecture

The essence of SCL is that every secure enclave replicates a portion of the underlying DataCapsule (Section IV-B), namely the portion dealing with active keys. This portion can be thought of as a write-ahead log. By allowing this log to branch, we free workers to be independent of one another for periods of time. The intuition is that we arrange these temporarily divergent histories to include sufficient information to provide well-defined, coherent, and eventually consistent semantics. We do so while allowing updates to be propagated over an insecure and unordered multicast tree.

Figure 4 provides an overview of SCL’s architecture. Each enclave maintains an in-memory replicated cache called a memtable. All put() operations are placed into the local memtable, timestamped, and linked with previous updates before being encrypted, signed, and forwarded via multicast. The code which performs these operations is a CAAPI that provides the KVS interface on top of the DataCapsule storage.

DataCapsule record appends are propagated by a network multicast tree. When an enclave receives a record to append, it verifies the record's cryptographic signatures and hashes and merges the record into its own replica of the DataCapsule. The merge requires no explicit coordination because of the CRDT property of the DataCapsule hash chain, but the hash chain is periodically synchronized to bound how far replicas can diverge. DataCapsule changes are also reflected in the memtable with eventual-consistency semantics. Because SCL provides a shared-memory abstraction, reads are fast: every get() operation reads directly from the enclave's local memtable without querying other nodes.

B. DataCapsule Contents For SCL

Figure 5 shows a visual representation of DataCapsule contents for SCL. Each record contains one or more SHA-256 hash pointers to previous records, encrypted data, and a signature. Multiple previous hash pointers occur during epoch-based resynchronization, which we discuss shortly. The data block of a record includes SenderID, a unique identifier of the writer; Timestamp, which indicates when the record was sent; and Data, the actual data payload. In SCL, Data is an AES-encrypted string that contains the updated key-value pairs. The record's Signature is computed over the entire record with the Elliptic Curve Digital Signature Algorithm (ECDSA) using the writer's private key.
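To make the record layout concrete, the following is a minimal Python sketch under several assumptions. The class name `Record`, its field names, and the JSON serialization are hypothetical; the payload is assumed to have been AES-encrypted before it reaches this code; and, to stay within the Python standard library, HMAC-SHA256 stands in for the ECDSA signature that SCL actually uses (unlike ECDSA, HMAC needs a shared secret rather than a public/private key pair).

```python
import hashlib
import hmac
import json
from dataclasses import dataclass
from typing import List


@dataclass
class Record:
    """Hypothetical in-memory shape of an SCL DataCapsule record."""
    prev_hashes: List[str]  # one or more SHA-256 hash pointers (hex)
    sender_id: str          # unique identifier of the writer
    timestamp: int          # logical timestamp (sequence number)
    data: bytes             # payload, assumed already AES-encrypted upstream
    signature: str = ""     # filled in by sign()

    def body(self) -> bytes:
        # Canonical serialization of every field except the signature.
        return json.dumps(
            {
                "prev": self.prev_hashes,
                "sender": self.sender_id,
                "ts": self.timestamp,
                "data": self.data.hex(),
            },
            sort_keys=True,
        ).encode()

    def sign(self, key: bytes) -> None:
        # Stand-in for ECDSA: HMAC-SHA256 over the body.
        # SCL signs with the writer's ECDSA private key instead.
        self.signature = hmac.new(key, self.body(), hashlib.sha256).hexdigest()

    def verify(self, key: bytes) -> bool:
        expected = hmac.new(key, self.body(), hashlib.sha256).hexdigest()
        return hmac.compare_digest(expected, self.signature)

    def digest(self) -> str:
        # Hash pointer that a later record stores in its prev_hashes.
        return hashlib.sha256(self.body() + self.signature.encode()).hexdigest()
```

A record's digest is what the writer's next record stores in `prev_hashes`, which is how consecutive records are chained; tampering with any field invalidates both the signature and the digest.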

C. Memtable

The Memtable KVS is an in-enclave cache of the most recently updated key-value pairs. The key-value pairs are stored in plaintext, as the confidentiality and integrity of the memtable are protected by the secure enclave’s EPC. In-enclave distributed applications communicate with other enclaves by interacting with the memtable using the standard KVS interface: put(key,value) to store a key-value pair and get(key) to retrieve the stored value for a given key.

SCL communicates with other enclaves to replicate the memtable consistently across enclaves. Local changes to the memtable are propagated to other enclaves, and updates received from other enclaves are reflected in the local memtable. Before putting a key-value pair received from another enclave into the memtable, SCL verifies and decrypts the update. To achieve coherence, an update received from a remote enclave is placed in the local cache only if its timestamp is later than that of the value already cached for the same key (see below).
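The coherence rule can be illustrated with a small sketch. Here a timestamp is assumed to be a (sequence number, SenderID) pair; breaking ties on SenderID is our assumption, since the text does not name a tie-breaker. `Memtable` and `apply` are hypothetical names, and verification and decryption are assumed to have happened before `apply` is called.

```python
from typing import Dict, Optional, Tuple

# Hypothetical timestamp: (logical sequence number, sender id).
# Tie-breaking on sender id is an assumption so that every enclave
# orders concurrent updates to the same key identically.
Stamp = Tuple[int, str]


class Memtable:
    """Minimal sketch of a memtable that accepts only newer updates."""

    def __init__(self) -> None:
        self._entries: Dict[str, Tuple[str, Stamp]] = {}

    def get(self, key: str) -> Optional[str]:
        # Reads are served locally, without contacting other enclaves.
        entry = self._entries.get(key)
        return entry[0] if entry is not None else None

    def apply(self, key: str, value: str, stamp: Stamp) -> bool:
        # Used for local puts and for verified, decrypted remote updates.
        current = self._entries.get(key)
        if current is not None and current[1] >= stamp:
            return False  # older or duplicate update: ignore it
        self._entries[key] = (value, stamp)
        return True
```

Because `apply` keeps only the larger timestamp for each key, two enclaves that receive the same updates in different orders end up holding the same value.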

D. Consistency

SCL guarantees eventual consistency of the values associated with each key: if no further updates are made to a specific key k, then get(k) on each in-enclave memtable eventually returns the latest value. In addition, SCL guarantees coherence among values: no two enclaves will ever observe two updates to a given key in different orders. Since updates are propagated over an unordered multicast tree, SCL must order the DataCapsule updates in the memtable and reject updates that are causally earlier than the ones already in the memtable. Naive approaches can lead to undesirable outcomes; for example, replacing the memtable's value whenever a new record arrives leaves the memtable in an inconsistent state, because a delayed, older record can overwrite a newer value.

To decide the order of the updates, SCL uses a Lamport logical clock to associate every key-value pair with a logical timestamp. Without relying on the actual clock time, SCL increments a local Sequence Number (SN) whenever there is an update to the local memtable. When receiving a new DataCapsule record, it also synchronizes the SN with the received SN in the record by SN ← max(localSN, receivedSN)+1.
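The sequence-number rule above is the standard Lamport clock update. A minimal sketch follows; the class name `LamportClock` and its method names are hypothetical.

```python
class LamportClock:
    """Sketch of the sequence-number (SN) rule described above."""

    def __init__(self) -> None:
        self.sn = 0

    def tick(self) -> int:
        # Local update to the memtable: increment SN.
        self.sn += 1
        return self.sn

    def receive(self, received_sn: int) -> int:
        # New DataCapsule record: SN <- max(localSN, receivedSN) + 1.
        self.sn = max(self.sn, received_sn) + 1
        return self.sn
```

After enclave B receives a record stamped 2 from enclave A, B's clock moves to 3, so any write B makes next is ordered after the update it has already seen.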

Although a vector clock is usually regarded as an upgrade to a Lamport clock because it carries a vector of all collected timestamps, SCL uses a Lamport clock because the DataCapsule already carries equivalent versioning and causality information, and a vector clock would add complexity and messaging overhead to the system. SCL does not use the timestamp provided by the operating system because obtaining it costs more than 8,000 CPU cycles under the enclave security design [45]. Reading trusted hardware counters with the RDTSC and RDTSCP instructions is also expensive (60-250 ms) [9] and is only supported by SGX2.

E. Epoch-based Resynchronization

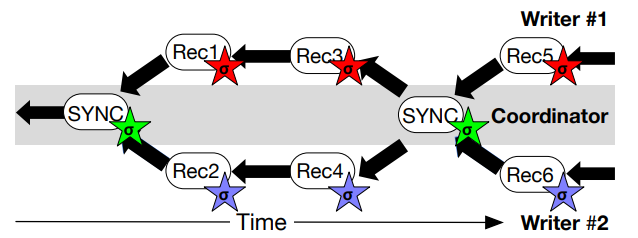

For performance, SCL branches the DataCapsule data structure by letting every enclave write to its own hash chain. Every append includes a hash pointer to the previous write from the same writer, instead of the previous write across all enclaves. To merge the hash chains maintained by each writer, SCL uses a synchronization (SYNC) report as a rendezvous point for all DataCapsule branches. The resulting DataCapsule hash chain forms a diamond shape, as shown in Figure 6. Each writer's first write includes a hash pointer to the previous SYNC report, and the next SYNC report includes a hash pointer to the last message of every writer. The SYNC report is useful in the following ways:

• Detecting the freshness of a message: Every message includes a monotonically increasing SYNC report sequence number, which is incremented when a new SYNC report is generated. As a

• Fast Inconsistency Recovery: After an enclave receives a SYNC report, it can use the hash pointers to backtrack through previous messages from the same writer until it reaches the last SYNC report. It detects that a message has been lost if a hash pointer cannot be resolved during the backtrack.
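The backtracking check in the last bullet can be sketched as follows. The sketch assumes a simplified replica, a dict from record hash to a record holding only its sender and hash pointers; `make_record` and `find_missing` are hypothetical helpers, and each writer's record is assumed to carry exactly one pointer (to that writer's previous write, or to the previous SYNC report for its first write in the epoch).

```python
import hashlib
from typing import Dict, List, Set


def sha256_hex(payload: str) -> str:
    return hashlib.sha256(payload.encode()).hexdigest()


def make_record(store: Dict[str, dict], sender: str, prev: List[str], body: str) -> str:
    """Add a hypothetical record to a local replica and return its hash."""
    digest = sha256_hex(f"{sender}|{','.join(prev)}|{body}")
    store[digest] = {"sender": sender, "prev": prev}
    return digest


def find_missing(store: Dict[str, dict], sync_prev: List[str], last_sync: str) -> Set[str]:
    """Walk each writer's branch back from the new SYNC report to last_sync.

    Returns the hash pointers this replica cannot resolve (lost messages).
    """
    missing: Set[str] = set()
    for tip in sync_prev:  # the SYNC report points at every writer's last message
        cursor = tip
        while cursor != last_sync:
            record = store.get(cursor)
            if record is None:
                missing.add(cursor)
                break  # this branch cannot be followed any further
            cursor = record["prev"][0]  # the same writer's previous write
    return missing
```

In the diamond of Figure 6, each writer's branch starts at the previous SYNC report, so walking every branch back to it either succeeds or stops at the first record this replica never received.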

The use of SYNC reports establishes a notion of epoch-based resynchronization, a tradeoff between synchronization overhead and consistency. With epoch-based resynchronization, the user defines a synchronization time interval called an epoch. Within an epoch, the enclaves use timestamps to achieve coherence and eventual consistency. At the end of an epoch, enclaves cross-validate their own DataCapsule replicas against the SYNC report generated by a special Coordinator Enclave (CE).

F. Multicast Tree

We conclude this section by describing the overall structure of the multicast tree. Multicast enables one node on the tree to communicate with multiple nodes through routers, which receive a message and re-broadcast it to multiple nodes or other routers. The resulting structure forms a multicast tree, which improves scalability by allowing more than one router to handle the communication. SCL is agnostic to the multicast tree topology, as long as a message published by any node on the tree is received by all the other nodes. As a result, we can abstract SCL's multicast tree as a plane on which worker enclaves publish DataCapsule updates through multicast routers, third-party durability storage can log all the messages, and third-party authenticators can verify the validity of the DataCapsule hash chain.

G. Durability and Fault Tolerance

We discuss the durability semantics of SCL and how we use it to store inactive keys. We also discuss how SCL behaves under several types of failures.

SCL Durability with DataCapsule: Any secure enclave in SCL may fail by crashing or losing its network connection, causing it to fall behind or even leaving its in-memory state inconsistent. SCL is durable if such an enclave can recover all of its in-memory state (i.e., the memtable) consistently and catch up with the ongoing communication. Because the DataCapsule hash chain and the memtable are equivalent, as shown in Section IV-C, SCL achieves durability by making the DataCapsule hash chain persistent. An analogy is the write-ahead logging (WAL) used in many databases, which persists an update to a log before committing it to the permanent database. In SCL, the DataCapsule is the append-only log that records the entire history of the memtable, which a crashed enclave can use to recover. The crashed enclave merges its local, possibly inconsistent DataCapsule hash chain with the persistent DataCapsule received from other components, either an in-enclave LSM tree DB or DataCapsule servers. The CRDT property of the DataCapsule guarantees the consistency of the hash chain after the merge.
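Recovery by merging can be illustrated with a simplified model in which each record is keyed by its hash and carries one key-value update with a (sequence number, SenderID) timestamp. This representation and the names `merge_replicas` and `rebuild_memtable` are assumptions for illustration. Because records are immutable and named by their hashes, merging two replicas reduces to a set union, which is the grow-only set CRDT: commutative, associative, and idempotent.

```python
from typing import Dict, Tuple

# Hypothetical record shape: hash -> (key, value, (sn, sender_id)).
Records = Dict[str, Tuple[str, str, Tuple[int, str]]]


def merge_replicas(local: Records, persistent: Records) -> Records:
    # Records are immutable and named by hash, so a merge is a set union.
    merged = dict(local)
    merged.update(persistent)
    return merged


def rebuild_memtable(records: Records) -> Dict[str, str]:
    # Replay every record; for each key the highest timestamp wins,
    # so the replay order does not matter.
    best: Dict[str, Tuple[Tuple[int, str], str]] = {}
    for key, value, stamp in records.values():
        if key not in best or stamp > best[key][0]:
            best[key] = (stamp, value)
    return {key: value for key, (_, value) in best.items()}
```

A crashed enclave that merges its partial replica with a persistent copy therefore obtains the same record set, and the same rebuilt memtable, regardless of which side it starts from.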

DataCapsule Replication: A DataCapsule replica may fail by crashing or by network partitioning, interrupting SCL service. A replica may also be corrupted and permanently lose some or all of the SCL data. To ensure the durability and availability of DataCapsule replicas, we implement a continuous DataCapsule replication system that uses write quorums to tolerate up to a user-defined number f of replica failures or network partitions without disturbing the PSL computation.
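The text does not specify the quorum sizes. A common choice, assumed here, is n = 2f + 1 replicas with a majority write quorum of f + 1, which still completes when f replicas are unreachable. `quorum_write` and the `Replica` callable type are hypothetical, and replicas are contacted one at a time for brevity, whereas a real system would send to them in parallel.

```python
from typing import Callable, List

# Hypothetical replica interface: returns True once the record is persisted.
Replica = Callable[[bytes], bool]


def quorum_write(replicas: List[Replica], record: bytes, f: int) -> bool:
    """Assumed majority-quorum write over at least 2f + 1 replicas."""
    if len(replicas) < 2 * f + 1:
        raise ValueError("need at least 2f + 1 replicas to tolerate f failures")
    quorum = len(replicas) // 2 + 1  # majority; equals f + 1 when n = 2f + 1
    acks = 0
    for replica in replicas:
        try:
            if replica(record):
                acks += 1
        except Exception:
            pass  # crashed or partitioned replica: no acknowledgment
        if acks >= quorum:
            return True
    return False
```

With three replicas and f = 1, a write survives one unreachable replica but fails when two are down, since any two majorities then still overlap in at least one replica holding the record.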

Coordinator Failure: A coordinator failure affects only the resynchronization interval; it does not affect the strong eventual consistency provided by the CRDT property of DataCapsules and the logical timestamps of the memtable. Nevertheless, multiple read-only shadow coordinators can be used to improve fault tolerance and remove the single point of failure. If multiple consistency coordinators are on the same multicast tree, one consistency coordinator actively sends RTS broadcasts to the multicast tree, while the others remain in shadow mode until no SYNC report has been sent for an extended period of time.
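The shadow-mode rule can be sketched as a timeout check. The class name `ShadowCoordinator`, the `timeout_s` parameter, and the injectable clock (used so the behavior can be tested deterministically) are hypothetical, and the sketch ignores how several shadows would agree on which one takes over.

```python
import time
from typing import Callable


class ShadowCoordinator:
    """Hypothetical shadow coordinator, passive while SYNC reports flow."""

    def __init__(self, timeout_s: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.timeout_s = timeout_s
        self.clock = clock
        self.last_sync_seen = clock()
        self.active = False

    def on_sync_report(self) -> None:
        # Any SYNC report from the active coordinator resets the timer.
        self.last_sync_seen = self.clock()

    def poll(self) -> bool:
        # Promote this shadow once no SYNC report has arrived for timeout_s.
        if not self.active and self.clock() - self.last_sync_seen > self.timeout_s:
            self.active = True
        return self.active
```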

H. CapsuleDB

CapsuleDB is a key-value store inspired by LSM trees and backed by DataCapsules. It is built specifically to take advantage of the properties of DataCapsules to provide long-term storage of large amounts of data and to accelerate PSL recovery beyond reading every single record in the DataCapsule. Figure 3 shows how CapsuleDB fits into the PSL framework, with its separate enclave running on behalf of the Worker Enclaves.

CapsuleDB has two main data structures, CapsuleBlocks and indices, as well as its own memtable. A CapsuleBlock is a group of keys and corresponds to a single record in the DataCapsule backing the database. CapsuleDB's data storage structure is inspired by level-based databases such as RocksDB [40] and SplinterDB [12]. Data is split into levels of increasing size. In CapsuleDB, Level 0 (L0) is the smallest, while each subsequent level (L1, L2, and so on) is ten times larger than the one before it. Each level is made up of CapsuleBlocks. In L0, each block represents a memtable that has been filled and marked immutable. Blocks in lower levels each contain a sorted run of keys, such that the keys across the level are monotonically increasing.

The index manages which CapsuleBlocks are in each level, the hashes of each block, and which blocks contain active data. The index also acts as a checkpoint system for CapsuleDB, as it too is stored in the DataCapsule. While the main purpose of storing the index in the DataCapsule is to ensure CapsuleDB can quickly restore service after a failure, it has the added benefit that old copies of the indices serve as snapshots of the database over the lifetime of its operation.

Writing to CapsuleDB: CapsuleDB participates in SCL just like the worker enclaves. Consequently, every write is also stored in CapsuleDB's local memtable, which gives it visibility into the most recent values written by the workers. Once the memtable fills, it is marked immutable and appended to the DataCapsule. The resulting record's hash is then stored in the L0 portion of the index. If this write causes L0 to become full, the compaction process described below is triggered, pushing compacted blocks out to the DataCapsule.
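A minimal sketch of this write path follows. It assumes a dict-based memtable that counts as full after a fixed number of entries and an L0 whose capacity is measured in blocks; `CapsuleDBWriter`, `memtable_limit`, and `l0_limit` are hypothetical names, a Python dict stands in for the DataCapsule, and compaction is reduced to a flag.

```python
import hashlib
import json
from typing import Dict, List


class CapsuleDBWriter:
    """Hypothetical sketch of the CapsuleDB write path."""

    def __init__(self, memtable_limit: int, l0_limit: int) -> None:
        self.memtable_limit = memtable_limit  # entries before the memtable is full
        self.l0_limit = l0_limit              # blocks before L0 is full
        self.memtable: Dict[str, str] = {}
        self.datacapsule: Dict[str, bytes] = {}  # stand-in store: hash -> record
        self.index_l0: List[str] = []            # hashes of L0 blocks, oldest first
        self.compaction_triggered = False

    def put(self, key: str, value: str) -> None:
        # Every write seen on the multicast tree lands in the memtable.
        self.memtable[key] = value
        if len(self.memtable) >= self.memtable_limit:
            self._flush()

    def _flush(self) -> None:
        # Freeze the memtable and append it to the DataCapsule as one record.
        block = json.dumps(self.memtable, sort_keys=True).encode()
        digest = hashlib.sha256(block).hexdigest()
        self.datacapsule[digest] = block
        # Store the resulting record's hash in L0 of the index.
        self.index_l0.append(digest)
        self.memtable = {}
        if len(self.index_l0) >= self.l0_limit:
            # Real compaction would merge L0 into L1; here we only flag it.
            self.compaction_triggered = True
```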

Reading from CapsuleDB: Retrieving a value from CapsuleDB is triggered by a get operation from one of the worker enclaves when the requested value is not stored in its local memtable. This get is routed to CapsuleDB, which first checks its own memtable. If the requested key is not found there, the request moves to the index for CapsuleBlock retrieval. Once CapsuleDB finds a value for the requested key, it multicasts the result as a put on the multicast tree, with the timestamp recorded in the block, exactly as if it were a worker enclave.

When searching for the most recent value associated with a key, CapsuleDB first checks the index associated with L0. If the key is found after scanning through each block, the corresponding tuple is returned. If the search fails, the process is repeated at L1. Unlike L0, however, L1 is sorted, so the search can be performed substantially faster. In addition, L1 is likely too large to bring fully into memory, especially given the tight memory constraints imposed by some secure enclaves. As such, only the block that may contain the requested key is retrieved from the DataCapsule and checked for the requested KV pair. If the pair is absent, the same procedure is repeated at lower levels until it is found or CapsuleDB determines that it does not have the requested KV pair.
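The read path can be sketched as below. The sketch assumes in-memory dicts for blocks, L0 as a list of possibly overlapping blocks ordered oldest to newest, and each lower level as a list of non-overlapping blocks sorted by their smallest key; `lookup` and these representations are hypothetical. In CapsuleDB, the candidate block at L1 and below would be fetched from the DataCapsule rather than held in memory.

```python
import bisect
from typing import Dict, List, Optional, Tuple

Block = Dict[str, str]


def lookup(
    key: str,
    memtable: Block,
    l0_blocks: List[Block],
    lower_levels: List[List[Tuple[str, Block]]],
) -> Optional[str]:
    """Hypothetical read path: memtable, then L0, then sorted levels."""
    if key in memtable:
        return memtable[key]
    # L0 blocks may overlap, so scan them from newest to oldest.
    for block in reversed(l0_blocks):
        if key in block:
            return block[key]
    # L1 and below are sorted runs: binary-search for the one candidate block.
    for level in lower_levels:  # (min_key, block) pairs sorted by min_key
        mins = [min_key for min_key, _ in level]
        i = bisect.bisect_right(mins, key) - 1
        if i >= 0:
            block = level[i][1]  # only this block would be fetched
            if key in block:
                return block[key]
    return None
```

Searching higher levels first means the freshest copy of a key is returned, since older copies only ever live further down.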

Indexing the Blocks: The index is at the core of CapsuleDB and serves several critical roles. Primarily, it tracks the hashes of active CapsuleBlocks to quickly look up keys. Whenever a block is added, removed, or modified, the hash mapping is updated in the index. When compaction (the process of moving old data to lower levels) occurs, the index records which levels the moved CapsuleBlocks now belong to. In this way, CapsuleDB can always quickly find the most recently updated CapsuleBlock that may contain a requested key. Further, CapsuleDB keeps a complete record of the history of updates to the KV store, acting much like a git repository.

Since the index is written out whenever it is modified, a CapsuleDB instance can be restored simply by loading the most recent index. Then only the records written since the last index update need to be replayed to restore the most recent KV pairs that were in CapsuleDB's memtable.
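Restoration from the latest index can be sketched with a toy log in which each DataCapsule record is tagged either as an index snapshot or as a put. The tagging scheme and `restore` are hypothetical, and replaying in log order is a simplification; SCL orders updates by logical timestamps.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical log entries: ("index", snapshot) or ("put", (key, value)).
Entry = Tuple[str, object]


def restore(log: List[Entry]) -> Tuple[Optional[object], Dict[str, str]]:
    """Load the newest index, then replay only the records written after it."""
    start, index = 0, None
    # Scan backwards: everything before the newest index is already captured by it.
    for pos in range(len(log) - 1, -1, -1):
        kind, payload = log[pos]
        if kind == "index":
            start, index = pos + 1, payload
            break
    memtable: Dict[str, str] = {}
    for kind, payload in log[start:]:
        if kind == "put":
            key, value = payload
            memtable[key] = value
    return index, memtable
```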

Compaction: Compaction is critical to managing the data in any level-based system. CapsuleDB's compaction process uses key insights from the flush-then-compact strategy of SplinterDB [12] to limit write amplification. Each level has a maximum size; we say a level is full once the summed sizes of the CapsuleBlocks in that level meet or exceed the level's maximum size. This triggers a compaction.
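With each level ten times larger than the one before it, the per-level maximum sizes and the fullness test just described can be expressed as follows. `level_capacities`, `is_full`, and the L0 capacity used in the test are hypothetical, and the size unit (bytes, blocks, or entries) is left to the caller.

```python
from typing import List


def level_capacities(l0_capacity: int, num_levels: int, growth: int = 10) -> List[int]:
    # Each level may hold `growth` times as much data as the level above it.
    return [l0_capacity * growth**i for i in range(num_levels)]


def is_full(block_sizes: List[int], capacity: int) -> bool:
    # A level is full once its blocks' summed sizes meet or exceed its maximum.
    return sum(block_sizes) >= capacity
```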

All writes to CapsuleDB are first stored in the in-memory memtable. Once the memtable fills, it is marked immutable and written to L0 as a CapsuleBlock, and the block is simultaneously appended as a record to the DataCapsule. Once L0 is full, compaction begins by sorting the keys in L0. They are then inserted into the correct locations in L1 such that, after all the keys are inserted into L1, the level is still a monotonically increasing run of keys. Any keys in L0 that are already present in L1 replace the corresponding entries in L1, since the L0 values and timestamps are fresher. Finally, the CapsuleBlocks and their corresponding hashes are written out to the DataCapsule and updated in the index, marking the end of compaction.
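A simplified sketch of the L0-to-L1 merge follows. `compact_l0_into_l1` and the dict-based blocks are hypothetical; L0 is assumed to be ordered oldest to newest, so freshness is inferred from block order rather than from per-key timestamps. For brevity, the sketch rebuilds all of L1 instead of inserting keys in place, and it omits hashing the new blocks, appending them to the DataCapsule, updating the index, and the flush-then-compact optimization borrowed from SplinterDB.

```python
from typing import Dict, List

Block = Dict[str, str]


def compact_l0_into_l1(l0: List[Block], l1: List[Block], block_size: int) -> List[Block]:
    """Hypothetical L0 -> L1 compaction.

    l0 is ordered oldest to newest; l1 is a sorted run split into blocks.
    Returns the new L1 as sorted, non-overlapping blocks of <= block_size keys.
    """
    merged: Dict[str, str] = {}
    for block in l1:
        merged.update(block)
    # Apply L0 oldest-first so the freshest value for each key wins over L1.
    for block in l0:
        merged.update(block)
    keys = sorted(merged)  # keep L1 a monotonically increasing run of keys
    return [
        {k: merged[k] for k in keys[i:i + block_size]}
        for i in range(0, len(keys), block_size)
    ]
```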

:::info

Authors:

(1) Kaiyuan Chen, University of California, Berkeley;

(2) Alexander Thomas, University of California, Berkeley;

(3) Hanming Lu, University of California, Berkeley;

(4) William Mullen, University of California, Berkeley;

(5) Jeff Ichnowski, University of California, Berkeley;

(6) Rahul Arya, University of California, Berkeley;

(7) Nivedha Krishnakumar, University of California, Berkeley;

(8) Ryan Teoh, University of California, Berkeley;

(9) Willis Wang, University of California, Berkeley;

(10) Anthony Joseph, University of California, Berkeley;

(11) John Kubiatowicz, University of California, Berkeley.

:::

:::info

This paper is available on arxiv under CC BY 4.0 DEED license.

:::