How Not to Mess Up Your AI Build (and Actually Save Time)

We’re in the era of AI. Every company wants to add some kind of AI feature to its product. Large language models (LLMs) are popping up everywhere, and some companies are even building their own. But while everyone’s jumping on the hype, I want to share a few things I’ve learned from working with LLMs across different projects over the past year.

1. Pick the Right Model for the Right Job

One big mistake I’ve seen (and made) is assuming all LLMs are equal. They’re not.

Some models know much more about certain locations or domains than others (in my experience, Gemini does well in some areas where OpenAI models don’t). Some are good at reasoning but slow. Others are fast but weak at critical thinking.

Each model has its own strengths. For deep reasoning tasks, reach for OpenAI’s GPT-4; for other areas, Claude or Gemini may be a better fit, depending on how they’ve been trained. Models like Gemini Flash are optimized for speed, but they tend to skip deeper reasoning.

The bottom line: Don’t use one model for everything. Be intentional. Try, test, and pick the best one for your use case.
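One way to make “try, test, and pick” concrete is a tiny evaluation harness. The sketch below is deliberately provider-neutral: it assumes each candidate model is wrapped as a plain Python function from prompt to text, and `evaluate`, `pick_best`, the stub models, and the exact-match scoring are hypothetical illustrations, not any SDK’s API.

```python
from __future__ import annotations

import time
from dataclasses import dataclass
from typing import Callable

# Assumption: each candidate model is wrapped as a function prompt -> text.
# In a real project these would call different provider SDKs.
ModelFn = Callable[[str], str]


@dataclass
class EvalResult:
    name: str
    accuracy: float       # fraction of test cases answered correctly
    avg_latency_s: float  # mean wall-clock seconds per call


def evaluate(name: str, model: ModelFn, cases: list[tuple[str, str]]) -> EvalResult:
    """Run one model over (prompt, expected) pairs; measure accuracy and latency."""
    correct = 0
    total_time = 0.0
    for prompt, expected in cases:
        start = time.perf_counter()
        answer = model(prompt)
        total_time += time.perf_counter() - start
        # Naive exact-match scoring; real evals usually need task-specific checks.
        if answer.strip().lower() == expected.strip().lower():
            correct += 1
    return EvalResult(name, correct / len(cases), total_time / len(cases))


def pick_best(results: list[EvalResult], min_accuracy: float) -> EvalResult | None:
    """Among models that clear the accuracy bar, prefer the fastest one."""
    good_enough = [r for r in results if r.accuracy >= min_accuracy]
    return min(good_enough, key=lambda r: r.avg_latency_s, default=None)


# Hypothetical stub models: one fast but wrong, one slower but right.
ANSWERS = {"Capital of France?": "Paris", "2 + 2?": "4"}


def fast_stub(prompt: str) -> str:
    return "unknown"


def slow_stub(prompt: str) -> str:
    time.sleep(0.01)  # simulate a slower reasoning model
    return ANSWERS[prompt]


cases = list(ANSWERS.items())
results = [evaluate("fast", fast_stub, cases), evaluate("slow", slow_stub, cases)]
best = pick_best(results, min_accuracy=0.9)
print(best.name)  # slow
```

The selection rule here (clear an accuracy bar, then take the fastest) is only one possible policy; depending on the feature, you might weigh cost or latency more heavily.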

2. Don’t Expect LLMs to Do All the Thinking

I used to believe you could just throw a prompt at an LLM, and it would do all the heavy lifting.

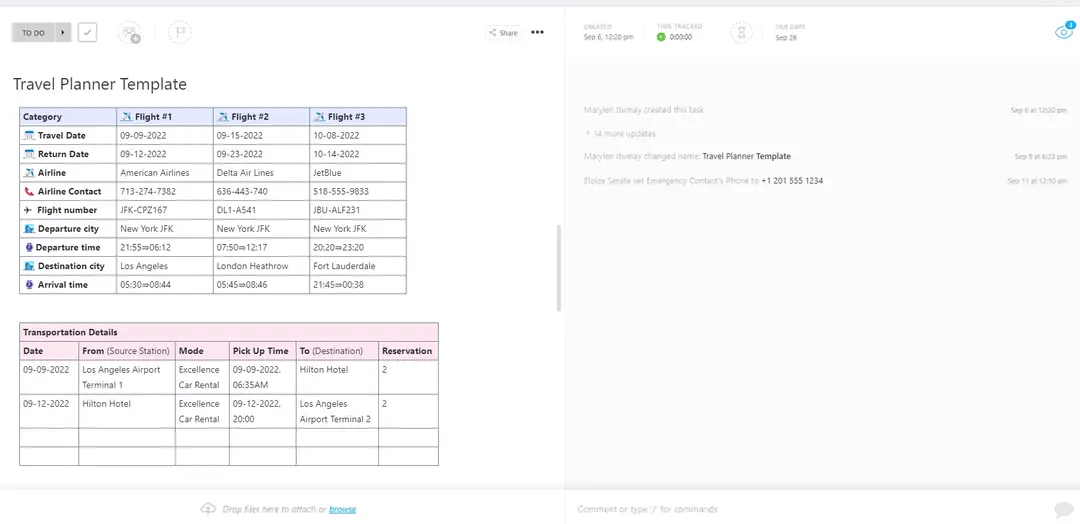

For example, I was working on a project where users selected their favorite teams, and the app had to generate a match-based travel itinerary. At first, I thought I could just send the whole match list to the LLM and expect it to pick the best ones and build the itinerary. It didn’t work.

It was slow, messy, and unreliable.

So I changed the approach: the system picks the right matches first, then passes only the relevant ones to the LLM to generate the itinerary. That worked much better.

Lesson: Let your app handle the logic. Use LLMs to generate things, not to decide everything. They’re great at language but not always great at logic, at least for now.
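Here is a minimal sketch of the “filter in code, then generate” split. The `Match` fields, the selection rule (user’s teams, inside the trip dates, capped at a few matches), the team names, and the prompt wording are all assumptions for illustration; the original project’s actual criteria aren’t described here.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Match:
    home: str
    away: str
    day: date
    city: str


def select_matches(matches: list[Match], favorite_teams: set[str],
                   start: date, end: date, max_matches: int = 5) -> list[Match]:
    """Deterministic pre-filter: only the user's teams, only inside the trip window."""
    relevant = [
        m for m in matches
        if (m.home in favorite_teams or m.away in favorite_teams)
        and start <= m.day <= end
    ]
    relevant.sort(key=lambda m: m.day)  # chronological order for the itinerary
    return relevant[:max_matches]


def build_itinerary_prompt(selected: list[Match]) -> str:
    """The LLM receives only the short, already-decided list and writes the plan."""
    lines = [f"- {m.day.isoformat()}: {m.home} vs {m.away} in {m.city}" for m in selected]
    return (
        "Write a day-by-day travel itinerary that attends exactly these matches, "
        "in this order:\n" + "\n".join(lines)
    )


# Hypothetical fixture list.
matches = [
    Match("Lions", "Bears", date(2025, 6, 3), "Madrid"),
    Match("Hawks", "Wolves", date(2025, 6, 4), "Lisbon"),
    Match("Tigers", "Lions", date(2025, 6, 1), "Porto"),
    Match("Lions", "Hawks", date(2025, 7, 20), "Seville"),
]
selected = select_matches(matches, {"Lions"}, date(2025, 6, 1), date(2025, 6, 30))
prompt = build_itinerary_prompt(selected)
```

The model never sees the Lisbon or Seville fixtures, so it cannot pick the wrong ones; its only job is to turn a known-good list into readable text.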

3. Give Each Agent One Responsibility

Trying to make a single LLM do multiple jobs is a recipe for confusion.

In one of my projects, I had a supervisor agent that routed messages to different specialized agents based on user input. At first, I gave it too much logic: handling context, figuring out follow-ups, deciding thread continuity, and so on. Eventually it got confused and made the wrong calls.

So I split it up. I moved some of that logic (like thread continuity) out of the supervisor and kept it focused only on routing. After that, things became much more stable.

Lesson: Don’t overload your agents. Keep one responsibility per agent. This helps reduce hallucinations and improve reliability.
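A sketch of what “supervisor only routes” can look like: thread continuity is decided by ordinary code before the supervisor is ever called, and the supervisor’s output is validated against a fixed set of agent names. The agent names, the 10-minute continuity window, and `fake_classify` (a stand-in for the supervisor LLM call) are all hypothetical; a real continuity rule would likely be richer than a time window.

```python
from __future__ import annotations

from datetime import datetime, timedelta
from typing import Callable

# Hypothetical agent names; replace with your app's specialized agents.
AGENTS = {"itinerary", "tickets", "general"}
FALLBACK_AGENT = "general"

# Assumption: a message "continues" the thread if the last reply was recent.
CONTINUITY_WINDOW = timedelta(minutes=10)


def continues_thread(last_time: datetime | None, now: datetime) -> bool:
    """Thread continuity is decided by plain code, not by the supervisor LLM."""
    return last_time is not None and now - last_time <= CONTINUITY_WINDOW


def route(message: str, classify: Callable[[str], str],
          last_agent: str | None, last_time: datetime | None,
          now: datetime) -> str:
    """Return the name of the agent that should handle `message`."""
    if last_agent is not None and continues_thread(last_time, now):
        return last_agent  # follow-up: no LLM call needed
    # The supervisor's single job: map a fresh request to one agent name.
    choice = classify(message).strip().lower()
    # Don't trust free-form model output; fall back if the name is unknown.
    return choice if choice in AGENTS else FALLBACK_AGENT


# Stand-in for the supervisor LLM call (hypothetical, for illustration only).
def fake_classify(message: str) -> str:
    return "Tickets" if "ticket" in message.lower() else "weather"


now = datetime(2025, 6, 1, 12, 0)
print(route("Any tickets left?", fake_classify, None, None, now))  # tickets
print(route("And the day after?", fake_classify,
            "itinerary", now - timedelta(minutes=3), now))       # itinerary
print(route("Will it rain?", fake_classify, None, None, now))      # general
```

Because the supervisor prompt only has to answer “which agent?”, it stays short, and any out-of-vocabulary answer is caught by code instead of silently misrouting.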

4. Latency Is Inevitable – Use Streaming

LLMs that are good at reasoning are usually slow. That’s just the reality right now. Some models, like GPT-4 or Claude 2, take their time, especially with complex prompts. You can’t fully eliminate the delay, but you can make the wait feel shorter for the user.

One way to do that? Stream the output as it’s generated. Most LLM APIs support streaming, so you can start forwarding partial responses (even sentence by sentence) while the rest is still being generated.

In my apps, I stream whatever’s ready to the client as soon as it’s available. It gives users a sense of progress, even if the full result takes a bit longer.

Lesson: You can’t avoid latency, but you can hide it. Stream early and often; even partial output makes a big difference in perceived speed.
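Provider SDKs expose streams differently, so this sketch stays provider-neutral: it assumes the model client yields an async iterator of text chunks (faked here by the hypothetical `fake_model_stream`) and shows one way to regroup those chunks into whole sentences before pushing them to the client. The sentence-boundary regex is a deliberately naive assumption.

```python
import asyncio
import re
from typing import AsyncIterator

# A sentence ends at ., ! or ? followed by whitespace. Naive on purpose:
# abbreviations such as "e.g. " will trigger an early flush.
SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


async def sentences(chunks: AsyncIterator[str]) -> AsyncIterator[str]:
    """Re-chunk a token stream into whole sentences as soon as each completes."""
    buffer = ""
    async for chunk in chunks:
        buffer += chunk
        parts = SENTENCE_END.split(buffer)
        # Every part except the last is a finished sentence; the last may be partial.
        for sentence in parts[:-1]:
            yield sentence
        buffer = parts[-1]
    if buffer.strip():
        yield buffer  # flush whatever remains when the stream ends


async def fake_model_stream() -> AsyncIterator[str]:
    """Stand-in for a real streaming LLM client that yields small text chunks."""
    for chunk in ["Day 1: arrive in Po", "rto. Day 2: watch the ", "match! Day 3: fly home."]:
        await asyncio.sleep(0)  # pretend we waited on the network
        yield chunk


async def main() -> list:
    sent = []
    async for s in sentences(fake_model_stream()):
        sent.append(s)  # in a real app, push each sentence to the client here
    return sent


result = asyncio.run(main())
print(result)  # ['Day 1: arrive in Porto.', 'Day 2: watch the match!', 'Day 3: fly home.']
```

Forwarding whole sentences rather than raw tokens is a design choice: it costs a little latency per sentence but avoids showing half-words in the UI.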

5. Fine-Tuning Can Save You Time (and Tokens)

People often avoid fine-tuning because it seems complex or expensive. But in some cases, it actually saves a lot.

If your prompts need to include the same structure or context every time, and caching doesn’t help, you’re spending extra tokens and time on every request. Instead, fine-tune the model on that structure. After that, you don’t need to pass the same examples every time; the model already knows what to do.

But be careful: don’t fine-tune on data that changes frequently, like flight times or prices. You’ll end up teaching the model outdated information. Fine-tuning works best when the logic and format are stable.

Lesson: Fine-tune when things are consistent, not when they’re constantly changing. It saves effort in the long run and leads to shorter, faster, cheaper prompts.
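The sketch below prepares training data in the chat-style JSONL layout that OpenAI’s fine-tuning API documents (one `{"messages": [...]}` object per line); other providers use different formats. The system prompt, the helper names, and the example trip are hypothetical. The point it illustrates is the split above: the stable output format goes into training examples, while volatile facts such as flight times are injected at request time.

```python
from __future__ import annotations

import json

# Short system prompt kept after fine-tuning; the long format spec and worked
# examples that used to be pasted into every request are no longer needed.
SYSTEM = "You write match-day travel itineraries in our house format."


def training_record(request: str, ideal_answer: str) -> dict:
    """One chat-style example; the stable output format lives in `ideal_answer`."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM},
            {"role": "user", "content": request},
            {"role": "assistant", "content": ideal_answer},
        ]
    }


def to_jsonl(records: list[dict]) -> str:
    """Serialize examples as JSONL: one JSON object per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records) + "\n"


def runtime_messages(request: str, live_data: str) -> list[dict]:
    """Volatile facts (prices, flight times) are passed per request, never trained in."""
    return [
        {"role": "system", "content": SYSTEM},
        {"role": "user", "content": f"{request}\n\nCurrent data:\n{live_data}"},
    ]


# Hypothetical example: the answer teaches structure, not prices or times.
records = [
    training_record(
        "Plan a 2-day trip around: 2025-06-01 Tigers vs Lions, Porto",
        "Day 1 (Porto): arrive, check in, match in the evening.\n"
        "Day 2 (Porto): free morning, depart.",
    )
]
jsonl = to_jsonl(records)
messages = runtime_messages("Plan my Porto trip", "Flight LIS-OPO departs 09:10")
```

If a value in the training answers would be wrong next month, it belongs in `live_data`, not in the JSONL file.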

🎯 Final Thoughts

Working with LLMs is not just about prompts and APIs. It’s about architecture, performance, clarity, and most importantly, knowing what to expect (and what not to expect) from these models.

Hope this helps someone out there building their next AI feature.