Content Overview

- Overview
- Setup
- Enabling the new type promotion
- Two modes: ALL mode vs SAFE mode
  - Dtypes
  - Example of precision losing operations
  - Example of bit-widening operations
- A System Based on a Lattice
  - Type Promotion Lattice
  - Type Promotion Table
  - Advantages of The New Type Promotion
- WeakTensor
  - Overview
  - WeakTensor construction

Overview

There are 4 options for type promotion in TensorFlow.

- By default, TensorFlow raises errors instead of promoting types for mixed type operations.

- Running tf.experimental.numpy.experimental_enable_numpy_behavior() switches TensorFlow to use NumPy type promotion rules.

- This doc describes two new options, ALL mode and SAFE mode, that will be available in TensorFlow 2.15 (or currently in tf-nightly):

pip install -q tf_nightly

Note: experimental_enable_numpy_behavior changes the behavior of all of TensorFlow.

Setup

import numpy as np

import tensorflow as tf

import tensorflow.experimental.numpy as tnp

print("Using TensorFlow version %s" % tf.__version__)

Using TensorFlow version 2.20.0-dev20250306

Enabling the new type promotion

In order to use the JAX-like type promotion in TF-Numpy, specify either 'all' or 'safe' as the dtype conversion mode when enabling NumPy behavior for TensorFlow.

This new system (with dtype_conversion_mode="all") is associative and commutative, and it makes it easy to control the width of the floats you end up with, because it doesn’t automatically convert to wider floats. It does introduce some risk of overflow and precision loss, but dtype_conversion_mode="safe" forces you to handle those cases explicitly. The two modes are explained in more detail in the next section.

tnp.experimental_enable_numpy_behavior(dtype_conversion_mode="all")

WARNING:tensorflow:UserWarning: enabling the new type promotion must happen at the beginning of the program. Please ensure no TF APIs have been used yet.

Two Modes: ALL mode vs SAFE mode

In the new type promotion system, we introduce two modes: ALL mode and SAFE mode. ALL mode allows every implicit promotion. SAFE mode mitigates the concerns of “risky” promotions that can result in precision loss or bit-widening by raising a TypeError instead.

Dtypes

We will be using the following abbreviations for brevity.

- b means tf.bool
- u8 means tf.uint8
- i16 means tf.int16
- i32 means tf.int32
- bf16 means tf.bfloat16
- f32 means tf.float32
- f64 means tf.float64
- i32* means Python int or weakly-typed i32
- f32* means Python float or weakly-typed f32
- c128* means Python complex or weakly-typed c128

The asterisk (*) denotes that the corresponding type is “weak”: such a dtype is temporarily inferred by the system and can defer to other dtypes. This concept is explained in more detail in the WeakTensor section below.

Example of precision losing operations

In the following example, i32 + f32 is allowed in ALL mode but not in SAFE mode due to the risk of precision loss.

# i32 + f32 returns an f32 result in ALL mode.

tnp.experimental_enable_numpy_behavior(dtype_conversion_mode="all")

a = tf.constant(10, dtype = tf.int32)

b = tf.constant(5.0, dtype = tf.float32)

a + b # <tf.Tensor: shape=(), dtype=float32, numpy=15.0>

WARNING:tensorflow:UserWarning: enabling the new type promotion must happen at the beginning of the program. Please ensure no TF APIs have been used yet.

<tf.Tensor: shape=(), dtype=float32, numpy=15.0>

# This promotion is not allowed in SAFE mode.

tnp.experimental_enable_numpy_behavior(dtype_conversion_mode="safe")

a = tf.constant(10, dtype = tf.int32)

b = tf.constant(5.0, dtype = tf.float32)

try:
  a + b
except TypeError as e:
  print(f'{type(e)}: {e}') # TypeError: explicitly specify the dtype or switch to ALL mode.

WARNING:tensorflow:UserWarning: enabling the new type promotion must happen at the beginning of the program. Please ensure no TF APIs have been used yet.

<class 'TypeError'>: In promotion mode PromoMode.SAFE, implicit dtype promotion between (<dtype: 'int32'>, weak=False) and (<dtype: 'float32'>, weak=False) is disallowed. You need to explicitly specify the dtype in your op, or relax your dtype promotion rules (such as from SAFE mode to ALL mode).
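SAFE mode rejects i32 + f32 because float32 has a 24-bit significand. As a result, int32 values above 2**24 are not all representable. The sketch below shows that rounding using NumPy's float32 cast as a stand-in for the conversion TensorFlow would perform. It is an illustration only, not TF-NumPy's internals, and the helper survives_float32 is hypothetical.

```python
import numpy as np


def survives_float32(value: int) -> bool:
    """Hypothetical helper: True if an integer round-trips through float32 unchanged."""
    return int(np.float32(value)) == value


# 2**24 is exact; 2**24 + 1 and the int32 maximum are rounded.
for value in [2**24, 2**24 + 1, int(np.iinfo(np.int32).max)]:
    status = "exact" if survives_float32(value) else "rounded"
    print(f"{value} -> {float(np.float32(value))} ({status})")
```

The last case is the int32 maximum, 2147483647. It becomes 2147483648.0, a value that no longer fits in int32 at all.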

Example of bit-widening operations

In the following example, i8 + u32 is allowed in ALL mode but not in SAFE mode due to bit-widening. Bit-widening means producing a result with more bits than any of the inputs; here, the result is an i64 from 8-bit and 32-bit inputs. Note that the new type promotion semantics allow only necessary bit-widening.

# i8 + u32 returns an i64 result in ALL mode.

tnp.experimental_enable_numpy_behavior(dtype_conversion_mode="all")

a = tf.constant(10, dtype = tf.int8)

b = tf.constant(5, dtype = tf.uint32)

a + b

WARNING:tensorflow:UserWarning: enabling the new type promotion must happen at the beginning of the program. Please ensure no TF APIs have been used yet.

<tf.Tensor: shape=(), dtype=int64, numpy=15>

# This promotion is not allowed in SAFE mode.

tnp.experimental_enable_numpy_behavior(dtype_conversion_mode="safe")

a = tf.constant(10, dtype = tf.int8)

b = tf.constant(5, dtype = tf.uint32)

try:
  a + b
except TypeError as e:
  print(f'{type(e)}: {e}') # TypeError: explicitly specify the dtype or switch to ALL mode.

WARNING:tensorflow:UserWarning: enabling the new type promotion must happen at the beginning of the program. Please ensure no TF APIs have been used yet.

<class 'TypeError'>: In promotion mode PromoMode.SAFE, implicit dtype promotion between (<dtype: 'int8'>, weak=False) and (<dtype: 'uint32'>, weak=False) is disallowed. You need to explicitly specify the dtype in your op, or relax your dtype promotion rules (such as from SAFE mode to ALL mode).
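The widening to i64 is necessary rather than arbitrary. One way to see this is to search for the narrowest integer type whose range covers both inputs. The helper below is hypothetical and written with NumPy's np.iinfo. It is not the mechanism TF-NumPy uses (that is the lattice in the next section), but it reaches the same answer for this pair.

```python
import numpy as np

# Candidate result types, narrowest first (an assumption for illustration).
CANDIDATES = [np.int8, np.uint8, np.int16, np.uint16,
              np.int32, np.uint32, np.int64, np.uint64]


def narrowest_common_int(*dtypes):
    """Return the narrowest candidate integer dtype whose range covers every input."""
    lo = min(np.iinfo(d).min for d in dtypes)
    hi = max(np.iinfo(d).max for d in dtypes)
    for candidate in CANDIDATES:
        info = np.iinfo(candidate)
        if info.min <= lo and hi <= info.max:
            return np.dtype(candidate)
    return None  # no integer type covers both ranges (e.g. int8 and uint64)


print(narrowest_common_int(np.int8, np.uint32))  # int64
```

Neither int32 (too small for the uint32 maximum) nor uint32 (no negative values) can hold both ranges, so 64 bits is the minimum.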

A System Based on a Lattice

Type Promotion Lattice

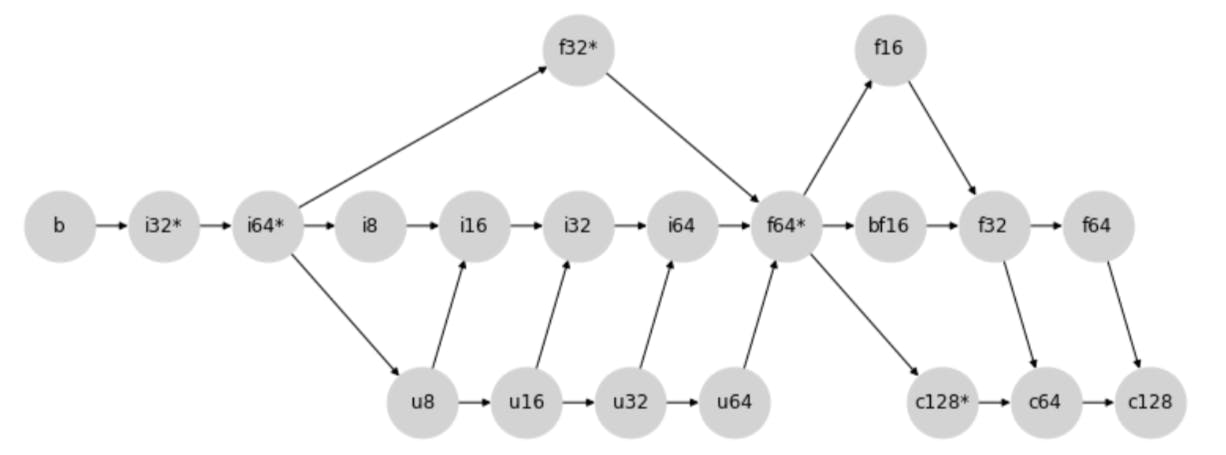

The new type promotion behavior is determined via the following type promotion lattice:

More specifically, promotion between any two types is determined by finding the first common child of the two nodes (including the nodes themselves).

For example, in the diagram above, the first common child of i8 and i32 is i32 because the two nodes intersect for the first time at i32 when following the direction of the arrows.

As another example, promoting u64 and f16 results in f16.
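The "first common child" rule can be sketched in plain Python. The edges in LATTICE are an assumption: they are transcribed as if the diagram matches JAX's published type promotion lattice. Weak types are written as i32*, f32*, and c128*, following the Dtypes section. The hypothetical promote() returns the common descendant from which every other common descendant is reachable.

```python
from functools import lru_cache

# Assumed transcription of the lattice diagram: each type maps to the types its
# arrows point to. Weak types use this doc's abbreviations (i32*, f32*, c128*).
LATTICE = {
    "b": ["i32*"],
    "i32*": ["u8", "i8"],
    "u8": ["u16", "i16"],
    "u16": ["u32", "i32"],
    "u32": ["u64", "i64"],
    "u64": ["f32*"],
    "i8": ["i16"],
    "i16": ["i32"],
    "i32": ["i64"],
    "i64": ["f32*"],
    "f32*": ["bf16", "f16", "c128*"],
    "bf16": ["f32"],
    "f16": ["f32"],
    "f32": ["f64", "c64"],
    "f64": ["c128"],
    "c128*": ["c64"],
    "c64": ["c128"],
    "c128": [],
}


@lru_cache(maxsize=None)
def reachable(node: str) -> frozenset:
    """All nodes reachable from `node` by following arrows, including itself."""
    seen = {node}
    stack = [node]
    while stack:
        for child in LATTICE[stack.pop()]:
            if child not in seen:
                seen.add(child)
                stack.append(child)
    return frozenset(seen)


def promote(a: str, b: str) -> str:
    """Return the first common child of `a` and `b` in the lattice."""
    common = reachable(a) & reachable(b)
    # The first common child is the one from which every other common child
    # is reachable; in a lattice there is exactly one such node.
    firsts = [c for c in common if common <= reachable(c)]
    if len(firsts) != 1:
        raise ValueError(f"no unique promotion for {a} and {b}")
    return firsts[0]


print(promote("i8", "i32"))    # i32
print(promote("u64", "f16"))   # f16
print(promote("i8", "u32"))    # i64
print(promote("f32*", "f16"))  # f16: the weak type defers

# Brute-force check of commutativity and associativity over all 18 nodes.
nodes = list(LATTICE)
print(all(promote(a, b) == promote(b, a) for a in nodes for b in nodes))
print(all(promote(a, promote(b, c)) == promote(promote(a, b), c)
          for a in nodes for b in nodes for c in nodes))
```

Because the sketch only knows lattice nodes, a pair such as u64 and i8 comes back as the weak float node f32*. How TensorFlow maps that node to a concrete dtype is outside this sketch.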

Type Promotion Table

Following the lattice generates the binary promotion table below:

Note: SAFE mode disallows the highlighted cells. ALL mode allows all cases.

We adopt a JAX-like lattice-based system for our new type promotion, which offers the following advantages:

Advantages of Lattice-Based System

First, using a lattice-based system ensures three very important properties:

- Existence: There is a unique result promotion type for any combination of types.

- Commutativity: a + b = b + a

- Associativity: a + (b + c) = (a + b) + c

These three properties are critical for constructing a type promotion semantics that is consistent and predictable.
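As a worked instance of associativity, take i8, u32, and f16, writing ∨ for promotion. Two of the steps come from this doc: i8 with u32 gives i64, and i64 with f16 gives f16. The other two, i8 with f16 and u32 with f16, are assumed to give f16 because the diagram's arrows from both i8 and u32 lead down through f* to f16.

$$
\begin{aligned}
(\mathrm{i8} \vee \mathrm{u32}) \vee \mathrm{f16} &= \mathrm{i64} \vee \mathrm{f16} = \mathrm{f16} \\
\mathrm{i8} \vee (\mathrm{u32} \vee \mathrm{f16}) &= \mathrm{i8} \vee \mathrm{f16} = \mathrm{f16}
\end{aligned}
$$

Both groupings land on f16, so the order in which a chain of mixed-type operations is evaluated does not change the result type.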

Advantages of JAX-like Lattice System

Another crucial advantage of the JAX-like lattice system is that, outside of unsigned ints, it avoids all wider-than-necessary promotions: you cannot get 64-bit results without 64-bit inputs. This is especially beneficial on accelerators, because it avoids the unnecessary 64-bit values that were frequent under the old type promotion.

However, this comes with a trade-off: mixed float/integer promotion is very prone to precision loss. For instance, in the example below, i64 + f16 results in promoting i64 to f16.

# The first input is promoted to f16 in ALL mode.

tnp.experimental_enable_numpy_behavior(dtype_conversion_mode="all")

tf.constant(1, tf.int64) + tf.constant(3.2, tf.float16) # <tf.Tensor: shape=(), dtype=float16, numpy=4.19921875>

WARNING:tensorflow:UserWarning: enabling the new type promotion must happen at the beginning of the program. Please ensure no TF APIs have been used yet.

<tf.Tensor: shape=(), dtype=float16, numpy=4.19921875>
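The f16 result above can be reproduced with NumPy's float16, used here only as a stand-in for TensorFlow's. The literal 3.2 already rounds to 3.19921875 in float16, float16 tops out at 65504, and integers beyond 2048 start losing precision. For contrast, NumPy's own promotion rules widen int64 and float16 to float64 instead. That avoids the loss, at the cost of exactly the kind of 64-bit value the lattice is designed to avoid.

```python
import numpy as np

# NumPy's float16 stands in for TensorFlow's f16; this is an illustration only.
print(float(np.float16(3.2)))                  # 3.19921875: 3.2 is not exact
print(float(np.float16(1) + np.float16(3.2)))  # 4.19921875, as in the output above
print(float(np.finfo(np.float16).max))         # 65504.0: largest finite f16
print(float(np.float16(4097)))                 # 4096.0: large ints get rounded

# NumPy's own rules widen instead of narrowing: int64 with float16 gives float64.
print(np.result_type(np.int64, np.float16))    # float64
```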

To mitigate this concern, we introduced a SAFE mode that disallows these “risky” promotions.

WeakTensor

Overview

Weak tensors are Tensors that are “weakly typed”, similar to a concept in JAX.

A WeakTensor’s dtype is temporarily inferred by the system and can defer to other dtypes. This concept is introduced in the new type promotion to prevent unwanted type promotion in binary operations between TF values and values with no explicitly user-specified type, such as Python scalar literals.

For instance, in the example below, tf.constant(1.2) is considered “weak” because it doesn’t have a specific dtype. Therefore, tf.constant(1.2) defers to the type of tf.constant(3.1, tf.float16), resulting in a f16 output.

tf.constant(1.2) + tf.constant(3.1, tf.float16) # <tf.Tensor: shape=(), dtype=float16, numpy=4.30078125>

<tf.Tensor: shape=(), dtype=float16, numpy=4.30078125>
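The printed 4.30078125, rather than 4.3, is exactly what float16 arithmetic produces, which is consistent with the addition having run in f16. The NumPy sketch below assumes that the weak operand's float32 value is rounded to float16 before the add. NumPy stands in for TensorFlow here.

```python
import numpy as np

# Assumption: the weak float32 value of 1.2 is rounded to float16 before the add.
weak_as_f16 = np.float16(np.float32(1.2))  # 1.2001953125
strong_f16 = np.float16(3.1)               # 3.099609375
deferred = weak_as_f16 + strong_f16
print(deferred.dtype, float(deferred))     # float16 4.30078125

# Had 1.2 been a strong float32, the f32/f16 pair would promote to float32 instead.
strong_f32 = np.float32(1.2) + strong_f16
print(strong_f32.dtype)                    # float32
```

The exact sum of the two f16 operands is 4.2998046875. That value is not representable in f16 and rounds to 4.30078125, matching the output above.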

WeakTensor Construction

A WeakTensor is created when you create a tensor without specifying a dtype, as in the two cases below. You can check whether a Tensor is “weak” by looking for weak=True at the end of the Tensor’s string representation.

First Case: When tf.constant is called with an input with no user-specified dtype.

tf.constant(5) # <tf.Tensor: shape=(), dtype=int32, numpy=5, weak=True>

<tf.Tensor: shape=(), dtype=int32, numpy=5, weak=True>

tf.constant([5.0, 10.0, 3]) # <tf.Tensor: shape=(3,), dtype=float32, numpy=array([ 5., 10., 3.], dtype=float32), weak=True>

<tf.Tensor: shape=(3,), dtype=float32, numpy=array([ 5., 10., 3.], dtype=float32), weak=True>

# A normal Tensor is created when dtype arg is specified.

tf.constant(5, tf.int32) # <tf.Tensor: shape=(), dtype=int32, numpy=5>

<tf.Tensor: shape=(), dtype=int32, numpy=5>

Second Case: When an input with no user-specified dtype is passed into a WeakTensor-supporting API.

tf.math.abs([100.0, 4.0]) # <tf.Tensor: shape=(2,), dtype=float32, numpy=array([100., 4.], dtype=float32), weak=True>

<tf.Tensor: shape=(2,), dtype=float32, numpy=array([100., 4.], dtype=float32), weak=True>

Originally published on the