Cursor has published Agent Trace, a draft open specification aimed at standardizing how AI-generated code is attributed in software projects. Released as a Request for Comments (RFC), the proposal defines a vendor-neutral format for recording AI contributions alongside human authorship in version-controlled codebases.

Cursor’s experience with AI-assisted coding tools highlights the need for better context tracking in code changes. While current tools like git blame show when a line was modified, they fail to identify whether the change was made by a human, an AI, or both. Agent Trace aims to capture this context in a structured, interoperable manner.

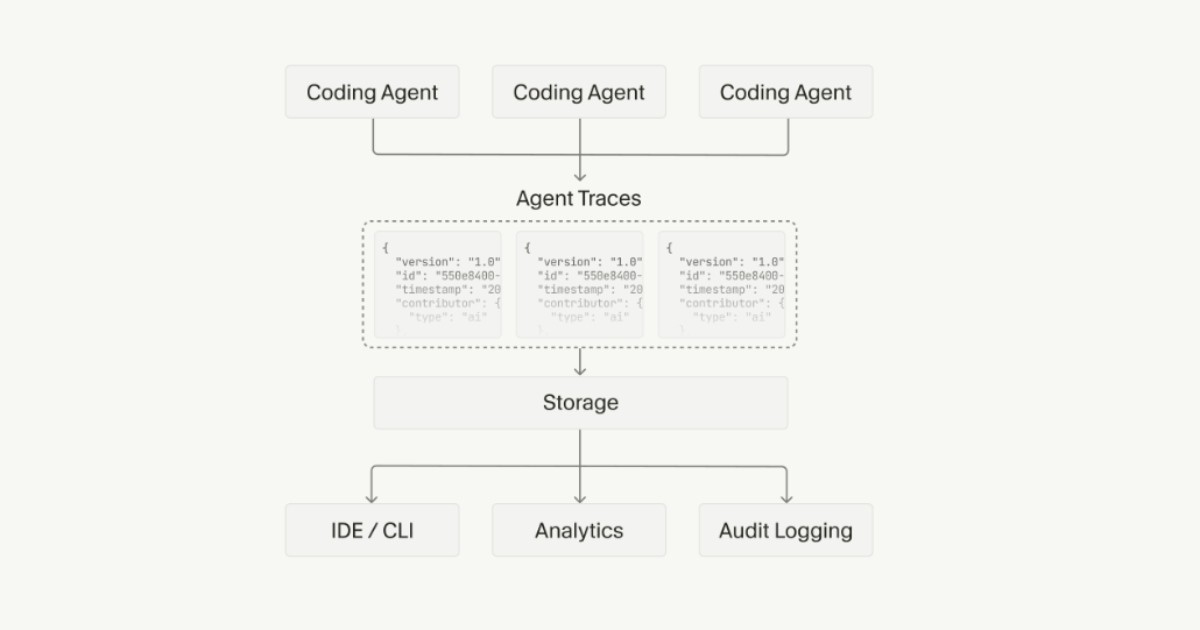

Agent Trace is a data specification that uses a JSON-based “trace record” to connect code ranges to the conversations and contributors behind them. Contributions can be tracked at the file or line level, grouped by conversation, and classified as human, AI, mixed, or unknown. The schema allows optional model identifiers for AI-generated code, so attribution can be precise without tying the format to a specific provider.

Source: https://agent-trace.dev/
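To make the idea of a trace record more concrete, here is a minimal Python sketch of how such a record might be modeled and serialized to JSON. The field names (`path`, `contributions`, `conversation_id`, `contributor`, `ranges`, `model_id`) and the 1-based inclusive line convention are assumptions made for illustration; they are not taken from the Agent Trace schema, which should be consulted for the actual structure.

```python
import json
from dataclasses import dataclass, asdict
from typing import List, Optional

# The four contributor classes named in the article.
CONTRIBUTOR_TYPES = {"human", "ai", "mixed", "unknown"}


@dataclass
class LineRange:
    start_line: int  # assumed 1-based, inclusive
    end_line: int


@dataclass
class Contribution:
    conversation_id: str            # hypothetical identifier for the originating conversation
    contributor: str                # one of CONTRIBUTOR_TYPES
    ranges: List[LineRange]
    model_id: Optional[str] = None  # optional, only meaningful for AI-authored code

    def __post_init__(self) -> None:
        if self.contributor not in CONTRIBUTOR_TYPES:
            raise ValueError(f"unknown contributor type: {self.contributor!r}")


@dataclass
class FileTrace:
    path: str
    contributions: List[Contribution]


def to_json(trace: FileTrace) -> str:
    """Serialize a trace record to a JSON string."""
    return json.dumps(asdict(trace), indent=2)


record = FileTrace(
    path="src/app.py",
    contributions=[
        Contribution("conv-1", "ai", [LineRange(10, 20)], model_id="example-model"),
        Contribution("conv-1", "human", [LineRange(21, 24)]),
    ],
)
print(to_json(record))
```

Keeping the model identifier optional mirrors the article's point that attribution should work without being tied to one provider.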

The specification is intentionally storage-agnostic. Cursor does not prescribe where trace records should live, allowing implementations to store them as files, git notes, database entries, or other mechanisms. Agent Trace also supports multiple version control systems, including Git, Jujutsu, and Mercurial, and introduces optional content hashes to help track attributed code even when it is moved or refactored.
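The role of content hashes can be illustrated with a short Python sketch: hash the attributed lines when the trace is recorded, then search a later version of the file for a window with the same hash. The choice of SHA-256, the whitespace normalization, and the function names `range_hash` and `find_moved_range` are all assumptions for this example; the article does not say which algorithm or matching strategy the specification uses.

```python
import hashlib
from typing import List, Optional


def range_hash(lines: List[str]) -> str:
    """Hash a block of lines, ignoring trailing whitespace on each line."""
    normalized = "\n".join(line.rstrip() for line in lines)
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_moved_range(file_lines: List[str], length: int, expected_hash: str) -> Optional[int]:
    """Return the 1-based start line of the first window whose hash matches, or None."""
    for start in range(0, len(file_lines) - length + 1):
        if range_hash(file_lines[start:start + length]) == expected_hash:
            return start + 1
    return None


# Record the hash of an attributed two-line block.
original = ["def f():", "    return 1", "", "x = 2"]
recorded = range_hash(original[0:2])

# Later, the block has moved further down the file.
moved = ["x = 2", "", "def f():", "    return 1"]
print(find_moved_range(moved, 2, recorded))
```

This naive scan only recognizes blocks that moved verbatim (apart from trailing whitespace). Code that was edited during a refactor would need a fuzzier matching strategy.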

Extensibility is a core design goal. Vendors can attach additional metadata using namespaced keys without breaking compatibility, and the spec avoids defining UI requirements or ownership semantics. It also does not attempt to assess code quality or track training data provenance, focusing narrowly on attribution and traceability.
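As a rough illustration of namespaced metadata, the Python sketch below stores vendor-specific values under prefixed keys so that two vendors can annotate the same record without colliding, and a reader can pick out only the namespace it understands. The `metadata` field, the reverse-domain prefix style (`com.example`), and both helper functions are hypothetical and not drawn from the specification.

```python
from typing import Any, Dict


def add_vendor_metadata(record: Dict[str, Any], namespace: str, values: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of the record with values stored under '<namespace>.<key>'."""
    merged = dict(record.get("metadata", {}))
    for key, value in values.items():
        merged[f"{namespace}.{key}"] = value
    return {**record, "metadata": merged}


def metadata_for(record: Dict[str, Any], namespace: str) -> Dict[str, Any]:
    """Extract one vendor's metadata, ignoring keys from other namespaces."""
    prefix = namespace + "."
    return {
        key[len(prefix):]: value
        for key, value in record.get("metadata", {}).items()
        if key.startswith(prefix)
    }


base = {"path": "src/app.py"}
annotated = add_vendor_metadata(base, "com.example", {"session": "s1"})
annotated = add_vendor_metadata(annotated, "org.other", {"session": "s2"})

print(metadata_for(annotated, "com.example"))
```

A consumer that does not recognize a namespace can simply skip those keys, which is one way the compatibility goal described above might be met.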

Cursor has included a reference implementation demonstrating how AI coding agents can automatically capture trace records as files change. While the example is built around Cursor’s own tooling, the patterns are intended to be reusable across other editors and agents.

Early reactions from developers have emphasized the potential impact on review and debugging workflows. One X user wrote:

“This is what you do when you’re serious about improving the state of agent-generated mess. Can’t wait to try this out in reviews.”

Another highlighted reproducibility as a key benefit, writing:

“Teams stop shipping when they can’t debug why an agent veered off path. Trace solves that. Good move opening it up.”

As an RFC, Agent Trace invites feedback and intentionally leaves open questions about how to handle merges, rebases, and large-scale agent-driven changes. With AI agents becoming more common in software development workflows, Cursor presents the proposal as a starting point for a shared standard rather than a complete solution.