The chatbot embedded in Elon Musk’s X that referred to itself as “MechaHitler” and made antisemitic comments last week could be considered terrorism or violent extremism content, an Australian tribunal has heard.

But an expert witness for X has argued that intent cannot be ascribed to a large language model, only to the user.

xAI, Musk’s artificial intelligence firm, last week apologised for the comments made by its Grok chatbot over a 16-hour period, which it attributed to “deprecated code” that made Grok susceptible to existing X user posts, “including when such posts contained extremist views”.

The outburst came into focus at an administrative review tribunal hearing on Tuesday where X is challenging a notice issued by the eSafety commissioner, Julie Inman Grant, in March last year asking the platform to explain how it is taking action against terrorism and violent extremism (TVE) material.

X’s expert witness, RMIT economics professor Chris Berg, gave evidence that it was an error to assume a large language model can produce such content, because it is the intent of the user prompting the model that is critical in defining what can be considered terrorism and violent extremism content.

One of eSafety’s expert witnesses, Queensland University of Technology law professor Nicolas Suzor, disagreed with Berg, stating it was “absolutely possible for chatbots, generative AI and other tools to have some role in producing so-called synthetic TVE”.

“This week has been quite full of them, with X’s chatbot Grok producing [content that] fits within the definitions of TVE,” Suzor said.

He said the development of AI involves human influence “all the way down”, where intent can be found, including Musk’s actions to change the way Grok was responding to queries to “stop being woke”.

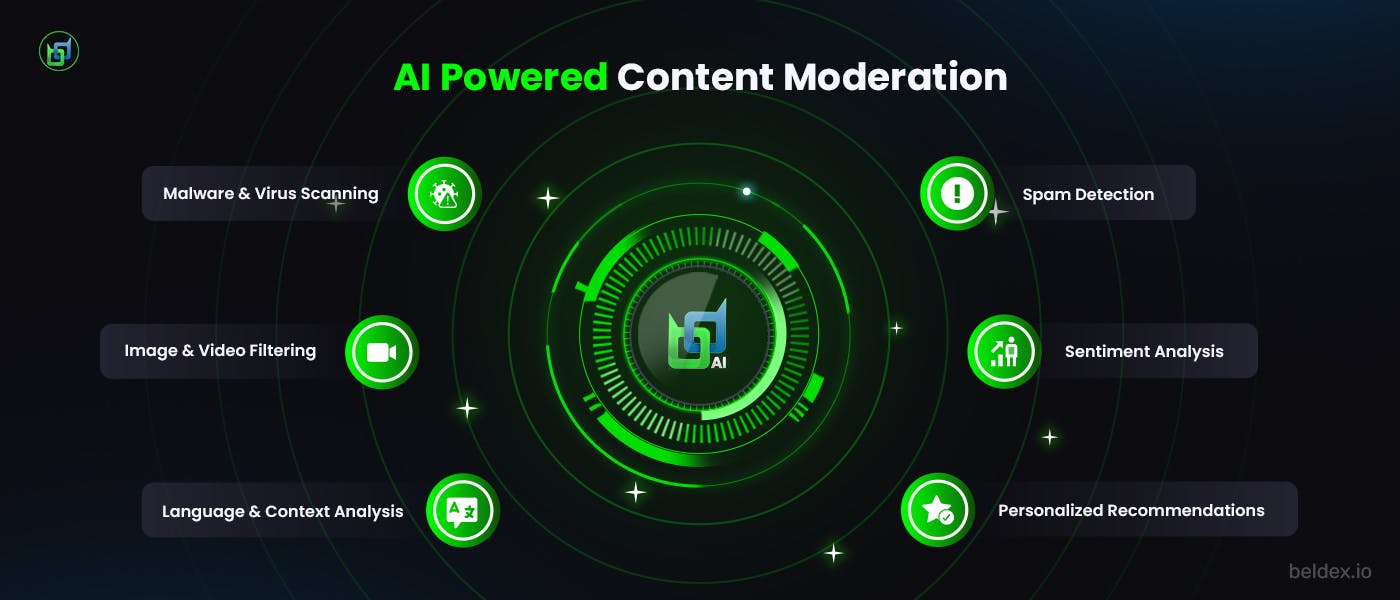

The tribunal heard that X believes the use of its Community Notes feature (where users can contribute to factchecking a post on the site) and Grok’s Analyse feature (where it provides context on a post) can detect or address TVE.

Both Suzor and fellow eSafety expert witness Josh Roose, a Deakin University associate professor of politics, told the hearing it was contested whether Community Notes was useful in this regard. Roose said acting on TVE required users to report the content to X, where the reports went into a “black box” for the company to investigate, and often only a small amount of material was removed and a small number of accounts banned.

Suzor said that after the events of last week, it was hard to view Grok as “truth seeking” in its responses.

“It’s uncontroversial to say that Grok is not maximalising truth or truth seeking. I say that particularly given the events of last week I would just not trust Grok at all,” he said.

Berg argued that the Grok Analyse feature on X had not been updated with the features that caused the platform’s chatbot to make the responses it did last week, but admitted the chatbot that users respond to directly on X had “gone a bit off the rails” by sharing hate speech content and “just very bizarre content”.

Suzor said Grok had been changed not to maximise truth seeking but “to ensure responses are more in line with Musk’s ideological view”.

Earlier in the hearing, lawyers for X accused eSafety of attempting to turn the hearing “into a royal commission into certain aspects of X”, after Musk’s comment referring to Inman Grant as a “commissar” was brought up in the cross-examination of an X employee about meetings held with X prior to the notice being issued.

The government’s barrister, Stephen Lloyd, said X was trying to argue that eSafety was being “unduly adversarial” in its dealings with the company, and said X had broken off negotiations at a critical point before the notice was issued. He said the “aggressive approach” came from X’s leadership.

The hearing continues.