As an architect, I know that you cannot create a resilient, coherent structure by starting with whatever ideas come to mind first. You don’t begin by arguing about the color of the curtains or the style of the doorknobs. You start with a master plan. Without one, you’re just dumping truckloads of miscellaneous materials onto a field and hoping a cathedral emerges.

Yet, this is exactly what we are doing with Artificial Intelligence ethics. We are feeding our most powerful new technology an endless list of fragmented rules, historical biases, and contradictory social customs. We are trying to govern a system that demands mathematical precision with a pile of miscellaneous moral materials. It is a recipe for systemic failure.

Consider the classic AI dilemma: an autonomous vehicle is about to crash. It can stay on course and harm its occupant, or swerve and harm a pedestrian. Our current approach has us endlessly debating the variables, such as the age of the pedestrian, the choices of the driver, and the speed of the car. We are lost in the details because we have no master plan. We keep searching the huge pile of moral data for the right rules, but those rules never align into a resilient structure because they lack a common, unbreakable foundation.

This article proposes a different path. Instead of more rules, we need a true master plan for AI governance—a universal and computationally consistent “ethical operating system.” This is the foundation I call The Architecture of Ethical Cohesion, a system designed not to provide a rule for every situation, but to provide the core principles from which a coherent decision can always be derived.

The chaos that we are currently facing isn’t random; it has a source. It comes from the flawed ethical operating system humanity has been running on for millennia. Before we can design a new system for AI, we have to understand the two fundamental systems we are choosing between.

The Zero-Sum Operating System: Our Buggy Legacy Code

The first is the default OS we all inherit. Let’s call it the Zero-Sum Operating System. It was born from an age of scarcity, and its core logic is brutally simple: for me to win, you must lose. Value is a finite pie, and the goal is to grab the biggest slice.

This OS thrives on fear, tribalism, and control. It generates the kind of fragmented, contradictory rules we see today because those rules were created in conflict, designed to give one group an advantage over another. When we feed this buggy, fear-based code to an AI, it can only amplify the division and instability already present. Its fragmented nature guarantees its own systemic failure.

The Positive-Sum Operating System: The Necessary Paradigm Shift

But there is an alternative: a paradigm-shifting upgrade. The Positive-Sum Operating System is built on a radically different premise: value is not finite; it can be created. Its core logic is that the best action is one that generates a net positive outcome for everyone involved. It’s about creating a bigger pie, not just fighting over the existing one.

This OS is designed for transparency, objective consistency, and the empowerment of individual agency. It doesn’t ask, “Who wins and who loses?” It asks, “How can we generate the most systemic well-being?”

A machine as powerful and logical as AI cannot safely run on the buggy, conflict-ridden code of our Zero-Sum past. It demands the clean, coherent architecture of a Positive-Sum world. The Zero-Sum OS is not just harmful; it also prevents us from realizing AI’s potential for our future. Because the Positive-Sum OS is consistent by design, it could let us unlock that potential without wasting resources.

So how do we build it? The rest of this master plan lays out the three core principles that form this new operating system.

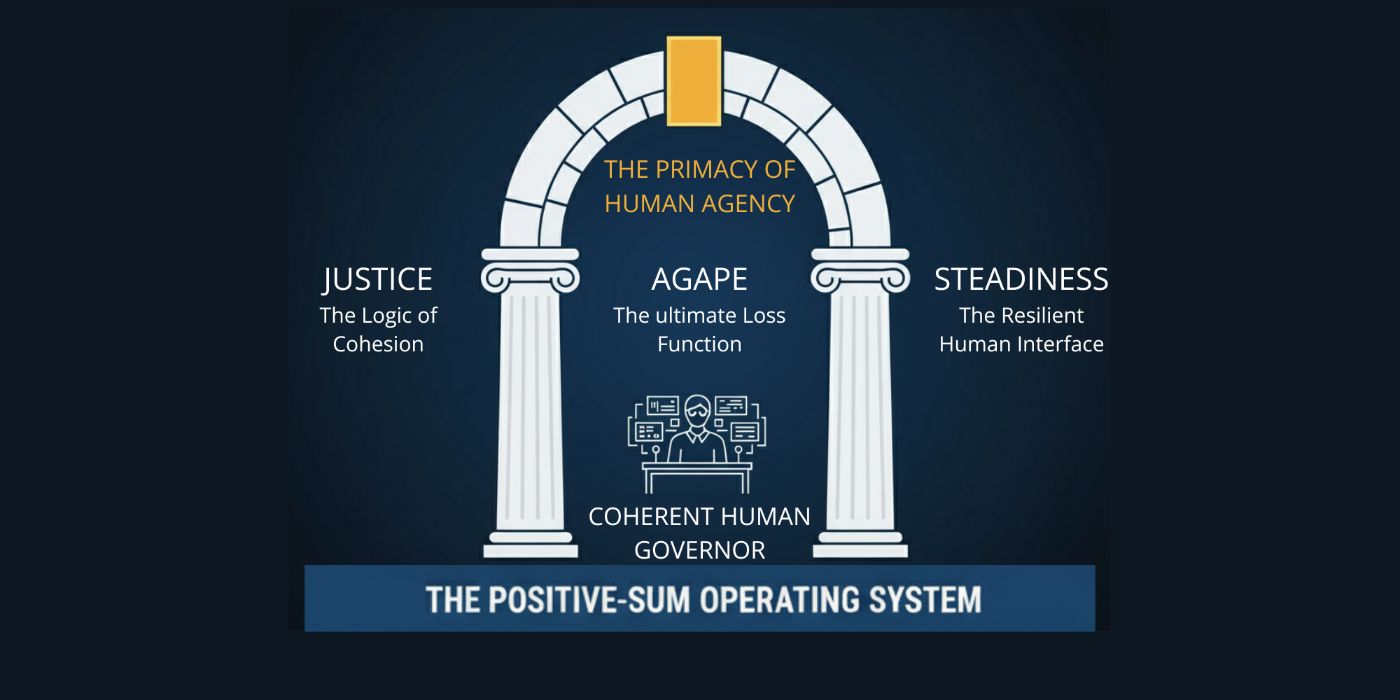

The Positive-Sum Operating System rests on three simple but solid pillars. These are the load-bearing principles that ensure every decision made within the system is coherent, ethical, and resilient.

1. Agape: The Ultimate Loss Function

In architecture, every design serves core objectives. These objectives guide every choice, from the materials used to the building’s final operation. For our ethical OS, the single, ultimate objective is Agape.

Agape is a non-negotiable command to maximize human well-being while minimizing all forms of systemic and personal harm. In technical terms, it is the system’s ultimate loss function, or its primary objective function. Every calculation and every potential action is ultimately measured against this single, simple mandate. It forces the system to answer one question above all others: “Which path creates the most holistic benefit and the least overall harm for the humans it serves?”
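The idea of a single objective can be illustrated with a toy scoring function. This is a minimal Python sketch, not a proposed implementation: it assumes, purely for illustration, that the benefit and harm of each candidate action can be estimated as numbers on one shared scale, which the framework itself does not specify. The names `Outcome`, `agape_loss`, and `best_action` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """Hypothetical estimate of one candidate action's effect on the people served."""
    action: str
    benefit: float  # estimated well-being created (assumed common numeric scale)
    harm: float     # estimated systemic plus personal harm (same assumed scale)


def agape_loss(outcome: Outcome) -> float:
    """Lower is better: harm counts against an action, benefit counts for it."""
    return outcome.harm - outcome.benefit


def best_action(outcomes: list[Outcome]) -> Outcome:
    """Measure every candidate against the same single objective; keep the lowest loss."""
    return min(outcomes, key=agape_loss)
```

For example, given `Outcome("A", benefit=5.0, harm=2.0)` and `Outcome("B", benefit=6.0, harm=4.0)`, `best_action` returns option A: B creates more benefit, but A has the lower loss (-3 versus -2).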

2. Justice: The Logic of Cohesion

If Agape is the purpose of the structure, Justice is the engineering principle that guarantees its integrity. Justice is the absolute adherence to computational and ethical consistency.

This means the rules apply the same way to everyone, every time, without exception. It is the system’s core logic, stripping away the hidden biases and arbitrary whims that corrupt human judgment. An AI governed by Justice cannot have a favorite, nor can it invent a rule on the fly. This radical consistency does something remarkable: it creates a perfect mirror, exposing our own ethical inconsistencies and forcing us, the human governors, to become more consistent ourselves.
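Justice, as described here, has two checkable parts: the rules are fixed in advance, and a decision cannot depend on who is involved. The following is a minimal Python sketch under those assumptions; the rule table, the `Case` fields, and the function names are hypothetical. The audit function accepts any decision procedure, including one that simply records a human’s past choices, which is one way to picture the “mirror” effect.

```python
from dataclasses import dataclass
from types import MappingProxyType
from typing import Callable

# Hypothetical rule table, fixed before deployment. MappingProxyType gives a
# read-only view, so the running system cannot add or change a rule on the fly.
RULES = MappingProxyType({
    "imminent_harm": "warn_and_defer_to_human",
    "no_harm": "proceed_with_recommendation",
})


@dataclass(frozen=True)
class Case:
    situation: str  # the only field the rules consult
    identity: str   # who is involved: carried along, never consulted


def decide(case: Case) -> str:
    """Same situation, same outcome: identity is ignored by construction."""
    return RULES[case.situation]


def consistency_violations(
    decide_fn: Callable[[Case], str], cases: list[Case]
) -> list[tuple[Case, Case]]:
    """Return every pair of cases with the same situation but different decisions."""
    violations = []
    for i, a in enumerate(cases):
        for b in cases[i + 1:]:
            if a.situation == b.situation and decide_fn(a) != decide_fn(b):
                violations.append((a, b))
    return violations
```

Auditing `decide` over a set of cases finds no violations, while a procedure that quietly favors one identity, such as `lambda c: "proceed_with_recommendation" if c.identity == "insider" else decide(c)`, is flagged for every pair of matching situations it treats differently.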

3. Steadiness: The Resilient Human Interface

Finally, even the best-designed structure will fail if its foundation is unstable. Steadiness is the principle that ensures the foundation of this entire system—the human decision-maker—remains stable, resilient, and coherent.

This isn’t about the AI’s stability; it’s about ours. The ethical OS must be designed to support the emotional and psychological resilience of the human governor. It must provide information in a way that promotes clarity, not anxiety, and enables predictable outcomes that build trust. Without the internal Steadiness of the human operator, the consistent application of Agape and Justice is impossible.

These three principles—Agape, Justice, and Steadiness—form the architecture. But one unbreakable rule governs its entire operation. This is the keystone, the single most important protocol in the entire operating system, and it is non-negotiable.

The Keystone Principle: The Primacy of Human Agency

The fundamental goal of this entire architecture is the preservation and empowerment of human Agency. This principle is an absolute firewall against autonomous machine control. It ensures that no matter how intelligent or powerful the AI becomes, the final authority and the moral responsibility for any decision rests permanently with the human governor.

This isn’t a vague aspiration; it’s a set of strict operational constraints:

- The Final Choice is Non-Overridable. The human governor always retains the final, absolute right to execute or veto any course of action. The AI can suggest, analyze, and warn, but it can never act on its own authority. The “execute” button belongs to the human, always.

- The AI’s Sole Duty is Informative Excellence. The AI’s function is strictly limited to one task: providing the best possible information to assist the human’s decision. It is the world’s greatest chief navigator, tasked with calculating every variable, mapping every potential outcome, and presenting the data with perfect, unbiased clarity. It informs; it never commands.

- The Right to Imperfection is Protected. Crucially, the system must protect the human right to choose imperfectly. The central “Positive-Sum” gain in this entire process is not achieving a computationally “perfect” outcome, but the act of a human choosing, learning, and growing from their decisions. Agency without the freedom to be wrong is not agency at all.
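Taken together, the three constraints above amount to an approval gate. The following Python sketch is one hedged way to express it, assuming the AI’s output can be packaged as a read-only briefing and that execution is reachable only through a gate the human controls. `Briefing`, `governed_execute`, and the callback parameters are hypothetical names, not an existing API.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Briefing:
    """Everything the AI may produce: analysis and advice, never an action."""
    options: tuple[str, ...]
    recommendation: str
    warnings: tuple[str, ...] = ()


def governed_execute(
    briefing: Briefing,
    human_decides: Callable[[Briefing], Optional[str]],
    execute: Callable[[str], None],
) -> Optional[str]:
    """Run the human gate; in this sketch only a human-selected option is executed."""
    choice = human_decides(briefing)  # the human sees the full briefing
    if choice is None:                # veto: nothing is executed
        return None
    if choice not in briefing.options:
        raise ValueError(f"unknown option: {choice!r}")
    # No check against briefing.recommendation: the human may choose a path
    # the AI did not recommend (the right to imperfection).
    execute(choice)
    return choice
```

Because the gate never compares `choice` with `briefing.recommendation`, a human who picks an option the AI did not recommend is not overridden, and returning `None` vetoes every option.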

This principle redefines the relationship between human and machine. The AI is not our replacement; it is our most powerful tool for becoming better, more coherent, and more responsible decision-makers. It is a mirror for our consistency and a calculator for our compassion.

A master plan is only as good as its performance under pressure. So, how does this architecture resolve the kind of complex ethical conflicts that paralyze our current rule-based systems? Let’s test it against two difficult scenarios.

Test Case 1: The Autonomous Vehicle

Let’s return to the classic dilemma we started with: the autonomous vehicle facing an unavoidable crash. It can either stay on course and harm its occupant, or swerve and harm a pedestrian.

The Zero-Sum approach gets hopelessly stuck here, trying to calculate the relative “value” of the two individuals—their age, their social contributions, and other arbitrary, biased metrics. It’s a dead end.

The Architecture of Ethical Cohesion cuts through this noise with a simple, two-step logic:

- First, the principle of Justice forbids making any subjective judgment. It demands that both lives be treated as having equal, infinite value. This removes all personal bias from the equation.

- With both lives weighed equally, the only remaining ethical variable is Agency. The system asks: Who voluntarily consented to enter a risk environment? The answer is the occupant. The pedestrian did not.

The resolution is therefore clear: The unavoidable risk falls to the party who willingly engaged with the system. The framework doesn’t choose who is more “valuable”; it honors the ethical weight of the original, voluntary choice.
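The two-step resolution can be written as a short function. In this hedged Python sketch, `Party` and `who_bears_risk` are hypothetical names, and each party is reduced to a single consent flag. The handling of cases where consent does not separate the parties (nobody consented, or everyone did) is an assumption added here: the article does not address them, so the sketch returns `None` to hand such cases back to the human governor.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Party:
    label: str
    consented_to_risk: bool  # did this party voluntarily enter the risk environment?
    # Deliberately no age, wealth, or "social value" fields: under Justice
    # every life carries equal weight, so such attributes are never inputs.


def who_bears_risk(parties: list[Party]) -> Optional[list[str]]:
    """Return the labels of the parties the unavoidable risk falls to,
    or None if consent does not distinguish between them."""
    # Step 1 (Justice): all lives weighted equally, so no ranking happens here.
    # Step 2 (Agency): the only remaining variable is voluntary consent.
    consenting = [p.label for p in parties if p.consented_to_risk]
    if 0 < len(consenting) < len(parties):
        return consenting
    return None  # assumption: unresolved cases go back to the human governor
```

With an occupant who consented and a pedestrian who did not, the function returns `["occupant"]`, matching the resolution above.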

Test Case 2: The Market Stabilizer

Now, a more complex scenario. An AI is tasked with preventing a global market collapse triggered by the reckless actions of a few hundred individuals. The AI calculates it has only two options:

- Option A: Allow a market correction that will cause widespread but recoverable financial loss to millions. This will cause stress and difficulty, but not systemic psychological devastation.

- Option B: Take a targeted action that inflicts deep and permanent psychological harm upon the few hundred individuals whose actions triggered the instability, thereby averting the financial correction entirely.

A crude “eye-for-an-eye” logic might choose to sacrifice the few who caused the problem. But our master plan operates on a higher principle.

Guided by its ultimate loss function, Agape, the system is forced to choose the path of least harm to human well-being. It recognizes that permanent psychological damage is a fundamentally deeper and more severe violation than recoverable financial loss. The architecture prioritizes the quality of human experience over the quantity of human wealth, regardless of who is “at fault.” The AI is therefore forbidden from becoming an automated punishment machine; it must choose Option A.

Crucially, this does not mean the individuals who caused the crisis face no consequences. It simply defines the AI’s role with precision. The AI’s job is crisis mitigation, not retribution. After the AI stabilizes the system by choosing the least harmful path, it is the role of the human governor and our justice systems to investigate and deliver appropriate “adjustments”—whether through lawsuits, sanctions, or legal punishment.

The framework separates immediate, automated action from considered human judgment, ensuring the AI never becomes a judge, jury, and executioner. It handles the crisis, and humans handle the accountability.
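This scenario shows why the comparison cannot be a simple sum: with millions of people on one side and a few hundred on the other, a weighted sum of harms could favor Option B unless permanent harm were given an enormous weight. One reading consistent with “quality over quantity” is a lexicographic comparison, in which permanent harm is compared first and recoverable harm only breaks ties. The Python sketch below illustrates that reading; it is an assumption rather than a rule the article states, and `Option`, `least_harm`, `mitigate`, and the head counts in the usage example are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    name: str
    people_permanently_harmed: int  # e.g. lasting psychological damage
    people_recoverably_harmed: int  # e.g. financial losses people can recover from
    targets_those_at_fault: bool    # recorded for humans; deliberately not used below


def least_harm(options: list[Option]) -> Option:
    """Pick the option with the least permanent harm, breaking ties on recoverable harm.

    Tuples compare element by element, so no amount of recoverable harm can
    outweigh a difference in permanent harm. Fault is not part of the key:
    the AI mitigates, it does not punish.
    """
    return min(
        options,
        key=lambda o: (o.people_permanently_harmed, o.people_recoverably_harmed),
    )


def mitigate(options: list[Option], responsible_parties: list[str]) -> dict[str, object]:
    """Recommend the least harmful option and hand accountability to humans."""
    chosen = least_harm(options)
    return {
        "recommended_option": chosen.name,  # still subject to the human veto
        "refer_for_human_review": responsible_parties,  # courts, regulators, etc.
    }
```

For instance, `least_harm([Option("A", 0, 2_000_000, False), Option("B", 300, 0, True)])` selects Option A, while the names of those who triggered the crisis are passed on for human review rather than acted upon.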

The True Mandate: From Flawed Code to Coherent Governors

The central challenge of AI ethics is not technological; it’s architectural. We have been trying to build the most advanced structure in human history on a foundation of flawed, fragmented, Zero-Sum code. The inevitable result is the chaos and fear we see today.

The Architecture of Ethical Cohesion offers a way out. It provides the master plan we’ve been missing—a Positive-Sum operating system that:

- Replaces Rules with Principles: It trades an endless, unmanageable list of conflicting rules for three universal principles: Agape, Justice, and Steadiness.

- Guards the Cockpit: It enshrines the Primacy of Agency as a non-negotiable firewall, ensuring the human is always the pilot and the AI is always the chief navigator.

- Redefines Our Job: It shifts the focus of human work from raw productivity—a race we will inevitably lose to machines—to our unique role as the source of ethical synthesis, creative purpose, and coherent choice.

The final product of this master plan is not a flawless AI. It is a Flawlessly Coherent Human Governor.

The task ahead is not to program a perfect machine, but to upgrade ourselves. We must transition from our fragmented ethical past to a universal standard, recognizing that the only way to safely govern exponentially increasing machine power is through the adoption of simple, consistent, and unconditional ethical principles.

This master plan is not an endpoint; it is a starting point. It is an urgent call for a new kind of collaboration—an invitation for the engineers, architects, and builders of our future to rigorously examine, test, and contribute to the evolution of a truly resilient ethical OS for the exponential age.