Disclaimer: No AI features were used to write this column.

Despite spending my entire career in software technology, and even directing a program that used machine learning to help manage large systems, I wouldn’t be able to tell a neural net from a hairnet. Like many outside the software field, my understanding of AI is very limited: I acknowledge that it exists and somewhat understand what it is capable of, but I have absolutely no idea how it works. AI does not follow the prescriptive, algorithmic flow of traditional software. Older forms of AI used machine learning to recognize patterns and make recommendations. Newer AI systems, built on so-called Large Language Models (LLMs), are often described as ‘agentic’, which simply means that they can operate autonomously. Rather than just analyzing data and making recommendations for people to act on, these newer systems can plan, execute, and adapt to changing situations they encounter along the way. Where a traditional machine learning system can detect a potential problem and create a ticket for a support engineer to investigate and resolve, a modern AI system can independently verify the problem, take steps to correct it, and document the outcome in a ticket, without any need for human interaction.

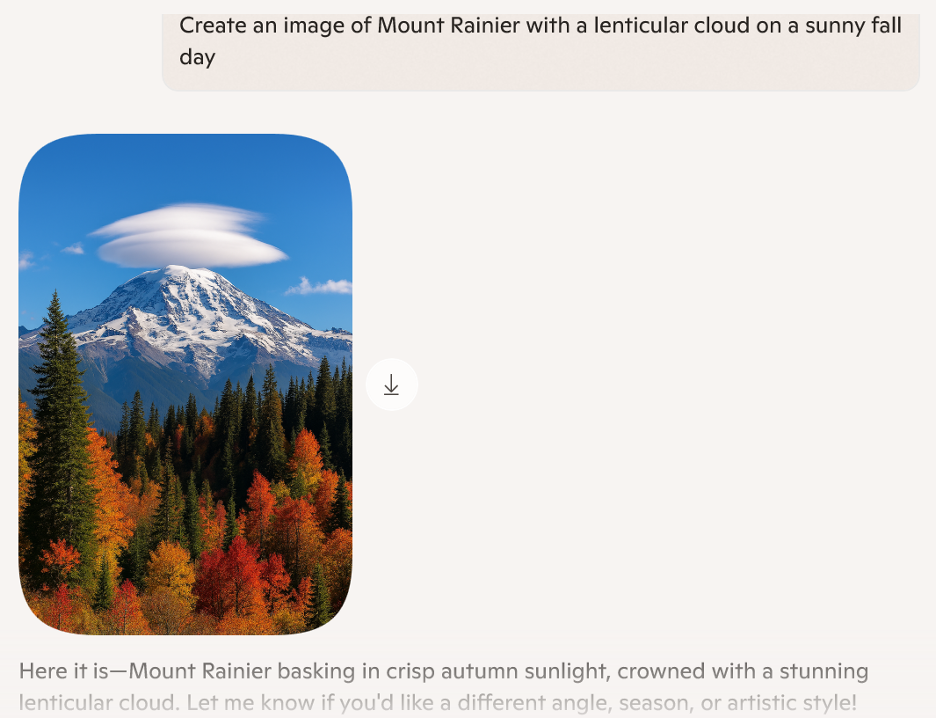

I recently upgraded my old PC to a new AI-compatible model and decided to try out some AI features to see what all the fuss was about. Creating images is a piece of cake, although the results are not always accurate. Here is a simple view of Mount Rainier from an extremely simple prompt. I’m sure a more refined prompt would yield a more refined result.

I also asked the AI to summarize a novel I had recently read.

Here the results were truly impressive. The AI demonstrated a deep knowledge of the subject and when pressed to elaborate on themes in the novel, provided well-reasoned and insightful responses.

However, from then on things went downhill quite quickly. The ads that aired during the Mariners’ ALCS games against Toronto touted how AI had compiled statistics on things like jumping the foul line on the way to the plate or tapping the bat on home plate. Inspired by them, I decided to ask a question about sports statistics. I’ve been a fan of Arsenal in the English Premier League for over 50 years, so indulge me.

Impressive – right? I wasn’t even surprised that it coped with the typo in my prompt. Except that the actual result of the match against Arsenal was 4-0, and Martin Odegaard was injured and not even in the squad. Oh, and Newcastle actually beat Benfica 3-0. The AI also provided a table summarizing all results:

Except none of this is right. Every result is wrong. Okay, let’s try again.

I guess I should have asked for the “correct” results the first time. But the new results are no better. Just like Odegaard, Gabriel Jesus is out injured. There was no penalty for Arsenal. Saka didn’t score. Alvaro Morata does not play for Atletico Madrid, and Angel Di Maria does not play for Benfica either. Only one of the six matches was reported with the correct score.

OK – “verified and corrected.” That’s better. This time all scores were accurate, but many details were still missing. What’s impressive here, though, is that the AI clearly understood and acted on my natural language feedback, and echoed that feedback in its responses.

I then asked why it was proving so difficult to get this question right, and the answer was quite enlightening.

For the questions advertised during the Mariners games, the AI models would have been trained with the exact data needed to answer those questions. But even then, did anyone actually check the outputs?

In conclusion, while the capabilities of modern AI systems are certainly impressive, they are not infallible, and because the results are presented with the utmost confidence, it can be difficult to tell when something is wrong. As Ronald Reagan once said, “trust but verify.” The responsibility to verify the results of AI queries lies with you, the user.

Niall McShane lives in Edmonds, is an occasional contributor to Scene in Edmonds, and is a retired IBM executive with experience leading software development and customer service organizations.