Table of Links

Abstract and 1. Introduction

2. Deep Reinforcement Learning

3. Similar Work

3.1 Option Hedging with Deep Reinforcement Learning

3.2 Hyperparameter Analysis

4. Methodology

4.1 General DRL Agent Setup

4.2 Hyperparameter Experiments

4.3 Optimization of Market Calibrated DRL Agents

5. Results

5.1 Hyperparameter Analysis

5.2 Market Calibrated DRL with Weekly Re-Training

6. Conclusions

Appendix

References

3 Similar Work

3.1 Option Hedging with Deep Reinforcement Learning

The first to apply DRL to option hedging are Du et al. (2020) and Giurca and Borovkova (2021), who each use a DQN method. Cao et al. (2021) extend this literature by using the DDPG algorithm, which allows for continuous action spaces. DDPG is also used by Assa et al. (2021), Xu and Dai (2022), Fathi and Hientzsch (2023), and Pickard et al. (2024). Cao et al. (2023) use distributional DDPG, which uses quantile regression to approximate the distribution of rewards. Across the literature, the current asset price, the time-to-maturity, and the current holding are consistently included in the DRL agent state-space. However, there is disagreement concerning the inclusion of the BS Delta in the state-space. Vittori et al. (2020), Giurca and Borovkova (2021), Xu and Dai (2022), Fathi and Hientzsch (2023), and Zheng et al. (2023) include the BS Delta, while several articles argue that the BS Delta can be deduced from the option and stock prices, so that its inclusion only serves to unnecessarily augment the state (Cao et al. (2021), Kolm and Ritter (2019), Du et al. (2020)). Moreover, in Pickard et al. (2024) the DRL agent hedges an American option, and including the BS Delta, which is derived for a European option, is therefore counterproductive.
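To make the state-space discussion concrete, the following is a minimal Python sketch of an observation vector built from the three consistently used features, with the BS Delta as an optional fourth entry. It assumes a European put (so the BS Delta is N(d1) - 1), and the function names and keyword parameters (`build_state`, `include_delta`, `K`, `r`, `sigma`) are hypothetical, not taken from any of the cited studies.

```python
import math


def norm_cdf(x: float) -> float:
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def bs_put_delta(S: float, K: float, tau: float, r: float, sigma: float) -> float:
    """Black-Scholes delta of a European put, N(d1) - 1 (assumed European payoff)."""
    if tau <= 0.0:
        # At expiry the delta is -1 in the money and 0 otherwise.
        return -1.0 if S < K else 0.0
    d1 = (math.log(S / K) + (r + 0.5 * sigma**2) * tau) / (sigma * math.sqrt(tau))
    return norm_cdf(d1) - 1.0


def build_state(S, tau, holding, *, include_delta=False, K=None, r=0.0, sigma=None):
    """Assemble a hypothetical hedging-agent observation.

    The first three entries (price, time-to-maturity, current holding) appear
    consistently in the literature; the BS Delta entry is optional because
    studies disagree on whether it should be included.
    """
    state = [S, tau, holding]
    if include_delta:
        state.append(bs_put_delta(S, K, tau, r, sigma))
    return state
```

For example, `build_state(100.0, 0.25, -0.4)` yields the three-feature state, while passing `include_delta=True` with a strike, rate, and volatility appends a fourth entry. Because the appended value is a fixed function of the price, strike, time-to-maturity, and volatility, the sketch also shows why some authors regard it as redundant.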

The acquisition of underlying asset price data is an integral part of training a DRL option hedger, as the current price is required in both the state-space and the reward. The option price is also required for the reward function. In the DRL hedging literature, almost all studies include at least one experiment in which the data is generated through Monte Carlo (MC) simulations of a GBM process. As GBM is consistent with the BS model, studies that use GBM use the BS option price in the reward function (Pickard and Lawryshyn 2023). However, recall that Pickard et al. (2024) hedge American put options, and they therefore compute the option price at each step by interpolating an American put option binomial tree. The majority of studies in the DRL option hedging literature also simulate training paths using a stochastic volatility model such as the SABR (Stochastic Alpha, Beta, Rho) (Hagan et al. 2002) or Heston (1993) models. Note that when the option is European, the option price may still be computed at each time step with the BS model, simply by substituting the updated volatility into the closed-form expression. However, in Pickard et al. (2024) the option is American, and the option price is therefore computed using a Chebyshev interpolation method. This Chebyshev method, of which Glau et al. (2018) give a thorough overview, is described in the next section. A final note on training data is that Pickard et al. (2024) is the only study to calibrate the stochastic volatility model to empirical option data. This calibration process is described in the next section, and the final experiment of this paper examines the difference between one-time and weekly re-calibration to new market data.
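The GBM-based training setup described above can be sketched as follows: simulate an MC path of GBM and evaluate the BS price of a European put at each step, which is the quantity a reward function would consume. This is a minimal, standard-library-only sketch; the drift `mu`, the step count, the seed, and all function names are illustrative assumptions, and it does not reproduce the American-option binomial-tree or Chebyshev pricing used by Pickard et al. (2024). For a European option under a stochastic volatility model, the same `bs_put_price` call could be reused by passing the current simulated volatility as `sigma`, as the text notes.

```python
import math
import random


def norm_cdf(x: float) -> float:
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def bs_put_price(S: float, K: float, tau: float, r: float, sigma: float) -> float:
    """Closed-form Black-Scholes price of a European put with time-to-maturity tau."""
    if tau <= 0.0:
        return max(K - S, 0.0)  # payoff at expiry
    sqrt_tau = math.sqrt(tau)
    d1 = (math.log(S / K) + (r + 0.5 * sigma**2) * tau) / (sigma * sqrt_tau)
    d2 = d1 - sigma * sqrt_tau
    return K * math.exp(-r * tau) * norm_cdf(-d2) - S * norm_cdf(-d1)


def simulate_gbm_path(S0, mu, sigma, T, n_steps, rng):
    """One MC path of GBM using the exact log-normal update over each step."""
    dt = T / n_steps
    path = [S0]
    for _ in range(n_steps):
        z = rng.gauss(0.0, 1.0)
        path.append(path[-1] * math.exp((mu - 0.5 * sigma**2) * dt + sigma * math.sqrt(dt) * z))
    return path


def put_prices_along_path(path, K, T, r, sigma):
    """BS put price at every point of a path spanning [0, T] in equal steps."""
    n_steps = len(path) - 1
    dt = T / n_steps
    # (n_steps - i) * dt is exactly 0.0 at the final point, so the last entry is the payoff.
    return [bs_put_price(S, K, (n_steps - i) * dt, r, sigma) for i, S in enumerate(path)]


rng = random.Random(42)  # seeded for reproducibility
path = simulate_gbm_path(S0=100.0, mu=0.05, sigma=0.2, T=0.25, n_steps=63, rng=rng)
prices = put_prices_along_path(path, K=100.0, T=0.25, r=0.05, sigma=0.2)
```

The exact log-normal step is used instead of an Euler step so that simulated prices stay positive regardless of the step size.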

Pickard and Lawryshyn (2023) note that studies generally test the trained DRL agent using MC paths from the same underlying process used in training, i.e., GBM or a stochastic volatility model. Such experiments yield consistent results: DRL agents trained with a transaction cost penalty outperform the BS Delta strategy when transaction costs are present (Pickard and Lawryshyn 2023). In some cases, the DRL agents are tested on data that does not match the training data. For example, Giurca and Borovkova (2021), Xiao et al. (2021), Zheng et al. (2023), and Mikkilä and Kanniainen (2023) each perform a sim-to-real test in which the simulation-trained DRL agent is tested on empirical data, and all but Giurca and Borovkova (2021) achieve desirable results.
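To illustrate why transaction costs matter for the BS Delta benchmark, here is a minimal sketch of the per-step P&L of a short European put that is re-hedged to the BS Delta at every step, with a proportional cost charged on each trade. The per-step quantity resembles a hedging reward signal, but the zero interest rate, the proportional `cost_rate`, the omission of a final liquidation cost, the example price path, and all function names are assumptions for illustration; the reward functions of the cited studies are not reproduced here.

```python
import math


def norm_cdf(x: float) -> float:
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def bs_put(S: float, K: float, tau: float, sigma: float) -> tuple[float, float]:
    """(price, delta) of a European put under BS, assuming a zero interest rate."""
    if tau <= 0.0:
        return max(K - S, 0.0), (-1.0 if S < K else 0.0)
    sqrt_tau = math.sqrt(tau)
    d1 = (math.log(S / K) + 0.5 * sigma**2 * tau) / (sigma * sqrt_tau)
    d2 = d1 - sigma * sqrt_tau
    return K * norm_cdf(-d2) - S * norm_cdf(-d1), norm_cdf(d1) - 1.0


def delta_hedge_rewards(path, K, T, sigma, cost_rate):
    """Per-step P&L of a short put hedged by holding `delta` shares.

    The short put is worth -P; holding h = delta shares makes the position
    delta-neutral. Each rebalance costs cost_rate * |shares traded| * price.
    """
    n_steps = len(path) - 1
    dt = T / n_steps
    holding = 0.0
    price, _ = bs_put(path[0], K, T, sigma)
    rewards = []
    for i in range(n_steps):
        S_now, S_next = path[i], path[i + 1]
        _, target = bs_put(S_now, K, (n_steps - i) * dt, sigma)
        cost = cost_rate * abs(target - holding) * S_now
        holding = target
        next_price, _ = bs_put(S_next, K, (n_steps - i - 1) * dt, sigma)
        # Stock gain minus the change in the put's value (we are short it), minus cost.
        rewards.append(holding * (S_next - S_now) - (next_price - price) - cost)
        price = next_price
    return rewards


path = [100.0, 101.5, 99.0, 98.2, 100.4]  # hypothetical observed price path
no_cost = delta_hedge_rewards(path, K=100.0, T=0.25, sigma=0.2, cost_rate=0.0)
with_cost = delta_hedge_rewards(path, K=100.0, T=0.25, sigma=0.2, cost_rate=0.001)
```

On a flat price path the hedger simply collects the put's time decay, so the cost-free rewards sum to the initial put price; a positive `cost_rate` lowers the total by the accumulated re-hedging costs.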

Pham et al. (2021) and Xu and Dai (2022) use only empirical data for both training and testing. Pham et al. (2021) show that their DRL agent achieves a profit higher than the market return when tested with empirical prices. Further, Xu and Dai (2022) train and test their DRL agent with empirical American option data, which is not seen elsewhere in the DRL hedging literature. However, Xu and Dai (2022) do not modify their DRL approach in any way to account for the differences between American and European options. As such, Pickard et al. (2024) are the first to train DRL agents that consider early exercise. Consistent with the literature, Pickard et al. (2024) first test agents on data generation processes that match training, i.e., agents trained with MC paths of a GBM process are tested on MC paths of a GBM process, and agents trained with MC paths of the modified SABR model are tested on MC paths of the modified SABR model. Additionally, given that the modified SABR model is calibrated to market prices, a final test examines DRL agent performance on realized market paths. The results indicate that the DRL agents outperform the BS Delta method across 80 options. As this paper aims to optimize the results of Pickard et al. (2024), their process is described much more thoroughly in the methodology section.

While the literature on the use of DRL for American option hedging is limited, DRL has been applied to other American option problems. Fathan and Delage (2021) use double DQN to solve an optimal stopping problem for Bermudan put options, which they describe as a proxy for American options. Ery and Michel (2021) apply DQN to an optimal stopping problem for an American option. While DRL solutions are limited to these two studies, further articles have used other RL techniques for American option applications such as pricing and exercising, and these articles are summarized in the review by Bloch (2023).
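The optimal stopping problem that these DQN approaches learn to solve can, in the simple GBM case, be solved exactly by backward induction on a lattice, and a binomial tree of this kind is also what Pickard et al. (2024) interpolate for American put prices. The following is a minimal Cox-Ross-Rubinstein (CRR) sketch; the CRR parameterization and the function name are assumptions, since the lattice details of the cited studies are not given in this section.

```python
import math


def american_put_crr(S0: float, K: float, T: float, r: float, sigma: float, n_steps: int) -> float:
    """American put price on a Cox-Ross-Rubinstein binomial tree.

    At every node the value is max(continuation, immediate exercise), i.e. the
    discrete-time optimal stopping problem solved by backward induction.
    """
    dt = T / n_steps
    u = math.exp(sigma * math.sqrt(dt))  # up factor
    d = 1.0 / u  # down factor
    disc = math.exp(-r * dt)
    p = (math.exp(r * dt) - d) / (u - d)  # risk-neutral probability of an up move
    # Option values at maturity; index j counts the number of up moves.
    values = [max(K - S0 * u**j * d**(n_steps - j), 0.0) for j in range(n_steps + 1)]
    for step in range(n_steps - 1, -1, -1):
        for j in range(step + 1):
            continuation = disc * (p * values[j + 1] + (1.0 - p) * values[j])
            exercise = K - S0 * u**j * d**(step - j)
            # values[j + 1] still holds the later step's value when read, so updating in place is safe.
            values[j] = max(continuation, exercise)
    return values[0]


price = american_put_crr(S0=36.0, K=40.0, T=1.0, r=0.06, sigma=0.2, n_steps=500)
```

Replacing the `max` with the continuation value alone gives the European price on the same tree, which is a quick way to see the early-exercise premium that a European-derived BS Delta ignores.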

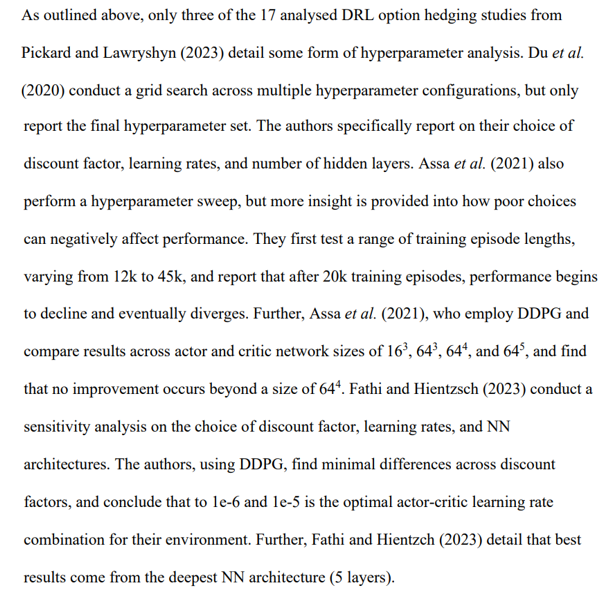

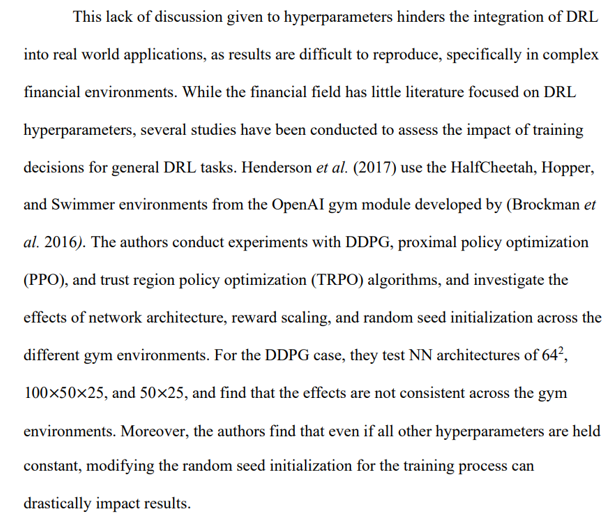

3.2 Hyperparameter Analysis

Islam et al. (2017) follow up on the work of Henderson et al. (2017) by examining the performance of the DDPG and TRPO methods in the HalfCheetah and Hopper gym environments. They first note that it is difficult to reproduce the results of Henderson et al. (2017), even with similar hyperparameter configurations. Next, Islam et al. (2017) add to the literature an analysis of the DDPG actor and critic learning rates. They conclude that the optimal learning rates differ between the Hopper and HalfCheetah environments, and note that it is difficult to gain a true understanding of optimal learning rate choices while all other parameters are held fixed. Andrychowicz et al. (2020) perform a thorough hyperparameter sensitivity analysis for multiple on-policy DRL methods, but do not consider off-policy methods such as DDPG. Overall, Andrychowicz et al. (2020) conclude that performance is highly dependent on hyperparameter tuning, and that this dependence limits the pace of research advances. Several other studies find similar results when testing various DRL methods in different environments, whether through a manual search of hyperparameters or a predefined hyperparameter optimization algorithm (Ashraf et al. (2021), Kiran and Ozyildirim (2022), Eimer et al. (2022)).

As such, it is evident that hyperparameter configurations can drastically affect DRL agent results. However, much of the literature on DRL hyperparameter choices uses generic, pre-constructed environments for analysis rather than addressing real-world problems. This study therefore aims to contribute to the DRL hedging literature, and to the DRL space as a whole, by thoroughly investigating how hyperparameter choices affect the realistic problem of option hedging in a highly uncertain financial landscape.

:::info

Authors:

(1) Reilly Pickard, Department of Mechanical and Industrial Engineering, University of Toronto, Toronto, Canada;

(2) F. Wredenhagen, Ernst & Young LLP, Toronto, ON, M5H 0B3, Canada;

(3) Y. Lawryshyn, Department of Chemical Engineering, University of Toronto, Toronto, Canada.

:::

:::info

This paper is available on arXiv under CC BY-NC-ND 4.0 Deed (Attribution-Noncommercial-Noderivs 4.0 International) license.

:::