Table of Links

Abstract and 1 Introduction

Related works

Problem setting

Methodology

4.1. Decision boundary-aware distillation

4.2. Knowledge consolidation

Experimental results and 5.1. Experiment Setup

5.2. Comparison with SOTA methods

5.3. Ablation study

Conclusion and future work and References

Supplementary Material

- Details of the theoretical analysis on KCEMA mechanism in IIL

- Algorithm overview

- Dataset details

- Implementation details

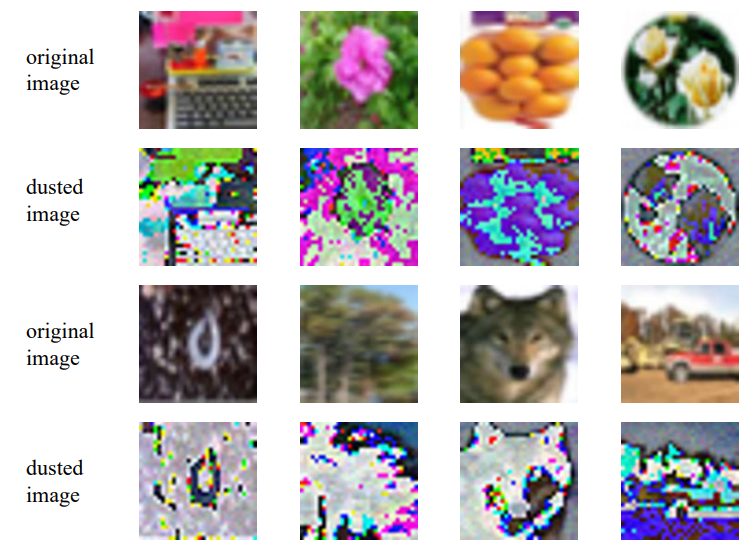

- Visualization of dusted input images

- More experimental results

10. Implementation details

Selection of compared methods. As few existing methods have been proposed for the IIL setting, we reproduce several classic and SOTA CIL methods from their original code or papers with minimal revision.

LwF [12] is one of the earliest deep-learning-based incremental learning algorithms; it proposes using knowledge distillation to resist forgetting. Given the significance of this method, many CIL methods still compare against it as a baseline.

iCarl [22] is based on the LwF method and proposes using old exemplars for label-level knowledge distillation.

PODNet [4] distills old knowledge at the feature level, unlike the former two methods.
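The difference between label-level and feature-level distillation described above can be illustrated with a minimal sketch. It is not the loss of any of the cited methods: the temperature value, the function names, and the plain squared-distance feature loss are assumptions chosen for illustration (PODNet's actual loss operates on pooled feature maps and is more involved).

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax with an optional temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def logit_distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """Label-level distillation: cross-entropy between the softened
    teacher distribution and the softened student distribution.
    The temperature of 2.0 is an assumed value."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    return -sum(t * math.log(s) for t, s in zip(p_teacher, p_student))

def feature_distillation_loss(student_feat, teacher_feat):
    """Feature-level distillation, simplified to a mean squared
    distance between two intermediate feature vectors."""
    squared = [(s - t) ** 2 for s, t in zip(student_feat, teacher_feat)]
    return sum(squared) / len(squared)

if __name__ == "__main__":
    teacher = [2.0, 0.5, -1.0]
    print(logit_distillation_loss([1.5, 0.7, -0.8], teacher))
    print(feature_distillation_loss([0.1, 0.4], [0.0, 0.5]))
```

The label-level loss constrains only the output distribution, whereas the feature-level loss constrains an intermediate representation directly.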

Der [31] expands the network dynamically and attains the best CIL results when the task id is given. Expanding the neural network is powerful for learning new knowledge while retaining old knowledge. Although adding new parameters is limited in the new IIL setting, we are interested in how a dynamic-network method performs in it.

OnPro [29] uses online prototypes to enhance the existing boundaries with only the data visible at the current time step, which satisfies our setting without old data. Their motivation of making the learned features more generalizable to new tasks is also consistent with our aim of continually promoting the model using only new data.

Online learning [6] can be applied to hybrid-incremental learning and can therefore be implemented directly in the new IIL setting. It proposes a modified cross-distillation that smooths student predictions with teacher predictions to retain old knowledge. This differs from our method, which alters the learning target by fusing the annotated label with the teacher predictions.
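The contrast between the two strategies can be sketched as follows. This is an illustration under stated assumptions, not the exact formulation of either method: both mixes are written as simple convex combinations, and the weights `alpha` and `beta` as well as all function names are hypothetical. The actual fusion rule of our method is the one defined in Section 4.1.

```python
import math

def fuse_target(one_hot_label, teacher_probs, alpha=0.5):
    """Alter the learning target: mix the annotated label with the
    teacher prediction. alpha is an assumed mixing weight."""
    return [alpha * y + (1.0 - alpha) * p
            for y, p in zip(one_hot_label, teacher_probs)]

def smooth_prediction(student_probs, teacher_probs, beta=0.5):
    """Alter the prediction: smooth the student output with the
    teacher output. beta is an assumed mixing weight."""
    return [beta * s + (1.0 - beta) * t
            for s, t in zip(student_probs, teacher_probs)]

def cross_entropy(target, probs, eps=1e-12):
    """Cross-entropy between a target distribution and predicted
    probabilities; eps guards against log(0)."""
    return -sum(t * math.log(p + eps) for t, p in zip(target, probs))

if __name__ == "__main__":
    label = [0.0, 1.0, 0.0]      # annotated one-hot label
    teacher = [0.2, 0.5, 0.3]    # teacher (old model) prediction
    student = [0.6, 0.3, 0.1]    # student (new model) prediction

    # Target-side fusion: the student is trained toward a fused target.
    loss_fused_target = cross_entropy(fuse_target(label, teacher), student)
    # Prediction-side smoothing: the label is unchanged, the output is mixed.
    loss_smoothed_pred = cross_entropy(label, smooth_prediction(student, teacher))
    print(loss_fused_target, loss_smoothed_pred)
```

In the first case the teacher changes what the student is asked to predict; in the second it changes the prediction that is scored against the unchanged label.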

Besides the above CIL methods, ISL [13] is one of the few IIL methods that can be directly implemented in our IIL setting. Unlike our setting, which aims to address all newly acquired instances, ISL is proposed for incremental sub-population learning. Hence, we not only apply ISL in our setting for comparison but also evaluate our method following their setting.

Our extensive experiments reveal that neither existing CIL methods nor IIL methods can tame the proposed IIL problem. Existing methods, especially the CIL methods, primarily concentrate on mitigating catastrophic forgetting and show limited effectiveness in learning from new data. In real-world applications, enhancing the model with additional instances and achieving more generalizable features is crucial. This underscores the importance and relevance of our proposed IIL setting.

Details for reproduction of compared methods. To reproduce existing rehearsal-based methods, including iCarl [22], PODNet [4], Der [31], and OnPro [29], we still set a memory of 20 exemplars per class, although no old data is available in the new IIL setting. For all compared methods, we trained a dedicated base model where necessary. For example, some of the methods use more comprehensive data augmentation and reach a higher base performance than others. We kept their data augmentation when reproducing these methods, even in the IIL learning phase.
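A memory with a fixed per-class quota, as used above for the rehearsal-based methods, can be sketched as below. The class name and the first-come selection rule are assumptions for illustration; the reproduced methods each use their own exemplar selection strategy (for example, herding in iCarl), which this sketch does not implement.

```python
from collections import defaultdict

class ExemplarMemory:
    """Class-balanced exemplar buffer with a fixed per-class quota.
    Selection is first-come, an assumed simplification."""

    def __init__(self, per_class=20):
        self.per_class = per_class
        self._store = defaultdict(list)

    def add(self, sample, label):
        """Store the sample if the class quota is not yet reached.
        Returns True when the sample was kept."""
        bucket = self._store[label]
        if len(bucket) < self.per_class:
            bucket.append(sample)
            return True
        return False

    def samples(self):
        """Return all stored (sample, label) pairs."""
        return [(s, label) for label, bucket in self._store.items()
                for s in bucket]

    def __len__(self):
        return sum(len(bucket) for bucket in self._store.values())

if __name__ == "__main__":
    memory = ExemplarMemory(per_class=20)
    for label in range(3):
        for index in range(30):
            memory.add(("sample", label, index), label)
    print(len(memory))
```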

For LwF [12], as there is no need to add a new classification head, we directly apply its training loss to the old classification head. iCarl [22] is implemented using its provided TensorFlow code. PODNet [4] and Der [31] are implemented based on their own PyTorch code. For online learning [6], we reproduce only the part related to learning from new instances of old classes in the first training phase. ISL [13] is also implemented based on its original code with the original learning rate settings, i.e., lr=0.05 for base training and lr=0.005 for the IIL learning phase. OnPro [29] uses quite strong data augmentation during training, which leads to a slightly lower base-model accuracy than other methods. As OnPro trains the base model for only one epoch in online training, which is not sufficient to achieve a strong base model, we change it to 60 epochs, as for the other methods. For the IIL learning phase, we keep the one-epoch setting because we found that training for more epochs does not lead to better performance. The learning rate of OnPro for training the base model is 5e-4 and decays by a factor of 0.1 at epochs 12, 30, and 50. In the IIL training phase, lr=5e-7, which is far smaller than ours (0.01).
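The OnPro base-training schedule described above can be written as a small step-decay function. This is a sketch under two assumptions that the text does not state: epochs are counted from zero, and each decay takes effect at the start of the milestone epoch. The function name `step_lr` is hypothetical.

```python
def step_lr(epoch, base_lr=5e-4, milestones=(12, 30, 50), gamma=0.1):
    """Learning rate at a given epoch for a multi-step decay schedule.
    Assumes zero-indexed epochs and decay applied from each milestone on."""
    passed = sum(1 for m in milestones if epoch >= m)
    return base_lr * gamma ** passed

if __name__ == "__main__":
    # Print the rate at the first epoch, each milestone, and the last epoch
    # of the 60-epoch base training.
    for epoch in (0, 12, 30, 50, 59):
        print(epoch, step_lr(epoch))
```

Under these assumptions, three decays bring the rate to 5e-4 × 0.1³ = 5e-7 after epoch 50, which is the same value used for the IIL training phase.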

:::info

Authors:

(1) Qiang Nie, Hong Kong University of Science and Technology (Guangzhou);

(2) Weifu Fu, Tencent Youtu Lab;

(3) Yuhuan Lin, Tencent Youtu Lab;

(4) Jialin Li, Tencent Youtu Lab;

(5) Yifeng Zhou, Tencent Youtu Lab;

(6) Yong Liu, Tencent Youtu Lab;

(7) Qiang Nie, Hong Kong University of Science and Technology (Guangzhou);

(8) Chengjie Wang, Tencent Youtu Lab.

:::

:::info

This paper is available on arXiv under the CC BY-NC-ND 4.0 Deed (Attribution-NonCommercial-NoDerivs 4.0 International) license.

:::