Cloudflare recently implemented the node:http client and server APIs in Cloudflare Workers, allowing developers to migrate existing Node.js applications to the serverless computing platform. These HTTP APIs enable popular Node.js frameworks, such as Express.js and Koa.js, to run on Workers.

Yagiz Nizipli and James M Snell, principal systems engineers at Cloudflare, write:

This significant addition brings familiar Node.js HTTP interfaces to the edge, enabling you to deploy existing Express.js, Koa, and other Node.js applications globally with zero cold starts, automatic scaling, and significantly lower latency for your users — all without rewriting your codebase.

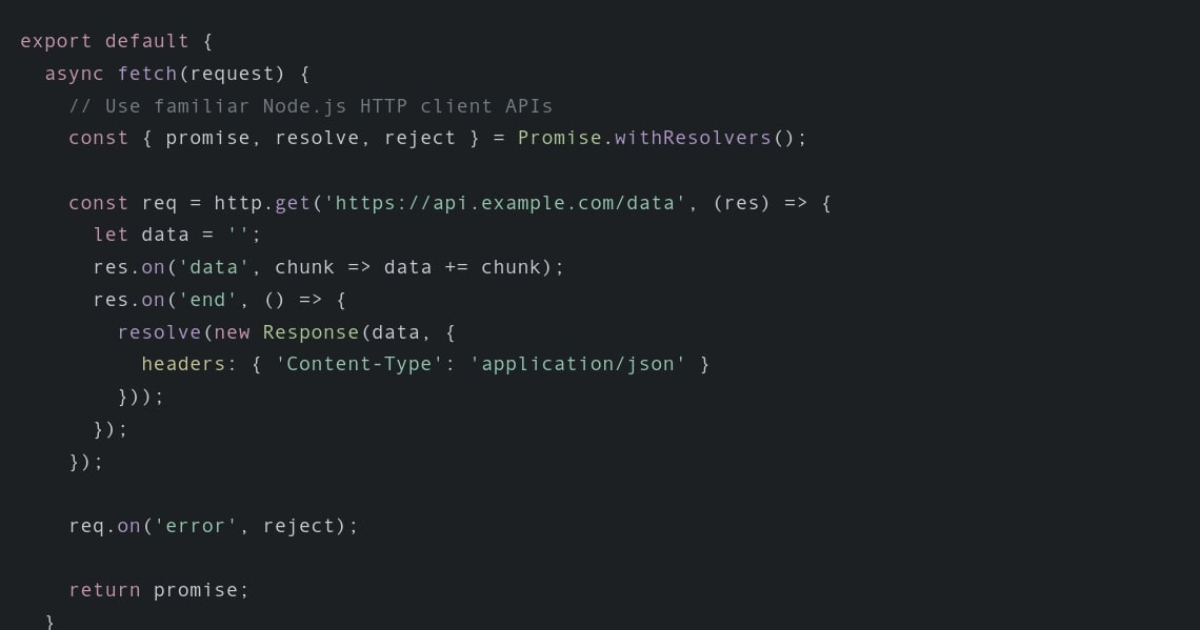

To support HTTP client APIs, Cloudflare reimplemented the core node:http APIs by building on top of the standard fetch() API that Workers use natively, maintaining Node.js compatibility without significantly affecting performance. The wrapper approach supports standard HTTP methods, request and response headers, request and response bodies, streaming responses, and basic authentication.
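To make the wrapper idea concrete, the sketch below shows one way Node-style request options could be translated into arguments for fetch(). It is an illustration under stated assumptions, not Cloudflare's implementation: the option field names and defaults mirror those of Node's http.request(), while `NodeStyleOptions`, `FetchArgs`, and `toFetchArgs` are hypothetical names introduced here.

```typescript
// Illustrative sketch only; not Cloudflare's code. The option fields follow
// those accepted by Node's http.request(); all type and function names
// below are hypothetical.

interface NodeStyleOptions {
  protocol?: string; // e.g. "https:" (Node includes the trailing colon)
  hostname?: string;
  port?: number;
  path?: string;
  method?: string;
  headers?: Record<string, string>;
}

interface FetchArgs {
  url: string;
  init: { method: string; headers: Record<string, string> };
}

// Build the URL and init object that a fetch()-based wrapper would need.
function toFetchArgs(options: NodeStyleOptions): FetchArgs {
  const protocol = options.protocol ?? "http:";
  const hostname = options.hostname ?? "localhost";
  const port = options.port !== undefined ? `:${options.port}` : "";
  const path = options.path ?? "/";
  return {
    url: `${protocol}//${hostname}${port}${path}`,
    init: {
      // Node upper-cases the method name; the sketch does the same.
      method: (options.method ?? "GET").toUpperCase(),
      // Copy the headers so the caller's object is not mutated later.
      headers: { ...(options.headers ?? {}) },
    },
  };
}

const exampleArgs = toFetchArgs({
  protocol: "https:",
  hostname: "example.com",
  path: "/api/items",
  method: "post",
  headers: { "content-type": "application/json" },
});
// A wrapper could now call fetch(exampleArgs.url, exampleArgs.init).
```

A real compatibility layer would also need to handle request bodies, streaming, and authentication, which this sketch leaves out.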

However, because the implementation wraps fetch() rather than exposing raw sockets, some Node.js APIs cannot be supported. Among the current limitations and differences from a standard Node.js environment, the Agent API is provided but operates as a no-op, and trailers, early hints, and 1xx responses are not supported. Furthermore, since Workers handle TLS automatically, TLS-specific options are not supported.

The addition received positive feedback from the community, though some users noted that this feature should have been introduced earlier. Himanshu Kumar comments on X:

This unlocks exciting possibilities for serverless Node.js. A smoother transition means wider adoption and faster innovation.

Since Cloudflare Workers operate in a serverless environment, direct TCP connections are not available. All networking operations are managed by services outside the Workers runtime, which handle connection pooling, keep connections warm, and manage egress IPs. Nizipli and Snell explain:

The server-side implementation is where things get particularly interesting. Since Workers can’t create traditional TCP servers listening on specific ports, we’ve created a bridge system that connects Node.js-style servers to the Workers request handling model.

When a developer creates an HTTP server and calls listen(port), no TCP socket is opened. Instead, the server is registered in an internal table within the Worker, which acts as a bridge between servers created with http.createServer and incoming fetch requests, using the port number as the identifier. The developer then connects the server to the Worker either automatically, using the httpServerHandler method, or manually, using the handleAsNodeRequest method.

According to the documentation, when using port-based routing, the port number acts as a routing key to determine which server handles requests, allowing multiple servers to coexist in the same Worker.
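The registration and port-based routing described above can be pictured with a small, self-contained sketch. This is only a conceptual model and not Cloudflare's implementation: `registry`, `listen`, `dispatch`, and the request and response shapes are hypothetical names, and the behavior for duplicate or unknown ports is an assumption made for the example.

```typescript
// Conceptual model only; all names here are hypothetical and the real
// Workers runtime may behave differently.

interface SketchRequest { method: string; path: string; }
interface SketchResponse { status: number; body: string; }
type Handler = (req: SketchRequest) => SketchResponse;

// The internal table: port number -> registered request handler.
const registry = new Map<number, Handler>();

// Stand-in for server.listen(port): no socket is opened, the handler is
// only recorded under its port number.
function listen(port: number, handler: Handler): void {
  if (registry.has(port)) {
    // Assumption for this sketch: a port can be registered only once.
    throw new Error(`port ${port} is already registered`);
  }
  registry.set(port, handler);
}

// Stand-in for the bridge: use the port as a routing key and forward the
// incoming request to the matching server.
function dispatch(port: number, req: SketchRequest): SketchResponse {
  const handler = registry.get(port);
  if (handler === undefined) {
    // Assumption for this sketch: unknown ports yield an error response.
    return { status: 502, body: `no server registered on port ${port}` };
  }
  return handler(req);
}

// Two servers coexisting in the same Worker, distinguished by port.
listen(8080, (req) => ({ status: 200, body: `app: ${req.method} ${req.path}` }));
listen(8081, () => ({ status: 200, body: "admin" }));
```

In this model, `dispatch(8080, ...)` reaches the first handler and `dispatch(8081, ...)` reaches the second, which is the sense in which the port number serves as a routing key rather than a network endpoint.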

Cloudflare is not the only hyperscaler offering Node.js on its serverless computing platform, with Node.js runtimes also available for AWS Lambda, Google Cloud Run, and Azure Functions.