Table of Links

Abstract and 1. Introduction

2 Data

2.1 Data Sources

2.2 SS and SI Categories

3 Methods

3.1 Lexicon Creation and Expansion

3.2 Annotations

3.3 System Description

4 Results

4.1 Demographics and 4.2 System Performance

5 Discussion

5.1 Limitations

6 Conclusion, Reproducibility, Funding, Acknowledgments, Author Contributions, and References

SUPPLEMENTARY

Guidelines for Annotating Social Support and Social Isolation in Clinical Notes

Other Supervised Models

3.3 System Description

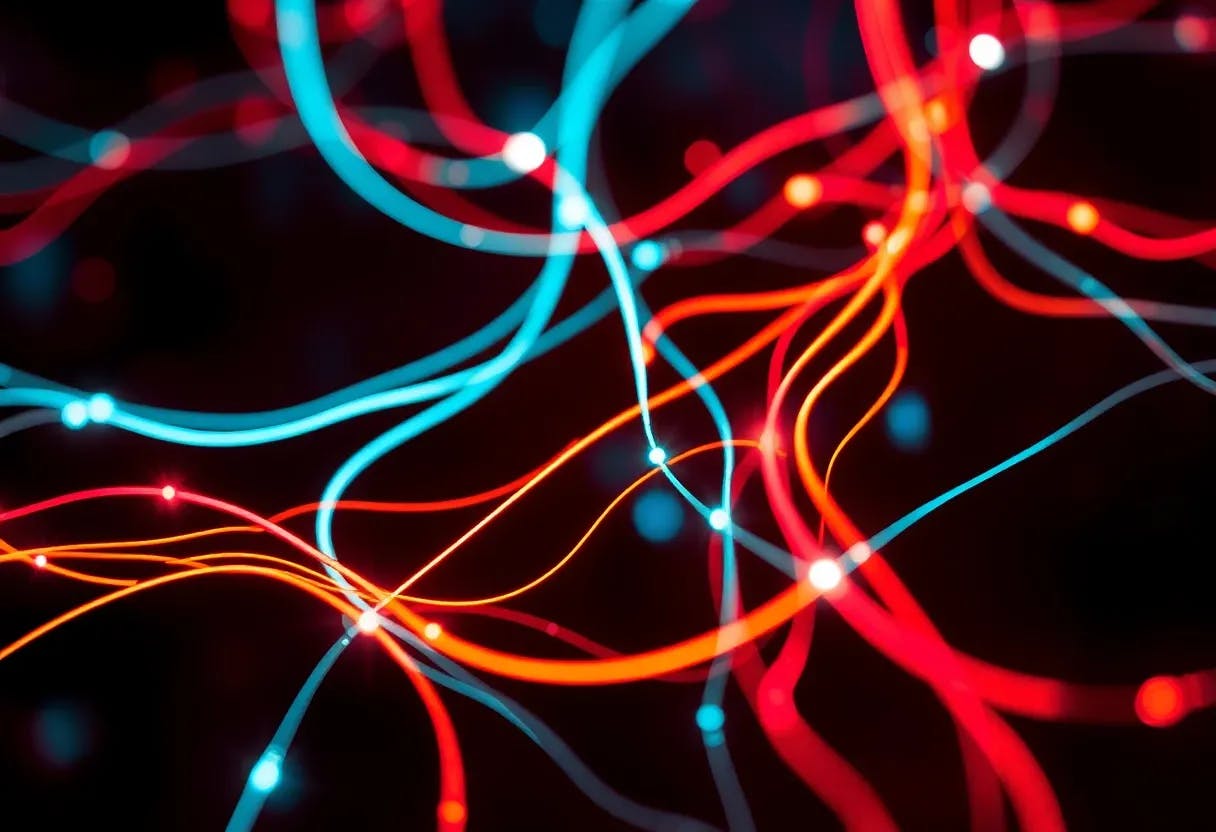

We developed rule-based and LLM-based systems to identify mentions of fine-grained categories in clinical notes. Rules were then used to translate entity-level classifications into note-level classifications, and fine-grained labels into coarse-grained labels, as described in Section 3.2. The architecture of the NLP systems is shown in Figure 2.
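The entity-to-note and fine-to-coarse translation step can be sketched as follows. This is a minimal sketch under stated assumptions: the category identifiers, the `FINE_TO_COARSE` mapping, and the "at least one mention" rule are hypothetical stand-ins for the rules defined in Section 3.2, which are not reproduced in this section.

```python
from collections.abc import Iterable

# Hypothetical fine-to-coarse mapping (SS = social support, SI = social isolation).
# The actual category set and aggregation rules are described in Section 3.2.
FINE_TO_COARSE = {
    "emotional_support": "SS",
    "instrumental_support": "SS",
    "loneliness": "SI",
    "no_emotional_support": "SI",
}


def note_level_labels(entity_labels: Iterable[str]) -> tuple[set[str], set[str]]:
    """Collapse the entity-level labels found in one note into note-level labels.

    Assumed rule: a note receives a fine-grained label if at least one entity
    in it carries that label, and a coarse label if any of its fine-grained
    labels maps to it. Unknown labels are ignored.
    """
    fine = {label for label in entity_labels if label in FINE_TO_COARSE}
    coarse = {FINE_TO_COARSE[label] for label in fine}
    return fine, coarse


fine, coarse = note_level_labels(["loneliness", "loneliness", "emotional_support"])
print(sorted(fine), sorted(coarse))
```

Using sets means repeated mentions of the same category in a note count once, which matches a note-level (present/absent) view of each category.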

3.3.1 Rule-based System

As mentioned earlier, a major advantage of the rule-based system (RBS) is full transparency in how classification decisions are made. We implemented the system using the open-source spaCy Matcher§ [41]. Additionally, we compiled a list of exclusion keywords (see Supplementary Table S4) to refine the rules so that only relevant mentions are identified.
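The lexicon-plus-exclusion idea can be illustrated with a small matcher. To keep the example dependency-free, this sketch swaps in plain regular expressions for spaCy's token-pattern `Matcher`; the lexicon phrases, the exclusion keywords, and the choice to discard a whole sentence when an exclusion keyword appears are all hypothetical assumptions, not the paper's actual rules.

```python
import re

# Hypothetical lexicon and exclusion keywords for one fine-grained category.
# The real lexicon comes from Section 3.1 and the exclusions from Supplementary Table S4.
LEXICON = {"loneliness": ["lonely", "loneliness", "feels isolated"]}
EXCLUSIONS = ["lonely planet", "socially isolated by choice"]


def _phrase_regex(phrase: str) -> re.Pattern:
    # Match the phrase as whole words, case-insensitively, allowing extra whitespace.
    words = map(re.escape, phrase.split())
    return re.compile(r"\b" + r"\s+".join(words) + r"\b", re.IGNORECASE)


def match_sentence(sentence: str) -> list[tuple[str, str]]:
    """Return (category, matched_text) pairs for one sentence.

    Assumed rule: if any exclusion keyword occurs, the sentence yields no matches.
    """
    if any(_phrase_regex(ex).search(sentence) for ex in EXCLUSIONS):
        return []
    hits = []
    for category, phrases in LEXICON.items():
        for phrase in phrases:
            for m in _phrase_regex(phrase).finditer(sentence):
                hits.append((category, m.group(0)))
    return hits


print(match_sentence("Patient reports feeling lonely since her husband died."))
print(match_sentence("She is reading Lonely Planet guides."))
```

With spaCy itself, each phrase would instead become a token pattern (for example, one dict per token keyed on `LOWER`) registered with `Matcher.add`, which gives finer control over token attributes than regular expressions do.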

3.3.2 Supervised Models

Expanding on the published literature, we first attempted to implement Support Vector Machines (SVMs) and Bidirectional Encoder Representations from Transformers (BERT)-based models at WCM to identify fine-grained categories. However, these models were inappropriate because of the small number of SS/SI mentions in the corpus (see Supplementary Material and Table S6).

3.3.3 Large Language Models (LLMs)

We developed a semi-automated method to identify SS and SI using an open-source, instruction-fine-tuned LLM called “Fine-tuned LAnguage Net-T5 (FLAN-T5)” [42, 43]. We used FLAN-T5 in a “question-answering” fashion to extract sentences from clinical texts that mention SS and SI subcategories. A separate fine-tuned model was created for each fine-grained category.

Model Selection: T5 has been used for other classification tasks in clinical notes, and the FLAN (Fine-tuned Language Net) version of T5, which employs chain-of-thought (CoT) prompting, does not require labeled training data [43]. Five variants of FLAN-T5 are available, differing in the number of model parameters¶. Guevara et al. [32] observed that FLAN-T5-XL outperformed the smaller models (FLAN-T5-L, FLAN-T5-base, and FLAN-T5-small) and that the larger FLAN-T5-XXL yielded no significant further improvement. Thus, we selected FLAN-T5-XL for our experiments.

Zero-shot: Because LLMs follow instructions and are trained on massive amounts of data, they do not necessarily require labeled training data. This “zero-shot” approach was performed by providing the model with an instruction, a context, a question, and the possible choices (‘yes,’ ‘no,’ or ‘not relevant’). An example is provided in Table 1. The option ‘no’ was used for contexts in which the subcategory was negated, and ‘not relevant’ for contexts that did not pertain to the subcategory or the question.
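The four prompt components and the three answer options can be sketched as a prompt builder plus an answer parser. The exact prompt layout below is an assumption (the paper's template is shown in Table 1), and the parser is a hypothetical helper for mapping free-text model output onto the three choices.

```python
from typing import Optional

CHOICES = ("yes", "no", "not relevant")


def build_prompt(instruction: str, context: str, question: str) -> str:
    """Assemble a zero-shot prompt from instruction, context, question, and options.

    The layout is illustrative only; the paper's actual template is in Table 1.
    """
    options = "\n".join(f"- {c}" for c in CHOICES)
    return (
        f"{instruction}\n\n"
        f"Context: {context}\n"
        f"Question: {question}\n"
        f"Options:\n{options}\n"
        "Answer:"
    )


def parse_answer(generated: str) -> Optional[str]:
    """Map generated text onto one of the three choices, or None if none matches."""
    text = generated.strip().lower().rstrip(".")
    # Check longer choices first so "not relevant" is not mistaken for "no".
    for choice in sorted(CHOICES, key=len, reverse=True):
        if text.startswith(choice):
            return choice
    return None


prompt = build_prompt(
    "Answer the question about the patient using only the context.",
    "Patient lives alone and says she feels lonely most days.",
    "Does the patient experience loneliness?",
)
print(prompt)
print(parse_answer("Not relevant."))
```

The resulting string would then be passed to FLAN-T5-XL (for instance through a Hugging Face `transformers` text2text-generation pipeline), and the generated text mapped back with a parser of this kind.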

Fine-tuning: Because zero-shot FLAN-T5-XL with instructions yielded poor F-scores (see Supplementary Table S8), we improved the models by fine-tuning them on synthetic examples designed to teach the model about each specific SS or SI subcategory. For each fine-grained category, approximately 50 ‘yes,’ 50 ‘no,’ and 50 ‘not relevant’ examples were created. These synthetic examples also served as the validation set for tuning the fine-tuning parameters. ChatGPT (GPT-4)‖ was used to help draft context examples, but after several iterations on the validation set, the domain experts refined them so that each example was specifically instructive about the inclusions and exclusions of the category. Example prompts for loneliness are provided in Table 1. All fine-tuning examples and questions for each subcategory are provided in the Supplementary Material and Table S7. Furthermore, giving LLMs specific stepwise instructions to follow (“instruction tuning”) has been shown to improve performance by reducing hallucinations [44, 45]. Therefore, we included an instruction as part of each prompt.
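Turning the expert-curated synthetic examples into a balanced sequence-to-sequence training set could look like the sketch below. The `(context, label)` input format, the prompt layout, and the helper names are hypothetical; the paper's actual examples and questions are in Supplementary Table S7.

```python
import random
from collections import Counter

LABELS = ("yes", "no", "not relevant")


def make_training_pairs(
    instruction: str,
    question: str,
    examples: list[tuple[str, str]],
    seed: int = 0,
) -> list[tuple[str, str]]:
    """Convert (context, label) synthetic examples into shuffled (prompt, target) pairs.

    The instruction is prepended to every prompt, mirroring the paper's use of
    an instruction as part of the prompt. The layout itself is an assumption.
    """
    pairs = []
    for context, label in examples:
        if label not in LABELS:
            raise ValueError(f"unexpected label: {label!r}")
        prompt = f"{instruction}\nContext: {context}\nQuestion: {question}\nAnswer:"
        pairs.append((prompt, label))
    # A fixed seed keeps the shuffle reproducible across runs.
    random.Random(seed).shuffle(pairs)
    return pairs


def label_balance(pairs: list[tuple[str, str]]) -> Counter:
    """Count targets per label, e.g. to confirm a roughly 50/50/50 split."""
    return Counter(target for _, target in pairs)


examples = [
    ("Pt lives alone and feels lonely.", "yes"),
    ("Denies feeling lonely.", "no"),
    ("Lonely Planet guidebook on bedside table.", "not relevant"),
]
pairs = make_training_pairs("Answer yes, no, or not relevant.", "Is the patient lonely?", examples)
print(label_balance(pairs))
```

Checking the label balance before fine-tuning is a cheap safeguard that each category's model sees all three answer types in roughly equal proportion.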

Parameters: The parameter-efficient Low-Rank Adaptation (LoRA) fine-tuning method has previously been used with FLAN-T5 models to identify SDOH categories [32]. However, we selected the newer Infused Adapter by Inhibiting and Amplifying Inner Activations (IA3) method because of its reported better performance [46]. Models were fine-tuned for 15 to 20 epochs. Fine-tuning parameters can be viewed in our publicly available code∗∗.
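To make the IA3 idea concrete, the sketch below shows its core mechanism in NumPy: the pretrained weights stay frozen, and only small learned vectors rescale the attention keys and values and the feed-forward hidden activations elementwise. This is a simplified, single-head illustration (generic scaled dot-product attention and a ReLU feed-forward block), not FLAN-T5's exact layers and not the authors' training code.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the row max for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def ia3_attention(q, k, v, l_k, l_v):
    """Single-head attention with IA3 rescaling.

    q, k, v: (seq_len, d) outputs of frozen projections.
    l_k, l_v: (d,) learned vectors that rescale keys and values elementwise.
    """
    d = q.shape[-1]
    scores = q @ (l_k * k).T / np.sqrt(d)
    return softmax(scores) @ (l_v * v)


def ia3_ffn(x, w1, w2, l_ff):
    """Feed-forward block whose hidden activations are rescaled by learned l_ff (d_ff,).

    w1 and w2 are frozen; ReLU is used here for simplicity.
    """
    h = np.maximum(x @ w1, 0.0)
    return (l_ff * h) @ w2


rng = np.random.default_rng(0)
q, k, v = rng.normal(size=(3, 4, 8))
# IA3 vectors are initialized to ones, so training starts from the frozen model's behaviour.
out = ia3_attention(q, k, v, np.ones(8), np.ones(8))
print(out.shape)
```

Because only the vectors `l_k`, `l_v`, and `l_ff` are trained, each adapted layer adds just a few thousand parameters, which is what makes training one model per fine-grained category affordable.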

3.3.4 Evaluation

All evaluations were performed at the note level for both the fine- and coarse-grained categories. To validate the NLP systems, precision, recall, and F-score were macro-averaged, giving equal weight to each category regardless of its number of instances. Instances of the emotional support and no emotional support subcategories were rare in the underlying notes (see Supplementary Table S5 for full counts); therefore, performance on these subcategories could not be assessed.
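Note-level macro-averaging can be sketched as follows: each category is scored as a binary present/absent decision per note, and the per-category scores are then averaged with equal weight. Two conventions here are assumptions: undefined ratios (e.g. precision with no predicted positives) are set to 0, and macro F is taken as the mean of per-category F-scores rather than the harmonic mean of macro precision and recall.

```python
def prf(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Precision, recall, and F1 from counts; undefined ratios are set to 0."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f


def macro_prf(
    gold: dict[str, set[str]],
    pred: dict[str, set[str]],
    categories: list[str],
) -> tuple[float, float, float]:
    """Macro-averaged note-level P/R/F over categories.

    gold and pred map a note ID to the set of categories labelled in that note.
    Every category contributes equally, however many notes mention it.
    """
    scores = []
    for c in categories:
        tp = fp = fn = 0
        for note_id in gold.keys() | pred.keys():
            in_gold = c in gold.get(note_id, set())
            in_pred = c in pred.get(note_id, set())
            tp += int(in_gold and in_pred)
            fp += int(in_pred and not in_gold)
            fn += int(in_gold and not in_pred)
        scores.append(prf(tp, fp, fn))
    n = len(scores)
    return tuple(sum(s[i] for s in scores) / n for i in range(3))


gold = {"n1": {"SS"}, "n2": {"SI"}, "n3": {"SS", "SI"}}
pred = {"n1": {"SS"}, "n2": set(), "n3": {"SS"}}
print(macro_prf(gold, pred, ["SS", "SI"]))  # (0.5, 0.5, 0.5)
```

In this example the system is perfect on SS but misses every SI note, so macro-averaging reports 0.5, whereas a micro average would be pulled toward whichever category has more notes.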

§ https://spacy.io/api/matcher

¶ https://huggingface.co/docs/transformers/model_doc/flan-t5

‖https://openai.com/blog/chatgpt

∗∗https://github.com/CornellMHILab/Social_Support_Social_Isolation_Extraction

Authors:

(1) Braja Gopal Patra, Weill Cornell Medicine, New York, NY, USA (co-first author);

(2) Lauren A. Lepow, Icahn School of Medicine at Mount Sinai, New York, NY, USA (co-first author);

(3) Praneet Kasi Reddy Jagadeesh Kumar, Weill Cornell Medicine, New York, NY, USA;

(4) Veer Vekaria, Weill Cornell Medicine, New York, NY, USA;

(5) Mohit Manoj Sharma, Weill Cornell Medicine, New York, NY, USA;

(6) Prakash Adekkanattu, Weill Cornell Medicine, New York, NY, USA;

(7) Brian Fennessy, Icahn School of Medicine at Mount Sinai, New York, NY, USA;

(8) Gavin Hynes, Icahn School of Medicine at Mount Sinai, New York, NY, USA;

(9) Isotta Landi, Icahn School of Medicine at Mount Sinai, New York, NY, USA;

(10) Jorge A. Sanchez-Ruiz, Mayo Clinic, Rochester, MN, USA;

(11) Euijung Ryu, Mayo Clinic, Rochester, MN, USA;

(12) Joanna M. Biernacka, Mayo Clinic, Rochester, MN, USA;

(13) Girish N. Nadkarni, Icahn School of Medicine at Mount Sinai, New York, NY, USA;

(14) Ardesheer Talati, Columbia University Vagelos College of Physicians and Surgeons, New York, NY, USA and New York State Psychiatric Institute, New York, NY, USA;

(15) Myrna Weissman, Columbia University Vagelos College of Physicians and Surgeons, New York, NY, USA and New York State Psychiatric Institute, New York, NY, USA;

(16) Mark Olfson, Columbia University Vagelos College of Physicians and Surgeons, New York, NY, USA, New York State Psychiatric Institute, New York, NY, USA, and Columbia University Irving Medical Center, New York, NY, USA;

(17) J. John Mann, Columbia University Irving Medical Center, New York, NY, USA;

(18) Alexander W. Charney, Icahn School of Medicine at Mount Sinai, New York, NY, USA;

(19) Jyotishman Pathak, Weill Cornell Medicine, New York, NY, USA.