Transcript

Naveen Mareddy: Imagine binge watching your favorite show, like Squid Game, which most of you have probably binge watched, not knowing that there are millions of trace spans and thousands of microservice calls orchestrating all of this so that you can watch the title on the product. Did you know it takes about 1 million trace spans to represent the workflow that encodes a single episode of Squid Game Season 2? The episodes are roughly an hour long, and that’s a single episode. Now let’s look at encoding this one episode of Squid Game Season 2 in numbers. When encoding is done for this one episode, it produces 140 video encodes, to support different video encode profiles and multiple bitrates.

Similarly, for audio, there are 552 audio encodes that this one episode has. Then coming to the compute, 122,000 CPU hours were used to encode this title. Now switching focus into the observability side of the infrastructure, there are 1 million trace spans that represent the whole orchestration that goes on to produce these encodes, 140 video encodes and 552 audio encodes, and 27,000 unique microservice calls happening to orchestrate this encoding process for one episode.

Then 30 microservices that are encoding microservices for audio, video, text, inspection, they were used for processing this title. This is just to show the scale, starting with one episode. Imagine at Netflix scale where we have a wide variety of content to offer to consumers across the world. Imagine immense scale and complexity involved in encoding. Each title must be playable on a wide variety of device types. Generally, Netflix is pretty good at supporting even old Blu-ray players, too. We want to have maximum reach. Also, we have to accommodate multiple media processing use cases like playback, ads, encoding, and trailer generation for marketing, also like pre-production studio workflows. All of this media processing, imagine if you put all of that together, the scale is enormous. The observability that we are to bring into this and instrument the systems will be proportionally at that scale.

Sujana and I are from the encoding infrastructure team at Netflix, who will be explaining about challenges in the observability space in media processing and how we tackle them. We are from the content infrastructure and solutions organization in Netflix under content engineering. We solve for cross-cutting concerns in content engineering so that other content engineers can build applications for business solutions. We provide solutions around digital asset management and workflows to orchestrate studio workflows, and also provide infrastructure platforms on top of compute and storage. In this CIS, Sujana and I, we are from media infrastructure platform team where we focus especially on compute and storage, platform and abstractions.

Outline

For the talk, we’ll talk about challenges in the observability space in media processing. Then how we tackle these challenges head-on, and evolve from there. Then, what wins did we unlock with the strategies we adopted in the observability area. Then the future, what is on top of our mind, and key takeaways.

The Encoding Process – Bird’s Eye View

I would like to give a bird’s eye view of the encoding process for what is involved in producing one video encode. This is a super simplified view to just set the stage. We get a title from the studios that comes through an ingestion process where there are a bunch of checks that we do, inspections to see that the source is meeting the standards that it’s supposed to. Then, coming to encoding phase, it’s a lot of divide and conquer techniques that is applied due to the complexity of video encoding where the video source is split into chunks. They could be split based on time or scene or complexity of the scenes. Then each chunk is individually encoded, then assembled back, which is called an encode. That’s one video encoded.
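As a rough illustration of the split, encode, and assemble flow described here, the sketch below uses fixed-size frame ranges and a placeholder encoder. Every name in it (`split_into_chunks`, `encode_chunk`, `assemble`, `encode_video`) is hypothetical, and real chunking could be based on time, scene, or complexity instead.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(total_frames, chunk_size):
    """Split a frame range into (start, end) chunks; end is exclusive."""
    return [
        (start, min(start + chunk_size, total_frames))
        for start in range(0, total_frames, chunk_size)
    ]


def encode_chunk(chunk):
    """Placeholder for a real encoder: returns a labelled payload."""
    start, end = chunk
    return {"start": start, "end": end, "payload": f"encoded[{start}:{end}]"}


def assemble(encoded_chunks):
    """Stitch the encoded chunks back together in source order."""
    ordered = sorted(encoded_chunks, key=lambda c: c["start"])
    return [c["payload"] for c in ordered]


def encode_video(total_frames, chunk_size):
    chunks = split_into_chunks(total_frames, chunk_size)
    # Each chunk is independent, so the chunks can be encoded in parallel.
    with ThreadPoolExecutor() as pool:
        encoded = list(pool.map(encode_chunk, chunks))
    return assemble(encoded)


result = encode_video(total_frames=10, chunk_size=4)
```

Because chunks do not depend on each other, the fan-out is limited only by available compute, which is one reason a single encode can turn into many service calls.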

Then the encoded video is packaged into container formats so that the devices can play. There are some standards there. Then the packaged content is deployed to a CDN, Netflix’s CDN, called Open Connect. At that point, that title is playable, ready to play on the product. That’s what goes on in video encoding. Similar things happen for audio encoding. There could be different nuances there, and text processing and inspections.

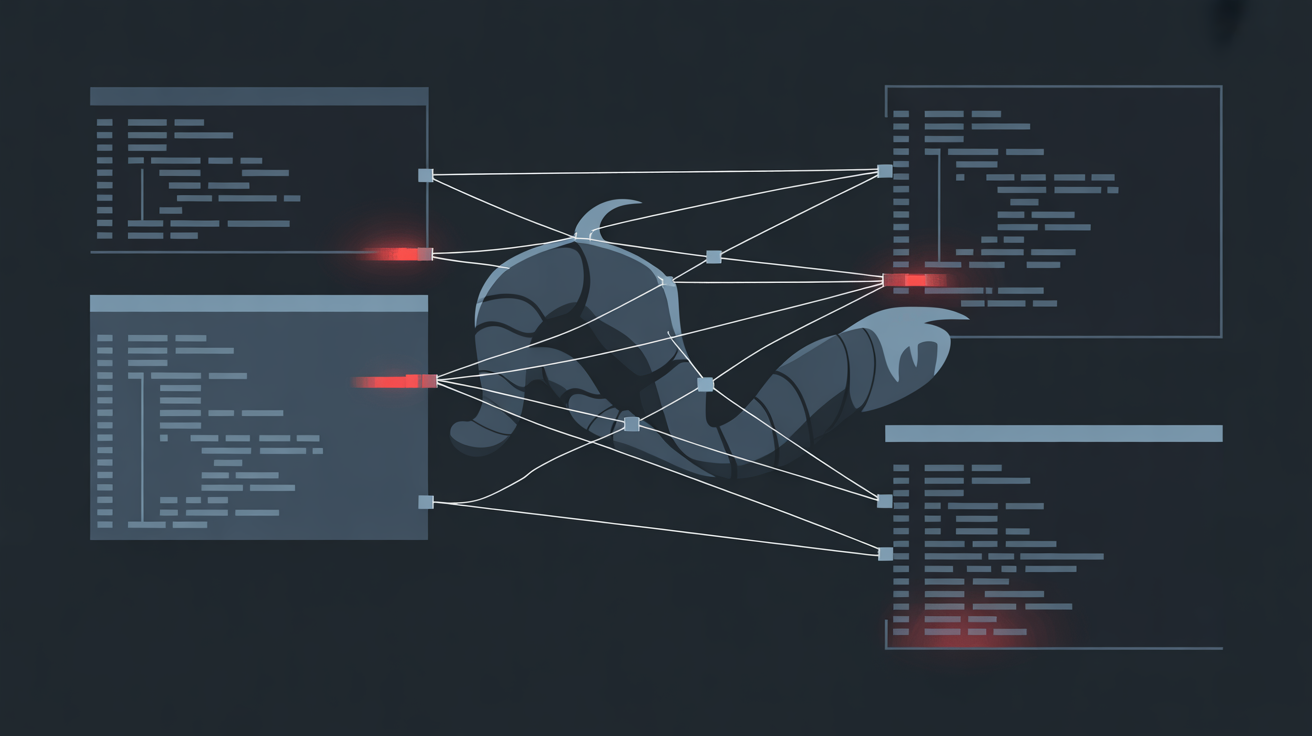

How do we do this? At Netflix, our team built an encoding platform called Cosmos, which we blogged about before. The Cosmos platform combines microservices, asynchronous workflows, and serverless functions, to create one microservice which is like one box here. Cosmos allows the representation of very resource-intensive, hierarchical workflows, to encode the videos and process the full title, and give nice boundaries for each team to have their own microservice. Like audio encoding team will have their own set of services, and video encoding teams would have their own set of services.

All the while, Cosmos enables building services that support both latency-sensitive and throughput-sensitive workloads. Throughput-oriented use cases are about scale, like the back catalog. Latency-sensitive workloads are ones where a human is waiting, or where a live title is converted to VOD, and we have very tight timelines to meet. Both are facilitated by the Cosmos media processing platform. Here in the picture, we’re showing three layers of Cosmos services. The top one is connected to business use cases, and the lower ones get into subject matter expert microservices. Imagine six of them of similar complexity coming together, talking to each other to encode the movie.

Challenges

Let’s look at some challenges here. We’ve seen the scale of encoding, so let’s explore the challenges. Over the past decade, to arrive at Cosmos, we have transformed our monolith into a sophisticated, multi-tiered, distributed Cosmos-based architecture. Twelve years ago, we started with a basic monolith setup, and as you can imagine, every story in distributed systems starts with a monolith. It didn’t scale, so we built a bigger monolith, which worked until 2017. Then we started seeing scaling challenges with that too. So we headed down the distributed and microservices path, starting with microservices where each box is a microservice, orchestrated in two layers like that.

Then we had more layers to have more indirection and more separation of responsibilities. Today, it looks like that, with six levels, even more complex than this. That is where we are. With this evolution, the observability challenges and operational challenges for our users also grew exponentially. I’ll show what an actual service looks like. Sujana and I took one of those boxes in the previous picture, opened it up to see all the steps inside it, and plotted them. This is our Sagan, one of the green boxes on the top tier. It looks like that. It’s not meant to be read, but there are so many steps that happen within that one microservice. Now imagine there are six of them coming together. Imagine how many things you want to check when things don’t work. You want to trace: is the request stuck, or is it genuinely taking longer to finish? All these things will happen in every layer. Also, our users who use this platform come to us and ask for top-down, drill-down support in these complex hierarchical services when they’re debugging issues.

One challenge when we’re building the platform is we want to provide out-of-the-box affordances so that when a top-level service has a question, which is, why is this video encode, like this title, still not ready? Then they click down, they see that audio is good, but video is 80% there, there are 10 more encodes pending. If you drill down further, you see that particular video profile. If you drill down further, it goes to a particular bitrate. All that support, our users want us to build into the platform. That’s one challenge coming our way.

Then, the other one is also supporting bottom-up, where users want to surface error information, which will be rolled up in the top-level services into more meaningful errors for business users. Also, insights, the services want to surface up to get operational insights and also business insights to tune the compute usage. This is a challenge when we’re designing the system. The other one, as I’ve shown in the previous picture, the requests are all hierarchical, and this is a screenshot from our internal tool called Nirvana, which our users use to debug their services and see the progress of the encoding. Here, what you’re seeing is each line in here is a microservice call made from the top-level service. I had to scroll the screen, and take multiple screenshots to fit into one slide here, but this is only from one level. This basically highlights that the fan-out happening at one level is like that, and similarly, if you go down six levels, imagine how much fan-out is happening.

Also, traditional systems versus ours, there’s this difference where like in debugging flows, users start with logs in traditional request-response systems, go to traces, and then domain data. In our system, that exists too. Plus, on top, there’s a request view also. That’s an extra thing that we have. Also, we have to support the reverse direction of debugging workflows for our users so that if there is an issue within video encode where there’s a green frame in some particular device in a certain part of the world, so a user starts, comes with the domain data. This is the problem with debugging, and they want to pinpoint and get to which serverless function execution is exhibiting that issue. Just here is a comparison on traditional microservices environment and our encoding workflows, how the observability needs are different.

Traditional systems are request-response; ours are batch, async, and long-running. Metrics in traditional systems are generally time series with low cardinality, which suffices there. Ours are low cardinality plus derived high cardinality metrics, so that we can add extra tags on the metrics, like device profile and codec type. Similarly, namespaces are simpler for traditional services, whereas ours is a complex, tiered system that creates more namespaces. Tracing is considered optional in traditional scenarios, but for us, tracing is mandatory since everything starts with a trace. Without that, users cannot navigate the debugging workflows.

To sum it up, the observability challenges in this space for us are these. Monitoring asynchronous tasks is hard. The span count is super big, so every time we talk to anyone, our use case turns out to be special and has to be solved differently. Decorating the trace data so that all the systems across the layers use it consistently is a big challenge. So is reasoning about encoding latencies: which level is contributing to the latency issue, or is it a dropped request somewhere? These are the challenges.

Evolution

Sujana Sooreddy: With this journey now, we look into how we evolved our observability solutions. This is a journey of 8 long years, so where we started and how we have evolved. We started with the monolithic architecture. We all know the concerns with it. We said, no, let’s just move on to a different system, and we built a distributed architecture. With our distributed asynchronous architecture, there come the challenges of observability where we deal with tracing, but then our scale hit, and there were more challenges.

Then after that, we wanted more insights. It’s not just about singular trace debugging; the need for insights never stops. The business wanted more insights. After insights, does it stop? No, it needed more, but in this case, we didn’t want islands of data. We wanted connected data. It’s like oceans, data that flows around, versus singular islands of data. Let’s see the problems with the monolithic architecture. If you know, you know. Then we said, let’s go into distributed systems. With distributed systems, it’s a layering of services. We have the really basic subject matter expert services, followed by how they are tied together. Then we built multiple of those, and then built workflows on top of them.

Now imagine if I want to visually represent these things, allow our engineers to understand where their problems are, we had to develop a solution. There came the different kinds of challenges that we want to focus on. We wanted to focus on tracking our requests, following our logs, so we can start at any point and get to any point in the request. Get those breadcrumbs so the users could follow and not get into the team boundary dynamics. Get analysis on our performance wait times and the execution times. Then as the whole industry did, we also went into the three pillars of observability. We did tracing, logs, metrics, everything connected to our trace data. It was great. We basically adopted OpenTelemetry, used our Zipkin data model, the client spans, server spans, everything that comes with it. We instrumented all our systems because both our queuing system, our serverless encoding platforms, multi-tenant workflow engine, none of them had the traditional instrumentation. We had to go instrument everything.

Then we built custom visualization. We had these beautiful views where the user could go understand what is happening with their system. We could basically pinpoint what their wait times were. Those were the ones which are in your light-colored ones and what their actual execution times were. Why are they sitting in the queue? What are the errors they’re facing? This looks so good when your spans are at that level. Here, if you look, I think there are like 31 spans. That’s it. Then that is where we felt, we solved the distributed systems problem of observability.

Then the scale hit. What happened when scale hit? There were more than 300k spans on average. With 300k spans, it’s just not possible for a human eye to comprehend anything from it. Then the trace wasn’t finishing in 30 minutes. Traces were taking upwards of 7 days in some cases when we were running any of our campaigns. When it’s seven days, it means the aggregation is also going to take seven days. We were seeing more than 100K requests per trace. What happened? Everything was showing this sign. You open the UI and it says loading and loading. Eventually, if you’re lucky, you’ll get to it, but otherwise it times out. No one was able to figure out what was happening or where it was happening. People started retrying at that point. Then nothing was possible. These are the views they were looking at, asking, “Sujana, what can we make out of this?”

Then we said, these are the things you’re doing. Let’s focus on introducing the request ID concept. I think Sam Newman also covered this before. Basically, we introduced something called request ID, and let’s focus on one singular thing. At every service level, we introduced request ID. Every service gets a request ID associated to it. It means our trace, which went on to have these humongous spans, now has something to hold on to where you can group them. We will be able to group by each singular request. You can focus on those particular things. This led us into visualizing, getting to our spans in the context of trees. If you look here, we are talking about requests here. Then we’re also touching audio. We’re not even going from top-down. This gives us, the users, to go back and forth while focusing just on the requests. This basically removed the confusion.
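A minimal sketch of the request ID idea, assuming each span carries a `request_id` and the ID of the request that spawned it (the field name `parent_request_id` is an assumption, not the actual Cosmos schema). Grouping by request collapses a huge flat span list into a small tree of requests.

```python
from collections import defaultdict

# Hypothetical spans; a real trace would have hundreds of thousands.
spans = [
    {"span_id": "s1", "request_id": "title-1", "parent_request_id": None},
    {"span_id": "s2", "request_id": "video-1", "parent_request_id": "title-1"},
    {"span_id": "s3", "request_id": "video-1", "parent_request_id": "title-1"},
    {"span_id": "s4", "request_id": "audio-1", "parent_request_id": "title-1"},
]


def group_by_request(spans):
    """Map each request ID to the span IDs that belong to it."""
    groups = defaultdict(list)
    for span in spans:
        groups[span["request_id"]].append(span["span_id"])
    return dict(groups)


def request_tree(spans):
    """Map each request ID to the sorted list of its child request IDs."""
    children = defaultdict(set)
    for span in spans:
        parent = span["parent_request_id"]
        if parent is not None:
            children[parent].add(span["request_id"])
    return {parent: sorted(kids) for parent, kids in children.items()}


groups = group_by_request(spans)
tree = request_tree(spans)
```

A UI can then render `tree` first and load the spans in `groups` only for the request the user drills into.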

To get that view, we still have the humongous number of spans to load in the UI. So we also looked at how we store our spans. Traditionally, a trace is stored as a blob where all of the spans are kept together. Some of them were stored in S3, some in Cassandra, keyed by trace ID. It’s a blob of spans. We said, let’s reverse it and store each individual span as its own entity. If you want the spans related to a trace, filter by the trace. If you want the spans for a request, filter by the request. Each individual span is its own entity. As a result, you can filter, make your queries faster, and get to your data as fast as possible. What wins did we get from this? The overall wait times for loading the trace execution graphs reduced tremendously.
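To make the "one span, one entity" layout concrete, here is a small sketch that uses an in-memory SQLite table purely as a stand-in; the talk does not name the store used for individual spans, and the table and column names are assumptions. The point is that each span is a row with its own indexed `trace_id` and `request_id`, so a query never has to load a whole trace blob.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE spans (
        span_id     TEXT PRIMARY KEY,
        trace_id    TEXT NOT NULL,
        request_id  TEXT NOT NULL,
        name        TEXT NOT NULL,
        duration_ms INTEGER NOT NULL
    )
    """
)
# Indexes let us filter by trace or by request without scanning every span.
conn.execute("CREATE INDEX idx_spans_trace ON spans (trace_id)")
conn.execute("CREATE INDEX idx_spans_request ON spans (request_id)")

conn.executemany(
    "INSERT INTO spans VALUES (?, ?, ?, ?, ?)",
    [
        ("s1", "t1", "title-1", "orchestrate", 900),
        ("s2", "t1", "video-1", "encode_chunk", 400),
        ("s3", "t1", "video-1", "encode_chunk", 450),
        ("s4", "t1", "audio-1", "encode_audio", 300),
        ("s5", "t2", "video-7", "encode_chunk", 500),
    ],
)


def spans_for_request(conn, request_id):
    """Fetch only the spans of one request."""
    rows = conn.execute(
        "SELECT span_id FROM spans WHERE request_id = ? ORDER BY span_id",
        (request_id,),
    )
    return [row[0] for row in rows]


def span_count_for_trace(conn, trace_id):
    """Count spans in a trace without materializing them."""
    row = conn.execute(
        "SELECT COUNT(*) FROM spans WHERE trace_id = ?", (trace_id,)
    ).fetchone()
    return row[0]
```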

Then we were able to support multi-parameter filtering. Because every span is its own node, now we can basically index on some things and filter it out. We were able to aggregate things at a trace level without fetching the whole trace. I could just aggregate, give me a P95 of wait times and runtimes for every queue or every execution, and you should be able to still get it without getting the whole data. We were able to do those things with explosion of spans. Now we solved these things. Everyone was happy. Me particularly, I was happy.
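The P95 aggregation mentioned here could look roughly like the following, assuming each span record exposes a `queue` name and a `wait_ms` value (both field names are hypothetical). The percentile uses the nearest-rank method, which is one common definition among several.

```python
from collections import defaultdict


def p95(values):
    """Nearest-rank 95th percentile of a non-empty list."""
    ordered = sorted(values)
    # Integer ceiling of 0.95 * n, avoiding floating-point rounding.
    rank = (95 * len(ordered) + 99) // 100
    return ordered[rank - 1]


def p95_wait_by_queue(spans):
    """Aggregate wait times per queue using only the fields we need."""
    waits = defaultdict(list)
    for span in spans:
        waits[span["queue"]].append(span["wait_ms"])
    return {queue: p95(values) for queue, values in waits.items()}


# Twenty video spans with waits 1..20 ms and a single audio span.
sample = [{"queue": "video", "wait_ms": w} for w in range(1, 21)]
sample.append({"queue": "audio", "wait_ms": 5})
summary = p95_wait_by_queue(sample)
```

Only two fields per span are read, which is what makes this kind of aggregate cheap once spans are stored individually.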

Then came the question of real-time insights. When I say real-time, let’s call it near real-time insights. It takes time, since we are all behind queues. Everyone can wait a second to get the insights. Instead of singular trace insights, the question became, “Sujana, it’s great. I can know the wait times in my particular execution, but I want to know what is happening in the overall system”. Instead of singular trace insight, it went into system-level analytics. I want to know if I’m meeting my SLOs, that kind of question. Instead of what happened to my request, like the green frame error, I want to know all the requests where this green frame error has happened. We moved from, what are my queue wait times, to, is my system meeting the SLOs? It means our scale has gone from a singular trace to analyzing the system as a whole. Previously we solved it at the storage level; now we have to solve it at the processing level.

Initially, all of our observability, debugging, and performance were for a singular trace. We had to reverse it. Now we basically made the observability, debugging, and performance the top level, and the traces fall into it. Everything becomes a problem of observability and debugging, and a trace is one of the things that can help us solve it. How did we change our strategy within our teams? We moved from individual trace processing to stream processing on Flink, basically treating everything as streams. We moved from trace-level analytics to pre-aggregated data. At that scale, we also want to save cost on storage. Most of it we could aggregate and move on. We do not need the deeper dive, at least beyond two to three weeks.

That brings us to the stream processing of our spans. Every service emits its spans into a Kafka topic. Anything that goes on anywhere in the Cosmos ecosystem lands in this Kafka topic. What did we do with the data that’s landing in the Kafka topic? We have our own Flink job called the span processor. What does the span processor do? It collects all the spans for a trace. It aggregates the data at the request level. It aggregates at the trace level too, and then pushes these aggregations to Elasticsearch and Iceberg. Because Iceberg’s update latencies are not great, we just insert there at the end of the processing. With Elasticsearch, we write as we go, so it’s more real time.

There is a lag with the analytics on Iceberg. We key all of our data by trace ID, so every span that’s related to a trace ID falls into the same execution bucket. Spans are collected by request ID, and we capture the start and end time of each. What are we doing inside the span job? We are literally building this tree. This is a visual representation; we wrote the code for it. If you look at this, the height of each block represents the duration of the execution, and the length of each arrow represents the queue duration. We know that this is the execution that has happened.
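The keyed aggregation can be sketched in plain Python as below. This is not Flink code and not the actual span processor; it only mimics the idea of per-trace state that is updated as each span arrives, with a hypothetical `SpanProcessor` class and span fields `trace_id`, `request_id`, `start`, and `end`.

```python
from collections import defaultdict


class SpanProcessor:
    """Keeps per-trace state, aggregated per request, as spans stream in."""

    def __init__(self):
        # state[trace_id][request_id] -> running aggregate for that request
        self.state = defaultdict(dict)

    def process(self, span):
        """Fold one span into the aggregate of its request."""
        requests = self.state[span["trace_id"]]
        agg = requests.setdefault(
            span["request_id"],
            {"start": span["start"], "end": span["end"], "span_count": 0},
        )
        agg["start"] = min(agg["start"], span["start"])
        agg["end"] = max(agg["end"], span["end"])
        agg["span_count"] += 1
        return agg

    def trace_summary(self, trace_id):
        """Roll request aggregates up to the trace level (trace must exist)."""
        requests = self.state[trace_id]
        return {
            "start": min(r["start"] for r in requests.values()),
            "end": max(r["end"] for r in requests.values()),
            "request_count": len(requests),
        }


processor = SpanProcessor()
for span in [
    {"trace_id": "t1", "request_id": "video-1", "start": 10, "end": 20},
    {"trace_id": "t1", "request_id": "video-1", "start": 5, "end": 15},
    {"trace_id": "t1", "request_id": "audio-1", "start": 12, "end": 30},
    {"trace_id": "t2", "request_id": "video-9", "start": 0, "end": 1},
]:
    processor.process(span)
```

In a stream processor the `state` dictionary would be keyed state partitioned by trace ID, so all spans of a trace land on the same worker.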

Now, the question comes, “My SLOs are not being met. I want to be faster”. Then we want to understand, how fast can we be? Where are the challenges that are stopping us being faster? We did, ok, let’s strip off all the queue wait times. We wanted to get the best-case processing time. This tree gets navigated into this one. Every queue wait time is basically removed from your execution tree. Once those are removed, the total processing time becomes the first to the end. The best-case processing time becomes once you remove all the wait time edges, it’s a depth-first search, from the top to the bottom. After the wait times are removed, that’s your best-case processing time. The wasted queue time is basically the total minus the best-case processing time.
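One way to read this calculation is sketched below, under a simplifying assumption that a child step is enqueued the moment its parent finishes executing. The `Step` type and its fields are hypothetical; the real execution tree is richer than this.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    name: str
    exec_s: float          # time spent actually executing
    wait_s: float = 0.0    # time spent in the queue before executing
    children: list = field(default_factory=list)


def finish_time(step, include_waits=True, started_at=0.0):
    """Depth-first walk returning when the slowest branch under step ends."""
    wait = step.wait_s if include_waits else 0.0
    done = started_at + wait + step.exec_s
    return max(
        [done] + [finish_time(c, include_waits, done) for c in step.children]
    )


tree = Step("title", exec_s=1.0, children=[
    Step("encode_audio", exec_s=10.0, wait_s=2.0),
    Step("encode_video", exec_s=4.0, wait_s=5.0),
])

total = finish_time(tree)                           # with queue waits
best_case = finish_time(tree, include_waits=False)  # waits stripped
wasted_queue_time = total - best_case
```

In this toy tree the video branch waits five seconds in the queue, yet only two seconds count as wasted, because the audio branch determines the end time either way.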

I’ll talk to you about the wasted queue time now. If you look at this graph, the encode video, which is on the rightmost, can actually have a wait time because the audio is anyways going to take time. Encode video, we don’t have to push so much money into it, so many resources into it, for it to finish as and when the messages lands in the queue. These kinds of analytics helped us, the ROI analysis, where should I invest my money? Where should I put my compute into? How can the compute flow on? We also identified, now, if I want to make a data-based judgment on where I have to invest. We have limited resources. Limited resources on compute is one thing, but we also have limited resources on people, like less engineering resources. We have too many problems to solve. It means for the engineers to make a data-based decision, we want to understand the long poles.

In your whole big, ginormous traces, what is that long pole that is causing your execution to be delayed? That is how we have identified what those long poles are. Remove all your waiting edges, go from top to bottom in the depth-first search and get your long pole. That gives you, this is your long pole. If you want to improve anything for your system to be faster, it has to be done here. Improving audio is not going to help, or improving an encoding chunk is not going to help. It helps in compute, but if you want to tackle SLOs. That resulted in, by the end of the stream processing, we are capturing all the best-case actual and the wasted queue times. We are capturing the long poles, the total compute used. Every span of execution, we tag with the total CPUs that were assigned for that container, the memory that has been assigned, the network has been assigned, so we can compute all of them. We know how many errors have happened and how many of them have been retries. This goes directly into our reliability dashboard.
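The long pole search described here can be sketched as a depth-first search that ignores queue waits and keeps the slowest root-to-leaf path. The `Step` type is a hypothetical stand-in for a node in the execution tree.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    name: str
    exec_s: float  # execution time only; queue waits are already removed
    children: list = field(default_factory=list)


def long_pole(step):
    """Return (total_exec_seconds, path_names) of the slowest path."""
    best_time, best_path = 0.0, []
    for child in step.children:
        child_time, child_path = long_pole(child)
        if child_time > best_time:
            best_time, best_path = child_time, child_path
    return step.exec_s + best_time, [step.name] + best_path


tree = Step("title", 1.0, [
    Step("encode_audio", 3.0),
    Step("encode_video", 2.0, [
        Step("encode_chunk_a", 6.0),
        Step("encode_chunk_b", 4.0),
    ]),
])

pole_time, pole_path = long_pole(tree)
```

Speeding up any step that is not on `pole_path` saves compute but does not shorten the best-case time, which is the argument made in the talk.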

This is the metric which we basically use to talk to our users to say, did we meet our SLOs in terms of errors or did we meet SLOs in terms of our retries? This is always possible when we shifted our mindset from individual traces to streaming mindset. Now then we are able to build these beautiful dashboards. We could say, what are queue times? What are our execution times? How many CPUs did we actually spend? How much money did it cost? Now we were able to understand our bottlenecks, do data-based ROI analysis. These were all our wins. We were able to do aggregates to deep dives because we have pre-aggregated data. From there, because we also have individual spans data, we could do deep dives. We improved both our operational excellence and developer experience. This is like real-time insights. I was so happy after this.

Then the business came in. It’s great the engineers know about these things. Even the engineers came into this, actually. We got new sets of problems. We had engineering questions and then the business questions. What are the engineering questions that we are dealing with? We were dealing with, you are saying that everything in our system is equal, and saying, a request is taking anywhere between 5 minutes to 24 hours, or 7 days, but not all of them are equal. An action sequence is going to take longer to encode versus a sequence where someone is staring at a wall. Everything is different. We need all of this data to be filtered by the tags that I give you. We want the error rates, which are not just the platform captured. I have my own errors, but I’m being a nice citizen. I just don’t throw exceptions. I will actually handle it.

I want some way to capture my error rates. I want to capture the retries that are happening. What is the utilization of the resources? We are always talking about the compute that has been used, but then, what is happening within that? You have assigned 16 CPUs, what is the utilization that is happening with those 16 CPUs? I want to do a next generation of encodes. I really want to know the cost to encode. How can I extrapolate to answer this and provide business judgment on whether I should be doing this or not. Then business came in. The business comes in, I actually want to know the cost it took to encode a particular movie. If we are getting too many redeliveries for a movie and that too at the last minute, what are the delivery costs for it? Is some production house costing us more than the other?

In order to answer all these analytics, it’s just not trace analytics anymore. Inside these trace analytics, we want all of this to be hydrated by the metadata, both the business metadata and the user metadata, and then it has to be combined with the metrics. All of this together became the Cosmos analytics platform, which started as a single engineer trying to solve it, to a team of engineers who are solving the analytics problems of Cosmos.

As the name Cosmos suggests, the software scales like a cosmos. What does hydration mean? To hydrate anything with metadata, first we need to know what the metadata is. We introduced something called a high cardinality metrics client that can add metadata. It can also emit metrics. Any of your systems, whether it’s a workflow, an API, or a serverless function, gets this metrics client embedded. Our users can use it to add metadata. The metrics client pushes all of this metadata to a Kafka topic. Now we have two different sources. The metadata or metric Kafka topic comes with this data, and it comes with identification information. Any metric, any event that happens within the system always has this identification information: a trace ID, the span, the parent, a project ID, and a request ID.

Project ID is our term for how it is mapped to a request, but it basically has all of this present. It has metadata and a propagation technique. The propagation technique says whether the metadata that I’m emitting should go everywhere in the trace, only to my children, or only to my particular level of execution. Now we are getting hints from our users on how to treat the metadata and how it should be attached. Now we have different Kafka topics. We have the traces Kafka topic, which we already had. Then we have the metrics Kafka topic coming from the client. Then we also have a metadata Kafka topic. Our Flink job, which used to be a single processor building the trace trees, now has three different sources and has become this really big hydration job. In this job, we have multiple tasks. One is hydrate, then aggregate, then push to our sinks. This is what happens within our hydration job.
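A small sketch of how the three propagation choices might resolve to target spans. The enum names and the `parent_of` map are assumptions, and "children" is interpreted here as the emitting span plus all of its descendants, which may differ from the real semantics.

```python
from enum import Enum


class Propagation(Enum):
    TRACE = "trace"        # attach to every span in the trace
    CHILDREN = "children"  # attach to the emitter and its descendants
    SELF = "self"          # attach only to the emitting span


def targets(parent_of, emitter, propagation):
    """Resolve which spans receive metadata emitted on `emitter`.

    parent_of maps span_id -> parent span_id (None for the root).
    """
    if propagation is Propagation.SELF:
        return {emitter}
    if propagation is Propagation.TRACE:
        return set(parent_of)
    # CHILDREN: keep spans whose ancestor chain passes through the emitter.
    result = set()
    for span in parent_of:
        node = span
        while node is not None:
            if node == emitter:
                result.add(span)
                break
            node = parent_of[node]
    return result


parent_of = {"root": None, "video": "root", "chunk1": "video", "audio": "root"}
video_subtree = targets(parent_of, "video", Propagation.CHILDREN)
```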

What is happening in hydration? The metrics, the metadata, and the spans all come in and flow into our hydration task. In the hydration task, we’re building this as code, but the visual representation is this. For every request, we aggregate the spans, and for every span, which is the level of execution, all the metrics that belong to it. Then we’re also building a span-to-metadata mapping. For every piece of metadata, we also record which span it was added on and what its propagation technique is. Now we use both of these, the request tree and the metadata collection, to create these data sources. We create a collection of metric plus metadata. It combines the metrics and the metadata coming in, based on the propagation technique, appending our metadata to the metric.
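The final join in hydration can be pictured as follows, assuming propagation has already been resolved into a per-span metadata map. The record shapes and the helper names `hydrate` and `mean_by_tag` are hypothetical.

```python
from collections import defaultdict


def hydrate(metrics, metadata_by_span):
    """Attach each span's resolved metadata to its metrics as tags."""
    hydrated = []
    for metric in metrics:
        tags = dict(metadata_by_span.get(metric["span_id"], {}))
        hydrated.append({**metric, "tags": tags})
    return hydrated


def mean_by_tag(hydrated, metric_name, tag):
    """Average one metric, grouped by the value of one metadata tag."""
    buckets = defaultdict(list)
    for metric in hydrated:
        if metric["name"] == metric_name and tag in metric["tags"]:
            buckets[metric["tags"][tag]].append(metric["value"])
    return {key: sum(vals) / len(vals) for key, vals in buckets.items()}


metrics = [
    {"span_id": "s1", "name": "queue_wait_s", "value": 30.0},
    {"span_id": "s2", "name": "queue_wait_s", "value": 10.0},
    {"span_id": "s3", "name": "queue_wait_s", "value": 50.0},
]
metadata_by_span = {
    "s1": {"resolution": "1080p"},
    "s2": {"resolution": "1080p"},
    "s3": {"resolution": "2160p"},
}

hydrated = hydrate(metrics, metadata_by_span)
wait_by_resolution = mean_by_tag(hydrated, "queue_wait_s", "resolution")
```

Once metrics carry tags like this, questions such as "how long does a 1080p encode sit in the queue" become a simple group-by.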

Then we’re also building the execution tree. The execution tree is like what we have seen before. This is our execution tree. Both of them are getting built here. Now this data is then used to calculate all of the things that we said before. Now, this data is actually hydrated with the metadata that can be used with business insights. What can we do after this? We can talk performance in terms of the attached metadata. Now I can say, for a bitrate XYZ, it gets to the complexity analysis. How long a video encode of 1080p is taking, and how much of it is just sitting in queue? I can also talk in terms of, for a particular recipe, recipe is like it says how to do a video encode, how to do an audio encode, how to do text, what kind of performance we are talking about. Now we are talking about connected data and distributed world.

The big challenge is, we build these big distributed systems where everything is disconnected, talking through the network. Then we want high alignment. This is a loosely coupled, highly aligned. The loosely coupled happens really great with distributed systems. In order to become highly aligned, it only happens when there is a good observability fabric to it. That is what we are building here. That’s a connected data in a distributed world. Now we have more metrics than just what we are deriving from trace. Overall, trace in itself wasn’t sufficient for us. We needed trace. We needed it to be hydrated with metrics. We needed additional metrics, so we basically built this behemoth of an analytics system.

All the distributed world challenges, like the custom metadata tagging with no universal meaning to it, we still have that problem. Now we have one big system where we are getting insights about our encoding things, but I don’t know what is happening from pitch to play. Pitch to play is when someone pitches to you and it shows on Netflix streaming. So many more things are happening than just encoding. We do not get the insights of it still, because between pitch to play, there are a multitude of systems, each having their own islands of data. We are still not connecting it. That’s still a concern. Metrics make sense only within the team boundaries, because they’re attached with their own metadata. We are still getting an encoding side view, but we are still losing the business view. That is where we introduce taxonomy. It means it is no more a bag of metadata. It is no more a map of string to object or a map of string to string. We needed a registry. It means that every key that you emit has a purpose, and it has the same purpose everywhere in our systems. We are reusing those keys. We are talking domain as context, not team boundaries as context. That is where we are moving beyond the team boundaries.
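To illustrate the difference between a free-form map and a registry, here is a sketch of a key registry with validation. The keys, descriptions, and the `validate` helper are invented for illustration and are not the actual taxonomy.

```python
# Hypothetical registry: every key has one agreed meaning and type.
REGISTRY = {
    "codec": {"type": str, "description": "Video or audio codec name"},
    "resolution_height": {"type": int, "description": "Frame height in pixels"},
    "recipe_id": {"type": str, "description": "Identifier of the encode recipe"},
}


def validate(metadata, registry=REGISTRY):
    """Return a list of problems; an empty list means the metadata conforms."""
    errors = []
    for key, value in metadata.items():
        entry = registry.get(key)
        if entry is None:
            errors.append(f"unregistered key: {key}")
        elif not isinstance(value, entry["type"]):
            expected = entry["type"].__name__
            errors.append(f"wrong type for {key}: expected {expected}")
    return errors


ok = validate({"codec": "av1", "resolution_height": 1080})
unknown = validate({"codec": "av1", "my_team_flag": "yes"})
mistyped = validate({"resolution_height": "1080"})
```

Rejecting unregistered keys at emit time is what keeps the same key meaning the same thing across teams.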

Basically, now, the user can actually create the metadata. We use Avro schemas to have well-defined clients, and those are the clients that are used to emit and update metrics. Metrics and metadata that were freeform have now become more defined. Our users, who are always happy users, go to the key registry, use the client, and emit metadata to Kafka. We still use the hydration job, and then we build all of these. Now we have this amazing business view. This is the time everyone is happy, actually.

Wins

Then as an engineer, I felt like, no, we could still do more. Connected data is still an ongoing problem we’re trying to solve. So far, in this whole big journey of moving from a single monolithic architecture to a highly scalable distributed architecture, with all its observability challenges, what did we win? We are now able to do ROI-based analysis. We can figure out where we can use caches so that we don’t encode the same thing again and again. Caching within this kind of workflow architecture generally does not exist in the industry, as far as I know, and we were able to do it. We were able to cache our function responses, the serverless function responses. We were able to cache parts of our workflow execution. All of this happened without our users reinventing the wheel, because the platform built the caching infrastructure. Then we’re talking about cost efficiency. The efficiency numbers have been removed for legal reasons, but they cover use cases from research to ad hoc. Where there are latency-sensitive needs, we were able to accept lower efficiency because we want high accuracy in hitting the numbers. In the case of research, we wanted to be as efficient as possible. We’re able to talk about cost in terms of resourcefulness.
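The function-response caching mentioned above can be illustrated with a small sketch. It assumes, hypothetically, that a function's response is fully determined by its name, its version, and its JSON-serializable inputs; `ResponseCache` and `inspect_asset` are made-up names, and the talk does not describe how the real platform builds its cache keys.

```python
import hashlib
import json


class ResponseCache:
    """In-memory stand-in for a platform-level function response cache."""

    def __init__(self):
        self._store = {}
        self.hits = 0
        self.misses = 0

    def _key(self, function_name, version, inputs):
        # Serialize with sorted keys so equal inputs always hash the same way.
        payload = json.dumps(
            {"fn": function_name, "version": version, "inputs": inputs},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def call(self, function_name, version, inputs, compute):
        key = self._key(function_name, version, inputs)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        # Cache miss: run the expensive function once and remember the result.
        self.misses += 1
        result = compute(**inputs)
        self._store[key] = result
        return result


def inspect_asset(asset_id):
    # Placeholder for an expensive media inspection (hypothetical).
    return {"asset_id": asset_id, "valid": True}


cache = ResponseCache()
cache.call("inspect", "v1", {"asset_id": "a-1"}, inspect_asset)  # computed
cache.call("inspect", "v1", {"asset_id": "a-1"}, inspect_asset)  # served from cache
```

Including the version in the key means a new release of the function does not reuse stale responses, which is one plausible way to keep such a cache safe.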

The Future

Naveen Mareddy: With this, there are still more challenges. The system again got more complex, and more layers could get added. It’s always an iterative process where we keep improving. Looking forward, there are a few things, starting with the connected datasets that Sujana mentioned, which we have begun working on.

First, we’re starting within our team boundaries, but pitch to play is a much bigger vision: connecting everything from the early stages of movie making all the way until a title is on the product is an ambitious goal. We’re starting closest to where encoding happens and then radiating out. Also, to encourage reuse of what is already out there and to limit reinventing these metadata tags, we’re proactively putting out a GraphQL API facade, so that other systems in the movie-making process can discover the tags we have in our taxonomy service. Then the taxonomy work, which Sujana just mentioned, is very early: adopting it throughout the system and then reaching out to the upstream and downstream systems is also in progress. Also, there are performance enhancements in various processes in this observability infrastructure, where we found that we can tune and reuse some of the pre-aggregated data. That is also top of mind for us.

Key Takeaways

The key takeaway, if you’re tackling such a challenge, is that just getting metrics out there will not work. You will soon hit scalability challenges in your observability infrastructure itself, so think about that. Real-time insights mean that as you keep improving, users will always ask you for more. Just have that in mind when you’re building. Also, data aggregation and tagging: we find tagging to be super helpful, and as we pull the thread more and more, we’re finding even more value in it.

Taxonomy and decorating data in a consistent way are giving us a lot of benefits that we didn’t even think of when we started this work. With all of this, think about something much bigger than just enabling exploration, debugging, or a logs view for your users. Think about how you can enable the business to do proactive decision-making. For example, if there’s a new codec, can we cache the whole catalog of Netflix, is it possible or not? How do we do that what-if scenario analysis? How can we use this observability data to answer such questions?

Questions and Answers

Participant 1: You described this observability infrastructure as super complex. How do you monitor that this complex system actually works? You also talked about performance improvements, so I believe you have something built on top of it that gives you insights like, ok, there is scope for improvement here and there.

Sujana Sooreddy: How do we monitor our own observability system? Netflix has a system called Atlas, which lets us monitor other systems with low-cardinality data. To monitor the observability platform itself, we didn’t need high-cardinality infrastructure. It goes with the saying, don’t use your own system to monitor your own system. We use Atlas to monitor our system.

Participant 2: You seem to be gathering quite a large amount of span data, enough that you have to use stream processing to pre-aggregate it. How do you do sampling? Do you keep everything? How long do you retain it for? It sounds like you’re generating more span data than video data. Just curious, how do you manage sampling and the persistence of this span data?

Sujana Sooreddy: In our encoding use case, we do not sample, because our compute is far more expensive than the observability data itself. We do not sample because keeping everything provides more value than not. Netflix in general, for anything within the API infrastructure, does a great deal of sampling.

Participant 3: When you’re dealing with such a large amount of data, one thing that does creep up is noise. That last point you made about proactive decision-making, have you all come across that? Has that been a consideration to provide any guardrails, to not have noise in the system?

Naveen Mareddy: I think what we find is that noise itself is perceived. What counts as noise is very relative. What we’re finding as platform operators is that it works better to give users affordances: let them decorate their data, give them infrastructure such as the high-cardinality metrics client, and make it easy to do the right things. Have dashboards so that users can mine this data. Have a quick turnaround time, so that if they onboard a new tag, the aggregation and rollup of the data is available in dashboards quickly. We’re stopping there, and then giving it to users. Caching is a very good example, where they ask, in this whole thing, why are we doing this inspection 60 times? Are there 59 times we don’t have to? That came out only from this work we did. That’s one thing. The guardrails are also built around this tagged data.
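The "inspection 60 times" observation is the kind of question a simple rollup over tagged spans can answer. The sketch below is illustrative only: the span shape (a dict with a `tags` map) and the tag names `operation` and `title_id` are assumptions, not the actual data model from the talk.

```python
from collections import Counter


def find_repeated_work(spans, tag_keys, threshold=1):
    """Group spans by the given tag keys and return groups seen more than `threshold` times."""
    counts = Counter(
        tuple(span["tags"].get(key) for key in tag_keys) for span in spans
    )
    return {group: n for group, n in counts.items() if n > threshold}


# Hypothetical spans: the same inspection repeated 60 times for one title.
spans = [{"tags": {"operation": "inspection", "title_id": "t-1"}} for _ in range(60)]
spans.append({"tags": {"operation": "encode", "title_id": "t-1"}})

repeated = find_repeated_work(spans, ["operation", "title_id"])
```

Here `repeated` maps `("inspection", "t-1")` to 60, flagging the inspection as a candidate for caching, while the single encode span is not reported.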
