I’ve seen configuration managed on paper, with change notices stacked on drawings like a to-do pile. I’ve seen teams lose the ability to rebuild the same product in another country because they couldn’t produce a complete BOM. The problem wasn’t “conservatism.” The problem was a broken evidence supply chain. Certification-ready digital threads fix that.

Here’s what changed when we rebuilt that supply chain:

- Audit pack assembly: 2 months → 2 weeks

- Evidence control: Google Drive (no traceability, weak access control) → controlled access with end-to-end traceability

This wasn’t “we worked harder.” It was architectural.

Paper vs. digital is the wrong fight

In regulated deep-tech (nuclear, aerospace, defense, medical, advanced robotics), people argue about paper vs. 3D models, spreadsheets vs. metadata reports, PDFs vs. PLM, “old-school authorities” vs. modern tools.

That argument misses the point.

Regulators don’t want paper. They want proof with properties that survive scrutiny:

- tied to a baseline (a frozen configuration)

- versioned and reproducible

- traceable end-to-end (requirements → design → verification → approvals)

- protected by access control and accountability

- supported by change impact analysis (so you can prove what changed and what didn’t)

Paper often provides these properties by accident. Digital systems must provide them by design.

Toolchain trust: the question behind “what tools do you use?”

When auditors ask about your tools, they’re not shopping for brands. They’re drawing your trust boundary.

They want to know:

- Which systems are evidence-grade (system of record)?

- Which are supporting (convenient, but not trusted as proof)?

- How do you ensure outputs are reproducible (versions, baselines, controlled exports)?

- Who can change what — and where is the audit trail?

If you can’t answer those cleanly, you’ll be pushed back to PDFs. And you’ll deserve it.

Two industries, same root cause: broken proof supply chains

I’ve built and defended evidence chains across two very different contexts:

- Nuclear power plant projects: configuration management across multiple programmes, with customers in different countries and customer requirements coming from different sources (in one case, ~27,000 requirements).

- Hydrogen-electric aircraft powertrain: a certification route developed with certification bodies, where proven proof patterns were non-negotiable.

Different domains. Same failure mode:

engineering work existed, but evidence was fragmented, inconsistent, and slow to assemble.

Certification is not a document contest. It’s an evidence supply chain.

The moment it became obvious

In an earlier environment, the “audit pack” was paper-based. Changes were happening in the build environment faster than documentation could keep up. On some drawings, there were piles of 4–5 unimplemented change notices.

A TechOps lead summed it up: “If I’m asked to do this again in the same style, I’m quitting.”

When the same product needed to be assembled outside the original site, the organization struggled, not because engineering was wrong, but because it couldn’t reliably produce a complete, trustworthy BOM across stakeholders.

That’s the whole problem in one line:

If you can’t reproduce proof, you don’t have control.

Digitalization is step 3, not step 1

Most “digital transformations” fail because they start with tools.

The sequence that works is boring:

- Establish the process (what must be true, who approves what, what “done” means)

- Optimize the process (remove invented requirements and legacy rituals)

- Digitize (encode the optimized process into enforceable workflows)

Skip the first two steps and you get expensive chaos, now with dashboards.

The questions regulators keep asking (and what they really mean)

Across industries, certification bodies come back to the same three questions:

1) “Which tools do you use, and why should we trust their outputs?”

Translation: show the trust boundary, reproducibility, and audit trails.

2) “Do documented procedures match what’s implemented in the system? How is change managed?”

Translation: does your process live in slides, or in enforced workflows with logs?

3) “Show traceability live in the system.”

Translation: not a PDF export. A real trace from requirement to verified evidence, with access control.

If you can answer these on demand, audits stop being a recurring emergency.

The missing architecture: the Certification Interface Layer

Engineering teams and regulators speak different languages. If you don’t build a translation layer, you translate manually during audit prep — at the worst possible time.

I call this layer the Certification Interface Layer:

Map regulator questions → system objects + proof paths, not documents.

Figure: Proof pipeline

Once this exists, “show me” becomes a query and a snapshot — not a two-month scramble.

What changed for us (measurable outcomes)

Over a transition of nearly two years (Jan 2024 → Sep 2025), we rebuilt the evidence pipeline end-to-end. This work supported scaling our compliance posture and helped underpin a DOA journey (organizational capability recognised under Part 21 Subpart J frameworks). (easa.europa.eu)

Results:

- Audit pack assembly: 2 months → 2 weeks

- Evidence control: drive chaos → controlled access + full traceability

- Fragmentation reduction: 4 PDM systems → 1 PLM with a standardized approach

- Change control maturity: manual impact analysis → automated impact analysis

- Adoption scale: ~30 users → ~150 users

No magic. Just proof as a system.

The playbook: 7 steps to certification-ready digital threads

Step 1) Define Units of Compliance

Stop treating all requirements equally. Define what you certify: per requirement? per function? per subsystem?

Output: compliance-unit catalog + definition of “proven.”

Step 2) Map Regulator Questions to Data Objects

Build a one-page matrix: question → object → source of truth → owner → proof rule

Output: Authority Q → Object map.
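
The matrix is small enough to keep as plain data before any tool is involved. A minimal sketch in Python, assuming one row per regulator question; the class name, the example owners and the `incomplete_rows` helper are hypothetical illustrations, not part of any specific PLM:

```python
from dataclasses import astuple, dataclass


@dataclass(frozen=True)
class AuthorityQuestionMapping:
    question: str         # what the authority asks
    data_object: str      # the system object that answers it
    source_of_truth: str  # the system of record that holds the object
    owner: str            # the accountable role
    proof_rule: str       # what must be true for the answer to count as proven


# Example rows built from the three recurring regulator questions.
# Objects, owners and proof rules are illustrative assumptions.
AUTHORITY_MAP = [
    AuthorityQuestionMapping(
        question="Which tools do you use, and why should we trust their outputs?",
        data_object="tool register",
        source_of_truth="PLM",
        owner="tool owner",
        proof_rule="each evidence-grade tool has a version and an audit trail",
    ),
    AuthorityQuestionMapping(
        question="Do documented procedures match what is implemented?",
        data_object="workflow definition",
        source_of_truth="PLM",
        owner="process owner",
        proof_rule="each procedure maps to an enforced workflow with logs",
    ),
    AuthorityQuestionMapping(
        question="Show traceability live in the system.",
        data_object="requirement-to-verification trace",
        source_of_truth="PLM",
        owner="configuration manager",
        proof_rule="each requirement links to an approved verification result",
    ),
]


def incomplete_rows(rows):
    """Return the questions whose mapping has at least one empty field."""
    return [row.question for row in rows
            if any(not value.strip() for value in astuple(row))]
```

Running `incomplete_rows(AUTHORITY_MAP)` before each review is a cheap way to notice a question that has no owner or no source of truth yet.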

Step 3) Build the Evidence Graph (not a document tree)

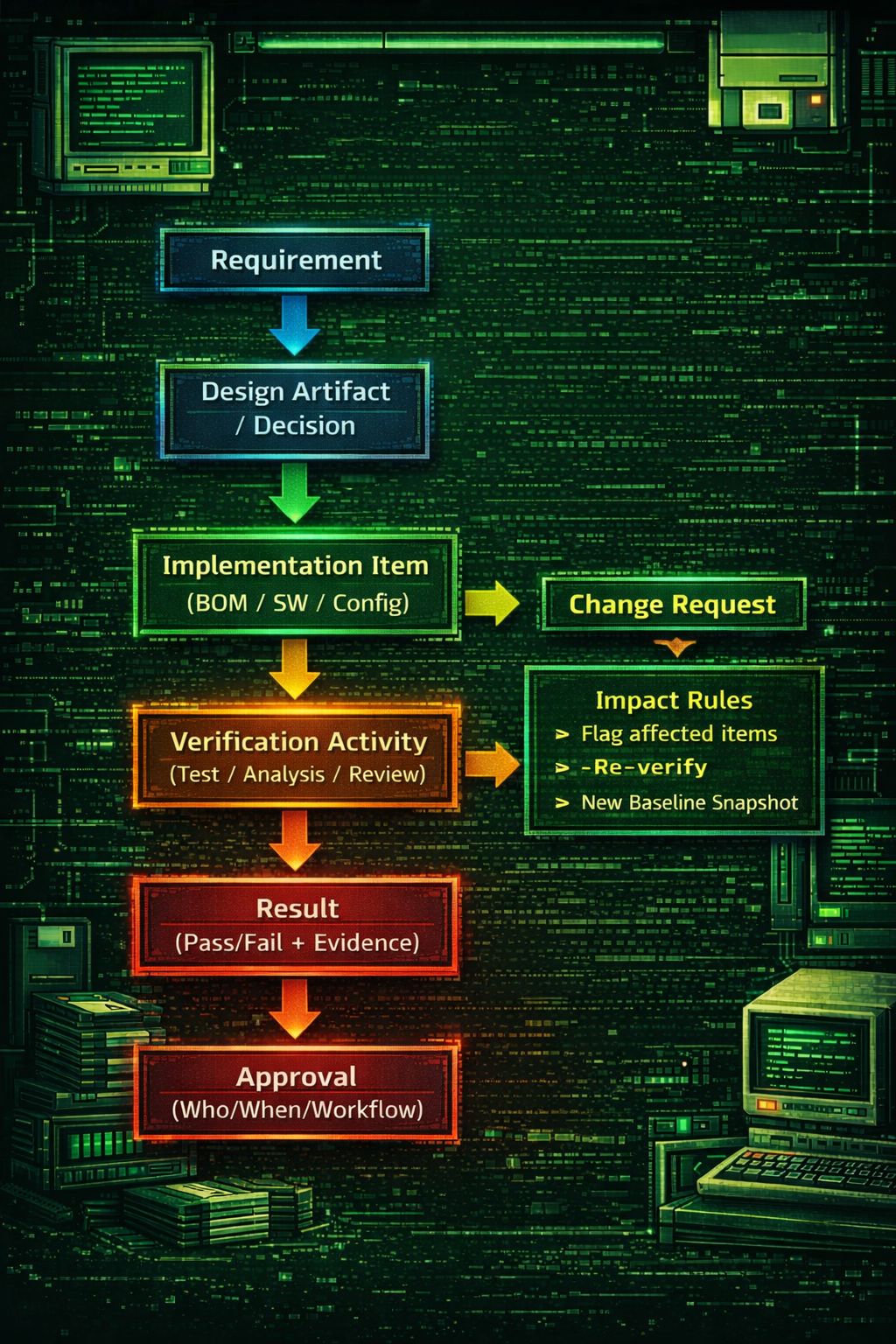

Documents are containers. Evidence is a graph: Requirement → Design → Implementation → Verification → Result → Approval

Make key links enforced. Optional links disappear under pressure.

Output: graph rules + completeness checks.
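
A completeness check over that chain can be sketched in a few lines. This is an illustration under simple assumptions: every evidence object has exactly one stage, links only count when they point to the immediate next stage, and the IDs (`REQ-1`, `DES-1`, and so on) are made up for the example:

```python
# The required chain from the text: each stage must link to the next one.
CHAIN = ["requirement", "design", "implementation",
         "verification", "result", "approval"]


def missing_stages(requirement_id, stage_of, links):
    """Follow links stage by stage from one requirement.

    stage_of: object id -> stage name
    links:    object id -> list of linked object ids
    Returns the stages that cannot be reached (empty list = complete chain).
    """
    current = {requirement_id}
    for position, next_stage in enumerate(CHAIN[1:], start=1):
        current = {target
                   for source in current
                   for target in links.get(source, ())
                   if stage_of[target] == next_stage}
        if not current:
            # Nothing at this stage, so everything after it is unproven too.
            return CHAIN[position:]
    return []


STAGE_OF = {
    "REQ-1": "requirement", "DES-1": "design", "IMP-1": "implementation",
    "VER-1": "verification", "RES-1": "result", "APP-1": "approval",
    "REQ-2": "requirement", "DES-2": "design", "IMP-2": "implementation",
}
LINKS = {
    "REQ-1": ["DES-1"], "DES-1": ["IMP-1"], "IMP-1": ["VER-1"],
    "VER-1": ["RES-1"], "RES-1": ["APP-1"],
    "REQ-2": ["DES-2"], "DES-2": ["IMP-2"],  # no verification linked yet
}

print(missing_stages("REQ-1", STAGE_OF, LINKS))  # complete chain
print(missing_stages("REQ-2", STAGE_OF, LINKS))  # stops after implementation
```

An enforced link is then just a rule that blocks release of any requirement for which this check returns a non-empty list.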

Step 4) Baselines and Snapshots

Authorities certify a baseline, not your “current database.”

You need repeatable baselining:

- freeze configuration

- capture linked evidence objects

- preserve versions, approvals, access control

Output: baseline freeze procedure + snapshot bundle definition.
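
One way to make a snapshot verifiable is to hash a canonical serialization of everything it freezes. The sketch below assumes each evidence object can be reduced to a small dictionary with `id`, `version` and `approved_by` fields; those field names and the `snapshot_baseline` function are assumptions for illustration:

```python
import hashlib
import json


def snapshot_baseline(baseline_id, evidence_items):
    """Freeze a baseline into a snapshot with a reproducible digest.

    evidence_items: iterable of dicts, each with at least an "id" key.
    The same content always yields the same sha256, whatever the input order.
    """
    # Copy and sort so later edits or ordering differences cannot leak in.
    frozen_items = sorted((dict(item) for item in evidence_items),
                          key=lambda item: item["id"])
    canonical = json.dumps({"baseline": baseline_id, "items": frozen_items},
                           sort_keys=True, separators=(",", ":"))
    digest = hashlib.sha256(canonical.encode("utf-8")).hexdigest()
    return {"baseline": baseline_id, "items": frozen_items, "sha256": digest}


ITEMS = [
    {"id": "VER-4", "version": "B", "approved_by": "j.smith"},
    {"id": "DES-3", "version": "C", "approved_by": "a.jones"},
]
snapshot = snapshot_baseline("BL-2025-01", ITEMS)
```

If an auditor later asks whether the pack matches the certified baseline, recomputing the digest from the stored objects answers the question without relying on anyone's memory.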

Step 5) Change Impact Analysis

Change management isn’t “we have change requests.” It’s:

what becomes invalid and what must be re-verified when something changes?

Define computable impact rules by change type:

- requirement change → which verification artifacts invalidate?

- BOM/part change → what downstream artifacts must update?

- software change → what verification is affected?

Output: impact rules + automated flags.
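
The simplest computable impact rule is "everything downstream of the changed object must be re-checked". A minimal sketch using a breadth-first walk over downstream links; the link table and IDs are hypothetical, and real rules would usually filter by change type as the list above suggests:

```python
from collections import deque


def impacted(changed_id, downstream):
    """Return every object reachable downstream of a changed object.

    downstream: object id -> list of ids that depend on it.
    The result is sorted so the flag list is stable between runs.
    """
    seen = {changed_id}
    queue = deque([changed_id])
    while queue:
        node = queue.popleft()
        for child in downstream.get(node, ()):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    seen.discard(changed_id)  # the changed object itself is not "impacted"
    return sorted(seen)


DOWNSTREAM = {
    "REQ-7": ["DES-3"],
    "DES-3": ["IMP-9", "VER-4"],
    "VER-4": ["RES-4"],
}

print(impacted("REQ-7", DOWNSTREAM))
print(impacted("VER-4", DOWNSTREAM))
```

The same walk also gives you the other half of the proof: anything not in the returned list is, by the recorded links, unaffected by the change.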

Step 6) Audit Pack Assembly Pipeline (treat it like a release)

If audit packs are assembled manually, they will be wrong.

Treat assembly like a release pipeline:

- select baseline → generate pack → review → sign → export

- every pack has an ID

- every pack is reproducible

Output: assembly pipeline + definition of done.
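
The release analogy can be made concrete with a pack ID derived from its inputs and a fixed stage order that cannot be skipped. This is a sketch, assuming the pack is fully determined by a baseline digest and a template version; the `AuditPack` class and the stage names are illustrative:

```python
import hashlib

# Stage order from the text: select baseline, generate, review, sign, export.
STAGES = ["selected", "generated", "reviewed", "signed", "exported"]


class AuditPack:
    def __init__(self, baseline_digest, template_version):
        # Same inputs always give the same ID, so a pack can be regenerated
        # and compared against the one that was delivered.
        seed = f"{baseline_digest}:{template_version}".encode("utf-8")
        self.pack_id = "PACK-" + hashlib.sha256(seed).hexdigest()[:12]
        self.stage = STAGES[0]
        self.log = [(STAGES[0], "system")]

    def advance(self, to_stage, actor):
        """Move to the next stage only; skipping or going back is rejected."""
        position = STAGES.index(self.stage)
        if position + 1 >= len(STAGES) or STAGES[position + 1] != to_stage:
            raise ValueError(f"cannot go from {self.stage} to {to_stage}")
        self.stage = to_stage
        self.log.append((to_stage, actor))


pack = AuditPack("3f9a", "template-v2")
pack.advance("generated", "pipeline")
pack.advance("reviewed", "cm-lead")
```

The log of `(stage, actor)` pairs is a small example of a definition of done that can be checked rather than asserted.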

Step 7) Governance and Tool Boundaries

Most “digital threads” fail here: no one defines decision rights.

You need explicit answers to:

- which system is the source of truth for what?

- who can change baselines?

- what counts as approval/signature?

- what is evidence-grade vs supporting?

Output: RACI + decision rights + signature model.
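
Decision rights are easier to enforce when they are written down as data rather than as tribal knowledge. A small sketch, assuming two systems and one role allowed to change baselines; every system name, object type and role here is a placeholder:

```python
# Which system is evidence-grade, and what each one is the source of truth for.
SYSTEMS = {
    "PLM": {"grade": "evidence",
            "source_of_truth_for": {"bom", "baseline", "approval"}},
    "shared-drive": {"grade": "supporting",
                     "source_of_truth_for": set()},
}

# Roles allowed to change a baseline (assumed; set by your governance model).
BASELINE_CHANGE_ROLES = {"configuration-manager"}


def source_of_truth(object_type):
    """Return the single system of record for an object type, or fail loudly."""
    owners = [name for name, system in SYSTEMS.items()
              if object_type in system["source_of_truth_for"]]
    if len(owners) != 1:
        raise LookupError(
            f"{object_type!r} has {len(owners)} sources of truth, expected 1")
    return owners[0]


def may_cite_as_proof(system_name):
    """Only evidence-grade systems may back a compliance claim."""
    return SYSTEMS[system_name]["grade"] == "evidence"


def may_change_baseline(role):
    return role in BASELINE_CHANGE_ROLES
```

Failing loudly when an object type has zero or two sources of truth is deliberate: both cases are governance gaps that should surface before an audit, not during one.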

A 5-minute live traceability demo script

If you can’t demo this live, you don’t have a digital thread.

- Open one compliance unit

- Show linked design artifact + rationale

- Show linked implementation item (BOM/config/software)

- Show linked verification activity and results

- Show approvals (who/when/under workflow)

- Create a change → show impact analysis outputs

- Generate a baseline snapshot and export the audit pack

Auditors don’t need your entire tool landscape. They need reproducible proof.

Figure: Traceability

Why this applies beyond aerospace and nuclear (including humanoid robots and MedTech)

Swap “airworthiness” for “safety case” and the structure stays the same:

- hazards ↔ mitigations ↔ requirements ↔ tests ↔ approvals

- baselines + access control

- change impact rules

- reproducible evidence exports

- toolchain trust boundaries

Different tech. Same proof problem.

The decisive role: the translator

One more uncomfortable truth: the hardest part isn’t integration — it’s requirements hygiene.

We didn’t just ask teams how they work. We asked why. Most “requirements” turned out to be habits.

Using first principles, we reduced requirements to:

- standards and obligations that actually govern the function

- core requirements derived from those sources

- removal of invented constraints, with user alignment

Only after that did digitalization become scalable.

What you can do in 30 days

- Create the Authority Q → Object map (one page, no tools required)

- Define compliance units and traceability completeness criteria

- Implement baseline snapshots for one subsystem

- Run the 5-minute demo weekly internally

- Then automate audit pack assembly