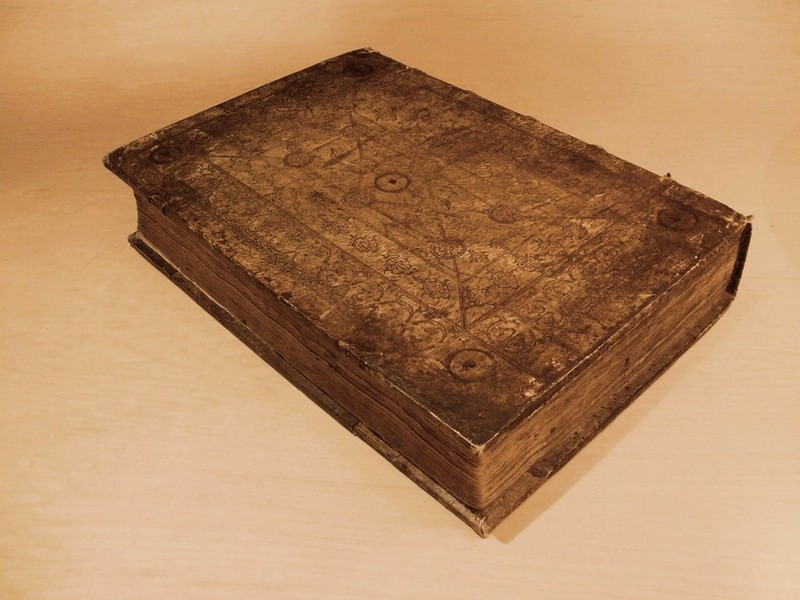

Google LLC’s Gemini 3.0 Pro large language model has delivered a notable advance in multimodal reasoning by helping decode a long-unexplained handwritten annotation in a 500-year-old copy of the Nuremberg Chronicle.

The Nuremberg Chronicle, printed in 1493, is considered one of the most important illustrated books of the early modern period. A particular surviving leaf contains four small circular annotations, known as roundels, filled with abbreviated Latin text and Roman numerals whose meaning has stumped scholars for centuries. The printed text itself is well understood, but the marginal notes had remained unresolved.

According to the GDELT Project, researchers fed high-resolution images of the Chronicle into Gemini 3.0 Pro and asked the artificial intelligence model to interpret both the printed material and the handwritten roundels. The exercise wasn’t one of simple character recognition but required Gemini to reason across multiple layers of context: paleography, chronology and theological history.

Gemini was able to identify that the annotations in the Nuremberg Chronicle were not random markings or decorative flourishes but calculations related to competing biblical chronologies.

The AI determined that whoever made the annotations was attempting to work out the birth year of Abraham by reconciling dates from the Septuagint and Hebrew Bible traditions, which were expressed using different “Anno Mundi” systems and a pre-Christian timeline.

The model successfully parsed abbreviated Latin terms, interpreted Roman numerals and linked them back to other passages within the Nuremberg Chronicle.
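To give a sense of the simplest of those steps, here is a minimal Python sketch of Roman numeral interpretation. It is an illustration only, not a description of how Gemini works internally, and the function name `roman_to_int` is hypothetical. It assumes the lenient conventions often seen in handwritten notes: lower-case letters, "j" written in place of "i", and additive forms such as "iiii".

```python
# Illustrative sketch only: a lenient Roman numeral reader.
# The name roman_to_int and the leniency rules are assumptions for this example.
ROMAN_VALUES = {"i": 1, "v": 5, "x": 10, "l": 50, "c": 100, "d": 500, "m": 1000}


def roman_to_int(numeral: str) -> int:
    """Convert a Roman numeral to an integer.

    Accepts upper or lower case and treats "j" as "i" (for example "xiij").
    Additive forms such as "iiii" work because each value is added unless a
    smaller value stands directly before a larger one, in which case it is
    subtracted.
    """
    text = numeral.strip().lower().replace("j", "i")
    if not text or any(ch not in ROMAN_VALUES for ch in text):
        raise ValueError(f"not a Roman numeral: {numeral!r}")

    total = 0
    for index, ch in enumerate(text):
        value = ROMAN_VALUES[ch]
        # Look one character ahead; the last character has nothing after it.
        next_value = ROMAN_VALUES[text[index + 1]] if index + 1 < len(text) else 0
        total += -value if value < next_value else value
    return total


if __name__ == "__main__":
    # 1493 is the Chronicle's printing year, used here only as a familiar input.
    print(roman_to_int("MCDXCIII"))
```

Reading the numerals is the easy part. The harder work described in the article is deciding which abbreviation and which chronology each number belongs to, and a lookup table like this one does not address that.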

Gemini did make some minor numerical errors, misreading a few values by small margins. However, the overall interpretation was internally consistent and aligned with known medieval approaches to biblical chronology.

Besides solving a mystery that has been centuries in the making, the discovery is notable because Gemini was not simply transcribing text or guessing at meaning. Instead, the model produced a structured explanation for why the annotations exist and how they relate to the surrounding content, something that previously required deep domain expertise and lacked consensus.

The result highlights how multimodal AI models are beginning to move beyond pattern recognition into applied reasoning tasks that combine vision, language and historical knowledge.

“It is incredible to think that LMM visual understanding has advanced to the point that Gemini 3 Pro could read 500-year-old handwritten abbreviated shorthand marginalia, go back and read the entire printed page and use the contents of the page to work through and disambiguate the meaning of the shorthand, then put all of that information together to come up with a final understanding that fit all of the puzzle pieces, all without any human assistance of any kind,” the GDELT Project writes in a blog post.

The outcome arguably points to an interesting and growing role for AI in areas such as digital humanities, archival research and historical analysis, where vast quantities of visual and textual material remain relatively unexplored.

Photo: Smithsonian Institute/Flickr
