Table Of Links

Abstract

Executive summary

Acknowledgments

1. Introduction and Motivation

- Relevant literature

- Limitations

2. Understanding the AI Supply Chain

- Background history

- Inputs necessary for development of frontier AI models

- Steps of the supply chain

3. Overview of the integration landscape

- Working definitions

- Integration in the AI supply chain

4. Antitrust in the AI supply chain

- Lithography and semiconductors

- Cloud and AI

- Policy: sanctions, tensions, and subsidies

5. Potential drivers

- Synergies

- Strategically harden competition

- Governmental action or industry reaction

- Other reasons

6. Closing remarks and open questions

- Selected Research Questions

References

3. Overview of the integration landscape

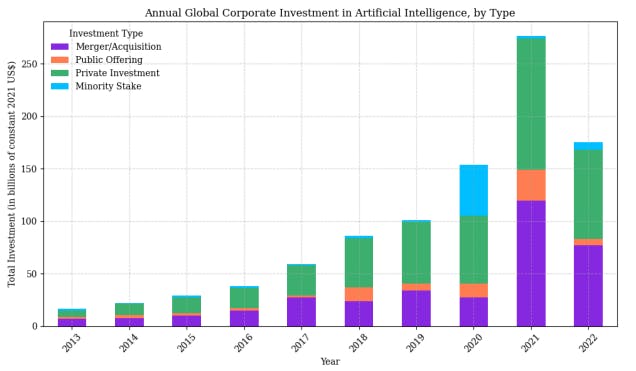

We cover mergers and acquisitions worth at least USD 100 million, as well as smaller deals that proved especially relevant. We also cover strategic partnerships, joint ventures, investments, divestments, and merger and acquisition talks that did not succeed. Section 4 notes relevant antitrust litigation, and Section 5 offers a preliminary discussion of what may be driving this integration.

3.1 Working definitions

For this project, we have made preliminary efforts to identify the relevant product markets, aiming to be consistent with the relevant guidelines in the US and the EU. Because these markets are global, we do not focus on defining relevant geographic markets, although geography may matter in some cases.

In each market, we are going to separate the companies into “frontier” and “non-frontier but relevant”. This distinction is useful in two ways: first, it highlights how contestable each of these markets is; second, it may be useful for identifying potential instances of vertically differentiated products.

For tractability, we do not cover companies worth less than USD 1 billion as of October 2023 or companies based mainly in China. As noted by, e.g., Jamison (2014), defining relevant markets is a significant, multidisciplinary challenge, especially in dynamic industries with differentiated products; the definitions below are therefore provisional.

3.1.1 AI Labs

==Tentative relevant product market definition:== Large Language Models (LLMs) with capabilities including, but not limited to, text generation, text summarization, and code creation.

These models potentially constitute their own relevant product market, since these capabilities are not easily substituted. We consider a company frontier in this market if its best-performing model is comparable to models ranging from GPT-3.5 to GPT-4, both in capabilities and in the quantity of FLOPs used for training. Conversely, we consider a company relevant but not frontier if its best models perform at a level between GPT-3 and GPT-3.5.

Frontier models include OpenAI’s GPT-4, Anthropic’s Claude 3, Meta’s LLaMA 3, and Google’s Gemini. We also include Inflection’s Pi as frontier. Although its capabilities are limited by design, since it is intended to be a more restricted personal companion, its underlying model (Inflection-2) is arguably as powerful as the models above (Inflection AI, 2023). This highlights how product differentiation may blur the boundaries of relevant markets, especially for foundation models, which by definition can be used in a wide range of downstream tasks.

Both technical and market reports support these categorizations. For instance, in a press release, Inflection AI (2023) recognizes PaLM 540B (which underlies Google’s Bard), GPT-3.5, GPT-4, and LLaMA as its competitors; Mollick’s (2023) ‘opinionated guide’ to using AI in workflows treats GPT-4, Claude, and Bard as rough substitutes; and Zhao et al. (2023) point out that GPT-4 and Claude 2 perform similarly as general task solvers in benchmark tests, and that LLaMA 2 is the best-performing open-source model tested. Chiang et al. (2023) also ranked LLMs in a leaderboard built from crowdsourced pairwise comparisons of models.

Non-frontier companies include Cohere, which focuses on integrating LLMs into business processes, and HuggingFace, whose HuggingChat draws on several open-source models. We acknowledge the existence of other classes of models, such as sentence embedding models and multimodal models, as well as technologies that increase the utility of LLMs, such as Retrieval-Augmented Generation (RAG). Fine-tuning LLMs for specific downstream tasks could also constitute a significant market in its own right. However, we do not focus on these aspects.

3.1.2 Cloud providers

==Tentative relevant product market definition:== Cloud infrastructure services capable of training LLMs.

We consider frontier cloud providers to be the companies whose data centers can train frontier foundation models. This primarily includes Google Cloud, Amazon Web Services, and Microsoft Azure. Google’s models are trained in-house; Anthropic used Google Cloud but is now transitioning to Amazon; and OpenAI’s exclusive cloud provider is Microsoft Azure. We also consider Oracle as non-frontier but relevant.

Although we found no reports of frontier, or non-frontier but relevant, LLMs being trained on Oracle’s infrastructure, it is reasonable to consider it a contender. For instance, Oracle has established a partnership with Cohere (Oracle, 2024). Big tech companies such as Apple and Meta also have data centers capable of training frontier foundation models, but they do not participate in the B2B cloud market.

3.1.3 AI Chip Designers

==Tentative relevant product market definition:== Chips used for training and running LLMs.

Regarding chips, we focus on those used in the data centers of the frontier cloud providers, mainly Nvidia’s GPUs and Google’s TPUs. As we further discuss in subsection 3.3.5, these are the only chips that companies have used to train frontier LLMs. We also consider the following as non-frontier but relevant: AMD, which is considered a substitute in, e.g., Shavit (2023) and EpochAI (2023); Intel, which has its own AI accelerators (Intel, 2023); and Cerebras, which develops AI accelerators with features comparable to Nvidia’s and Google’s but arguably has not yet achieved commercial viability. Additionally, we consider the AI chips developed internally by Meta, Microsoft, Amazon, and Apple as non-frontier but relevant, since these companies can all reasonably be contenders. We discuss them further in subsection 3.3.4.

3.1.4 AI Chip Fabricators

==Tentative relevant product market definition:== Companies capable of producing the aforementioned chips with sufficient node precision.

For chip fabricators, we looked at the companies that produce the AI accelerators cloud providers use in their data centers. Nvidia’s GPUs are largely produced by TSMC, while the fabricator of Google’s TPUs is undisclosed, though Samsung is a probable source (TrendForce, 2023). Both TSMC and Samsung are producing 3nm chips and plan to produce 2nm chips by 2025. We also consider Intel and GlobalFoundries as non-frontier but relevant.

3.1.5 Lithography companies

==Tentative relevant market definition:== Companies capable of producing machines used by chip fabricators with sufficient node precision

The semiconductor lithography equipment market as a whole has an estimated annual revenue of USD 24.66 billion (Mordor Intelligence). ASML provides Extreme Ultraviolet (EUV) and Deep Ultraviolet (DUV) lithography machines to leading chip manufacturers such as TSMC, Samsung, and Intel. With its advanced lithography systems, ASML has established itself as the de facto monopolist in this sector. The Japanese firms Nikon and Canon are recognized as potential competitors in this market. Although they have not mastered EUV technology, both companies offer DUV systems suitable for fabricating chips that do not require the most advanced nodes.

3.1.6 Additional notes

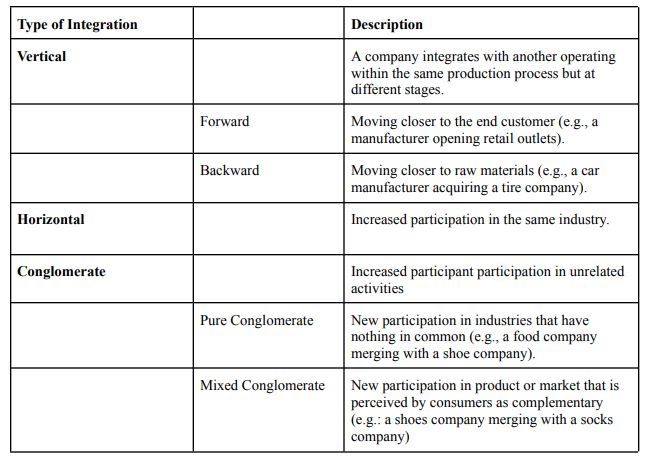

We also adopt the following taxonomy to classify different types of integration between companies:

Each kind of integration can also be classified as: i) integration by acquisition (i.e., one company buys another); ii) integration by merger (i.e., two companies create a new one); iii) integration by expansion (i.e., a company starts participating in new industries without acquiring or merging with another company); or iv) quasi-integration (characterized by a minority stake and/or a partnership with exclusivity clauses on key inputs). We tried to be generally consistent with the nomenclature adopted by industrial organization textbooks such as Tirole (1987) and Shy (1996). Note that it can be challenging to determine which kind of integration a merger or acquisition represents, since this depends on how the relevant market is defined.
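To make the taxonomy concrete, the sketch below encodes the four modes of integration listed above together with a simple rule for the direction of integration (horizontal, vertical, or conglomerate, the terms used throughout this section). It is a minimal illustration under our own assumptions, not the mapping methodology used in this paper: the market labels, the upstream-to-downstream ordering in `DEFAULT_CHAIN` (including an illustrative `lithography_inputs` stage for suppliers such as optics or light sources), and the `direction` rule are all hypothetical. It also shows the caveat above in action: the same deal changes category when a firm is assigned to a different relevant market.

```python
from dataclasses import dataclass
from enum import Enum

# Hypothetical upstream-to-downstream ordering of markets, following this section.
# "lithography_inputs" (e.g., optics, light sources) is an illustrative extra stage.
DEFAULT_CHAIN = (
    "lithography_inputs",
    "lithography",
    "fabrication",
    "chip_design",
    "cloud",
    "ai_labs",
)


class Mode(Enum):
    """The four modes of integration described in the text."""
    ACQUISITION = "acquisition"     # one company buys another
    MERGER = "merger"               # two companies create a new one
    EXPANSION = "expansion"         # entry into a new market without a deal
    QUASI = "quasi-integration"     # minority stake and/or exclusive partnership


@dataclass(frozen=True)
class Deal:
    firm_a: str
    market_a: str  # relevant market the analyst assigns to firm_a
    firm_b: str
    market_b: str  # relevant market the analyst assigns to firm_b
    mode: Mode


def direction(deal: Deal, chain: tuple = DEFAULT_CHAIN) -> str:
    """Classify a deal as horizontal, vertical, or conglomerate.

    Hypothetical rule: same market -> horizontal; different stages of the
    same supply chain -> vertical; anything else -> conglomerate.
    """
    if deal.market_a == deal.market_b:
        return "horizontal"
    if deal.market_a in chain and deal.market_b in chain:
        return "vertical"
    return "conglomerate"


if __name__ == "__main__":
    deals = [
        Deal("ASML", "lithography", "Cymer", "lithography_inputs", Mode.ACQUISITION),
        Deal("Nvidia", "chip_design", "Mellanox", "data_center_networking", Mode.ACQUISITION),
        # Same deal if Mellanox were (hypothetically) placed in a broad chip market.
        Deal("Nvidia", "chip_design", "Mellanox", "chip_design", Mode.ACQUISITION),
        # Treating the Microsoft-OpenAI partnership as quasi-integration is our assumption.
        Deal("Microsoft", "cloud", "OpenAI", "ai_labs", Mode.QUASI),
    ]
    for d in deals:
        print(f"{d.firm_a} / {d.firm_b}: {direction(d)}, {d.mode.value}")
```

Under this rule, the Nvidia–Mellanox deal comes out as conglomerate or horizontal depending only on the market assigned to Mellanox. The rule deliberately ignores finer distinctions such as "mixed" conglomerate integration, which would need additional information about complementarities.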

3.2 Integration in the AI supply chain

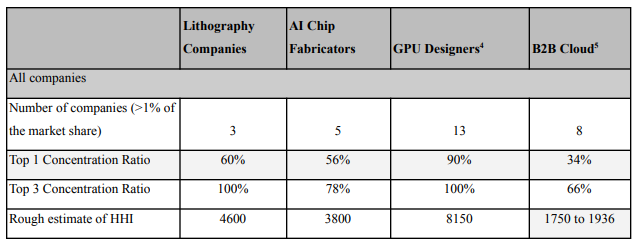

The AI supply chain, especially if we consider only the frontier AI supply chain, is highly horizontally concentrated. There is only one frontier lithography company (ASML), only one or two frontier AI accelerator fabricators (TSMC and Samsung), and only two designers of cutting-edge AI accelerators (Google and Nvidia), of which only Nvidia supplies the broader market. There are perhaps three to fifteen frontier AI labs, such as Google DeepMind, Meta, Anthropic, Inflection, and OpenAI.

Moreover, there is significant horizontal shareholding. Besides owning DeepMind, Google has invested in Anthropic. Microsoft has a strategic partnership with OpenAI; it also invested in Inflection and hired a substantial number of Inflection’s founding members to work at the newly created Microsoft AI.

Regarding vertical integration, from approximately the 1950s to the 2000s there seemed to be a general trend toward less vertical integration in the semiconductor industry, notably through the spread of fabless companies.

However, with the challenge of keeping pace with Moore’s Law upstream and the rise of big tech companies downstream, there seems to be a swing back toward increased vertical integration. Strategic partnerships, often involving exclusivity clauses or minority stakes, are prevalent in the industry. These arrangements closely resemble quasi-integration, particularly regarding the provision of key inputs or technology licensing. Of the 25 companies mapped, we consider nine to be present in more than one of the five relevant markets.

3.2.1 Lithography Companies

ASML has significantly expanded its market share by being the first and so far the only company to develop EUV (Extreme Ultraviolet) technology. There have been no mergers and acquisitions in the industry that significantly impacted the horizontal integration of the lithography industry in at least the last 25 years. The Japanese companies Nikon and Canon, once significant players in the lithography market, have fallen behind in technological innovation.

Canon lagged by two generations, failing to master immersion lithography, while Nikon remained a generation behind, still selling immersion DUV machines but abandoning attempts at EUV development. ASML has acquired or invested in many of its suppliers of key inputs for lithography machines. As part of its strategy to develop EUV technology (ASML, 2012), ASML purchased Cymer, a supplier of light sources used in lithography processes.

In 2016, ASML bought Hermes Microvision, a Taiwanese company that develops electron beam inspection technology used to identify defects in advanced integrated circuits (ASML, 2023). ASML and ZEISS have a long-standing partnership, especially through Carl Zeiss SMT, the ZEISS subsidiary focused on technologies used in the semiconductor industry. In 2016, ASML bought 24.9% of Carl Zeiss SMT for EUR 1 billion (ASML, 2023).

While ASML focuses on the lithography machines, Zeiss specializes in the optics that go into them. As stated in the companies’ original announcement, “the main objective of this agreement is to facilitate the development of the future generation of Extreme Ultraviolet (EUV) lithography systems due in the first few years of the next decade,” and the collaboration has been successful in this respect.

Canon has recently introduced a nanoimprint lithography (NIL) machine, which it claims can produce 5nm chips and, eventually, 2nm chips.

The market acceptance of Canon’s new offering remains an open question, as it awaits commercial adoption. Nikon, ASML, and Carl Zeiss engaged in intellectual property litigation against each other for years, which ended in a legally binding cross-licensing agreement (ASML, 2019). In summary, market consolidation in lithography seems to be driven mainly by ASML’s organic growth, given its technical advantage over competitors, and by its acquisitions of and alliances with key suppliers and customers.

3.2.2 AI Chip Fabricators

No major horizontal integration involving TSMC, Samsung, Intel, or GlobalFoundries has occurred in the industry over the past 25 years. With a market share of nearly 90% in sub-7nm nodes and a reported overall market share of 58% as of the last quarter of 2022 (The Motley Fool, 2022), TSMC is widely regarded as the most advanced foundry company. In 2022, it started fabricating chips on its 3nm process node commercially, and it is currently developing technology for 2nm processes (TSMC, 2023).

In 2022, Samsung was also able to produce chips on a 3nm process node and is currently starting to sell them commercially, reportedly securing a large contract to produce specialized data center chips (Pulse, 2023). The South Korean conglomerate is also trying to keep pace with TSMC in developing the 2nm process node. Intel, meanwhile, has been losing global market share.

Notably, Intel has lost significant contracts with Apple and AMD. There are also notable licensing agreements, such as the licensing of 12nm foundry technology from Samsung to GlobalFoundries (WikiChip, 2018).

In 2012, ASML set up a customer co-investment program with the goal of developing EUV technology that could be employed at scale for chip fabrication. Its three largest customers at the time bought a combined stake of 23% in ASML.

The program was enabled through a synthetic share buy-back. Because this partnership was fundamental to the development of the EUV technology on which today’s frontier AI accelerators depend, we describe it in more detail as a case study in the appendix.

3.2.3 AI Chip Designers

Nvidia dominates the Graphics Processing Unit (GPU) market, with a share of more than 80% (Jon Peddie Research, 2023). Market analysts consider its CUDA ecosystem, which helps optimize machine learning training and inference for Nvidia hardware, a significant advantage. In 2020, Nvidia acquired Mellanox for $7 billion. Since Nvidia is a leading developer of chips used in data centers and Mellanox specializes in complementary data center technologies, this acquisition is an example of mixed conglomerate integration. AMD is one of Nvidia’s main competitors.

AMD has been investing in open-source infrastructure: it is a partner of the PyTorch Foundation, which hosts the PyTorch framework, and it recently acquired the company Nod.ai. Another significant player in this market is Cerebras, which produced the first chips with over one trillion transistors. Big tech companies have also been designing AI chips, typically for their in-house operations, as we cover in the vertical integration subsection.

Companies that both design and fabricate chips are generally called Integrated Device Manufacturers (IDMs). They usually fabricate chips of their own design and may also provide fabrication services to other (fabless) companies. Intel is a major US integrated device manufacturer and has a division specializing in foundry services for other companies; some of its major clients for these services are Meta, Amazon, Cisco, and MediaTek. TSMC and Nvidia have established a strong partnership and are the dominant players in chip manufacturing and design, respectively.

In March 2023, Nvidia announced cuLitho, a software library to accelerate computational lithography. The company has partnered with ASML, TSMC, and Synopsys “to accelerate the design and manufacturing of next-generation chips” by running computational lithography workloads on GPUs (NVIDIA, 2023). One of the stated goals is to enable chips with nodes of 2nm and below. However, this partnership does not involve any company acquiring a stake in another.

3.2.4 Cloud providers

The cloud market at scale is dominated by AWS, Microsoft Azure, and Google Cloud, with 34%, 21%, and 11% of the market, respectively. To the best of our knowledge, there have been no mergers and acquisitions that significantly affected horizontal integration in the cloud industry in at least the last 25 years.
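The shares above can be summarized with the Herfindahl-Hirschman Index (HHI), the sum of squared market shares, $\text{HHI} = \sum_i s_i^2$, which is commonly used in antitrust analysis to measure horizontal concentration. Because only three shares are given, the HHI can only be bounded. The sketch below is a minimal illustration assuming that the three figures are shares of the same market on a 0 to 100 scale and that no other provider is larger than the smallest listed one (Google Cloud); the function name `hhi_bounds` is hypothetical.

```python
import math


def hhi_bounds(listed_shares_pct):
    """Return (lower, upper) bounds on the Herfindahl-Hirschman Index.

    Shares are in percent (0-100), so the HHI ranges up to 10,000.
    Assumption (hypothetical): the listed firms are the largest in the market,
    so every unlisted firm holds at most min(listed_shares_pct).
    """
    if any(s <= 0 for s in listed_shares_pct) or sum(listed_shares_pct) > 100:
        raise ValueError("shares must be positive and sum to at most 100")

    known = sum(s ** 2 for s in listed_shares_pct)  # contribution of listed firms
    remainder = 100.0 - sum(listed_shares_pct)      # share held by unlisted firms
    cap = min(listed_shares_pct)                    # largest possible unlisted share

    # Lower bound: the remainder is split among many very small firms,
    # so its contribution to the sum of squares approaches zero.
    lower = known

    # Upper bound: squares are convex, so the remainder contributes the most
    # when it is packed into as many firms of size `cap` as possible.
    full_firms = math.floor(remainder / cap)
    leftover = remainder - full_firms * cap
    upper = known + full_firms * cap ** 2 + leftover ** 2
    return lower, upper


if __name__ == "__main__":
    # Cloud shares quoted in the text: AWS 34%, Azure 21%, Google Cloud 11%.
    print(hhi_bounds([34, 21, 11]))  # (1718, 2082.0)
```

Under these assumptions, the HHI of the cloud market lies between 1,718 (the remaining 34% split among many small providers) and 2,082 (the remainder held by three providers at 11% and one at 1%).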

There have been, however, noteworthy conglomerate acquisitions, mostly aimed at expanding cloud providers’ portfolios of services. For instance, in 2019 Google bought Looker, a big data analytics platform, for 2.6 billion dollars to expand Google Cloud’s offerings in the business intelligence segment. Also in 2019, IBM bought Red Hat, a company focused on open-source enterprise software, for 34 billion dollars.

In 2018, Microsoft bought GitHub, which provides a platform for software development, for 7.5 billion dollars and integrated it with the Azure cloud platform. Later on, GitHub data was used to train foundation models.

Cloud providers are also actively involved in chip design, which gives them more control over their hardware and lets them optimize it for specific applications. Google’s Tensor Processing Units are available for use through the Google Cloud Platform.

Similarly, Amazon develops its Trainium and Inferentia chips, available through Amazon Web Services. Microsoft has reportedly been developing AI accelerators internally; Microsoft’s CTO confirmed that the company is investing in semiconductor solutions but did not provide further details. Google’s Tensor Processing Unit (TPU) is specifically designed to accelerate AI computations, including model inference, and is one of the most used AI accelerators (Reuther et al., 2021). TPUs are not sold as physical hardware; they can only be used through Google Cloud (Google, 2023).

Amazon designs chips specialized for cloud operations, focusing on the specific requirements of its cloud services and infrastructure (Amazon, 2023). Microsoft’s acquisition of a chip-design startup in early 2023 indicates its interest in developing AI chips (Fool, 2023). While specific details are not available, reports suggest a focus on AI-related chip development (CoinTelegraph, 2023). Although Meta is not a cloud provider, it is also worth noting that it announced its AI chip project, the Meta Training and Inference Accelerator (MTIA), in early 2023; the chip is designed to improve efficiency for the recommendation models used to serve ads and content on news feeds (SourceAbility, 2023). Apple has transitioned from being a customer of Intel to designing its own chips for its latest laptops, gaining greater control over the performance of its hardware and its integration with Apple’s software ecosystem, but there is no public information on Apple designing chips specialized for AI tasks.

3.2.5 AI Labs

The only significant merger or acquisition that increased horizontal integration among frontier AI labs that we are aware of is Google’s acquisition of DeepMind in 2014. However, because Google Brain and DeepMind had different focuses in AI technology and remained independent until 2023, and because the key aspect of the deal was Google providing compute at scale to DeepMind, we treat it as vertical integration. AI labs have a large demand for compute to train large foundation models.

Training these models requires many frontier AI chips closely interconnected within a data center, as explored in the Pilz and Heim (2023) report. As discussed above, only three major companies are currently capable of supplying advanced AI accelerators at scale to their customers: Google (Google Cloud), Microsoft (Azure), and Amazon (AWS). Besides using them for internal AI development, these three companies have established strategic partnerships with top AI startups. Especially given the constrained supply of chips, the allocation of existing compute capacity is increasingly important, and it is set by these strategic partnerships.

3.2.5.1 Google Cloud ↔ DeepMind

DeepMind was founded in 2010 and acquired by Google in 2014. The exact purchase price was not disclosed, but it was reported to be between USD 500 million and USD 650 million (Efrati, 2014; Gibbs, 2014; Chowdhry, 2015). Facebook was also interested in acquiring DeepMind at the time; reportedly, it is unclear why the talks with Facebook did not advance (Efrati, 2014). The acquisition was reportedly led by Google CEO Larry Page. One of the conditions for the purchase was the creation of an ethics board, as DeepMind was founded with strong AI safety concerns.

It is uncertain whether DeepMind already relied on Google Cloud before the purchase. Since the acquisition, DeepMind has leveraged Google Cloud’s infrastructure and services for its AI research and development. DeepMind became a wholly owned subsidiary of Google’s parent company, Alphabet Inc., after Google’s corporate restructuring in 2015. In April 2023, Google Brain and DeepMind merged to form a new unit, Google DeepMind, with the goal of accelerating the development of general AI. Reportedly, this was a response to OpenAI’s breakthrough with ChatGPT (VentureBeat, 2023).

3.2.5.2 Microsoft’s Azure ↔ OpenAI

OpenAI, established as a capped-profit company that is a subsidiary of a non-profit organization, has received support from Microsoft since 2019 (OpenAI, 2019). In 2023, Microsoft renewed this commitment with an investment of 10 billion dollars (OpenAI, 2023). Microsoft Azure serves as OpenAI’s sole cloud provider, and the AI lab does not maintain any data centers of its own. In addition, Microsoft has exclusive access to the parameters of the GPT-3 and GPT-4 models and incorporates them into a diverse range of its products (CNBC, 2023).

3.2.5.3 Microsoft’s Azure ↔ Inflection

Inflection was co-founded by Mustafa Suleyman, formerly of Google DeepMind, and Reid Hoffman of LinkedIn, with a focus on creating consumer-facing AI products such as the chatbot Pi. As an early investor, Microsoft provided Inflection with access to Azure infrastructure and built a cluster of around 22,000 Nvidia H100 GPUs.

Some of the founders later joined Microsoft to establish Microsoft AI, a division that consolidates consumer AI initiatives from Bing and Edge, strengthening Microsoft’s consumer AI offerings. Inflection now concentrates on growing its “AI studio”, developing custom AI models for commercial clients and making them available through cloud services such as Azure (Reuters, 2023; Inflection, 2024).

3.2.5.4 Google Cloud ↔ Anthropic

In February 2023, Anthropic partnered with Google Cloud as its cloud provider (Anthropic, 2023). Anthropic CEO Dario Amodei said: “We’ve been impressed with Google Cloud’s open and flexible infrastructure” (Edgeir, 2023). Anthropic can use the GPUs and TPUs available in Google’s clusters, and Google has invested USD 300 million in Anthropic (Financial Times, 2023).

3.2.5.5 AWS ↔ Anthropic

Amazon and Anthropic have established a substantial partnership. Anthropic has been an Amazon client since 2021 (AWS, 2023). Through AWS, Amazon has facilitated access to Anthropic’s generative AI model Claude for AWS customers via Amazon Bedrock. On September 25, 2023, Amazon announced an investment of up to $4 billion in Anthropic to support the development of language models such as Claude 2 using AWS and its specialized chips (Amazon, 2023). According to the announcement, this investment is part of a broader strategic collaboration aimed at advancing safer generative AI technologies and making Anthropic’s future foundation models widely accessible through AWS.

3.2.5.6 AWS ↔ HuggingFace

The two companies have established a strategic partnership, and Amazon now offers models available on HuggingFace (HuggingFace, 2023). The partnership is especially focused on training and deploying AI models on the HuggingFace platform using Amazon Web Services cloud computing services. The announcement focuses on the benefits customers may get from the partnership and does not directly mention whether AWS will provide HuggingFace with compute for training its own models (e.g., HuggingChat).

3.2.5.7 Cohere ↔ Oracle

In June 2023, Cohere announced a partnership with Oracle to enhance AI services in the companies’ platforms. They announced that they are working together to ease the training of specialized large language models for enterprise customers while ensuring data privacy during the training process. Cohere’s generative models are integrated into Oracle Cloud Infrastructure (Oracle, 2023).

3.2.6 AI Chip Designers ↔ AI Labs

Besides Alphabet and Microsoft (which we discussed above, mainly as cloud providers), there has been limited integration of AI chip designers and AI labs. In 2018, NVIDIA created its Toronto AI Lab, whose focus “lies at the intersection of computer vision, machine learning, and computer graphics” (NVIDIA, 2023). However, NVIDIA has not acquired companies focused on AI model development.

Meta has been developing chips specifically for inference in AI tasks such as computer vision and recommendation systems. Other companies with significant involvement in both AI model research and chip design are Samsung and Apple, though they are not frontier players in either industry. Samsung has seven research centers dedicated to AI in countries such as South Korea, the United States, and Russia (Samsung, 2023). Apple has invested significantly in natural language processing for its voice assistant, Siri, and has reportedly been trying to integrate internally developed foundation models into its products’ operating systems.

The extent of Apple’s strategic investment in this area is unclear, and the company is famously one of the most secretive in Silicon Valley. Apple has reportedly been in talks with both Google and OpenAI to integrate their models into the iPhone (Euronews, 2023).

3.2.7 Other

In 2016, SoftBank, a Japanese holding company with investments ranging from telecom services to internet-based businesses, acquired the semiconductor intellectual property (IP) company ARM for approximately $31 billion, a conglomerate integration. From 2020 to 2022, NVIDIA tried to acquire ARM, the leading licenser of CPU designs. The acquisition could have allowed NVIDIA to integrate ARM’s CPU technology with its AI accelerators, but the deal faced antitrust challenges and NVIDIA ultimately abandoned it.

:::info

Author:

Tomás Aguirre

:::

:::info

This paper is available on arxiv under CC by 4.0 Deed (Attribution 4.0 International) license.

:::