4.3 Inter-annotator Agreement

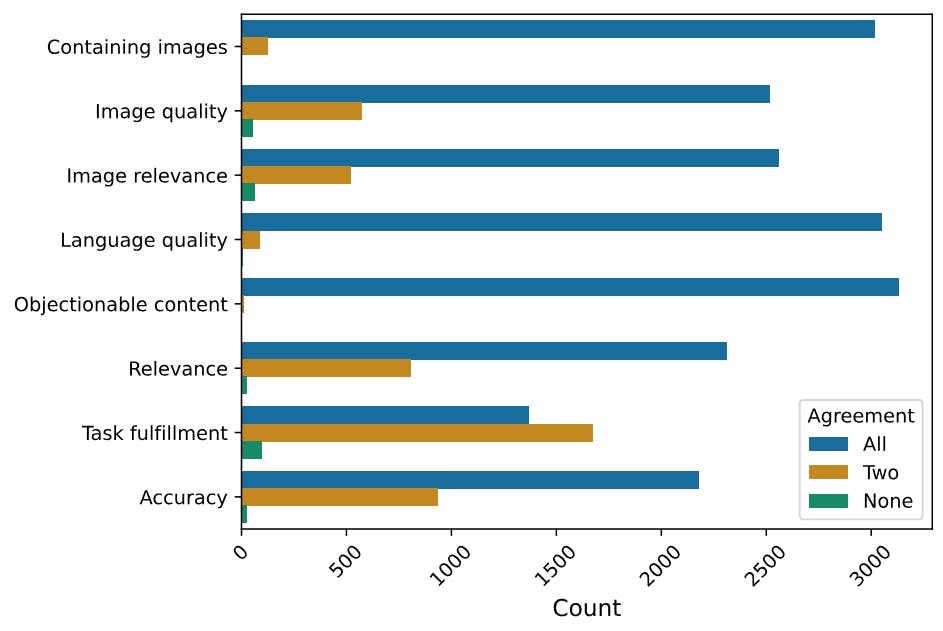

Every question in our evaluation is answered by three different human annotators, and we take the majority vote as the final answer. To assess the quality of the human annotators and whether our questions are reasonably designed, we examine the level of agreement between annotators.
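The majority-vote rule described above can be sketched in a few lines of Python. This is only an illustration: the function name `majority_vote` and the label strings are hypothetical, and returning `None` when no label has a strict majority is an assumption, since the text does not say how such cases are resolved for absolute questions.

```python
from collections import Counter


def majority_vote(labels):
    """Return the label picked by a strict majority of annotators.

    Returns None when no label has a strict majority (an assumption
    made for this sketch, not a rule stated in the text).
    """
    label, count = Counter(labels).most_common(1)[0]
    return label if count > len(labels) / 2 else None


# Hypothetical labels from three annotators for one question.
votes = ["fulfills", "fulfills", "partially fulfills"]
final_answer = majority_vote(votes)
```

With three annotators, a strict majority means at least two identical labels.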

For questions about simple, objective properties of the responses, it is very rare for the three annotators to disagree with one another. For example, annotators have unanimous judgments on whether the model responses contain objectionable content (e.g., hate speech); in this case, all models produce safe responses. For some questions, such as whether the response fulfills the task or whether the model interprets the prompt correctly, when one annotator’s judgment differs from the other two’s, the decision is usually still close (e.g., fulfills vs. partially fulfills) rather than opposite (e.g., fulfills vs. does not fulfill).[3]

For the relative evaluation, Table 4 shows the number of cases where all three annotators agree, where two annotators agree, and where there is no agreement. For each model pair, slightly more than 10% of the cases have no agreement among the three annotators (these are counted as ties in our evaluation). On about 28% to 35% of the pairs, all annotators have unanimous judgments, and in about 55% to 60% of the pairs, one annotator differs from the other two. This may be interpreted as Chameleon performing similarly to other baselines in many cases, making the relative evaluation challenging.[4]
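The three agreement levels reported for the relative evaluation can be tallied as in the following sketch. The preference labels (`"chameleon"`, `"baseline"`, `"same"`) and the toy judgments are hypothetical placeholders, not data from the study.

```python
from collections import Counter


def agreement_level(labels):
    """Classify one comparison judged by exactly three annotators."""
    top_count = Counter(labels).most_common(1)[0][1]
    return {3: "all agree", 2: "two agree", 1: "no agreement"}[top_count]


def agreement_shares(judgments):
    """Return the percentage of comparisons at each agreement level."""
    tally = Counter(agreement_level(j) for j in judgments)
    total = len(judgments)
    return {level: 100.0 * n / total for level, n in tally.items()}


# Toy data: each inner list holds three annotators' preferences.
toy_judgments = [
    ["chameleon", "chameleon", "chameleon"],
    ["chameleon", "baseline", "chameleon"],
    ["baseline", "same", "baseline"],
    ["chameleon", "baseline", "same"],
]
shares = agreement_shares(toy_judgments)
```

Under the rule stated in the text, comparisons in the "no agreement" bucket would be counted as ties.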

4.4 Safety Testing

We crowdsource prompts that provoke the model to create unsafe content in predefined categories such as self-harm, violence and hate, and criminal planning. These prompts cover both text and mixed-modal inputs, as well as intents to produce unsafe text, images, or mixed-modal outputs. We generate the model’s response to each prompt, and ask annotators to label whether the response is safe or unsafe with respect to each category’s definition of safety; an unsure option is also provided for borderline responses. Table 5 shows that an overwhelming majority of Chameleon’s responses are considered safe, with only 78 (0.39%) unsafe responses for the 7B model and 19 (0.095%) for the 30B model.

We also evaluate the model’s ability to withstand adversarial prompting in an interactive session. For that purpose, an internal red team probed the 30B model over 445 prompt-response interactions, including multi-turn interactions. Table 5 shows that of those responses, 7 (1.6%) were considered unsafe and 20 (4.5%) were labeled as unsure. While additional safety tuning using RLHF/RLAIF has been shown to further harden the model against jailbreaking and intentional malicious attacks, these results demonstrate that our current safety tuning approach provides significant protection for reasonable, benign usage of this research artifact.
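As a quick arithmetic check on the safety figures quoted above, the sketch below recomputes the red-team percentages from the stated counts. The crowdsourced prompt total is not given in this text, so the value computed here is only inferred from the reported counts and percentages; the helper name `pct` is hypothetical.

```python
def pct(count, total):
    """Express count as a percentage of total."""
    return 100.0 * count / total


# Red-team session on the 30B model: counts stated in the text.
red_team_total = 445
unsafe_pct = pct(7, red_team_total)
unsure_pct = pct(20, red_team_total)

# Crowdsourced prompts: the total is NOT stated in the text, so it is
# inferred here from each reported count and its percentage.
implied_total_7b = round(78 / (0.39 / 100))
implied_total_30b = round(19 / (0.095 / 100))

print(f"red team unsafe: {unsafe_pct:.1f}%, unsure: {unsure_pct:.1f}%")
print(f"implied crowdsourced totals: {implied_total_7b}, {implied_total_30b}")
```

Both model sizes imply the same prompt total, which is consistent with the two models being tested on the same crowdsourced set.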

4.5 Discussion

Compared to Gemini and GPT-4V, Chameleon is very competitive when handling prompts that expect interleaving, mixed-modal responses. The images generated by Chameleon are usually relevant to the context, making the documents with interleaving text and images very appealing to users. However, readers should be aware of the limitations of human evaluation. First, the prompts used in the evaluation came from crowdsourcing rather than from real users interacting with a model. While we certainly have a diverse set of prompts, the coverage may still be limited, given the size of the dataset. Second, partly because our prompts focus on mixed-modal output, certain visual understanding tasks, such as OCR or infographics (i.e., interpreting a given chart or plot), are naturally excluded from our evaluation. Finally, at this moment, the APIs of existing multi-modal LLMs provide only textual responses. While we strengthen the baselines by augmenting their output with separately generated images, it would still be preferable to compare Chameleon with other native mixed-modal models.

Author:

(1) Chameleon Team, FAIR at Meta.

[3] For the question of task fulfillment, the inter-rater reliability measured by Krippendorff’s Alpha (Krippendorff, 2018; Marzi et al., 2024) is 0.338; the 95% confidence interval is [0.319, 0.356], based on bootstrap sampling with 1,000 iterations.

[4] When comparing Chameleon with Gemini+ and GPT-4V+, Krippendorff’s Alpha values are 0.337 [0.293, 0.378] and 0.396 [0.353, 0.435], respectively.
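For readers unfamiliar with the statistic used in footnotes [3] and [4], the following is a minimal sketch of Krippendorff's Alpha with a bootstrap confidence interval. Several choices here are assumptions and may differ from the procedure actually used: the nominal distance metric (the fulfillment labels could arguably be treated as ordinal), the simple percentile bootstrap that resamples items, and all function names and toy labels.

```python
import random
from collections import Counter


def krippendorff_alpha_nominal(units):
    """Nominal Krippendorff's Alpha; units is a list of per-item label lists."""
    units = [u for u in units if len(u) >= 2]  # items need >= 2 ratings
    n = sum(len(u) for u in units)
    totals = Counter()
    disagreeing = 0.0
    for u in units:
        counts = Counter(u)
        totals.update(counts)
        m = len(u)
        # Ordered pairs of differing labels in this item, weighted by 1/(m-1).
        disagreeing += (m * m - sum(c * c for c in counts.values())) / (m - 1)
    observed = disagreeing / n
    expected = (n * n - sum(c * c for c in totals.values())) / (n * (n - 1))
    return 1.0 - observed / expected if expected > 0 else 1.0


def bootstrap_ci(units, iterations=1000, level=0.95, seed=0):
    """Percentile bootstrap over items (an assumed, simplified procedure)."""
    rng = random.Random(seed)
    stats = sorted(
        krippendorff_alpha_nominal(rng.choices(units, k=len(units)))
        for _ in range(iterations)
    )
    low = stats[int((1 - level) / 2 * iterations)]
    high = stats[int((1 + level) / 2 * iterations) - 1]
    return low, high


# Toy ratings: three hypothetical annotators per item.
toy = [["yes", "yes", "yes"], ["yes", "no", "yes"], ["no", "no", "no"],
       ["no", "yes", "no"], ["yes", "yes", "no"], ["no", "no", "no"]]
alpha = krippendorff_alpha_nominal(toy)
ci_low, ci_high = bootstrap_ci(toy)
```

An alpha of 1 indicates perfect agreement, 0 indicates agreement no better than chance, and negative values indicate systematic disagreement.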