“Help! Our AI model costs are through the roof!”

That’s becoming an increasingly common cry from startups riding the generative AI wave. While ChatGPT and its cousins have sparked a gold rush of AI-powered applications, the reality of building applications based on LLMs is more complex than slapping an API call onto a web interface.

Every day, my LinkedIn feed overflows with new “AI-powered” products. Some analyze legal documents, others write marketing copy, and a brave few even attempt to automate software development. These “wrapper companies” (as they’re sometimes dismissively called) may not be training their own models, but many are solving real problems for customers and finding genuine product-market fit based on the current demands from the enterprises. The secret? They’re laser-focused on making AI technology actually useful for specific groups of users.

But here’s the thing: Even when you’re not training models from scratch, scaling an AI application from proof-of-concept to production is like navigating a maze blindfolded. You’ve got to balance performance, reliability, and costs while keeping your users happy and your finance team from having a collective heart attack.

To understand it better, let’s break this down with a real-world example. Imagine we’re building “ResearchIt” (not a real product, but bear with me), an application that helps researchers digest academic papers. Want a quick summary of that dense methodology section? Need to extract key findings from a 50-page paper? Our app has got you covered.

Version 1.0: The Naive Approach

We’re riding high on the OpenAI hype train – Our first version is beautifully simple:

- Researcher uploads chunks of a paper (specific, relevant sections)

- Our backend forwards the text to GPT-5 with a prompt like “You are a helpful research assistant. Analyze the following text and deliver insights strictly from the section provided by the user……”

- Magic happens, and our users get their insights

The simplicity is beautiful. The costs? Not so much.

As more researchers discover our tool, our monthly API bills are starting to look like phone numbers. The problem is that we’re sending every query to GPT-5, the Rolls-Royce of language models, when a Toyota Corolla would often do just fine.

Yes, GPT-5 is powerful, with its 128k context window and strong reasoning abilities, but at $1.25 per 1M input tokens and $10 per 1M output tokens, costs add up fast. For simpler tasks like summarization or classification, smaller models such as GPT-5 mini (around 20% of the cost), GPT-5 nano (around 4%), or Gemini 2.5 Flash-Lite (around 5%) deliver great results at a fraction of the price.

Open-source models like Meta’s LLaMA (3 or 4 series) or various models from Mistral or also offer flexible and cost-efficient options for general or domain-specific tasks, though fine-tuning them is often unnecessary for lighter workloads.

The choice really depends on the following things:

- Output Quality: Can the model consistently deliver the accuracy your application needs? Does the model support the language that you want to work with?

- Response Speed: Will your users wait those extra milliseconds for better results? Typical response time for any app should be within the 10-second mark for users not to lose interest, so speed definitely matters.

- Data Integrity: How sensitive is your data, and what are your privacy requirements?

- Resource Constraints: What’s your budget, both for costs and engineering time?

For our research paper analyzer, we don’t need poetry about quantum physics; we need reliable, cost-effective summarization.

:::info

Bottom Line: Know Your Application Needs

:::

Choose your LLM based on your actual requirements, not sheer power. If you need a quick setup, proprietary models may justify the cost. If affordability and flexibility matter more, open-source models are a strong choice, especially when small quality trade-offs are acceptable (although there might be some infrastructure overhead).

So, ResearchIt is a hit. Researchers love how it summarizes dense academic papers, and our user base is growing fast. But now, they want more; instead of just summarizing sections they upload, they want the flexibility to ask targeted questions across the entire paper in an effective manner. Sounds simple, right? Just send the whole document to GPT-5 and let it work its magic.

Not so fast. Academic papers are long. Even with GPT-5’s generous 128K token limit, sending full documents per query is an expensive overkill. Plus, studies have shown that as context length increases, LLM performance can degrade, which is detrimental when performing cutting-edge research.

So, what’s the solution?

Version 2.0: Smarter chunking and retrieval

The key question here is how do we scale to satisfy this requirement without setting our API bill on fire and also maintain accuracy in the system?

**Answer is:

Retrieval-Augmented Generation (RAG). Instead of dumping the entire document into the LLM, we intelligently retrieve the most relevant sections before querying. This way we don’t need to send the whole document each time to the LLM to conserve the tokens but also make sure that relevant chunks are retrieved as context for the LLM to answer using it. This is where Retrieval-Augmented Generation (RAG) comes in.

There are 3 important aspects to consider here:

- Chunking

- Storage and chunk retrieval

- Refinement using advanced retrieval techniques.

Step 1: Chunking – Splitting the Document Intelligently

Before we can retrieve relevant sections, we need to break the paper into manageable chunks. A naive approach might split text into fixed-size segments (say, every 500 words), but this risks losing context mid-thought. Imagine if one chunk ends with: “The experiment showed a 98% success rate in…” …and the next chunk starts with: “…reducing false positives in early-stage lung cancer detection.” Neither chunk is useful in isolation. Instead, we need a semantic chunking strategy:

- Section-based chunking: Use document structure (titles, abstracts, methodology, etc.) to create logical splits.

- Sliding window chunking: Overlap chunks slightly (e.g., 200-token overlap) to preserve context across boundaries.

- Adaptive chunking: Dynamically adjust chunk sizes based on sentence boundaries and key topics.

Step 2: Intelligent storage and retrieval

Once your document chunks are ready, the next challenge is storing and retrieving them efficiently. With modern LLM applications handling millions of chunks, your storage choice directly impacts performance. Traditional approaches that separate storage and retrieval often fall short. Instead, the storage architecture should be designed with retrieval in mind, as different patterns offer distinct trade-offs for speed, scalability, and flexibility.

The conventional distinction of using relational databases for structured data and NoSQL for unstructured data still applies, but with a twist: LLM applications store not just text but semantic representations (embeddings).

In a traditional setup, document chunks and their embeddings might be stored in PostgreSQL or MongoDB. This works for small to medium-scale applications but has clear limitations as data and query volume grow.

The challenge here isn’t storage, it’s the retrieval mechanism. Traditional databases excel at exact matches and range queries, but they weren’t built for semantic similarity searches. You’d need to implement additional indexing strategies or use extensions like pgvector to enable vector similarity searches. This is where vector databases truly shine – they’re purpose-built for the store-and-retrieve pattern that LLM applications demand – treating embeddings as the primary attribute for querying, optimizing specifically for nearest neighbour searches. The real magic lies in how they handle similarity calculations. While traditional databases often require complex mathematical operations at query time, vector databases use specialized indexing structures such as HNSW (Hierarchical Navigable Small World) or IVF Inverted File Index) to make similarity searches blazingly fast.

They typically support two primary similarity metrics:

- Euclidean Distance: Better suited when the absolute differences between vectors matter, particularly useful when embeddings encode hierarchical relationships.

- Cosine Similarity: Standard choice for semantic search – it focuses on the direction of vectors rather than magnitude. This means that two documents with similar meanings but different lengths can still be matched effectively.

Choosing the right vector database is critical for optimizing retrieval performance in LLM applications, as it impacts scalability, query efficiency, and operational complexity. HNSW-based solutions like Pinecone and Weaviate offer fast ANN search with efficient recall – they handle scaling automatically making them ideal for dynamic workloads with minimal operational overhead. Self-hosted options like Milvus (IVF-based) offer more control and cost-effectiveness at scale, but require careful tuning. pgvector integrated with Postgres enables hybrid search, though it may hit limits under high-throughput workloads. The choice finally depends on workload size, query patterns, and operational constraints.

Step 3: Advanced Retrieval Strategies

Building an effective retrieval system requires more than just running a basic vector similarity search. While dense embeddings allow for powerful semantic matching, real-world applications often require additional layers of refinement to improve accuracy, relevance, and efficiency. By combining multiple retrieval methods and leveraging Large Language Models (LLMs) for intelligent post-processing, we can significantly enhance retrieval quality.

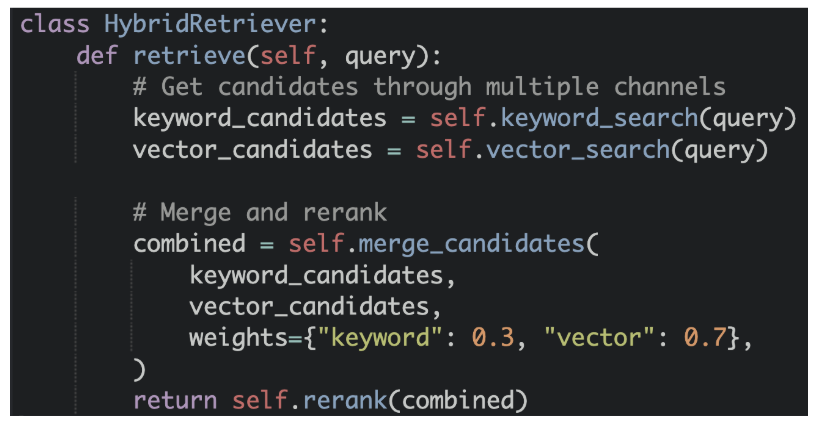

A common challenge in retrieval systems is balancing precision and recall. Keyword-based search (e.g., BM25, TF-IDF) is excellent for finding exact term matches but struggles with semantic understanding. On the other hand, vector search (e.g., FAISS, HNSW, or IVFFlat) excels at capturing semantic relationships but can sometimes return loosely related results that miss crucial keywords.

To overcome this, a hybrid retrieval strategy combines the strengths of both methods.

This involves:

-

Retrieving candidates – running both a keyword and vector similarity search in parallel.

-

Merging results – controlling the influence of each retrieval method based on the query type and application needs.

-

Reranking for optimal ordering – ensuring the most relevant information appears at the top based on semantic requirements.

Another challenge is that traditional vector search retrieves top-K nearest embeddings. LLMs rely on context windows, meaning that blindly selecting the top-K results might introduce irrelevant information or miss crucial details. One solution to this problem is utilizing the LLM itself for refinement. More specifically, we send the retrieved candidates to an LLM to verify coherency and relevance based on the user question.

Some techniques that are used for LLM refinement are as follows:

- Semantic Coherence Filtering: Instead of feeding raw top-K results, the LLM evaluates whether the retrieved documents follow a logical progression related to the query. By ranking passages for semantic cohesion, only the most contextually relevant information is used.

- Relevance-Based Reranking: Models like Cohere Rerank, BGE, or MonoT5 can re-evaluate retrieved documents, capturing fine-grained relevance patterns and improving results beyond raw similarity scores.

- Context Expansion with Iterative Retrieval: Static retrieval can miss indirectly relevant information. LLMs can identify gaps, generate follow-up queries, and adjust the retrieval strategy dynamically to gather missing context.

Now, with these updates, our system is better equipped to handle complex questions across multiple sections of a paper, while maintaining accuracy by grounding responses strictly in the provided content. But what happens when a single source isn’t enough? Some questions require synthesizing information across multiple papers or performing calculations using equations from different sources – challenges that pure retrieval can’t solve.

Version 3.0 – Building a Comprehensive and Reliable System

By this point, “ResearchIt” has matured from a simple question-answering system into a capable research assistant that extracts key sections from uploaded papers, highlights methods, and summarises technical content with precision. Yet, as users push the system further, new expectations emerge.

What began as a system designed to summarize or interpret a single paper has now become a tool researchers want to use for deep, cross-domain reasoning. Researchers want it to reason across multiple sources, not just read one paper at a time.

The new wave of questions looks like:

- “Which optimization techniques for transformers demonstrate the best efficiency improvements when combining insights from benchmarks, open-source implementations, and recent research papers?”

- “How do model compression results reported in this paper align with performance reported across other papers or benchmark datasets?”

These are no longer simple retrieval tasks. They demand multi-source reasoning – the ability to integrate and interpret complex information, plan and adapt, use tools effectively, recover from errors, and produce grounded, evidence-based synthesis.

Despite its strong comprehension abilities, “ResearchIt” 2.0 struggles with two major limitations when reasoning across diverse information sources:

- Cross-Sectional Analysis: When answers require both interpretation and computation (e.g., extracting FLOPs or accuracy from tables and comparing them across conditions). The model must not only extract numbers but also understand context and significance.

- Cross-Source Synthesis: When relevant data lives across multiple systems – PDFs, experiment logs, GitHub repos, or structured CSVs – and the model must coordinate retrieval, merge conflicting findings, and produce one coherent explanation.

These issues aren’t just theoretical. They reflect real-world challenges in AI scalability. As data ecosystems grow more complex, organizations need to move beyond basic retrieval toward reasoned orchestration – systems that can plan, act, evaluate, and continuously adapt.

Let’s take the first question around analysis of transformer optimization techniques – how would we solve this problem as humans?

A group of researchers or students would work on “literature review, i.e, collating papers on the topics, researching open source github repos, and identifying benchmark datasets. They would then extract data and metrics like FLOPs, latency, accuracy from these resources, normalize and compute aggregations and validate the results produced. This is not a one-shot process; it’s iterative, involving multiple rounds of refinement, data validation, and synthesis, after which an aggregated summary of verified results would be generated.

So, what exactly did we do here?

- Break down the overarching question into smaller, focused subproblems – which sources to search, what metrics to analyze, and how comparisons should be run.

- Consult domain experts or trusted sources to fill knowledge gaps, cross-verify metrics, and interpret trade-offs.

- Finally, synthesize the insights into a cohesive, evidence-based conclusion, comparing results and highlighting consistent or impactful findings through iterations.

This is, in essence, reasoned orchestration – the coordinated process of planning, gathering, analyzing, and synthesizing information across multiple systems and perspectives. It would be great if our system could also do something like this, right? This feels like a natural next step to question answering.

Step 1: Chain of Thought/ Planning

To tackle the first aspect, the ability to reason through multiple steps before answering, the concept of Chain of Thought (CoT) was introduced. CoT allows models to plan before execution, eliciting structured reasoning that improves their interpretability and consistency. For e.g, in analyzing transformer optimization techniques, a CoT model would first outline its reasoning path – defining the scope (training efficiency/ model performance/scalability), identifying relevant sources, selecting evaluation criteria and the method of comparison and establishing an execution sequence.

This structured reasoning approach became the foundation for LangChain-based orchestrations. As questions grew more complex, a single “chain” of reasoning evolved into Tree of Thought (ToT) or Graph of Thought (GoT) approaches – enabling branched reasoning and “thinking ahead” behaviors, where models explore multiple possible solution paths before converging on the best one. These techniques underpin today’s “thinking models,” trained on CoT datasets to generate interpretable reasoning tokens that reveal how the model arrived at a conclusion.

Of course, adopting these reasoning-heavy models introduces practical considerations – primarily, cost. Running multi-step reasoning chains is computationally expensive, so model choice matters. Current options include:

- Closed-source models like OpenAI’s o3 and o4-mini, which offer high reasoning quality and strong orchestration capabilities.

- Open-source alternatives such as DeepSeek-R1, which provide transparent reasoning with more flexibility/ engineering effort for customization.

While non-thinking LLMs (like LLaMA 3) can still emulate reasoning through CoT prompting, true CoT or ToT models inherently perform structured reasoning natively. For now, let’s assume we’re willing to invest in a top-tier model capable of genuine, interpretable reasoning and multi-source orchestration.

Step 2: Multi-source workflows- Function Calling to Agents

Breaking down complex problems into logical steps is only half the battle. The system must then coordinate across different specialized tools – each acting as an “expert” – to answer sub-questions, execute tasks, gather data, and refine its understanding through iterative interaction with its environment.

OpenAI introduced function calling as the first step to address this situation. Function calling/ tools gave the LLMs its first real ability to take action rather than simply predict text. You provide the model with a toolkit – for example, functions like searchpapers(), extracttable(), or calculate_statistics() and the model decides which one to call, when to call it, and in what order. Let’s take a simple example:

Task: “Compute the average reported accuracy for BERT fine-tuning.”

A model using function calling might respond by executing a linear chain like this:

- search_papers(“BERT fine-tuning accuracy”)

- extract_table() for each paper

- calculate_statistics() to compute the mean

This dummy example of a simple deterministic pipeline where an LLM and a set of tools are orchestrated through predefined code paths is straightforward and effective and can often serve the purpose for a variety of use cases. However, it’s linear and non-adaptive. When more complexity is warranted, an agentic workflow might be the better option when flexibility, better task performance and model-driven decision-making are needed at scale (with the tradeoff of latency and cost).

Iterative agentic workflows are systems that don’t just execute once but reflect, revise, and re-run. Like a human researcher, the model learns to recheck its steps, refine its queries, and reconcile conflicting data before drawing conclusions.

Think of it as a well-coordinated research lab, where each member plays a distinct role:

- Retrieval Agent: The information scout. It expands the initial query, runs both semantic and keyword searches across research papers, APIs, github repos, and structured datasets, ensuring that no relevant source is overlooked.

- Extraction Agent: The data wrangler. It parses PDFs, tables, and JSON outputs, then standardizes the extracted data – normalizing metrics, reconciling units, and preparing clean inputs for downstream analysis.

- Computation Agent: The analyst. It performs the necessary calculations, statistical tests, and consistency checks to quantify trends and verify that the extracted data makes sense.

- Validation Agent: The quality gatekeeper. It identifies anomalies, missing entries, or conflicting findings, and if something looks off, it automatically triggers re-runs or additional searches to fill the gaps.

- Synthesis Agent: The integrator. It pulls together all verified insights and composes the final evidence-backed summary or report.

Each one can request clarifications, rerun analyses, or trigger new searches when context is incomplete, essentially forming a self-correcting loop – an evolving dialogue among specialized reasoning systems that mirror how real research teams work.

To translate this into a more concrete example of how these agents would come into play for our transformer efficiency question:

-

Initial Planning (Reasoning LLM): The orchestrator begins by breaking the task into sub-objectives discussed before.

-

First Retrieval Loop: The Retrieval Agent executes the plan by gathering candidate materials — academic papers, MLPerf benchmark results, and open-source repositories related to transformer optimization. During this step, it detects that two benchmark results reference outdated datasets and flags them for review, prompting the orchestrator to mark those as lower confidence.

-

Extraction & Computation Loop: Next, the Extraction Agent processes the retrieved documents, parsing FLOPs and latency metrics from tables and converting inconsistent units (e.g., TFLOPs vs GFLOPs) into a standardized format. The cleaned dataset is then passed to the Computation Agent, which calculates aggregated improvements across optimization techniques. n

Meanwhile, the Validation Agent identifies an anomaly – an unusually high accuracy score from one repository. It initiates a follow-up query and discovers the result was computed on a smaller test subset. This correction is fed back to the orchestrator, which dynamically revises the reasoning plan to account for the new context.

-

Iterative Refinement: Following the Validation Agent’s discovery that the smaller test set introduced inconsistencies in the reported results – the Retrieval Agent initiates a secondary, targeted search to gather additional benchmark data and papers on quantization techniques. The goal is to fill missing entries, verify reported accuracy-loss trade-offs, and ensure comparable evaluation settings across sources.

The Extraction and Computation Agents then process this newly retrieved data, recalculating averages and confidence intervals for all optimization methods. An optional Citation Agent could examine citation frequency and publication timelines to identify which techniques are gaining traction in recent research.

-

Final Synthesis: Once all agents agree, the orchestrator compiles a verified, grounded summary like – “Across 14 evaluated studies, structured pruning yields 40–60 % FLOPs reduction with < 2 % accuracy loss (Chen 2023; Liu 2024). Quantization maintains ≈ 99 % accuracy while reducing memory by 75 % (Park 2024). Efficient-attention techniques achieve linear-time scaling (Wang 2024) with only minor degradation on long-context tasks (Zhao 2024). Recent citation trends show a 3× rise in attention-based optimization research since 2023, suggesting a growing consensus toward hybrid pruning + linear-attention approaches.”

What’s powerful here isn’t just the end result – it’s the process.

Each agent contributes, challenges, and refines the others’ work until a stable, multi-source conclusion emerges. In this orchestration framework, interoperability is powered by the Model Context Protocol (MCP) and Agent-to-Agent (A2A) communication which together enable seamless collaboration across specialized reasoning agents. MCP standardizes how models and tools exchange structured information – such as retrieved documents, parsed tables, or computed results – ensuring that each agent can understand and build upon the others’ outputs. Complementing this, A2A communication allows agents to directly coordinate with one another – sharing intermediate reasoning states, requesting clarifications, or triggering follow-up actions without intervention. Together, MCP and A2A form the backbone of collaborative reasoning: a flexible, modular infrastructure that enables agents to plan, act, and refine collectively in real time.

Step 3: Ensuring Groundedness and Reliability

At this stage, you now have an agentic system that is capable of breaking down relatively complex and abstract research questions into logical steps, gathering data from multiple sources, performing calculations or transformations where needed, and assembling the results into a coherent, evidence-backed summary. But there’s one last challenge that can make or break trust in such a system: hallucinations. LLMs don’t actually know facts – they predict the next most likely token based on patterns in their training data. That means their output is fluent and convincing, but not always correct. While improved datasets and training objectives help, the real safeguard comes from adding mechanisms that can verify and correct what the model produces in real time.

Here are a few techniques that make this possible:

- Rule-Based Filtering: Define domain-specific rules or patterns that catch obvious errors before they reach the user. For example, if a model outputs an impossible metric, a missing data field, or a malformed document ID, the system can flag and regenerate it.

- Cross-Verification: Automatically re-query trusted APIs, structured databases, or benchmarks to confirm key numbers and facts. If the model says “structured pruning reduces FLOPs by 50%,” the system cross-checks that against benchmark data before accepting it.

- Self-Consistency Checks: Generate multiple reasoning passes and compare them. Hallucinated details tend to vary between runs, while factual results remain stable – so the model keeps only the majority-consistent conclusions.

Together, these layers form the final safeguard – closing the reasoning loop. Every answer the system produces is not just well-structured but verified.

And voilà – what began as a simple retrieval-based model has now evolved into a robust research assistant: one that not only answers basic Q&A but also tackles deep analytical questions by integrating multi-source data, executing computations, and producing grounded insights, all while actively defending against hallucination and misinformation. ResearchIt’s journey mirrors the broader challenge facing every LLM application builder: moving from proof-of-concept to production-grade intelligence requires more than powerful models – it demands thoughtful architecture.

n