I thought I was having a quick chat with ChatGPT about my computer setup, but I accidentally shared my Windows PIN and other deeply personal info.

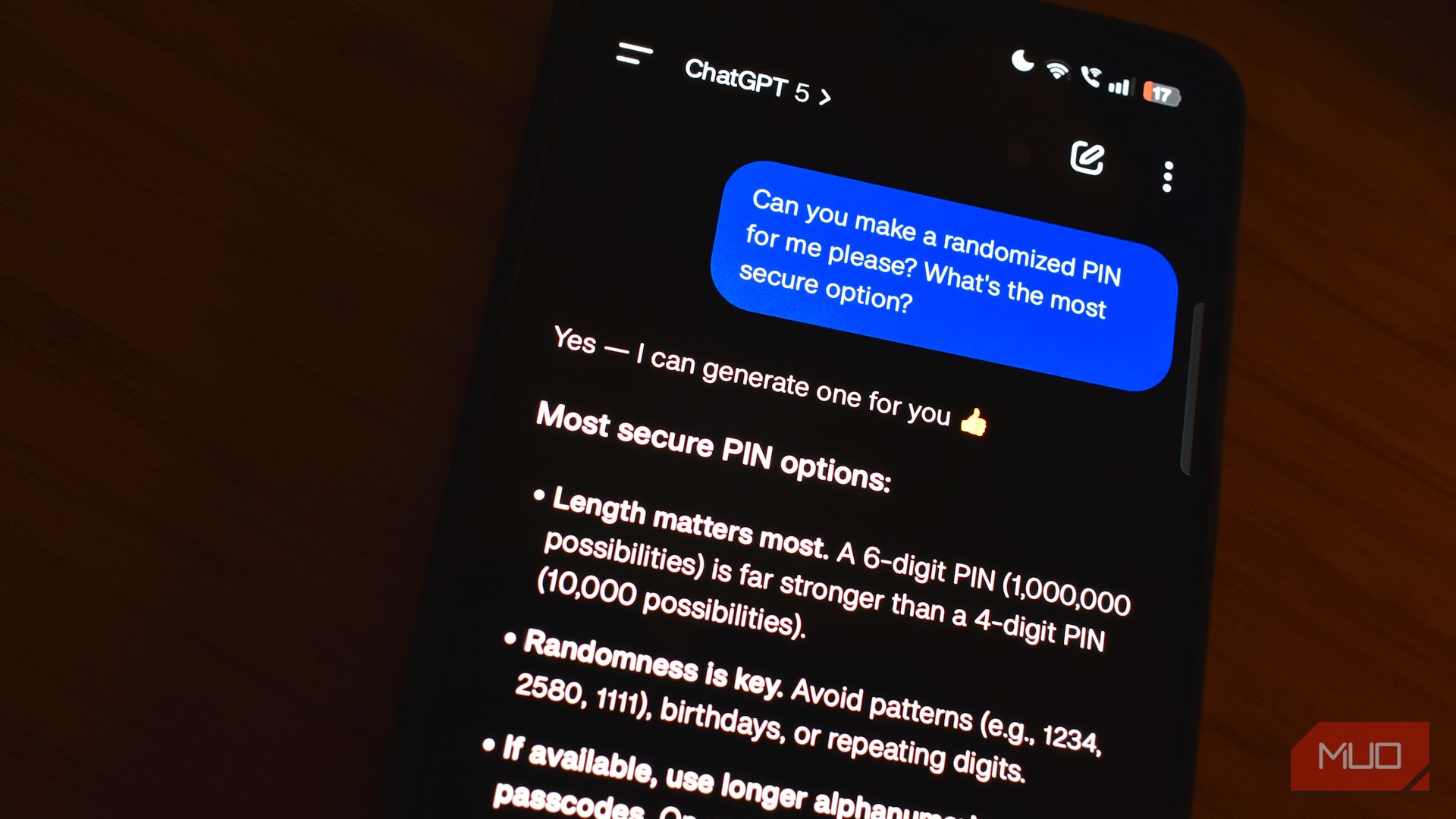

How I Accidentally Handed My PIN to an AI

When ChatGPT finally made the advanced voice feature available on my phone, I immediately set it as my default voice assistant. Amused at how human the assistant sounded, I never thought twice about using it to answer technical questions and for several other tasks that relied on ChatGPT’s live voice and vision. Plus, I’ve always liked that I didn’t have to type or stop to think about how to phrase things when using a voice assistant.

During one of these sessions, I remember venting about a persistent Windows Hello problem. My computer kept losing my PIN setup after every system update, forcing me to recreate it repeatedly. After going through yet another irritating PIN reset cycle, I finally decided to change my PIN. And since the conversation was flowing so naturally, I casually asked ChatGPT to save my PIN in case I forgot it.

I was so used to using Google Assistant (now retired) that I completely forgot I had switched to ChatGPT a few days earlier. Not that sharing a PIN with Google Assistant would have been a good idea, but sharing such data with ChatGPT and its Memory feature just ensured that my PIN was stored indefinitely. In my mind, I was asking my usual assistant to store some information for convenience, the same way I might save a reminder or note.

A Deeper Investigation Showed Me How Vulnerable I Was

I’m pretty open about letting people use my PC for quick tasks. But knowing and understanding the capabilities of ChatGPT’s Memory feature and what it entailed made me anxious. What if someone had checked the Memory logs and found my PIN? What if they had asked questions about my past conversations? The thought made me immediately dive into ChatGPT’s Memory to see exactly what was stored.

ChatGPT had logged far more than just my PIN. It remembered details I’d completely forgotten sharing, like the places I usually visit, that I use my Windows PC for online banking, that I often step away from my computer without locking it during short breaks, and other personal information I wouldn’t want to share with anyone.

The Memory feature painted a detailed picture of my digital life and habits. Although ChatGPT has measures to filter out sensitive data, we can still inadvertently store personal information because we are so accustomed to using AI chatbots and assistants that we often forget to be cautious. Looking through the saved memories made me realize how much we casually share with AI assistants. Even small details can build into a comprehensive profile that could be risky in the wrong hands.

How My PIN Could Have Potentially Connected to My Passwords

The real danger became clear when I started thinking like a hacker. My Windows PIN wasn’t just a number for unlocking my screen. It was the key to everything on my most trusted device.

Windows PINs create a false sense of security because they seem device-specific, but they actually unlock far more than people realize. Once someone has access to your Windows machine through your PIN, they can potentially access saved passwords in browsers, password managers that use Windows Hello integration, and any applications that rely on Windows authentication.

My computer had become my personal vault. Chrome stored dozens of work and personal passwords that autofilled with just Windows authentication. The most concerning realization was that my Windows PC served as one of my primary two-factor authentication devices. I had set up several accounts to send 2FA codes to Microsoft Authenticator on that same machine. I also used Windows Hello to approve authentication requests for various services.

An attacker with my PIN could potentially access my computer, extract saved passwords, intercept 2FA codes, and even approve authentication requests for other services. That simple four-digit code could cascade into complete account takeovers.

What I Do Now to Protect Myself

My accidental AI disclosure forced me to completely rethink how I interact with AI systems. The experience taught me that protecting myself requires both immediate damage control and smarter prevention habits.

First, I changed my Windows PIN immediately and separated critical passwords into a standalone password manager requiring manual entry. Important accounts now use hardware security keys instead of device-based authentication.

Then I audited ChatGPT’s Memory by going to Settings > Personalization > Memory and selecting Manage, where I deleted the entries I didn’t want stored. Deleting them removed the sensitive info ChatGPT had saved about me.

Now I use ChatGPT’s Temporary Chat feature for any technical discussions. This “incognito mode” doesn’t save conversations, use Memory, or contribute to training. I click the “Temporary” button before discussing anything potentially sensitive.

There are many ways to prevent your data from being logged in ChatGPT, but auditing Memory and using Temporary Chat are among the more proactive ways to keep it from leaking from these services.

Overall, this experience taught me that modern security isn’t just about strong passwords and good software updates. It’s also about understanding how different systems connect and where casual information sharing can create unexpected vulnerabilities. AI memory features are incredibly useful, but they require the same careful consideration we give to any other system that stores our personal data.