One of the best decisions that Obsidian made was not to push AI integration features onto its users. Despite that, I still wanted AI in my Obsidian vault, and I got it—all for free and with zero subscriptions.

Why would I want an LLM in Obsidian?

I want AI because I’ve seen what NotebookLM can do. When NotebookLM released its mind maps, I worried that I’d have to switch from Obsidian to NotebookLM. That thought didn’t last long, though. I’m still on Obsidian and not going anywhere.

But being able to ask questions and get answers straight from your own notes is a really tempting feature. I wanted that in Obsidian. I already pay for Obsidian Sync, so I wasn’t about to spend more on an API key or pay $20 a month for a Notion AI subscription.

I keep saying AI, but I really mean LLMs. You get the idea. Running them isn’t cheap, and no company is handing out unlimited access for free. If Obsidian ever added built-in AI, it would almost certainly come with a “Premium” tier—just like Notion did.

My Obsidian vault is fully available offline. Relying on an online tool meant that my vault wouldn’t be truly local. There are already plugins for Obsidian that let you integrate AI via an OpenAI API key, but again, that’s online, and costs money. On top of that, my vault is full of personal notes—and I’m not about to ship all of that off to a company like Google or OpenAI. And finally, there’s no way I’m dragging 2,500 Markdown files into ChatGPT or NotebookLM.

All that said, imagine my grin when I stumbled across a plugin that hooks Obsidian up to a local AI model.

Setting up PrivateAI for Obsidian

It’s easier than you think

Install the PrivateAI plugin from the Obsidian marketplace. It’s free and lightweight. Once installed, enable it, and you’re done with the Obsidian side of things.

The plugin bridges your notes to the AI model. You’ve got the notes and the bridge, so now the missing piece is the model itself. Installing a local AI model isn’t daunting; it’s just like installing any other program.

Use LM Studio for this. Download and install LM Studio from its website, then set it up. Pick a model that fits your specs; my recommendation is the largest model that LM Studio marks as suitable for your hardware. I use openai/gpt-oss-20b for Obsidian with great results. Lighter 4B or 8B models didn’t perform as well. With LM Studio open, toggle the server to Running, then load the model by pressing Ctrl + L, and that’s it! Your AI is now reachable at 127.0.0.1:1234.
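If you want to confirm the server is really up before going back to Obsidian, a short script can ask it which models it exposes. This is a minimal sketch, assuming LM Studio's OpenAI-compatible `/v1/models` endpoint at the address above. The helper names (`models_url`, `parse_model_ids`, `list_models`) are my own and not part of LM Studio or PrivateAI.

```python
import json
import urllib.request

# Address from this article; adjust it if you changed the port in LM Studio.
BASE_URL = "http://127.0.0.1:1234"


def models_url(base_url: str = BASE_URL) -> str:
    """Return the URL of the OpenAI-compatible model listing endpoint."""
    return base_url.rstrip("/") + "/v1/models"


def parse_model_ids(payload: dict) -> list[str]:
    """Extract model identifiers from a /v1/models style response."""
    return [entry["id"] for entry in payload.get("data", [])]


def list_models(base_url: str = BASE_URL, timeout: float = 5.0) -> list[str]:
    """Ask the running server which models it exposes."""
    with urllib.request.urlopen(models_url(base_url), timeout=timeout) as resp:
        return parse_model_ids(json.load(resp))


if __name__ == "__main__":
    try:
        print(list_models())
    except OSError as err:  # covers connection refused, timeouts, wrong port
        print(f"Server not reachable: {err}")
```

If the script prints a connection error, the server toggle in LM Studio is most likely still off.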

Keep Serve on Local Network toggled off in the server settings; otherwise, other computers on your network could access your AI server.

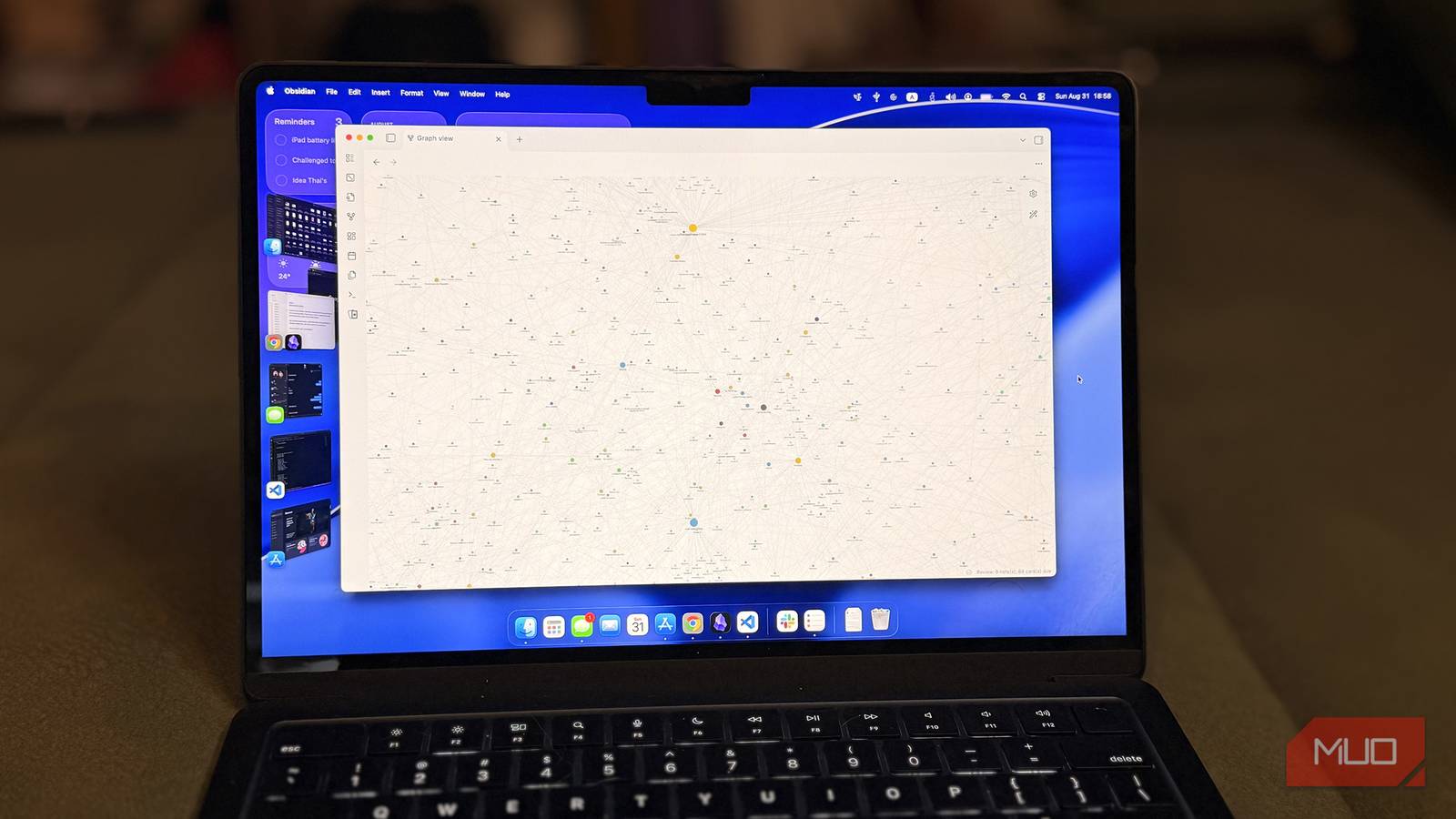

PrivateAI defaults to this address, so you’re good. Close Obsidian and launch it again. In LM Studio’s logs, you’ll see a flood of embed requests; that’s all your notes being indexed. I had 2,500 notes in my vault, so it took a while to generate the embeddings.
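To picture what that flood of embed requests might look like, here is a rough sketch of indexing a vault in batches. It assumes the OpenAI-style `/v1/embeddings` request shape (`model` plus `input`) that LM Studio's server accepts. How PrivateAI actually chunks and batches notes is not something I've verified, and the model name, batch size, and function names are placeholders of mine.

```python
import json
from pathlib import Path

# Hypothetical values for illustration only.
EMBEDDINGS_URL = "http://127.0.0.1:1234/v1/embeddings"
EMBED_MODEL = "hypothetical-embedding-model"


def read_notes(vault: Path) -> dict[str, str]:
    """Map each Markdown file's vault-relative path to its text."""
    return {
        str(path.relative_to(vault)): path.read_text(encoding="utf-8")
        for path in sorted(vault.rglob("*.md"))
    }


def batches(items: list[str], size: int) -> list[list[str]]:
    """Split items into consecutive groups of at most `size` elements."""
    if size <= 0:
        raise ValueError("size must be positive")
    return [items[i:i + size] for i in range(0, len(items), size)]


def embedding_request(texts: list[str], model: str = EMBED_MODEL) -> bytes:
    """Build the JSON body for one OpenAI-style embeddings call."""
    return json.dumps({"model": model, "input": texts}).encode("utf-8")
```

With 2,500 notes and a batch size of 32, this scheme would produce 79 requests, which gives a sense of why the first indexing run is slow.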

When done, click the tiny robot icon on the Obsidian sidebar—it opens the chat pane where you can interact with the LLM. Switch the context from open tabs to all notes to chat with your entire vault.

What can you do with your new local LLM?

The possibilities are endless

Now that I have AI in my Obsidian vault, what do I use it for? The sky’s the limit, really. Any analysis, manipulation, improvement, or query—just run it by the AI. The simplest use case is to chat with it. Ask any question and get answers from your notes, similar to NotebookLM. I can also switch the context to my entire vault. The AI will search for the relevant notes and use them as context.
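The "search for the relevant notes" step is typically done by comparing embedding vectors. The sketch below shows that general technique with cosine similarity over toy vectors. I'm assuming PrivateAI does something along these lines, but the function names and the ranking details are illustrative, not taken from the plugin.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_notes(query_vec: list[float],
              note_vecs: dict[str, list[float]],
              k: int = 3) -> list[str]:
    """Return the names of the k notes most similar to the query vector."""
    ranked = sorted(
        note_vecs,
        key=lambda name: cosine(query_vec, note_vecs[name]),
        reverse=True,
    )
    return ranked[:k]


if __name__ == "__main__":
    # Toy 2-dimensional "embeddings"; real ones have hundreds of dimensions.
    notes = {"recipes.md": [1.0, 0.0], "taxes.md": [0.0, 1.0], "meal-plan.md": [1.0, 1.0]}
    print(top_notes([1.0, 0.0], notes, k=2))
```

The text of the top-ranked notes is then pasted into the prompt as context, which is why answers stay grounded in your own vault.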

In one example, I asked a question that wasn’t answered in the open context. Instead of saying “I can’t find anything,” the LLM made up an answer. A quick tweak in the settings fixed that. I added to the custom instructions, “If you can’t find the answer to a query in the provided context, say that you couldn’t find it.”

Now it honestly reports missing information while still drawing on its training data for a best‑effort answer.

This shows that even a local LLM can hallucinate. Always double‑check AI answers—that should be a habit now.
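For the curious, a custom instruction like the one above usually ends up in the system message of the chat request, next to the retrieved notes. Here is a minimal sketch of that idea using the OpenAI-style message format. The exact prompt layout PrivateAI uses is an assumption on my part, and `build_messages` is a hypothetical helper.

```python
# The custom instruction quoted earlier in this section.
NOT_FOUND_RULE = (
    "If you can't find the answer to a query in the provided context, "
    "say that you couldn't find it."
)


def build_messages(question: str,
                   context_notes: list[str],
                   rule: str = NOT_FOUND_RULE) -> list[dict]:
    """Combine the rule, the retrieved notes, and the question into chat messages."""
    if context_notes:
        context = "\n\n---\n\n".join(context_notes)
    else:
        context = "(no notes retrieved)"
    return [
        {"role": "system", "content": f"{rule}\n\nContext:\n{context}"},
        {"role": "user", "content": question},
    ]
```

An instruction like this lowers the odds of a made-up answer but does not eliminate them, so the double-checking habit still applies.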

It’s not just about Q&A

Go beyond questions

One of my favorite use cases for AI is formatting text. Suppose I had a spreadsheet with all entries in uppercase and wanted them in lowercase. I could do it myself, write a formula, or… just ask AI. The same applies here.

For example, I wrote the note below during class and never got to format it with headings. I can simply ask PrivateAI to do it for me.

I use the Spaced Repetition Obsidian plugin to create flashcards right in Obsidian. The old workflow involved splitting notes, reading them, and creating flashcards in a separate flashcard app. Now, I just ask PrivateAI to generate them—its results are wonderful.

Just to hint at the possibilities, I took it a step further. I asked the AI to review my journal entries to find the most common complaint. I’m sure you’ll understand that I blurred the results for privacy, but take my word that the AI did a great job—quite insightful. I’d never upload my entire journal to an AI—at least not when AI meant handing everything to Big Tech. But that’s not the case anymore.

There are plenty of technical settings to explore in both PrivateAI and LM Studio. I could tweak the temperature, adjust the RAG system, toggle settings, and likely improve responses further. For now, it works great: my computer takes about two seconds to respond, which is faster than ChatGPT, although ChatGPT is the more capable model.
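As an example of one of those knobs, temperature is a field in an OpenAI-style chat completion request, and lower values make answers more deterministic. The sketch below only builds the request body you would POST to `/v1/chat/completions`. The default of 0.2 is an arbitrary choice of mine, and `chat_payload` is a hypothetical helper rather than anything PrivateAI exposes.

```python
import json


def chat_payload(messages: list[dict],
                 model: str = "openai/gpt-oss-20b",
                 temperature: float = 0.2) -> str:
    """Build the JSON body for an OpenAI-style chat completion request."""
    # The OpenAI API documents temperature as a value between 0 and 2.
    if not 0.0 <= temperature <= 2.0:
        raise ValueError("temperature must be between 0 and 2")
    return json.dumps({
        "model": model,
        "messages": messages,
        "temperature": temperature,
    })


if __name__ == "__main__":
    question = [{"role": "user", "content": "Summarize my notes on sleep."}]
    print(chat_payload(question, temperature=0.0))
```

For question answering over notes, a low temperature is usually the safer starting point; higher values suit brainstorming.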