In our view, the Intel–Nvidia pact further accentuates Nvidia Corp.’s dominant market position and represents a milestone in the transition to the next era of computing.

Just as Intel Corp. had a lock on the market in the ’80s and ’90s, Nvidia has now extended its moat deep into the x86 ecosystem (in both PCs and the data center) and expanded its total available market by $500 billion, according to our estimates. The subtext here is that Intel Chief Executive Lip-Bu Tan is taking necessary steps to save Intel. In doing so, he’s hitching the company’s wagon to the future, which is being defined by CUDA, Nvidia’s programming model and platform.

This move, by our estimates, also increases Intel’s TAM by roughly $100 billion and breathes new life into the x86 franchise, which was rapidly deteriorating. Our economic models suggest that Intel Foundry remains a significant drag on the company’s turnaround, but it will get a much-needed boost from the system-on-chip products that this partnership will produce. On balance, we see this as a win for both companies and x86 customers and a further tailwind for artificial intelligence’s momentum.

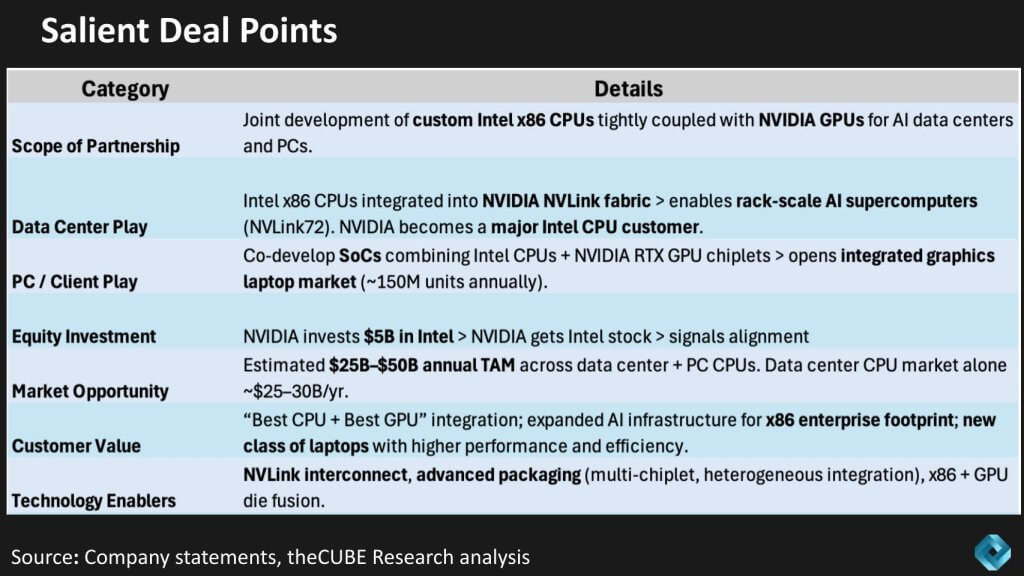

In this Breaking Analysis, we review the salient deal points, forecast the economic impact of the partnership in both the PC and data center sectors, break down the strategic implications for Intel, Nvidia, Advanced Micro Devices Inc., Arm Ltd. and the hyperscalers, and close with what to watch going forward.

Our advice to Intel in June 2025

We’ll start with a look back at what we said in June of this year. In a research note titled Inside Intel’s Bid to Rewire Its Destiny, we suggested that Intel should partner with Nvidia – basically hitch its wagon to CUDA – and lean into adopting Nvidia’s standards. Though we didn’t expect this investment by Nvidia, what happened this week aligns directionally with that advice, although the two companies took it much further to include data center opportunities, which we assess in this research piece.

A key element of this deal is the hybrid x86-CUDA stack, which we dig into in this post.

The deal

We believe the Intel-Nvidia pact should be understood as a systems bet, not a point-product tie-up. The companies are effectively standardizing on a CUDA-first, x86-compatible architecture that spans rack-scale AI in the data center and integrated graphics in client devices. By anchoring the design to NVLink, advanced packaging and chiplets, the partnership moves competition from individual components to tightly coupled central processing unit/graphics processing unit (CPU-GPU) subsystems, where software stickiness (CUDA) and integration economics are key. In our opinion, though the equity component is helpful for Intel, it also signals a commitment to align roadmaps, which reduces the risk that this becomes a short-lived OEM arrangement.

Our analysis indicates the near-term value is twofold. First, it gives Nvidia a large, stable x86 partner for heterogeneous systems where CPU proximity and memory semantics matter, while preserving TSMC as the likely manufacturing source for leading dies. Second, it gives Intel a credible path to participate in accelerated computing demand – both in the rack and in AI PCs – without having to own the full GPU software stack. If executed, Intel’s SoC assembly that incorporates Nvidia GPU chiplets could increase unit throughput for Intel’s manufacturing business and create a repeatable pattern for x86-centric enterprises that want CUDA without ripping and replacing existing software and hardware estates.

We see the following implications for enterprise architects and investors:

- If successful, procurement will shift toward prevalidated CPU-GPU “systems SKUs,” shortening time-to-production for enterprise AI workloads.

- The PC angle: If even a modest piece of the claimed ~150 million units tilts toward AI-ready SoCs, software distribution and developer affinity will follow.

- Foundry ambiguity remains a major concern for us. We see the most likely split as TSMC for leading dies and Intel for SoC integration, which partially balances risk as volume increases. Intel Foundry is almost completely reliant on Intel’s own captive demand; this deal doesn’t solve the foundry quandary, but it certainly doesn’t hurt.

The bottom line, in our view, is that this agreement formalizes the baton pass from x86-only designs to CUDA-centric systems, with Intel leveraging packaging and scale to stay in the race and Nvidia extending its moat by making x86 a first-class citizen on its fabric. It also gives Nvidia an x86-to-CUDA bridge into enterprise AI, which we project as the next tranche of demand beyond hyperscalers and neoclouds.

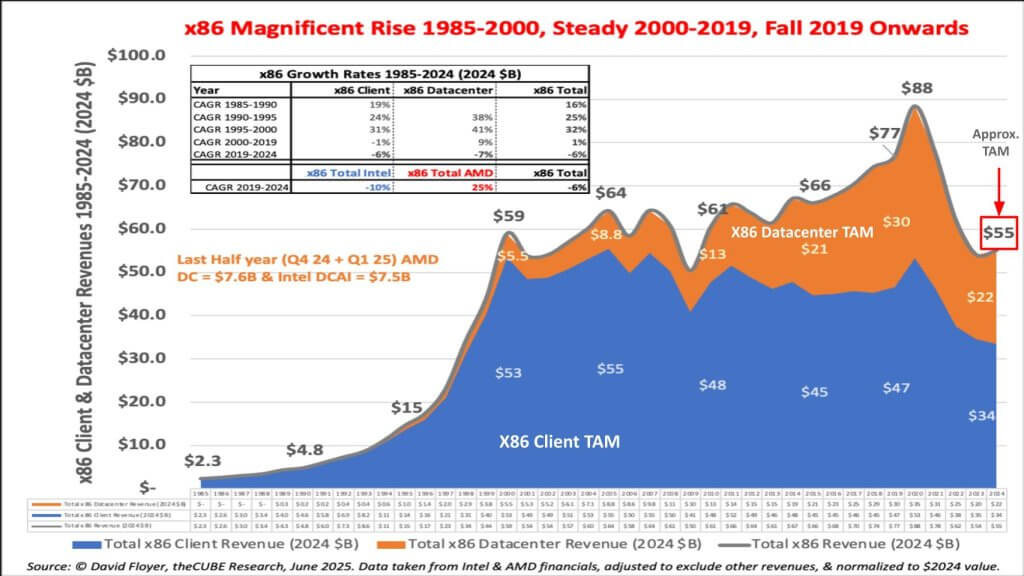

Assessing the hybrid x86-CUDA TAM

The chart below shows the arc of x86’s history and its massive ascendancy in both client (blue) and data center (brown). You can see the upward trajectory in the ’80s and ’90s, then the peak in the COVID era, followed by a rapid decline. Note that PC revenue peaked in the early part of the last decade, which was the canary in the coal mine for Intel. COVID provided a “dead cat bounce” for x86 PCs, but volume has since decelerated. As we’ve reported ad nauseam, the loss of volume leadership goes to the heart of Intel’s foundry troubles.

We had that TAM pegged last year at $55 billion, while the companies indicated a $25 billion to $50 billion market opportunity. Our data below includes total spend, which counts revenue captured by the likes of Dell Technologies Inc., HP Inc., Hewlett Packard Enterprise Co., Super Micro Computer Inc. and so on, so in that sense it aligns with company statements. But we think there’s much more to the opportunity, as we explain later.

We believe the more important story behind the x86 TAM isn’t the historical arc; it’s the new access model. Nvidia now has a clearer onramp to the x86 installed base, both in racks and in clients, by making CUDA a first-class citizen on custom Intel CPUs and co-developed SoCs. In our view, the companies’ $25 billion to $50 billion opportunity range maps reasonably to the post-COVID-reset market size shown above, with the mix shifting toward accelerated, CUDA-addressable systems rather than stand-alone CPUs.

- Our assumption is that value capture migrates from “CPU-only” designs toward tightly integrated CPU-GPU subsystems, where software lock-in and packaging economics dominate.

- Intel, under pressure from AMD and Arm, gains a credible CUDA pathway; Nvidia gains a direct route into x86 estates that previously defaulted to Intel-centric solutions.

But the TAM is much larger in our view. The following points underscore the assumptions behind our forecast of a TAM expansion of $500 billion to $1 trillion for Nvidia and roughly $100 billion for Intel:

- We assume the AI marketplace will grow because it will now be easier for x86 estates to migrate to CUDA, enabling enterprises to get to AI faster. In our view, this both accelerates and expands the TAM.

- CUDA is the main driver, and we assume the PC market will accelerate and reverse its decline. This is part of the total marketplace for both Intel and Nvidia, as we expect both to take share from AMD. In addition, a larger portion of the PCs being developed will be built on the joint SoCs, supporting Intel’s foundry aspirations.

Bottom line: We see this agreement converting a declining x86 market into a CUDA-expandable one. If execution holds, x86 stops being a managed-decline business and becomes a distribution channel for Nvidia’s platform while restoring relevance for Intel through SoC integration, a hybrid bridge to enterprise AI and volume manufacturing for Intel and TSMC.

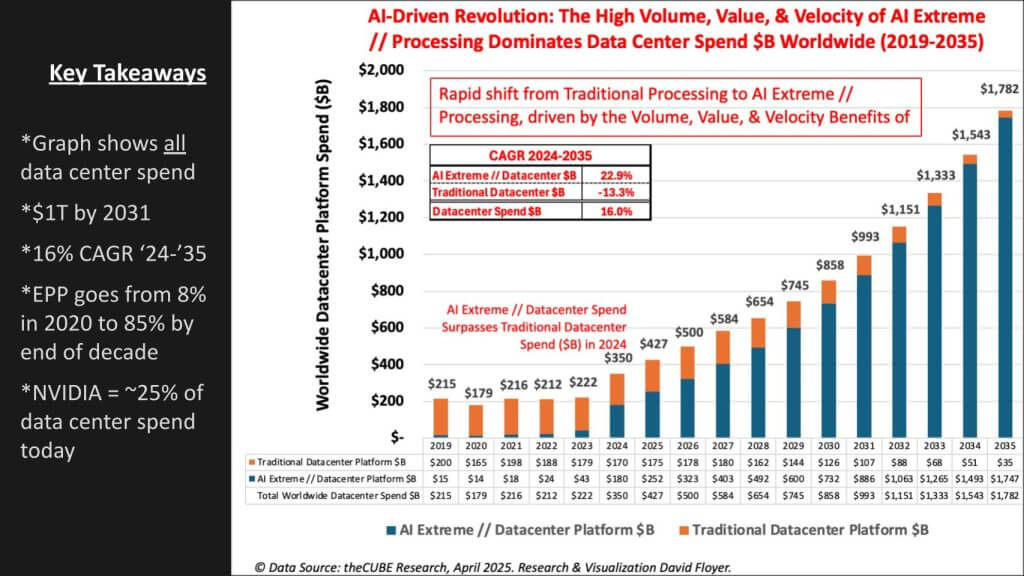

The data center supercycle gets another lift: A hybrid x86-CUDA stack

We believe the data center story here is not only about the massive absolute dollars but also about the mix shift. The slide below accentuates the dominant trend: “AI Extreme” (accelerated/parallel) workloads overtake traditional x86 processing and compound through the decade. The data clearly indicates this is the demand vector Nvidia has already turned in its favor. Today the buildout is concentrated in hyperscaler CapEx, while enterprises are still testing the water. The Intel partnership creates a practical on-ramp in the form of a CUDA-first stack that remains x86-compatible, minimizing code migration risk and letting general-purpose estates step into acceleration without an Arm rewrite. In our view, that bridge pairs CUDA’s software gravity with the operational familiarity of x86.

- Execution focus shifts from chip selection to prevalidated CPU-GPU systems, shortening time-to-production for enterprise AI.

- By reducing porting friction, x86-CUDA eases the path for core workloads (databases, analytics, app servers) to adopt acceleration alongside traditional compute.

Key takeaway: Our research indicates Nvidia keeps its platform advantage as “AI Extreme” becomes the default, while Intel gains relevance by turning x86 into a first-class transport for CUDA. The result is a faster enterprise ramp – beyond hyperscalers and neoclouds – without forcing a wholesale architectural migration.

Numbers tell the story of the transition to ‘AI Extreme’

We believe the latest earnings prints from Intel and Nvidia crystallize the transition visible in the market data. Nvidia now generates roughly 10X Intel’s data center revenue and about 4X its total revenue, while Intel’s relative strength sits in consumer PCs, a profit pool that the joint SoC plan explicitly targets. In our view, this is the practical end of Intel’s x86-era monopoly, with value accruing to accelerated systems and the software that makes them productive.

Without a platform pivot, Intel’s multi-year revenue decline (roughly down mid- to single-digits) would have constrained its ability to sustain a leading-edge foundry. Partnering with Nvidia gives Intel a somewhat viable path to volume and relevance in the very segment that is expanding fastest, while giving Nvidia access to x86 distribution where PCs and enterprise estates remain entrenched.

Though we remain skeptical of Intel’s ability to become a viable leading-edge alternative to TSMC, this partnership is the first positive sign we’ve seen in years.

Bottom line: Our research indicates the SoC roadmap is the key factor for the PC market. If the companies can execute to plan, Nvidia can translate data center leadership into client market share, using x86 compatibility as the bridge.

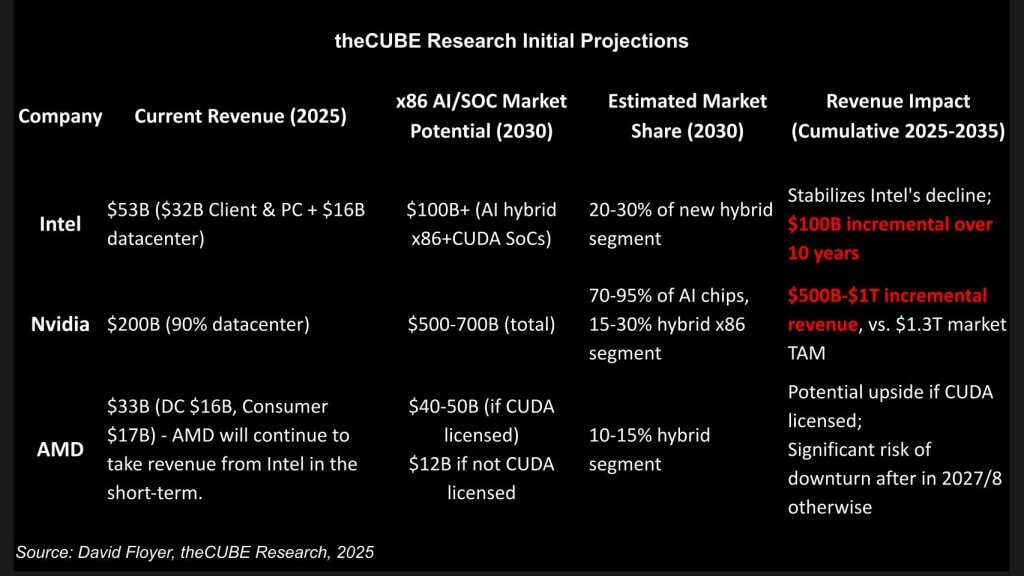

2025-2035: Nvidia’s and Intel’s TAM expands, AMD is boxed in

We believe the road ahead shows a stark reality. Nvidia’s 2025 revenue run-rate is already more than twice that of Intel and AMD combined, and this deal tilts the next leg of growth further toward CUDA-centered systems. Our research indicates Intel’s opportunity expands by roughly $100 billion in the forecast period, but that expansion is less about reclaiming GPU leadership and more about stabilizing client and data center share through x86-CUDA SoCs and somewhat higher foundry throughput. Nvidia’s upside is an order of magnitude larger: a platform TAM expansion that could surpass $500 billion and approach $1 trillion as accelerated systems become the default footprint in both clouds and enterprises.

AMD, by contrast, is boxed in under our scenario. It will likely keep taking CPU share in the near term as the market transitions, but its risk profile increases after 2027 if it cannot secure a durable position in the CUDA wave. In our view, a CUDA licensing path for AMD is both strategically rational for Nvidia (introducing a credible second source that moderates Intel’s chiplet pricing power) and politically pragmatic given historical antitrust precedents that pushed IBM, Microsoft and Intel to open interfaces and preserve competition.

The following additional points are noteworthy:

- Sourcing dynamics shift: Intel can become Nvidia’s manufacturing/SoC second source, pushing AMD toward third place unless it gains CUDA access.

- Policy tailwinds matter: If Nvidia doesn’t voluntarily broaden CUDA access, regulators could compel it to do so, accelerating AMD’s path but reinforcing CUDA’s centrality.

Key takeaway: The center of gravity moves to CUDA hybrids. Intel regains relevance through packaging and volume; Nvidia compounds platform economics; AMD must either plug into CUDA or risk stiff headwinds in our forecast scenario.

Strategic impact and implications

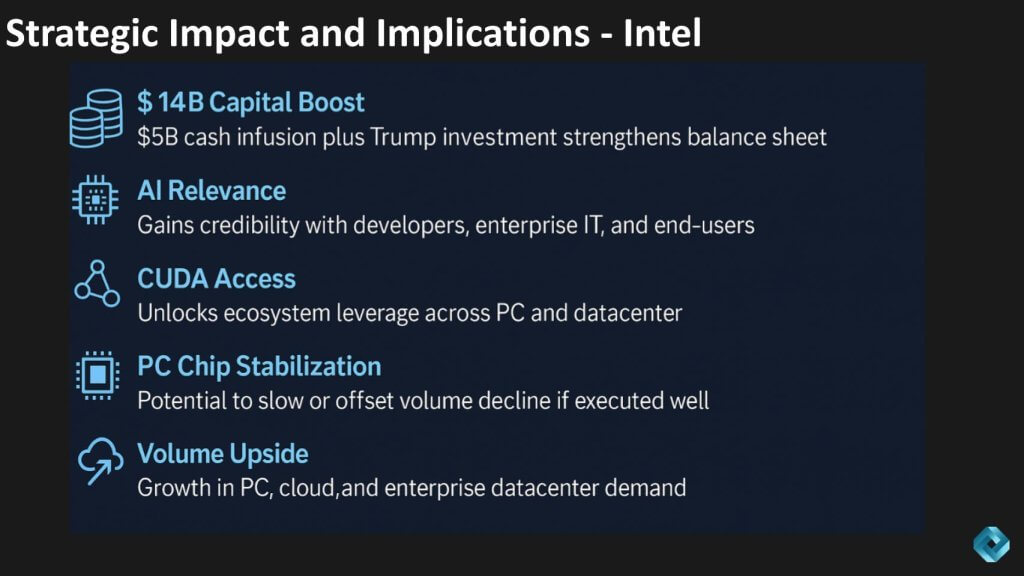

Intel

We believe this deal gives Intel something it hasn’t had in years – a credible narrative in accelerated computing that resonates with developers and enterprise information technology. The immediate cash infusion helps, but the strategic implication is access to CUDA at both ends of the stack – PCs and the data center. That access restores relevance with the people who actually determine workload investments.

If Intel executes on x86-CUDA SoCs and rack-scale integration, unit volumes can stabilize in client and rise in server packages, even if leading-edge die work remains with TSMC. We remain cautious on the foundry turnaround; the pact doesn’t solve Intel’s process gap. But it does create repeatable, shippable systems Intel can assemble at scale, which is the right bridge while process technology catches up.

In addition, we make the following observations:

- Our assumptions are that CUDA becomes the de facto system application programming interface for new AI-first workloads, recreating for Nvidia the developer lock-in Intel once enjoyed, with Intel as a distribution partner rather than the platform owner.

- The net for Intel is regained relevance with developers, a path to volume that supports manufacturing economics, and time to repair foundry competitiveness, provided it ships compelling SoCs on schedule.

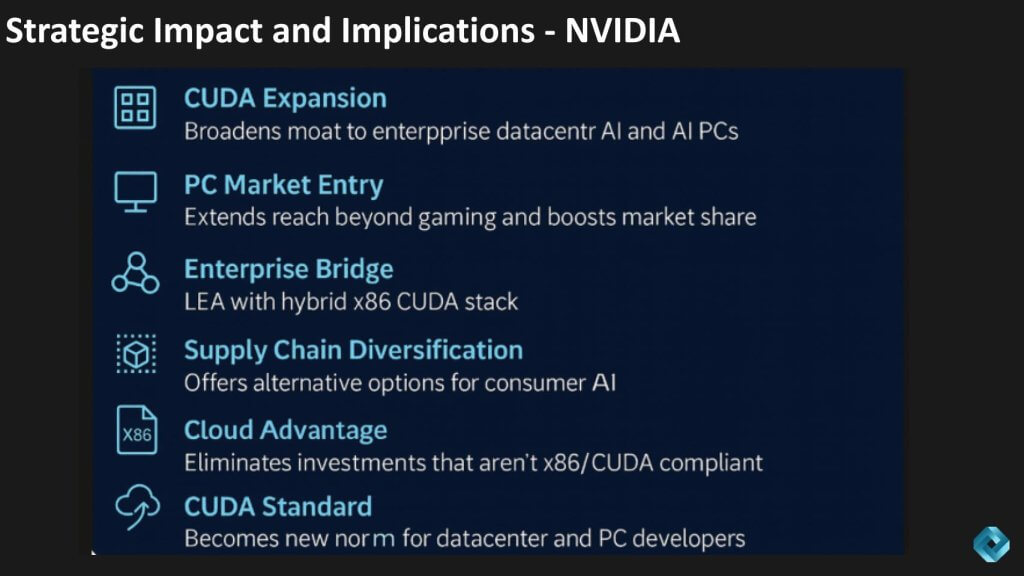

Nvidia

We believe this pact turns CUDA from a data center phenomenon into a full-stack standard that spans enterprise AI and mainstream PCs. Nvidia gains a distribution partner with unmatched x86 reach, converting its software gravity into new surface area in the form of client SoCs, rack-scale x86-CUDA hybrids and an easier onramp for enterprises that have been slow to adopt AI. In our view, that bridge is a potential accelerant, letting customers keep x86 familiarity while stepping into accelerated computing, which further broadens Nvidia’s moat and weakens bespoke alternatives.

Two near-term effects stand out: 1) Nvidia enters the mainstream PC profit pool directly with x86-CUDA laptops that extend beyond gaming into volume client markets; and 2) the partnership pressures cloud-specific silicon strategies that aren’t CUDA-compatible. We don’t expect hyperscaler alternatives to disappear, but we do expect large-scale bets outside CUDA to narrow until (or unless) they gain first-class CUDA status.

Bottom line: Our research indicates CUDA becomes the default development platform for data center and AI PCs, with Intel acting as the strategic partner rather than a desperate competitor. Nvidia trades some control for much more surface area, turning x86 estates into a distribution channel for its platform. Genius.

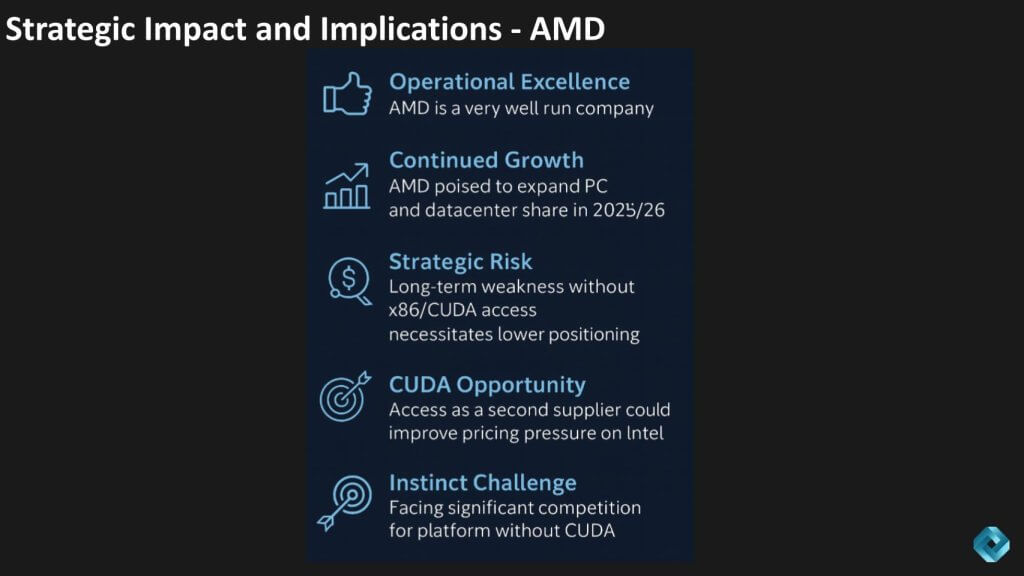

AMD

We believe AMD remains one of the industry’s best operators and is positioned to keep taking near-term share in PCs and x86 data center markets. It is unburdened by a captive foundry and supported by solid product execution. The strategic problem is medium-term: the center of gravity is shifting to CUDA-aligned hybrids, and Intel now has a clearer x86-CUDA lane that AMD lacks. Without first-class CUDA access, AMD’s addressable market narrows as enterprises and OEMs standardize on prevalidated CPU-GPU systems with CUDA as the de facto API. AMD’s Instinct accelerators have shown real progress with the company’s own software stack, but once CUDA becomes native to x86, the rationale for a parallel ecosystem weakens and the investment case gets harder beyond the next couple of years.

- Our research indicates the upside path is straightforward: If AMD secures CUDA access as a second supplier, it would keep AMD relevant in the fastest-growing segment and give Nvidia added pricing leverage against Intel in chiplet negotiations.

Bottom line: AMD can still grow through 2025–26 on execution and x86 share gains, but sustaining that trajectory post-2027 likely requires a key role inside the CUDA wave. Without it, we see a tougher slope for Instinct and a gradual repositioning to the lower tier of accelerated platforms. Open-source CUDA alternatives remain a wildcard, but we currently see them as niche plays in specific segments, such as high-performance computing, that are unlikely to disrupt CUDA in the near term.

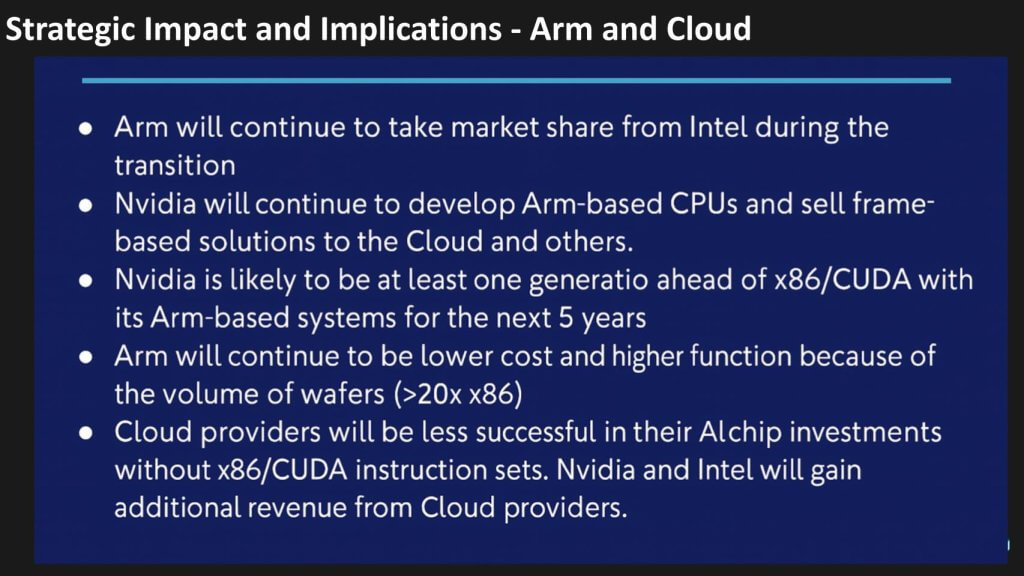

Arm and hyperscalers

Despite pundit commentary to the contrary, we believe Arm is a clear beneficiary, not a casualty. Nvidia will continue to ship Arm-based processors and full frames to clouds and OEMs even as it pursues x86-CUDA hybrids with Intel. In our view, Arm keeps its structural advantages – faster tape-outs, lower cost per function, low power, strong ecosystem and massive wafer volume – and remains at least a generation ahead on time-to-market for many workloads.

The result is a dual-track Nvidia roadmap: Arm systems that push performance per watt and cost efficiency, alongside x86-CUDA systems that maximize compatibility and enterprise adoption. We expect hyperscalers to continue to thrive in AI, but we see friction in their custom silicon initiatives. They want less dependency on Nvidia, yet the economics and software gravity of CUDA narrow the window for off-stack alternatives.

- Expect clouds to keep investing in custom silicon, but large-scale deployments that aren’t first-class with CUDA will likely be more targeted and slower to scale.

- As CUDA becomes the default API, both Arm and x86 footprints can expand – with Arm winning on speed and cost, and x86 winning on familiarity and distribution.

Bottom line: Our research indicates there are no obvious losers here. Arm rides the same AI wave with superior cost/function curves, Nvidia monetizes both instruction sets, and clouds grow their TAM – just with fewer places to hide from CUDA’s center of gravity.

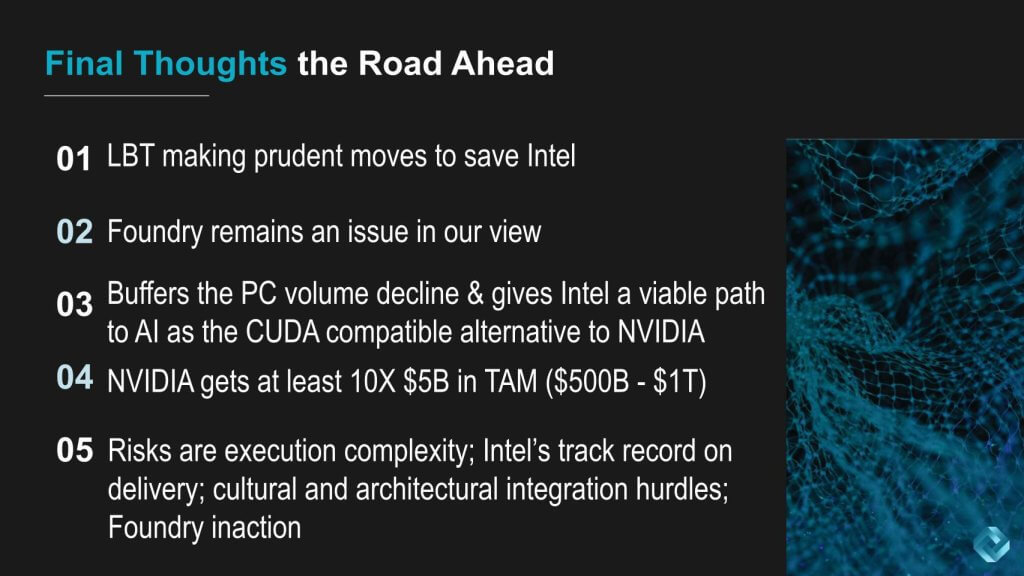

What’s ahead

We believe this pact resets competitive assumptions. Lip-Bu Tan is making a pragmatic move that gives Intel a credible lane back into accelerated computing without assuming unlimited cash or time. CUDA on x86 creates an immediate bridge for enterprises and PC OEMs, buffering client volume erosion and giving Intel a repeatable SoC product line to assemble at scale.

It does not, in our view, fix the process gap; foundry remains a structural overhang until Intel proves a sustained node cadence or forges a joint venture or partnership model that de-risks capital and attracts external customers. The most rational near-term split is still leading dies at TSMC, with Intel driving system integration and volume packaging.

We continue to favor a plan that spins off the foundry business.

For Nvidia, the math is compelling. A $5 billion equity check unlocks a distribution flywheel across PCs and enterprise data centers and extends CUDA’s reach into the one market where Nvidia has historically been underpenetrated. Our research indicates Nvidia captures a 10X larger TAM uplift versus its cash outlay as accelerated systems become the default footprint and x86 turns from competitor to onramp. The company trades a measure of control for far greater market surface area that we see as accretive to the platform.

Risks remain executional rather than conceptual in our scenarios. Intel must ship on schedule, align cultures, and keep the foundry story from constraining its velocity; Nvidia must balance openness with platform integrity and manage hyperscaler tensions. In our opinion, these risks are exceedingly manageable relative to the strategic upside.

The following two points are noteworthy:

- Net effect for operators: a simpler, CUDA-first on-ramp that preserves x86 familiarity and shortens time-to-production.

- Net effect for investors: Intel gains time and volume; Nvidia compounds software-led economics across a larger addressable installed base.

Bottom line: This deal represents the functional handoff from the x86 era to a CUDA-centric system era. Intel regains relevance through packaging, systems and distribution; Nvidia becomes the new default in AI infrastructure with a bigger, more durable TAM.

Disclaimer: All statements made regarding companies or securities are strictly beliefs, points of view and opinions held by News Media, Enterprise Technology Research, other guests on theCUBE and guest writers. Such statements are not recommendations by these individuals to buy, sell or hold any security. The content presented does not constitute investment advice and should not be used as the basis for any investment decision. You and only you are responsible for your investment decisions.

Disclosure: Many of the companies cited in Breaking Analysis are sponsors of theCUBE and/or clients of theCUBE Research. None of these firms or other companies have any editorial control over or advanced viewing of what’s published in Breaking Analysis.

Image: theCUBE Research/Gemini
