Karrot replaced its legacy recommendation system with a scalable architecture built on AWS services. The company sought to address tight coupling, limited scalability, and poor reliability in its previous solution, opting instead for a distributed, event-driven design.

Karrot, a leading platform for building local communities in Korea, uses a recommendation system to provide users with personalized content on the home screen. The system consists of a recommendation machine learning model and a feature platform that acts as a data store for users’ behavior history and article information. As the company evolved the recommendation system over recent years, adding new functionality became increasingly difficult, and the system began to suffer from limited scalability and poor data quality because feature storage and ingestion were fragmented.

The initial architecture of the recommendation system was tightly coupled with the flea market web application, with feature-specific logic hard-coded into it. Even though the architecture used scalable data services, such as Amazon Aurora, Amazon ElastiCache, and Amazon S3, sourcing data from multiple data stores led to data inconsistencies and made it difficult to introduce new content types, such as local community, jobs, and advertisements.

Karrot’s engineers, Hyeonho Kim, Jinhyeong Seo, and Minjae Kwon, explain the importance of using a unified, flexible, and scalable feature store:

> In ML-based systems, various high-quality input data (clicks, conversion actions, and so on) are considered a crucial element. These input data are typically called features. At Karrot, data, including user behavior logs, action logs, and status values, are collectively referred to as user features, and logs related to articles are called article features. To improve the accuracy of personalized recommendations, various types of features are needed. A system that can efficiently manage these features and quickly deliver them to ML recommendation models is essential.

The company set ambitious goals for the new feature platform, which had to support future product development and traffic growth. The team also defined technical requirements for serving and ingestion traffic, total data volume, and maximum record sizes.

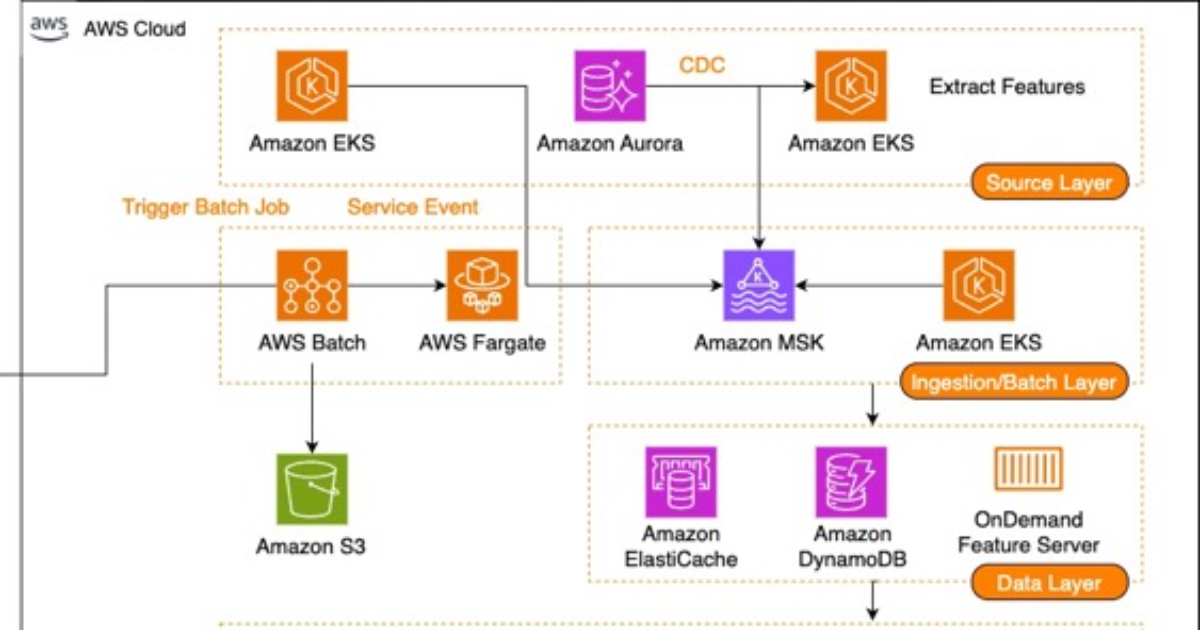

The New Architecture of Karrot’s Feature Platform (Source: AWS Architecture Blog)

The new architecture encompassed three main areas: feature serving, a stream ingestion pipeline, and a batch ingestion pipeline. The feature serving layer was responsible for making the latest feature data available to the recommendation engine. Weighing functional and technical requirements, engineers devised a multi-level caching solution with dedicated serving strategies tailored to the features’ characteristics. Small, frequently used data sets were served from in-memory caches on the Feature Server’s Amazon EKS pods. Medium-sized, moderately frequently used features were served from remote ElastiCache caches. Infrequently used feature types with large records were fetched directly from DynamoDB tables that shared a unified schema. Additionally, the team created a dedicated On-Demand Feature Server EKS service for features that were either dynamically computed or couldn’t be persisted due to compliance issues.
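The tiered serving strategy can be illustrated with a minimal sketch. It is not Karrot's implementation: plain dictionaries stand in for the in-pod cache, ElastiCache, and DynamoDB, and the class name, the `first_tier` parameter, the backfill behavior, and the feature keys are all assumptions made for illustration.

```python
from typing import Any, Dict, Optional

# Lookup order from fastest to slowest store (names are illustrative).
TIERS = ("local", "remote", "database")


class TieredFeatureReader:
    """Reads a feature from the first tier that has it, then backfills faster tiers."""

    def __init__(self, database: Dict[str, Any]) -> None:
        # Dicts stand in for the in-pod cache, ElastiCache, and DynamoDB.
        self.stores: Dict[str, Dict[str, Any]] = {
            "local": {},
            "remote": {},
            "database": database,
        }
        self.hits = {tier: 0 for tier in TIERS}

    def get(self, key: str, first_tier: str = "local") -> Optional[Any]:
        """Look up `key`, starting at `first_tier` and falling through to slower tiers."""
        start = TIERS.index(first_tier)
        searched = []
        for tier in TIERS[start:]:
            value = self.stores[tier].get(key)
            if value is not None:
                self.hits[tier] += 1
                # Copy the value into every faster tier that was searched and missed.
                for upper in searched:
                    self.stores[upper][key] = value
                return value
            searched.append(tier)
        return None


reader = TieredFeatureReader(
    database={
        "user:1:recent_clicks": [101, 102],        # small, hot feature
        "article:9:body_embedding": [0.1, 0.2],    # large, rarely read feature
    }
)
reader.get("user:1:recent_clicks")                          # database hit, then cached
reader.get("user:1:recent_clicks")                          # served from the local tier
reader.get("article:9:body_embedding", first_tier="database")  # bypasses both caches
print(reader.hits)
```

Choosing the starting tier per feature keeps large, rarely read records out of the caches, which is the trade-off the serving strategies above describe.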

While working on the serving layer, engineers had to address several common caching problems. They adopted the Probabilistic Early Expiration (PEE) technique, which refreshes popular entries shortly before they expire, to mitigate cache stampedes and improve latency. They also used separate soft and hard TTLs with jitter, together with write-through caching, to alleviate consistency problems, and negative caching to reduce unnecessary database queries.
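As a rough illustration of three of these techniques, the sketch below combines probabilistic early expiration, TTL jitter, and negative caching in a single in-process cache. It uses the commonly published form of the early-expiration test (refresh when `now - delta * beta * ln(rand)` reaches the expiry time); whether Karrot uses this exact formula is not stated in the source. The class, function, and parameter names are hypothetical, and soft/hard TTLs and write-through caching are left out to keep the example short.

```python
import math
import random
import time

_MISSING = object()  # sentinel stored for keys the loader could not find


def should_refresh_early(now, expiry, delta, beta=1.0, rng=random.random):
    """Return True if the entry should be recomputed before (or at) its expiry.

    `delta` is how long the last recomputation took; a larger `delta` or `beta`
    makes an early refresh more likely as `now` approaches `expiry`.
    """
    # 1 - rng() lies in (0, 1], so the logarithm is always defined and <= 0.
    return now - delta * beta * math.log(1.0 - rng()) >= expiry


def jittered_ttl(base_ttl, jitter_ratio=0.1, rng=random.random):
    """Spread expirations over base_ttl * (1 +/- jitter_ratio)."""
    return base_ttl * (1.0 + jitter_ratio * (2.0 * rng() - 1.0))


class FeatureCache:
    def __init__(self, loader, ttl=60.0, negative_ttl=5.0, beta=1.0,
                 clock=time.monotonic, rng=random.random):
        self.loader = loader            # returns the value, or None if absent
        self.ttl = ttl
        self.negative_ttl = negative_ttl
        self.beta = beta
        self.clock = clock
        self.rng = rng
        self._entries = {}              # key -> (value, expiry, delta)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is not None:
            value, expiry, delta = entry
            if not should_refresh_early(self.clock(), expiry, delta,
                                        self.beta, self.rng):
                return None if value is _MISSING else value
        # Miss, expired, or chosen for early refresh: reload and time the load.
        started = self.clock()
        loaded = self.loader(key)
        delta = self.clock() - started
        if loaded is None:
            # Negative caching: remember the miss briefly to spare the database.
            value, ttl = _MISSING, self.negative_ttl
        else:
            value, ttl = loaded, jittered_ttl(self.ttl, rng=self.rng)
        self._entries[key] = (value, self.clock() + ttl, delta)
        return loaded


cache = FeatureCache(loader={"user:1:clicks": 3}.get)
cache.get("user:1:clicks")   # loaded once, then served from the cache
cache.get("user:404:clicks") # the miss is cached for negative_ttl seconds
```

Because the refresh decision is randomized per request, concurrent readers of a hot key are unlikely to all reload it at the same instant, which is the stampede the paragraph above refers to.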

Stream Ingestion Pipeline of Karrot’s Feature Platform (Source: AWS Architecture Blog)

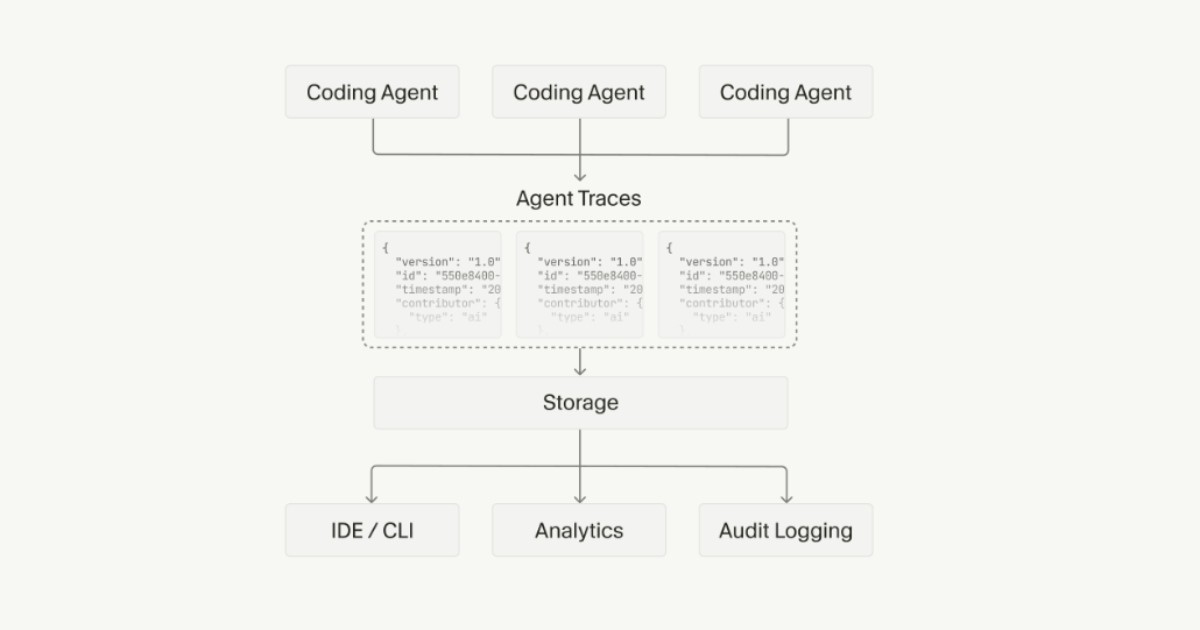

As part of the feature platform overhaul, Karrot created a new data ingestion architecture covering both real-time (stream) and batch ingestion. For its primary stream-processing mechanism, the company focused on simple ETL logic and validation, leaving custom solutions to handle more complex use cases, such as creating content embeddings from pre-trained models or using LLMs to enrich content features. Engineers used a combination of event dispatcher and aggregator services running on EKS, sourcing events from Amazon MSK, to efficiently handle M:N relationships between events and features.
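The M:N relationship between events and features can be pictured with a small routing table: one event type may update several features, and one feature may be fed by several event types. The sketch below is an assumption-laden illustration rather than Karrot's design. The class names, the event types (`article_click`, `article_chat`), the feature names, and the event fields are hypothetical, and a plain list of dictionaries stands in for events consumed from Amazon MSK.

```python
from collections import defaultdict


class FeatureAggregator:
    """Keeps the running value of each (entity id, feature name) pair."""

    def __init__(self):
        self.values = {}

    def apply(self, entity_id, feature, update, event):
        key = (entity_id, feature)
        self.values[key] = update(self.values.get(key), event)


class EventDispatcher:
    """Routes each event to every feature registered for its type."""

    def __init__(self, aggregator):
        self.aggregator = aggregator
        self.routes = defaultdict(list)  # event type -> [(feature, entity field, update)]

    def register(self, event_type, feature, entity_field, update):
        self.routes[event_type].append((feature, entity_field, update))

    def dispatch(self, event):
        """Apply the event to all matching features; return how many were updated."""
        applied = 0
        for feature, entity_field, update in self.routes.get(event.get("type"), []):
            entity_id = event.get(entity_field)
            if entity_id is None:
                continue  # minimal validation: skip routes whose entity id is missing
            self.aggregator.apply(entity_id, feature, update, event)
            applied += 1
        return applied


def count(current, event):
    return (current or 0) + 1


def recent_articles(current, event):
    # Keep only the three most recent article ids.
    return ((current or []) + [event["article_id"]])[-3:]


aggregator = FeatureAggregator()
dispatcher = EventDispatcher(aggregator)
# One event type feeds two features; one feature is fed by two event types.
dispatcher.register("article_click", "recent_articles", "user_id", recent_articles)
dispatcher.register("article_click", "engagement_count", "article_id", count)
dispatcher.register("article_chat", "engagement_count", "article_id", count)

for event in [
    {"type": "article_click", "user_id": "u1", "article_id": "a9"},
    {"type": "article_chat", "user_id": "u2", "article_id": "a9"},
]:
    dispatcher.dispatch(event)

print(aggregator.values)
```

Keeping the routing table separate from the update functions means a new feature can be added by registering another route, without touching the code that consumes events.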

Batch Ingestion Pipeline of Karrot’s Feature Platform (Source: AWS Architecture Blog)

The team considered using Apache Airflow but opted for AWS Batch on AWS Fargate for batch ingestion, primarily for its simplicity and cost-effectiveness. Over time, however, engineers identified several areas for improvement, including the lack of DAG support, manual configuration for parallel processing, and limited monitoring.

Karrot benefited from the new platform, increasing click-through rates by 30% and conversion rates by 70% for article recommendations. The company uses the new platform across more than 10 different spaces and services, and stores over 1,000 features spanning many services and content types.