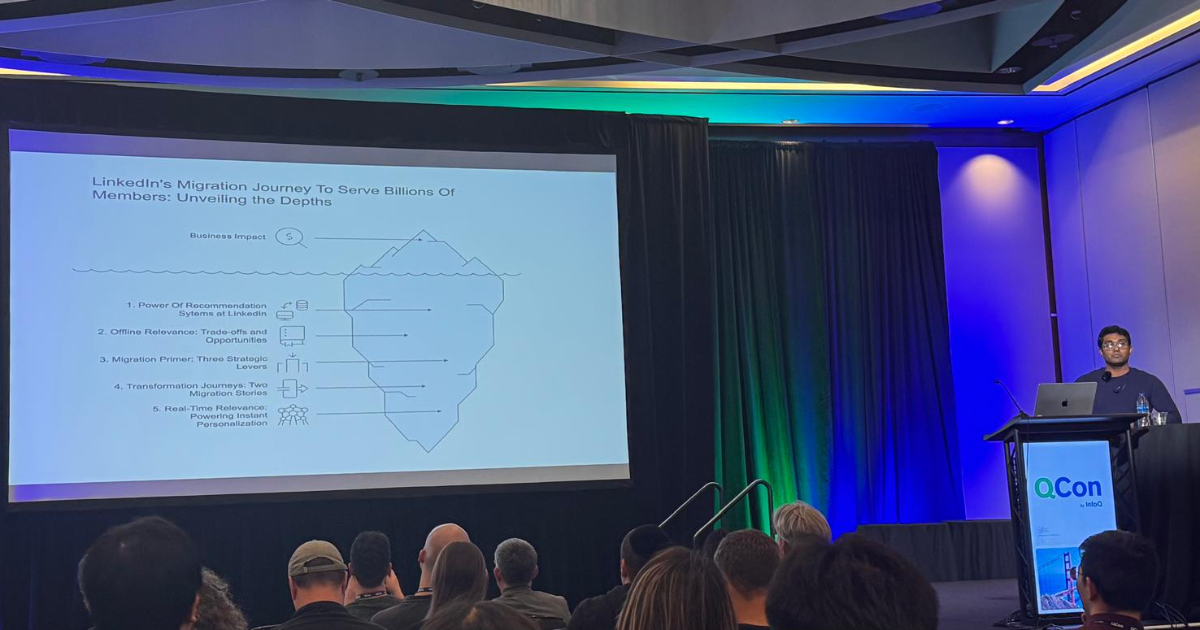

At QCon San Francisco 2025, Engineering Manager Nishant Lakshmikanth provided a deep dive into how LinkedIn systematically dismantled its legacy, batch-centric recommendation infrastructure to achieve real-time relevance while dramatically improving operational efficiency.

The legacy architecture, responsible for critical products like “People You May Know” and “People You Follow,” suffered from three major constraints: low freshness, high latency, and high compute cost.

In the prior system, recommendations were precomputed for the entire user base, regardless of whether a given user ever logged in. This wasted significant compute and produced static, stale results. A pipeline incident could delay updates for days, directly impacting core business metrics. The goal was to achieve instant personalization while drastically reducing the associated costs.
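The compute waste described above can be illustrated with a toy sketch. Everything here is hypothetical: the member IDs, the `make_counting_scorer` helper, and the sizes are invented for illustration and are not figures from the talk. The sketch only counts how many model calls each approach makes.

```python
from typing import Callable, Dict, Iterable, List, Tuple


def make_counting_scorer() -> Tuple[Callable[[str], List[str]], Dict[str, int]]:
    """Return a stand-in for an expensive model call plus a call counter."""
    calls = {"n": 0}

    def score(member_id: str) -> List[str]:
        calls["n"] += 1
        return [f"rec-for-{member_id}"]

    return score, calls


def precompute_all(members: Iterable[str], score: Callable[[str], List[str]]) -> Dict[str, List[str]]:
    """Batch style: score every member up front, whether or not they log in."""
    return {m: score(m) for m in members}


def score_on_demand(active: Iterable[str], score: Callable[[str], List[str]]) -> Dict[str, List[str]]:
    """Online style: score only members who actually start a session."""
    return {m: score(m) for m in active}


members = [f"m{i}" for i in range(10)]  # toy member base (hypothetical)
active = ["m1", "m4", "m7"]             # toy set of members who log in

batch_score, batch_calls = make_counting_scorer()
precompute_all(members, batch_score)

online_score, online_calls = make_counting_scorer()
score_on_demand(active, online_score)

# Calls spent on members who never showed up.
wasted = batch_calls["n"] - online_calls["n"]
print(batch_calls["n"], online_calls["n"], wasted)
```

In this toy setup, the batch path scores all ten members while the on-demand path scores only the three who log in. The trade-off is that on-demand scoring moves the model call into the request path, which is why latency becomes the central concern in the later phases.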

LinkedIn approached this monumental migration not as a single cutover, but as an iterative process defined by four architectural phases:

- Offline Scoring: The starting point, characterized by heavy batch computation, high latency, and massive precomputed storage.

- Nearline Scoring: An intermediate step, providing hourly or daily freshness rather than a multi-day lag.

- Online Scoring: The critical pivot, where model inference runs on demand in real time, reacting to the user’s current session and intent.

- Remote Scoring: The final destination, moving heavy model scoring to a high-performance cloud environment, often leveraging GPU-accelerated inference.

This framework enabled two parallel migrations: Offline-to-Online Scoring and Nearline-to-Online Freshness, shifting the focus from precomputation to dynamic execution.

The migration's success hinged on a fundamental architectural decoupling: separating the Candidate Generation (CG) pipeline from the Online Scoring Service.

- Dynamic Candidate Generation: CG moved away from static lists. It now uses real-time search index queries, Embedding-Based Retrieval (EBR) for new user and content discovery (solving the cold-start problem), and immediate user context to retrieve a relevant set of candidates on the fly.

- Intelligent Scoring: The Online Scoring Service uses a context-rich feature store, enabling modern models like Graph Neural Networks (GNNs) and Transformer-based models for precise ranking. Crucially, the team implemented Bidirectional Modeling, which scores connections from both the sender’s and recipient’s perspectives, yielding superior results.
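The decoupling of candidate generation from scoring can be sketched as two independent functions joined by a thin orchestration step. This is a minimal illustration under stated assumptions, not LinkedIn's implementation: brute-force cosine similarity stands in for an embedding-based retrieval index, the product of two probabilities is an assumed way to combine the sender and recipient perspectives, and all names (`generate_candidates`, `bidirectional_score`, `recommend`, the toy members and probabilities) are hypothetical.

```python
import math
from typing import Callable, Dict, List, Tuple

Vector = List[float]
PairModel = Callable[[str, str], float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def generate_candidates(viewer: str, embeddings: Dict[str, Vector], k: int) -> List[str]:
    """Candidate generation: top-k members nearest to the viewer's embedding."""
    query = embeddings[viewer]
    others = [m for m in embeddings if m != viewer]
    others.sort(key=lambda m: cosine(query, embeddings[m]), reverse=True)
    return others[:k]


def bidirectional_score(sender: str, recipient: str,
                        p_send: PairModel, p_accept: PairModel) -> float:
    """Score a connection from both sides (product is an assumed combination rule)."""
    return p_send(sender, recipient) * p_accept(recipient, sender)


def recommend(viewer: str, embeddings: Dict[str, Vector],
              p_send: PairModel, p_accept: PairModel, k: int = 3) -> List[Tuple[str, float]]:
    """Orchestration: retrieve candidates first, then rank them with the scorer."""
    candidates = generate_candidates(viewer, embeddings, k)
    scored = [(c, bidirectional_score(viewer, c, p_send, p_accept)) for c in candidates]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy data (hypothetical): 2-d embeddings and hand-picked probabilities.
embeddings = {"ana": [1.0, 0.0], "bo": [0.9, 0.1], "cy": [0.8, 0.6], "di": [0.0, 1.0]}
send_prob = {("ana", "bo"): 0.9, ("ana", "cy"): 0.6}
accept_prob = {("bo", "ana"): 0.2, ("cy", "ana"): 0.8}


def p_send(sender: str, recipient: str) -> float:
    """Toy estimate that the sender would invite the recipient."""
    return send_prob.get((sender, recipient), 0.0)


def p_accept(recipient: str, sender: str) -> float:
    """Toy estimate that the recipient would accept the sender's invite."""
    return accept_prob.get((recipient, sender), 0.0)


ranked = recommend("ana", embeddings, p_send, p_accept, k=2)
print(ranked)
```

In this toy data, retrieval alone places `bo` ahead of `cy`, and a sender-only score would do the same. Once the recipient's likelihood of accepting is factored in, `cy` ranks first. Because the two stages share only a list of candidate IDs, either side can be swapped out (a different retrieval index, a different ranking model) without touching the other.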

Regarding LLMs, Lakshmikanth clarified the cost-performance trade-off: due to high computational overhead, LLMs are primarily reserved for Candidate Generation and Post-Ranking flows, where they add value without crippling the latency-sensitive, real-time core ranking loop.

The move to real-time architecture has delivered quantifiable results and future-proofed the system:

- Cost Reduction: The cleanup of batch dependencies reduced offline compute and storage costs by over 90% and cut overall compute costs by up to 68% in some core flows.

- Session-Level Freshness: The system now reacts instantly to user clicks, searches, and profile views within a session, leading to significant increases in member engagement and connection rates.

- Platform Flexibility: The compartmentalized design simplifies maintenance and enables faster model experimentation, allowing rapid deployment of cutting-edge models and seamless rollbacks.

These architectural principles are now being applied to other critical surfaces, including LinkedIn Jobs (to better interpret career intent) and Video (where content freshness is paramount).