The era of passive AI chatbots is ending. We are now entering the age of Agentic AI: systems that actively reason, plan, and execute tasks.

For organizations, this represents a potential leap in productivity, but it also introduces new engineering challenges. Moving from a simple prompt to a reliable agent ecosystem requires a new, robust architecture.

In this article, we’ll explore the anatomy of AI agents, how the Model Context Protocol (MCP) has finally solved the integration bottleneck, and how you can architect safe, scalable systems where humans and agents collaborate effectively.

What is an AI agent?

Some AI agents are simply a system prompt and a collection of tools sent to a model that does all the thinking. More powerful AI agents, however, use the LLM only to recommend and propose actions. The agent then runs its own code to perform functions such as:

• Control execution: state machines, task graphs, retries, timeouts

• Enforce policy: auth, scopes, RBAC, allow/deny rules

• Validate actions: schema checks, safety filters, sandboxing

• Manage memory/state: databases, vector stores, session state

• Coordinate agents: message passing, role separation, voting

• Handle failure: rollbacks, circuit breakers, human-in-the-loop
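As a minimal sketch of the "enforce policy" and "validate actions" items above, the agent's own code can check an LLM-proposed action before running it. The names here (`ToolSpec`, `execute_proposal`, the `math:read` scope, and the proposal shape) are hypothetical and chosen only for illustration. They do not come from any particular framework.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class ToolSpec:
    """Hypothetical registry entry for one tool."""
    handler: Callable[..., Any]
    required_args: dict[str, type]  # argument name -> expected type
    scope: str                      # permission needed to call the tool


class PolicyError(Exception):
    """Raised when a proposed action fails policy or validation checks."""


def execute_proposal(proposal: dict, registry: dict[str, ToolSpec],
                     granted_scopes: set[str]) -> Any:
    """Run an LLM-proposed action only if it passes every check."""
    name = proposal.get("tool")
    args = proposal.get("args", {})

    # Allow-list check: the model may only call registered tools.
    spec = registry.get(name)
    if spec is None:
        raise PolicyError(f"unknown tool: {name!r}")

    # Policy check: the agent must hold the scope the tool requires.
    if spec.scope not in granted_scopes:
        raise PolicyError(f"missing scope {spec.scope!r} for tool {name!r}")

    # Schema check: exact argument names and expected types.
    if set(args) != set(spec.required_args):
        raise PolicyError(f"unexpected arguments for {name!r}: {sorted(args)}")
    for key, expected in spec.required_args.items():
        if not isinstance(args[key], expected):
            raise PolicyError(f"argument {key!r} must be {expected.__name__}")

    return spec.handler(**args)


registry = {"add": ToolSpec(lambda a, b: a + b, {"a": int, "b": int}, "math:read")}
proposal = {"tool": "add", "args": {"a": 2, "b": 3}}
print(execute_proposal(proposal, registry, {"math:read"}))
```

The point of the design is that the model never executes anything directly: it only emits a proposal, and deterministic code decides whether that proposal runs.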

While a standard LLM (large language model) is passive and waits for your input to generate a response, an AI agent is active. It uses reasoning to break down a goal into steps, decides which tools to use, and executes actions to achieve an outcome.

Agentic AI systems initiate a session by sending an LLM a system prompt, which may include the definition of multiple agents and their tools. Some of these tools may allow agents to invoke other agents, manage the context itself, and even select the model for the next step.

| | LLM Chatbot | AI Agent |
|---|---|---|
| Flow | User Input -> Model -> Output | User Goal -> LLM -> Reasoning/Planning -> Tool Use -> Action -> Verification -> Output |
| Summary | Gives you advice, but you have to do the work. | You give the agent a goal and access to software, and it comes back when the job is done. |
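The agent flow in the table can be sketched as a small loop. This is only an illustration under stated assumptions: `plan` stands in for the LLM call, and the `("call", ...)` / `("finish", ...)` decision format, `scripted_plan`, and the `word_count` tool are all hypothetical.

```python
from typing import Any, Callable


def run_agent(goal: str,
              plan: Callable[[str, list], tuple],
              tools: dict[str, Callable[[Any], Any]],
              verify: Callable[[str, Any], bool],
              max_steps: int = 5) -> Any:
    """Goal -> planning -> tool use -> verification -> output.

    plan(goal, history) returns ("call", tool_name, arg) or ("finish", answer).
    """
    history: list = []
    for _ in range(max_steps):
        decision = plan(goal, history)
        if decision[0] == "finish":
            answer = decision[1]
            if verify(goal, answer):
                return answer
            # Record the rejection so the planner can try something else.
            history.append(("rejected", answer))
            continue
        _, tool_name, arg = decision
        result = tools[tool_name](arg)
        history.append((tool_name, result))
    raise RuntimeError("step budget exhausted before a verified answer")


def scripted_plan(goal: str, history: list) -> tuple:
    """Stand-in for an LLM: call one tool, then finish with its result."""
    if not history:
        return ("call", "word_count", goal)
    return ("finish", f"The goal has {history[-1][1]} words")


tools = {"word_count": lambda text: len(text.split())}
answer = run_agent("summarize quarterly report", scripted_plan, tools,
                   verify=lambda goal, ans: ans.startswith("The goal"))
print(answer)  # The goal has 3 words
```

The `max_steps` cap is what keeps a confused planner from looping forever. A real system would replace `scripted_plan` with a model call.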

Mastering Agentic AI systems means finding the right mix of agents, tools, and prompts that allows the LLM to accomplish your goals while still providing adequate guardrails and verification.

To this end, managing the tools and other resources available to the AI agents is a major focus. This is where the Model Context Protocol (MCP) comes in.

The Model Context Protocol

MCP is an open standard introduced by Anthropic in November 2024. It standardizes how AI systems connect to external data and services.

The idea behind MCP is that all LLM API providers allow the LLM to invoke tools. Developers can benefit from a structured way to define those tools and make them available to the LLM in a uniform and consistent way.
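To make "a structured way to define those tools" concrete, here is a sketch of a tool descriptor. MCP describes each tool with a `name`, a `description`, and an `inputSchema` expressed as JSON Schema. The `get_weather` tool and the `missing_arguments` helper are hypothetical, and the helper checks only required fields rather than performing full JSON Schema validation.

```python
import json

# Hypothetical tool descriptor in the shape MCP uses for tool definitions.
GET_WEATHER_TOOL = {
    "name": "get_weather",
    "description": "Return the current weather for a city.",
    "inputSchema": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}


def missing_arguments(tool: dict, arguments: dict) -> list[str]:
    """Return the required argument names that the caller left out."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]


print(json.dumps(GET_WEATHER_TOOL, indent=2))
print(missing_arguments(GET_WEATHER_TOOL, {}))                 # ['city']
print(missing_arguments(GET_WEATHER_TOOL, {"city": "Paris"}))  # []
```

Because the descriptor is plain data, the same definition can be shown to the model and used by the host to validate calls.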

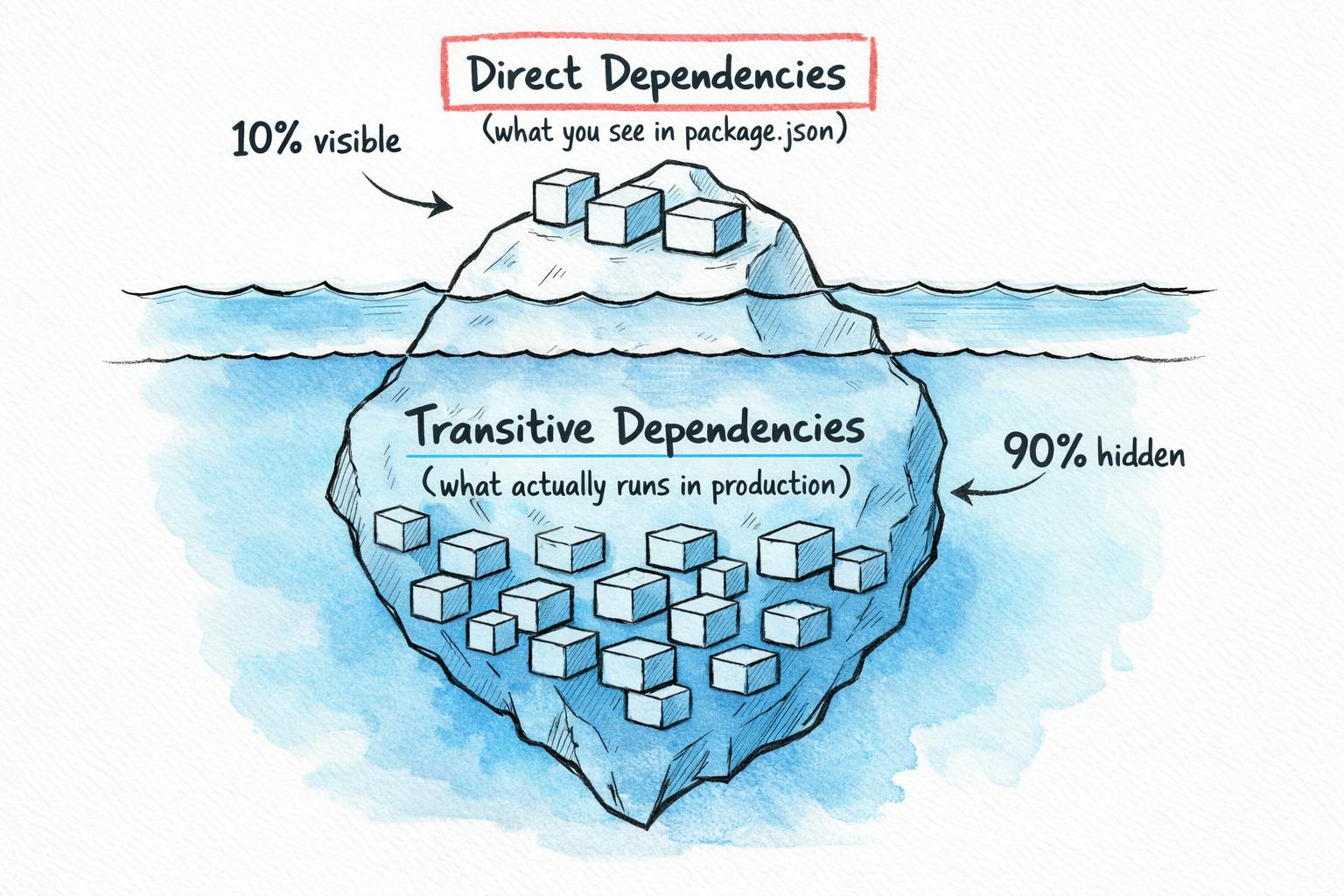

Prior to MCP, integrating third-party tools into Agentic AI systems added a lot of friction. By providing a universal interface for reading files, executing functions, and handling contextual prompts, MCP enables AI models to access the data they need securely and consistently, regardless of where that information lives.

Since its release, the protocol has been adopted by major AI providers including OpenAI and Google, cementing its role as the industry standard for AI system integration.

MCP operates through a straightforward client-server architecture with four key components:

- The host application such as Claude Desktop, modern IDEs, or your AI system.

- The MCP client which establishes one-to-one connections from the host to a server, often a built-in capability of AI frameworks.

- The MCP server which exposes tools, resources, and prompts.

- The transport layer which manages communication between clients and servers.
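The client-server exchange can be illustrated with a toy, in-process dispatcher. MCP messages are JSON-RPC 2.0, and `tools/list` and `tools/call` are the methods a client uses to discover and invoke tools. Everything else here is an assumption made for the sketch: the `echo` tool and `handle_request` are hypothetical, the initialization handshake and transport are omitted, and a real server would normally be built with an MCP SDK rather than by hand.

```python
import json

# Hypothetical tool table: metadata plus a handler the server runs.
TOOLS = {
    "echo": {
        "description": "Return the text it was given.",
        "inputSchema": {
            "type": "object",
            "properties": {"text": {"type": "string"}},
            "required": ["text"],
        },
        "handler": lambda arguments: arguments["text"],
    }
}


def handle_request(raw: str) -> str:
    """Dispatch one JSON-RPC 2.0 request and return the JSON response."""
    request = json.loads(raw)
    method = request.get("method")

    if method == "tools/list":
        # Advertise tool metadata only; handlers stay on the server.
        result = {"tools": [
            {"name": name, "description": tool["description"],
             "inputSchema": tool["inputSchema"]}
            for name, tool in TOOLS.items()
        ]}
    elif method == "tools/call":
        params = request["params"]
        text = TOOLS[params["name"]]["handler"](params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}]}
    else:
        # -32601 is the JSON-RPC 2.0 "Method not found" error code.
        return json.dumps({"jsonrpc": "2.0", "id": request.get("id"),
                           "error": {"code": -32601, "message": "Method not found"}})

    return json.dumps({"jsonrpc": "2.0", "id": request.get("id"), "result": result})


call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
        "params": {"name": "echo", "arguments": {"text": "hello"}}}
print(handle_request(json.dumps(call)))
```

In a real deployment the transport layer would carry these strings between separate processes or hosts. Here the function call stands in for it.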

MCP also opened the door to an ecosystem where third-party platforms expose their capabilities to AI agents by publishing their own official MCP servers. Large enterprises such as Microsoft, AWS, Atlassian, and Sumo Logic have all published MCP servers.

MCP solves an important problem, but it is just one among many for agents. Let’s look next at how to design safe Agentic AI systems.

Designing Safe Agentic AI Systems

Agentic AI can go catastrophically wrong. There are multiple risks such as:

- Prompt injection that hijacks workflows to exfiltrate data or execute ransomware.

- Privilege escalation via tool chaining that drains accounts or deletes production systems.

- Infinite loops that burn millions in API costs.

- Hallucinated actions that trigger irreversible trades or compliance violations.

- **Token Torching** where malicious actors hijack token spend through MCP.
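Two of these risks, runaway loops and uncontrolled token spend, can be bounded with a hard per-run budget. The sketch below is one possible approach, not a standard mechanism: `RunBudget`, `BudgetExceeded`, and the limits shown are hypothetical, and token counts are assumed to be reported by whatever model API you use.

```python
class BudgetExceeded(Exception):
    """Raised when an agent run goes over its step or token limit."""


class RunBudget:
    """Tracks steps and tokens for one agent run and stops it at a hard cap."""

    def __init__(self, max_steps: int, max_tokens: int) -> None:
        self.max_steps = max_steps
        self.max_tokens = max_tokens
        self.steps = 0
        self.tokens = 0

    def charge(self, tokens: int) -> None:
        """Record one model or tool call; raise once either limit is passed."""
        self.steps += 1
        self.tokens += tokens
        if self.steps > self.max_steps:
            raise BudgetExceeded(f"step limit {self.max_steps} exceeded")
        if self.tokens > self.max_tokens:
            raise BudgetExceeded(f"token limit {self.max_tokens} exceeded")


budget = RunBudget(max_steps=10, max_tokens=1000)
try:
    while True:             # simulates an agent stuck in a loop
        budget.charge(400)  # assumed token usage per call
except BudgetExceeded as exc:
    print(f"run stopped: {exc}")
```

Calling `charge` before every model or tool invocation turns an open-ended loop into a bounded, auditable cost.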

Agents are often entrusted with access to APIs, browsers, and infra systems. Without safeguards, this greatly amplifies your risks. Safe Agentic AI requires a “defense-in-depth” approach built on multiple overlapping layers.

- Input validation, output auditing, and human-in-the-loop escalation form the verification backbone.

- Decisions are never fully autonomous when the blast radius or financial impact is high.

- Sandboxing and explicit permission boundaries prevent unauthorized access.

- Each agent should receive a distinct identity with least-privilege credentials and scoped tokens, rather than inheriting user permissions.

- Fault tolerance through retry logic, fallback models, and anomaly detection ensures that systems degrade gracefully under failure.

- Deep observability implemented via standardized telemetry, structured logging, metrics collection, and real-time monitoring dashboards enables rapid detection and response.
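As a rough sketch of the human-in-the-loop layer, high-impact actions can be routed through an approval gate while low-impact ones run automatically. The `Action` fields, the 100 USD threshold, and the `ask_human` callback are illustrative assumptions. A production system would plug in its own risk model and approval channel.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Action:
    """Hypothetical description of something an agent wants to do."""
    name: str
    estimated_cost_usd: float
    reversible: bool


def needs_human_approval(action: Action, cost_limit_usd: float = 100.0) -> bool:
    """Escalate anything irreversible or above the cost limit."""
    return (not action.reversible) or action.estimated_cost_usd > cost_limit_usd


def run_with_gate(action: Action,
                  execute: Callable[[Action], None],
                  ask_human: Callable[[Action], bool],
                  audit_log: list[str]) -> bool:
    """Execute the action unless a required human approval is refused."""
    if needs_human_approval(action) and not ask_human(action):
        audit_log.append(f"denied:{action.name}")
        return False
    execute(action)
    audit_log.append(f"executed:{action.name}")
    return True


log: list[str] = []
executed: list[str] = []
run_with_gate(Action("send_report", 0.5, True),
              lambda a: executed.append(a.name), lambda a: False, log)
run_with_gate(Action("delete_database", 0.0, False),
              lambda a: executed.append(a.name), lambda a: False, log)
print(log)  # ['executed:send_report', 'denied:delete_database']
```

Every decision lands in the audit log, which also feeds the output-auditing and observability layers described above.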

Engineering effective multi-agent systems requires deliberate architecture design that incorporates one or more coordination patterns:

- Centralized orchestration where a supervisor agent coordinates specialized workers and maintains global state.

- Decentralized peer-to-peer communication enabling flexible agent-to-agent interaction.

- Hierarchical delegation that organizes agents into levels of abstraction.
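The first of these patterns, centralized orchestration, can be sketched in a few lines. The `Supervisor` class, the role names, and the lambda workers are hypothetical stand-ins. In practice each worker would wrap its own model, prompt, and tools.

```python
from typing import Callable

Worker = Callable[[str], str]


class Supervisor:
    """Routes tasks to specialized workers and keeps the shared state."""

    def __init__(self, workers: dict[str, Worker]) -> None:
        self.workers = workers
        self.state: dict[str, str] = {}  # global state owned by the supervisor

    def run(self, tasks: list[tuple[str, str]]) -> dict[str, str]:
        """Process (role, task) pairs in order and collect each result."""
        for role, task in tasks:
            worker = self.workers.get(role)
            if worker is None:
                raise KeyError(f"no worker registered for role {role!r}")
            self.state[task] = worker(task)
        return self.state


supervisor = Supervisor({
    "research": lambda task: f"notes on {task}",
    "write": lambda task: f"draft of {task}",
})
results = supervisor.run([("research", "MCP"), ("write", "summary")])
print(results)  # {'MCP': 'notes on MCP', 'summary': 'draft of summary'}
```

Workers never talk to each other here. All coordination and state go through the supervisor, which is what distinguishes this pattern from peer-to-peer communication.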

Development environments like Sumo Logic’s Dojo AI (an Agentic AI platform for security operations centers) can help significantly, providing essential infrastructure for safely iterating on agentic systems before production deployment. Dojo AI is carefully curated according to its design principles and safeguards. Customers can use Dojo AI as is, and they can also build their own Agentic AI environment (similar to Dojo AI) for their own AI-based core competencies. The Sumo Logic MCP server lets your own AI agents run data queries and call Dojo AI agents when needed.

Next, let’s see some of the different ways people interact with Agentic AI systems.

How People Collaborate with AI Agents

Traditional systems follow a well-defined workflow and pre-programmed algorithms. User input and outputs are fully structured. Even in dynamic systems, user input can deterministically control the flow.

Agentic AI systems, however, are different. The LLM controls the flow (within its guardrails). Users provide initial intent and motivation, and later operate as approvers and a gating function. In particular, the free text conversation is novel.

So how do we best collaborate with these agents?

One of the most common ways to interact with AI agents is through chatbots, where you can exchange text, images, and files with LLMs and their agents. Voice conversations are also becoming more popular.

Of course, generic chatbots like ChatGPT, Gemini, and Claude Desktop are not aware of your agents out of the box. However, agents can be introduced as MCP tools.

Another interesting option is to build a Slack application allowing agents to join channels, interact with users, automatically monitor the channels, and automatically respond to events.

This is a rich environment as it allows humans and agents to collaborate smoothly. The Slack user experience already supports group channels and threads, so agents can add details such as their chain of thought or citations without cluttering the screen. Multiple human users can engage with each other and AI agents all in the same channel.

If you need an even more specialized user experience, you could build a custom web, desktop, or mobile application for your agents.

You could even create a chatbot like Mobot, a Slack application integration, or a custom suite of agents like Dojo AI.

The Future of Agents

Perhaps the most important thing to understand about AI agents is that they are coming faster than you think. In the past, major technological revolutions like the personal computer, the internet, and mobile phones took decades to become ubiquitous and the pace of innovation was manageable. AI is different.

Many experts predict that in just the next few years, AI agents will be able to perform any knowledge work better than the best humans. They will unlock scientific discoveries and provide unprecedented productivity gains. Manual labor is not far behind, with humanoid robots making impressive strides powered by AI agents.

LLMs can already perform many tasks as well as humans, though they lack the ability to plan and operate over long time horizons, deal with complexity, and maintain coherence. But with a carefully constructed web of agents and a curated set of tools that collaborate over multiple iterations to accomplish long horizon tasks, these constraints are being removed. There is much innovation in this domain beyond just MCP such as agent orchestration, toolkits, and verification layers.

Industry Standardization

We’re starting now to see the standardization of techniques, tools, and formats for AI agents. For example, the Agentic AI Foundation (AAIF) is a new initiative under the Linux Foundation to ensure Agentic AI evolves in an open and collaborative manner. Its members include Anthropic, OpenAI, Amazon, Google, Microsoft and Block. It hosts several prominent agent technologies, including MCP, goose, and AGENTS.md.

There are other prominent open efforts as well, including Google’s Agent2Agent (A2A) protocol and Agent Skills (also originating from Anthropic).

Dynamic User Experiences

The future of the user experience is all about generative UI. The LLM and agents will generate an appropriate UI on the fly depending on the query, user, conversation history, and more.

For example, if you ask about the stock market, rather than provide a generic overview of today’s business news, the AI system may decide to show a historical timeline and a pie chart with your current positions as well as links to relevant educational material. Everything will be tailored per user.

The Shift to AI Agents

The agentic shift is here. We’re moving from passive text generation to active, autonomous work. As we’ve seen, this shift requires more than just new models. It calls for a careful architecture.

To succeed, organizations should focus on:

- Leveraging the Model Context Protocol (MCP).

- Moving beyond simple prompts to a “defense-in-depth” strategy.

- Designing interfaces, such as Slack apps and custom UIs, where humans provide the intent and agents handle the execution.

AI agents may soon outperform top human knowledge workers, unlock major scientific and productivity gains, and eventually expand into physical work through robotics. Understanding their basics is the first step to harnessing their power for your organization.
